TensorRT debugging issues roundup

Contents

1. Dynamic dimensions required for input: input, but no shapes were provided. Automatically overriding

2. sampleMNIST.obj : error LNK2019: unresolved external symbol cudaStreamCreate

3. Assertion failed: (smVersion < SM_VERSION_A100) && "SM version not supported in this NVRTC version"

1. Dynamic dimensions required for input: input, but no shapes were provided. Automatically overriding

Problem: dynamic dimensions were set when converting the .pth model to ONNX, as shown below.

#
# SPDX-FileCopyrightText: Copyright (c) 1993-2022 NVIDIA CORPORATION & AFFILIATES. All rights reserved.
# SPDX-License-Identifier: Apache-2.0
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
# http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.
#

from PIL import Image
from io import BytesIO
import requests

output_image="input.ppm"

# Read sample image input and save it in ppm format
print("Exporting ppm image {}".format(output_image))
response = requests.get("https://pytorch.org/assets/images/deeplab1.png")
with Image.open(BytesIO(response.content)) as img:
    ppm = Image.new("RGB", img.size, (255, 255, 255))
    ppm.paste(img, mask=img.split()[3])
    ppm.save(output_image)

import torch
import torch.nn as nn

output_onnx="fcn-resnet101.onnx"

# FC-ResNet101 pretrained model from torch-hub extended with argmax layer
class FCN_ResNet101(nn.Module):
    def __init__(self):
        super(FCN_ResNet101, self).__init__()
        self.model = torch.hub.load('pytorch/vision:v0.6.0', 'fcn_resnet101', pretrained=True)

    def forward(self, inputs):
        x = self.model(inputs)['out']
        x = x.argmax(1, keepdims=True)
        return x

model = FCN_ResNet101()
model.eval()

# Generate input tensor with random values
input_tensor = torch.rand(4, 3, 224, 224)

# Export torch model to ONNX
print("Exporting ONNX model {}".format(output_onnx))
torch.onnx.export(model, input_tensor, output_onnx,
    opset_version=12,
    do_constant_folding=True,
    input_names=["input"],
    output_names=["output"],
    dynamic_axes={"input": {0: "batch", 2: "height", 3: "width"},
                  "output": {0: "batch", 2: "height", 3: "width"}},
    verbose=False)

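However, when converting the ONNX model to a TensorRT engine, the inference shapes must be specified explicitly; otherwise the following warning is reported: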

[W] Dynamic dimensions required for input: input, but no shapes were provided. Automatically overriding shape to: 1x3x1x1

The input shape was overridden to 1x3x1x1, which is clearly wrong.

Solution:

Specify the shapes explicitly:

trtexec.exe --onnx=E:\code\python\TensorRT-main\quickstart\SemanticSegmentation\fcn-resnet101.onnx --minShapes=input:1x3x1026x1282 --optShapes=input:1x3x1026x1282 --maxShapes=input:4x3x1026x1282 --workspace=4096 --saveEngine=E:\code\python\TensorRT-main\quickstart\SemanticSegmentation\fcn-resnet101.engine
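The shape arguments in the command above follow a fixed pattern, so they can be generated rather than typed by hand. The following is a minimal sketch, not part of the original workflow: the helper names `format_shape` and `build_trtexec_args` and the short file names are hypothetical, and only the flags that appear in the command above are used. It also checks that each dimension satisfies min <= opt <= max, which a dynamic shape range needs in order to be consistent.

```python
def format_shape(name, dims):
    """Return a shape spec such as 'input:1x3x1026x1282'."""
    return "{}:{}".format(name, "x".join(str(d) for d in dims))


def build_trtexec_args(onnx_path, engine_path, name, min_dims, opt_dims, max_dims):
    """Build the trtexec argument list for one dynamic input (hypothetical helper)."""
    if not (len(min_dims) == len(opt_dims) == len(max_dims)):
        raise ValueError("min, opt and max shapes must have the same rank")
    # Every axis must satisfy min <= opt <= max.
    for axis, (lo, mid, hi) in enumerate(zip(min_dims, opt_dims, max_dims)):
        if not lo <= mid <= hi:
            raise ValueError("axis {}: need min <= opt <= max".format(axis))
    return [
        "trtexec",
        "--onnx={}".format(onnx_path),
        "--minShapes=" + format_shape(name, min_dims),
        "--optShapes=" + format_shape(name, opt_dims),
        "--maxShapes=" + format_shape(name, max_dims),
        "--workspace=4096",
        "--saveEngine={}".format(engine_path),
    ]


# Same shapes as the command above; the file names are shortened placeholders.
args = build_trtexec_args(
    "fcn-resnet101.onnx", "fcn-resnet101.engine", "input",
    (1, 3, 1026, 1282), (1, 3, 1026, 1282), (4, 3, 1026, 1282),
)
print(" ".join(args))
```

The resulting list can be passed to `subprocess.run(args)` on a machine where trtexec is on the PATH.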

2. sampleMNIST.obj : error LNK2019: unresolved external symbol cudaStreamCreate

Problem description

Build started...

1>------ Build started: Project: tensorRTTest, Configuration: Debug x64 ------

1>sampleMNIST.obj : error LNK2019: unresolved external symbol cudaStreamCreate referenced in function "void __cdecl doInference(class nvinfer1::IExecutionContext &,float *,float *,int)" (?doInference@@YAXAEAVIExecutionContext@nvinfer1@@PEAM1H@Z)

1>sampleMNIST.obj : error LNK2019: unresolved external symbol cudaStreamDestroy referenced in function "void __cdecl doInference(class nvinfer1::IExecutionContext &,float *,float *,int)" (?doInference@@YAXAEAVIExecutionContext@nvinfer1@@PEAM1H@Z)

1>sampleMNIST.obj : error LNK2019: unresolved external symbol cudaStreamSynchronize referenced in function "void __cdecl doInference(class nvinfer1::IExecutionContext &,float *,float *,int)" (?doInference@@YAXAEAVIExecutionContext@nvinfer1@@PEAM1H@Z)

1>sampleMNIST.obj : error LNK2019: unresolved external symbol cudaMalloc referenced in function "void __cdecl doInference(class nvinfer1::IExecutionContext &,float *,float *,int)" (?doInference@@YAXAEAVIExecutionContext@nvinfer1@@PEAM1H@Z)

1>sampleMNIST.obj : error LNK2019: unresolved external symbol cudaFree referenced in function "void __cdecl doInference(class nvinfer1::IExecutionContext &,float *,float *,int)" (?doInference@@YAXAEAVIExecutionContext@nvinfer1@@PEAM1H@Z)

1>sampleMNIST.obj : error LNK2019: unresolved external symbol cudaMemcpyAsync referenced in function "void __cdecl doInference(class nvinfer1::IExecutionContext &,float *,float *,int)" (?doInference@@YAXAEAVIExecutionContext@nvinfer1@@PEAM1H@Z)

1>D:\code\Cplusplus\tensorRTTest\x64\Debug\tensorRTTest.exe : fatal error LNK1120: 6 unresolved externals

1>Done building project "tensorRTTest.vcxproj" -- FAILED.

========== Build: 0 succeeded, 1 failed, 0 up-to-date, 0 skipped ==========

"Unresolved external symbol" errors like these usually mean that a .lib file is missing from the linker inputs. Here cudart.lib and cuda.lib were missing (the six symbols above are all CUDA runtime API functions, which cudart.lib provides). Adding them to the linker's additional dependencies in VS2019 solved the problem. The additional dependencies after the change:

myelin64_1.lib

nvinfer.lib

nvinfer_plugin.lib

nvonnxparser.lib

nvparsers.lib

cudart.lib

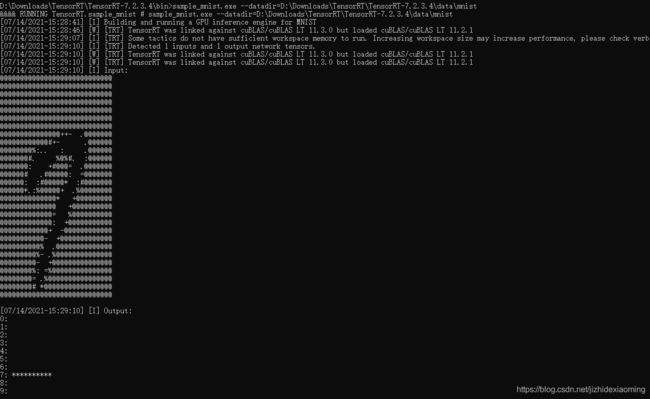

cuda.lib

3. Assertion failed: (smVersion < SM_VERSION_A100) && "SM version not supported in this NVRTC version"

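Problem description:

Initial environment:

Windows 10

TensorRT-7.0.0.11

CUDA 10.2

cuDNN 8.0.3

At first I assumed the TensorRT version was too old, so I switched from 7.0 to 7.2.3, but the same error was reported.

That is, this environment:

Windows 10

TensorRT-7.2.3

CUDA 10.2

cuDNN 8.0.3

did not work either.

Next I upgraded CUDA from 10.2 to 11.0, which produced a new error message: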

C:\source\rtSafe\cuda\cudaConvolutionRunner.cpp (483) - Cudnn Error in nvinfer1::rt::cuda::CudnnConvolutionRunner::executeConv

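Judging from the error message, this is a cuDNN problem.

Solution: the GPU is probably too new for these library versions, so upgrade everything:

TensorRT 7.2.3 (TensorRT-7.2.3.4.Windows10.x86_64.cuda-11.1.cudnn8.1.zip)

CUDA 11.1 (cuda_11.1.0_456.43_win10.exe)

cuDNN 8.1 (cudnn-11.2-windows-x64-v8.1.0.77.zip)

Problem solved.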
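The sequence of failures above fits the general rule that each GPU architecture needs a minimum CUDA toolkit release. The following is a minimal sketch of that check. The post does not name the GPU, so compute capability 8.6 (an Ampere GeForce RTX 30 series card) is an assumption made only for illustration, and the helper name `cuda_supports_sm` is hypothetical. The table lists the CUDA release that first added each architecture.

```python
# Minimum CUDA toolkit release that can target each compute capability.
MIN_CUDA_FOR_SM = {
    (7, 0): (9, 0),   # Volta
    (7, 5): (10, 0),  # Turing
    (8, 0): (11, 0),  # Ampere (A100)
    (8, 6): (11, 1),  # Ampere (GeForce RTX 30 series)
}


def cuda_supports_sm(cuda_version, sm):
    """Return True if the CUDA toolkit version can target compute capability sm."""
    required = MIN_CUDA_FOR_SM.get(sm)
    if required is None:
        raise KeyError("compute capability {} is not in the table".format(sm))
    # Versions are (major, minor) tuples, so tuple comparison orders them correctly.
    return cuda_version >= required


# Assumed GPU: compute capability 8.6 (not stated in the original post).
assumed_sm = (8, 6)
for cuda in [(10, 2), (11, 0), (11, 1)]:
    print("CUDA {}.{} supports sm_{}{}: {}".format(
        cuda[0], cuda[1], assumed_sm[0], assumed_sm[1],
        cuda_supports_sm(cuda, assumed_sm)))
```

Under that assumption, only the last of the three CUDA versions tried in this section passes the check, which matches the observed outcome. TensorRT and cuDNN builds must also match the chosen CUDA version, as the package file names above indicate.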