Implementing yolov3_darknet53 with MindSpore

Without further ado, let's start with a brief introduction to MindSpore.

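MindSpore is an all-scenario deep learning framework with three goals: easy development, efficient execution, and all-scenario coverage. Easy development means friendly APIs and low debugging difficulty. Efficient execution covers computation efficiency, data preprocessing efficiency, and distributed training efficiency. All-scenario means the framework supports cloud, edge, and device-side deployment at the same time.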

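The overall MindSpore architecture is shown in the figure below. Its four main parts are the extension layer (MindSpore Extend), the frontend expression layer (MindExpression, ME), the compilation and optimization layer (MindCompiler), and the all-scenario runtime (MindRT):

- MindSpore Extend (extension layer): MindSpore's extension packages. More developers are welcome to contribute and help build them.

- MindExpression (expression layer): a Python-based frontend. Frontends for C/C++, Java, and other languages are planned for the future. MindSpore is also considering a frontend for Cangjie, Huawei's self-developed programming language, which is still in the pre-research stage. Work is also under way to connect with third-party frontends such as Julia and bring in more of the third-party ecosystem.

- MindCompiler (compilation and optimization layer): the core graph-level compiler. Built on MindIR, which is unified across device and cloud, it provides three kinds of optimization: hardware-independent optimization (type inference, automatic differentiation, expression simplification, and so on), hardware-dependent optimization (automatic parallelism, memory optimization, graph-kernel fusion, pipelined execution, and so on), and optimization for deployment and inference (quantization, pruning, and so on). MindAKG is MindSpore's automatic operator generation compiler and is still being improved.

- MindRT (all-scenario runtime): covers the cloud side, the device side, and even smaller IoT devices.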

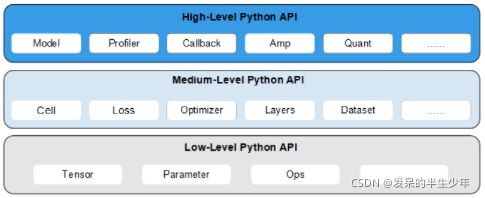

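MindSpore provides three levels of API that support network construction, whole-graph execution, subgraph execution, and single-operator execution. From low to high they are the Low-Level Python API, the Medium-Level Python API, and the High-Level Python API.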

For more information, see the official MindSpore website.

YOLOv3 architecture diagram:
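As a rough numerical companion to the architecture, the backbone stages can be tabulated from the channel widths and residual-block counts used by the `DarkNet53` class in this post. The sketch below is plain Python and does not need MindSpore; `STAGES` and `backbone_summary` are illustrative names introduced here, and the 416x416 input size is taken from the test script at the end of the post.

```python
# Backbone stages as (output_channels, residual_block_count), read off the
# DarkNet53 class in this post. Each stage starts with a stride-2 downsample.
STAGES = [(64, 1), (128, 2), (256, 8), (512, 8), (1024, 4)]


def backbone_summary(input_size=416):
    """Return (channels, spatial_size, conv_layers_so_far) after each stage."""
    size = input_size
    convs = 1  # the initial 3x3, stride-1 convolution (3 -> 32 channels)
    rows = []
    for channels, blocks in STAGES:
        size //= 2           # the stride-2 downsample convolution halves H and W
        convs += 1           # count the downsample convolution itself
        convs += 2 * blocks  # each residual block holds a 1x1 and a 3x3 convolution
        rows.append((channels, size, convs))
    return rows


for channels, size, convs in backbone_summary():
    print(f"{channels:>4} channels, {size:>3}x{size:<3} feature map, {convs} convs so far")
```

Under these assumptions the last three stages produce 52x52, 26x26, and 13x13 feature maps, which are the three scales the detection heads work on. The backbone ends with 52 convolutions; the classification version of Darknet-53 adds one fully connected layer, which is commonly given as the reason for the 53 in the name.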

The YOLOv3 network is implemented as follows:

# The Cell class is the base class for building all networks in MindSpore and is also the basic unit of a network.
# To define a neural network, subclass Cell and override the __init__ and construct methods.
import mindspore.nn as nn
from mindspore import dtype as mstype
from mindspore.ops import operations as P


class ConvolutionalLayer(nn.Cell):
    # Convolutional layer: Conv2d + BatchNorm2d + LeakyReLU(0.1)
    def __init__(self, in_c, out_c, kernal_size, stride, pad_mode, padding):
        super(ConvolutionalLayer, self).__init__()
        self.conv = nn.SequentialCell([
            nn.Conv2d(in_c, out_c, kernal_size, stride, pad_mode, padding),
            nn.BatchNorm2d(out_c),
            nn.LeakyReLU(0.1)
        ])

    def construct(self, x):
        return self.conv(x)


class ResidualLayer(nn.Cell):

    # Residual layer: a 1x1 bottleneck convolution followed by a 3x3 convolution, plus a skip connection
    def __init__(self, in_c):
        super(ResidualLayer, self).__init__()
        self.reseblock = nn.SequentialCell([
            ConvolutionalLayer(in_c, in_c // 2, kernal_size=1, stride=1, pad_mode='same', padding=0),
            ConvolutionalLayer(in_c // 2, in_c, kernal_size=3, stride=1, pad_mode='pad', padding=1)
        ])

    def construct(self, x):
        # Equivalent to: return x + self.reseblock(x)
        return P.Add()(x, self.reseblock(x))


class warpLayer(nn.Cell):

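    # Stack of `count` residual blocks
    def __init__(self, in_c, count):
        super(warpLayer, self).__init__()
        self.count = count
        self.in_channels = in_c
        # Build one ResidualLayer per repetition so that each block has its own weights.
        # Calling a single instance `count` times in a loop would share one set of
        # parameters across all repetitions.
        self.res = nn.SequentialCell([ResidualLayer(self.in_channels) for _ in range(self.count)])

    def construct(self, x):
        return self.res(x)


class DownSampleLayer(nn.Cell):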

    # Downsampling layer: a 3x3 convolution with stride 2 halves the spatial resolution
    def __init__(self, in_c, out_c):
        super(DownSampleLayer, self).__init__()
        self.conv = ConvolutionalLayer(in_c, out_c, kernal_size=3, stride=2, pad_mode='pad', padding=1)

    def construct(self, x):
        return self.conv(x)


class ConvolotionalSetLayer(nn.Cell):

    # Convolutional set: five convolutions alternating between 1x1 and 3x3 kernels
    def __init__(self, in_c, out_c):
        super(ConvolotionalSetLayer, self).__init__()
        self.convset = nn.SequentialCell([
            ConvolutionalLayer(in_c, out_c, kernal_size=1, stride=1, pad_mode='same', padding=0),
            ConvolutionalLayer(out_c, out_c, kernal_size=3, stride=1, pad_mode='pad', padding=1),
            ConvolutionalLayer(out_c, in_c, kernal_size=1, stride=1, pad_mode='same', padding=0),
            ConvolutionalLayer(in_c, in_c, kernal_size=3, stride=1, pad_mode='pad', padding=1),
            ConvolutionalLayer(in_c, out_c, kernal_size=1, stride=1, pad_mode='same', padding=0)
        ])

    def construct(self, x):
        return self.convset(x)


# class UpsampleLayer(nn.Cell):

#     # Upsampling layer
#     def __init__(self):
#         super(UpsampleLayer, self).__init__()
#
#     def construct(self, x):
#         return nn.ResizeBilinear()(x, scale_factor=2)

def UpSampleLayer(x):
    # Upsample by a factor of 2 using bilinear interpolation
    return nn.ResizeBilinear()(x, scale_factor=2)


class DarkNet53(nn.Cell):

    def __init__(self):
        super(DarkNet53, self).__init__()
        # Backbone up to the 52x52 feature map (for a 416x416 input)
        self.feature_52 = nn.SequentialCell([
            ConvolutionalLayer(3, 32, kernal_size=3, stride=1, pad_mode='pad', padding=1),
            DownSampleLayer(32, 64),
            ResidualLayer(64),
            DownSampleLayer(64, 128),
            warpLayer(128, 2),
            DownSampleLayer(128, 256),
            warpLayer(256, 8),
        ])
        # Backbone stage producing the 26x26 feature map
        self.feature_26 = nn.SequentialCell([
            DownSampleLayer(256, 512),
            warpLayer(512, 8)
        ])
        # Backbone stage producing the 13x13 feature map
        self.feature_13 = nn.SequentialCell([
            DownSampleLayer(512, 1024),
            warpLayer(1024, 4)
        ])
        # Convolutional sets applied before each detection head
        self.convolset_13 = nn.SequentialCell([
            ConvolotionalSetLayer(1024, 512)
        ])
        self.convolset_26 = nn.SequentialCell([
            ConvolotionalSetLayer(768, 256)
        ])
        self.convolset_52 = nn.SequentialCell([
            ConvolotionalSetLayer(384, 128)
        ])
        # Detection heads: a 3x3 convolution followed by a 1x1 convolution with 255 output channels
        self.detection_13 = nn.SequentialCell([
            ConvolutionalLayer(512, 1024, kernal_size=3, stride=1, pad_mode='pad', padding=1),
            nn.Conv2d(1024, 255, kernel_size=1, stride=1, pad_mode='same', padding=0)
        ])
        self.detection_26 = nn.SequentialCell([
            ConvolutionalLayer(256, 512, kernal_size=3, stride=1, pad_mode='pad', padding=1),
            nn.Conv2d(512, 255, kernel_size=1, stride=1, pad_mode='same', padding=0)
        ])
        self.detection_52 = nn.SequentialCell([
            ConvolutionalLayer(128, 256, kernal_size=3, stride=1, pad_mode='pad', padding=1),
            nn.Conv2d(256, 255, kernel_size=1, stride=1, pad_mode='same', padding=0)
        ])
        # 1x1 convolutions that reduce channels before upsampling
        self.up_26 = nn.SequentialCell([
            ConvolutionalLayer(512, 256, kernal_size=1, stride=1, pad_mode='same', padding=0)
        ])
        self.up_52 = nn.SequentialCell([
            ConvolutionalLayer(256, 128, kernal_size=1, stride=1, pad_mode='same', padding=0)
        ])

    def construct(self, x):
        # Backbone feature maps at three scales
        h_52 = self.feature_52(x)
        h_26 = self.feature_26(h_52)
        h_13 = self.feature_13(h_26)

        # 13x13 branch
        conval_13 = self.convolset_13(h_13)
        detection_13 = self.detection_13(conval_13)

        # 26x26 branch: upsample the 13x13 features and concatenate with h_26 along the channel axis
        up_26_con = self.up_26(conval_13)
        up_26_up = UpSampleLayer(up_26_con)
        # op = P.Concat(axis=1)
        # route_26 = op((up_26_up, h_26))
        route_26 = P.Concat(axis=1)((up_26_up, h_26))
        conval_26 = self.convolset_26(route_26)
        detection_26 = self.detection_26(conval_26)

        # 52x52 branch: upsample the 26x26 features and concatenate with h_52 along the channel axis
        up_52_con = self.up_52(conval_26)
        up_52_up = UpSampleLayer(up_52_con)
        route_52 = P.Concat(axis=1)((up_52_up, h_52))
        conval_52 = self.convolset_52(route_52)
        detection_52 = self.detection_52(conval_52)

        return detection_13, detection_26, detection_52


if __name__ == '__main__':

    # Run an all-ones dummy input in NCHW layout through the network and print the output shapes
    shape = (1, 3, 416, 416)
    x = P.Ones()
    x = x(shape, mstype.float32)
    det_13, det_26, det_52 = DarkNet53()(x)
    print(det_13.shape)
    print(det_26.shape)
    print(det_52.shape)
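The three shapes printed by the test script can be worked out by hand. The sketch below is plain Python and does not need MindSpore. It assumes the usual YOLOv3 setup of 3 anchors per scale and 80 classes, which is one way to arrive at the 255 output channels hard-coded in the detection heads; the post itself does not state these numbers. `head_channels` and `expected_output_shapes` are illustrative names introduced here.

```python
def head_channels(num_anchors=3, num_classes=80):
    # Assumption: each anchor predicts 4 box offsets, 1 objectness score,
    # and one score per class, so 3 * (4 + 1 + 80) = 255.
    return num_anchors * (4 + 1 + num_classes)


def expected_output_shapes(batch=1, input_size=416, strides=(32, 16, 8)):
    """Shapes of (detection_13, detection_26, detection_52) in NCHW layout."""
    channels = head_channels()
    return [(batch, channels, input_size // s, input_size // s) for s in strides]


# Channel counts after each Concat in construct(): upsampled branch + backbone feature.
route_26_channels = 256 + 512  # up_26 output + h_26, matches ConvolotionalSetLayer(768, 256)
route_52_channels = 128 + 256  # up_52 output + h_52, matches ConvolotionalSetLayer(384, 128)

print(expected_output_shapes())
print(route_26_channels, route_52_channels)
```

If the network is wired as in the code above, the script should print shapes matching this list, with 13x13, 26x26, and 52x52 grids for strides 32, 16, and 8.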