Deep Learning: Monkeypox Recognition

1. Introduction

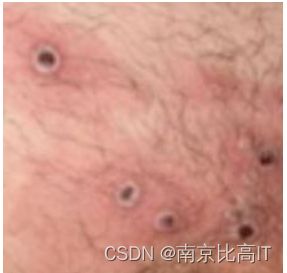

This article uses a hand-built neural network to recognize monkeypox, a major infectious disease of recent years.

2. Preparation

Import dependencies

from tensorflow import keras

from tensorflow.keras import layers,models

import os,PIL,pathlib

import matplotlib.pyplot as plt

import tensorflow as tf

Configure the GPU

gpus=tf.config.list_physical_devices("GPU")

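if gpus:

    gpus0=gpus[0]

    # Allocate GPU memory on demand instead of reserving it all up front
    tf.config.experimental.set_memory_growth(gpus0,True)

    # Restrict TensorFlow to the first GPU
    tf.config.set_visible_devices([gpus0],"GPU")

gpus

3. Experiment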

Get the data directory and count the images:

data_dir="./45-data/"

data_dir=pathlib.Path(data_dir)

image_count=len(list(data_dir.glob('*/*.jpg')))

print("Total number of images:",image_count)

Visualize a monkeypox image

Monkeypox=list(data_dir.glob('Monkeypox/*.jpg'))

PIL.Image.open(str(Monkeypox[0]))

Set the input image size:

batch_size=32

img_height=224

img_width=224

Build the training set

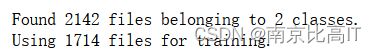

train_ds=tf.keras.preprocessing.image_dataset_from_directory(

    data_dir,

    validation_split=0.2,

    subset="training",

    seed=123,

    image_size=(img_height,img_width),

    batch_size=batch_size

)

Build the validation set

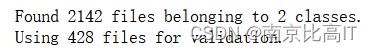

val=tf.keras.preprocessing.image_dataset_from_directory(

    data_dir,

    validation_split=0.2,

    subset="validation",

    seed=123,

    image_size=(img_height,img_width),

    batch_size=batch_size

)
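As a rough check on the two calls above, the following sketch estimates how many images and batches land in each subset. It assumes that Keras takes `int(validation_split * n)` images for validation and leaves the rest for training; `split_sizes` is a hypothetical helper and the total of 1000 images is purely illustrative, not the size of this dataset.

```python
import math

def split_sizes(n_images, validation_split=0.2, batch_size=32):
    """Estimate subset sizes and batch counts (hypothetical helper)."""
    # Assumption: the validation count is the truncated product
    n_val = int(validation_split * n_images)
    n_train = n_images - n_val
    return {
        "train_images": n_train,
        "val_images": n_val,
        # The last batch may be smaller than batch_size, hence ceil
        "train_batches": math.ceil(n_train / batch_size),
        "val_batches": math.ceil(n_val / batch_size),
    }

sizes = split_sizes(1000)  # 1000 is an illustrative total
print(sizes)
```

On a real run, the counts reported by `image_dataset_from_directory` and `len(train_ds)` can be compared against these estimates.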

Get the class labels

class_names=train_ds.class_names

print(class_names)

Visualize the images again:

plt.figure(figsize=(20,10))

for images,labels in train_ds.take(1):

    for i in range(20):

        ax=plt.subplot(5,10,i+1)

        plt.imshow(images[i].numpy().astype("uint8"))

        plt.title(class_names[labels[i]])

        plt.axis("off")

Get the tensor shapes of one batch:

for image_batch,labels_batch in train_ds:

    print(image_batch.shape)

    print(labels_batch.shape)

    break

Configure the dataset:

AUTOTUNE=tf.data.AUTOTUNE

train_ds=train_ds.cache().shuffle(1000).prefetch(buffer_size=AUTOTUNE)

val_ds=val.cache().prefetch(buffer_size=AUTOTUNE)

Build the network model

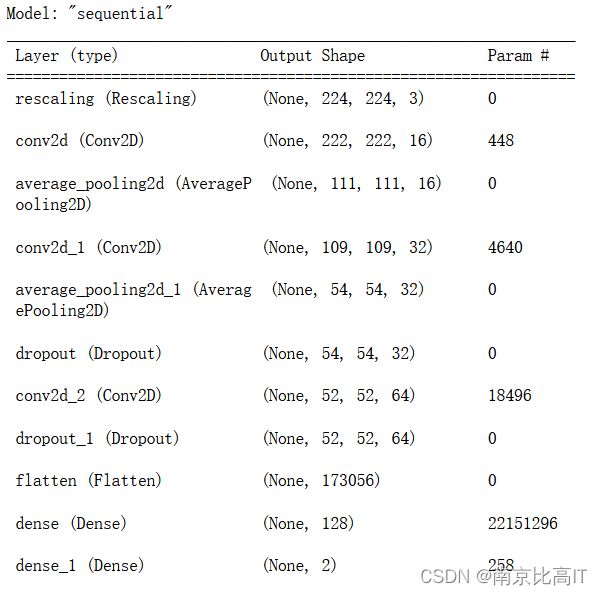

num_classes=2

model=models.Sequential([

    # Scale pixel values from [0, 255] to [0, 1]
    layers.experimental.preprocessing.Rescaling(1./255,input_shape=(img_height,img_width,3)),

    layers.Conv2D(16,(3,3),activation='relu'),

    layers.AveragePooling2D((2,2)),

    layers.Conv2D(32,(3,3),activation='relu'),

    layers.AveragePooling2D((2,2)),

    layers.Dropout(0.3),

    layers.Conv2D(64,(3,3),activation='relu'),

    layers.Dropout(0.3),

    layers.Flatten(),

    layers.Dense(128,activation='relu'),

    # Outputs raw logits (no softmax)
    layers.Dense(num_classes)

])
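As a hand check on the stack above, the sketch below walks through the feature-map sizes and parameter counts. It assumes the Keras defaults: stride 1 with 'valid' padding for `Conv2D`, and a stride equal to the pool size for `AveragePooling2D`. The helper names (`conv_out`, `pool_out`, `conv_params`) are hypothetical and not part of Keras.

```python
def conv_out(size, kernel=3):
    """Output size of a stride-1 convolution with 'valid' padding."""
    return size - kernel + 1

def pool_out(size, pool=2):
    """Output size of non-overlapping pooling with 'valid' padding."""
    return size // pool

def conv_params(in_ch, out_ch, kernel=3):
    """Weights plus one bias per output channel."""
    return kernel * kernel * in_ch * out_ch + out_ch

size, channels, total = 224, 3, 0
# (filters, followed_by_pooling) for the three Conv2D layers
for filters, pooled in [(16, True), (32, True), (64, False)]:
    total += conv_params(channels, filters)
    size = conv_out(size)
    if pooled:
        size = pool_out(size)
    channels = filters
    print(f"after Conv2D({filters}): {size}x{size}x{channels}")

flat = size * size * channels   # input width of the first Dense layer
total += flat * 128 + 128       # Dense(128)
total += 128 * 2 + 2            # Dense(num_classes) with num_classes=2
print("flattened features:", flat)
print("total parameters:", total)
```

If these assumptions hold, nearly all of the roughly 22 million parameters sit in the first `Dense` layer, which may contribute to the overfitting discussed in the summary.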

model.summary()

Set up the optimizer and compile the model

opt=tf.keras.optimizers.Adam(learning_rate=1e-4)

model.compile(

    optimizer=opt,

    loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),

    metrics=['accuracy']

)

Train the model:

from tensorflow.keras.callbacks import ModelCheckpoint

epochs=50

checkpointer=ModelCheckpoint(

    'best_model.h5',

    monitor='val_accuracy',

    verbose=1,

    save_best_only=True,

    save_weights_only=True

)

history=model.fit(train_ds,validation_data=val_ds,epochs=epochs,callbacks=[checkpointer])

Predict with the best weights obtained during training:

model.load_weights('best_model.h5')

from PIL import Image

import numpy as np

img=Image.open('./45-data/Others/NM15_02_11.jpg')

image=tf.image.resize(img,[img_height,img_width])

img_array=tf.expand_dims(image,0)

predictions=model.predict(img_array)

print("Prediction:",class_names[np.argmax(predictions)])

4. Summary

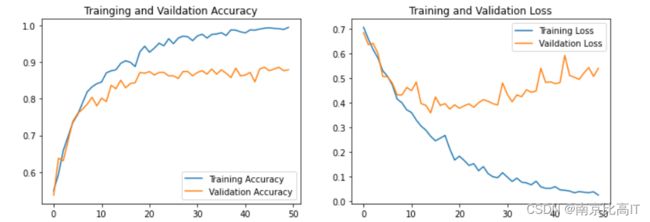

进行模型训练结果的可视化结果如下:

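The curves show that the model trains well up to around epoch 8, with validation accuracy rising steadily. After roughly epoch 20, validation accuracy levels off while training accuracy keeps climbing. The continued gains in the later epochs therefore reflect overfitting to the training set rather than real improvement.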
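The plateau described above can also be located numerically rather than by eye. The sketch below is one possible approach: it finds the epoch with the best validation accuracy and the epoch at which a simple patience rule would have stopped training. The functions `best_epoch` and `early_stop_epoch` are hypothetical helpers, and the accuracy values are made up for illustration; on a real run the list would come from `history.history["val_accuracy"]`.

```python
def best_epoch(val_accuracy):
    """Return (1-based epoch, value) of the first maximum."""
    idx = max(range(len(val_accuracy)), key=val_accuracy.__getitem__)
    return idx + 1, val_accuracy[idx]

def early_stop_epoch(val_accuracy, patience=5):
    """1-based epoch at which training would stop, or None if it never does."""
    best, wait = float("-inf"), 0
    for epoch, acc in enumerate(val_accuracy, start=1):
        if acc > best:
            best, wait = acc, 0   # improvement: reset the counter
        else:
            wait += 1             # no improvement this epoch
            if wait >= patience:
                return epoch
    return None

# Made-up validation accuracies, not results from this experiment
val_acc = [0.60, 0.70, 0.78, 0.82, 0.85, 0.86, 0.86, 0.85, 0.86, 0.85, 0.86]

epoch, value = best_epoch(val_acc)
stop = early_stop_epoch(val_acc, patience=5)
print("best epoch:", epoch, "val_accuracy:", value)
print("patience rule would stop at epoch:", stop)
```

Keras ships a comparable rule as the `tf.keras.callbacks.EarlyStopping` callback, which could be passed to `model.fit` alongside the checkpoint callback to avoid training far past the plateau.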