Deeplab_v2+VOC数据集

概述

Deeplab系列,是图像语意分割的经典之作,用深度学习的方法实现图像分割,绕不过系统学习Deeplab系列,而学习的最快途径就是先把demo跑起来,之后再去细读文献和代码。

本博客主要是基于v2版本的deeplab,使用VGG16模型,在VOC2012数据集上进行测试!

参考了很多博客的精华,但是有些博客记录的不是很全,核心资料就是参看官网:deeplab_v2

我把我的踩坑过程记录如下,让自己再熟悉一遍。记录按照demo运行顺序进行:

收集处理数据:

文章中的数据集由两部分组成,已经有人写好脚本,可以直接从网上拉下来,这样就不去网页上自己手动搜索了。

下载脚本如下:

# 这里建议自己建立一个data文件夹,存放相关数据

# augmented PASCAL VOC #增强数据集

mkdir -p ~/DL_dataset

cd ~/DL_dataset #save datasets 为$DATASETS

wget http://www.eecs.berkeley.edu/Research/Projects/CS/vision/grouping/semantic_contours/benchmark.tgz # 1.3 GB

tar -zxvf benchmark.tgz

mv benchmark_RELEASE VOC_aug

# original PASCAL VOC 2012 #原始数据集

wget http://host.robots.ox.ac.uk/pascal/VOC/voc2012/VOCtrainval_11-May-2012.tar # 2 GB

tar -xvf VOCtrainval_11-May-2012.tar

mv VOCdevkit/VOC2012 VOC2012_orig && rm -r VOCdevkit

数据转换 :

- 因为pascal voc2012增强数据集的label是mat格式的文件,所以我们需要把mat格式的label转为png格式的图片,脚本如下:

cd ./DL_dataset/VOC_aug/dataset

if [ ! -d cls_png ];

then

mkdir cls_png

else

echo dir exist

fi

cd ../../../

python3 ./mat2png.py ./DL_dataset/VOC_aug/dataset/cls ./DL_dataset/VOC_aug/dataset/cls_png

- pascal voc2012原始数据集的label为三通道RGB图像,但是caffe最后一层softmax loss 层只能识别一通道的label,所以此处我们需要对原始数据集的label进行降维,脚本如下:

cd ./DL_dataset/VOC2012_orig

if [ ! -d SegmentationClass_1D ];

then

mkdir SegmentationClass_1D

else

echo dir exist

fi

cd ../../

python3 convert_labels.py ./DL_dataset/VOC2012_orig/SegmentationClass/ ./DL_dataset/VOC2012_orig/ImageSets/Segmentation/trainval.txt ./DL_dataset/VOC2012_orig/SegmentationClass_1D/

为了方便直接使用图片,我们将图片两个图片源合并,且将文件夹改成train.txt里要求的形式:

脚本如下:

cp ./DL_dataset/VOC2012_orig/SegmentationClass_1D/* ./DL_dataset/VOC_aug/dataset/cls_png

cp ./DL_dataset/VOC2012_orig/JPEGImages/* ./DL_dataset/VOC_aug/dataset/img/

echo "复制完毕"

cd ./DL_dataset/VOC_aug/dataset

mv ./img ./JPEGImages

mv ./cls_png ./SegmentationClassAug

echo "文件夹改名"

echo "查看JPEGImages文件数量:"

cd ./JPEGImages

ls -l | grep "^-" | wc -l

echo "查看SegmentationClassAug文件数量::"

cd ../SegmentationClassAug

ls -l | grep "^-" | wc -l

到此处,在 /DL_dataset/VOC_aug/dataset文件夹中

- images数据集的文件名为:JPEGImages ,jpg图片数由5073变为17125

- labels数据集文件名为:cls_png ,png图片数由11355变为12031

数据收集工作也到此结束。

数据收集完之后第二步,就是建立一些文件夹,开始一些配置工作,之后就可以把数据扔进caffe里训练了。

配置环境

参看官方文档:

1、用来运行caffe的脚本和数据list都可以直接下载

2、难点是安装matio

安装matio:

第一步下载:

官网链接

下载下来发现是7z压缩包

第二步解压:

sudo apt-get install p7zip

7z x matio-1.5.12.7z -r -o/home/xx //解压到目标文件夹,若遇到需要下载完整7z提示,按提示下载即可。

sudo apt-get install p7zip-full

第三步安装:

cd matio-1.5.12

./configure //如果这一句不能用的话用 bash configure 代替

make

make check

make install

会出现很多类似错误:

*xxxx/Depends/matio-1.5.12/src’ //报错文件目录 /bin/sh …/libtool --tag=CC --mode=compile mipsel-linux-gcc -DHAVE_CONFIG_H -I. -I… -I…/include -I…/include -O20 -Wall -ffast-math -fsigned-char -g -O2 -MT framing.lo -MD -MP -MF .deps/framing.Tpo -c -o framing.lo framing.c

…/libtool: 1564: …/libtool: preserve_args+= --tag CC: not found

…/libtool: 1: eval: base_compile+= mipsel-linux-gcc: not found

…/libtool: 1: eval: base_compile+= -DHAVE_CONFIG_H: not found

找到src文件里的makefile文件,定位到SHELL变量定义处的/bin/sh,改为/bin/bash后重新编译

这样的文件夹有很多:类似在"src", “tools” , “test” 等文件夹处均遇到上面错误,修改对应的Makefile文件即可。

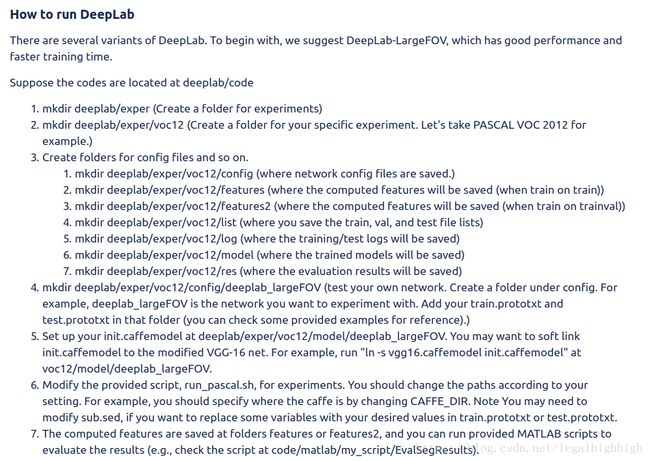

接下来就是配置环境,创建文件夹,及编译caffe了。

参考官网:

第一步:创建文件夹、拉取deep_lab源码,一个脚本搞定:

#!/bin/bash

mkdir deep_lab

cd deep_lab

git clone https://bitbucket.org/aquariusjay/deeplab-public-ver2.git

echo "源码拉取成功"

cd ..

mkdir -p ./deep_lab/exper/voc12/config/deeplab_largeFOV

mkdir -p ./deep_lab/exper/voc12/features/labels

mkdir -p ./deep_lab/exper/voc12/features2/labels

mkdir -p ./deep_lab/exper/voc12/list

mkdir -p ./deep_lab/exper/voc12/model/deeplab_largeFOV

mkdir -p ./deep_lab/exper/voc12/log

mkdir -p ./deep_lab/exper/voc12/res

echo "创建文件夹成功,用来存放txt,log,model等内容"

第二步:拉取配置文件prototxt和训练模型分别放进各自文件夹,脚本如下:

#!/bin/bash

echo "正在下载..."

wget http://liangchiehchen.com/projects/released/deeplab_aspp_vgg16/prototxt_and_model.zip

unzip prototxt_and_model.zip

echo "解压完毕"

mv *.prototxt ./deep_lab/exper/voc12/config/deeplab_largeFOV

mv *caffemodel ./deep_lab/exper/voc12/model/deeplab_largeFOV

rm -rf *.prototxt

rm -rf *caffemodel

echo "完成"

第三步:编译caffe

和BVLC版本一样,对DeepLab的caffe进行编译,我喜欢用cmake

在使用cmake之前,先检查Cmakelist的配置,我需要选择python3编译

#!/bin/bash

cd ./deep_lab/deeplab_public_ver2

mkdir build

cd build

cmake ..

make -j8

make pycafef

make test

echo "完成"

这样编译会出不少问题,主要参考编译错误总结都能顺利解决。

比如:

1、./include/caffe/common.cuh(9): error: function “atomicAdd(double *, double)” has already been defined

原因是CUDA 8.0 提供了对atomicAdd函数的定义,但atomicAdd在之前的CUDA toolkit中并未出现,因此一些程序自定义了atomicAdd函数。

解决方法:打开./include/caffe/common.cuh文件,在atomicAdd前添加宏判断即可。

如下:

#if !defined(__CUDA_ARCH__) || __CUDA_ARCH__ >= 600

#else

static __inline__ __device__ double atomicAdd(double* address, double val)

{

...

}

#endif

2、cuDNN v5环境会出现类似下面的接口错误:

./include/caffe/util/cudnn.hpp: In function ‘void caffe::cudnn::createPoolingDesc(cudnnPoolingStruct**, caffe::PoolingParameter_PoolMethod, cudnnPoolingMode_t*, int, int, int, int, int, int)’:

./include/caffe/util/cudnn.hpp:127:41: error: too few arguments to function ‘cudnnStatus_t cudnnSetPooling2dDescriptor(cudnnPoolingDescriptor_t, cudnnPoolingMode_t, cudnnNanPropagation_t, int, int, int, int, int, int)’

pad_h, pad_w, stride_h, stride_w));

这是由于所使用的cuDNN版本不一致的导致的,作者配置环境是cuDNN 4.0,但是5.0版本后的cuDNN接口有所变化。

解决方法 :将以下几个文件用最新BVLC版本的caffe对应文件替换并重新编译

./include/caffe/util/cudnn.hpp

./include/caffe/layers/cudnn_conv_layer.hpp

./include/caffe/layers/cudnn_relu_layer.hpp

./include/caffe/layers/cudnn_sigmoid_layer.hpp

./include/caffe/layers/cudnn_tanh_layer.hpp

./src/caffe/layers/cudnn_conv_layer.cpp

./src/caffe/layers/cudnn_conv_layer.cu

./src/caffe/layers/cudnn_relu_layer.cpp

./src/caffe/layers/cudnn_relu_layer.cu

./src/caffe/layers/cudnn_sigmoid_layer.cpp

./src/caffe/layers/cudnn_sigmoid_layer.cu

./src/caffe/layers/cudnn_tanh_layer.cpp

./src/caffe/layers/cudnn_tanh_layer.cu

3、使用cmake编译时会遇到以下错误

../lib/libcaffe.so.1.0.0-rc3: undefined reference to `Mat_VarFree'

../lib/libcaffe.so.1.0.0-rc3: undefined reference to `Mat_VarReadDataLinear'

../lib/libcaffe.so.1.0.0-rc3: undefined reference to `Mat_Open'

../lib/libcaffe.so.1.0.0-rc3: undefined reference to `Mat_VarCreate'

../lib/libcaffe.so.1.0.0-rc3: undefined reference to `Mat_CreateVer'

../lib/libcaffe.so.1.0.0-rc3: undefined reference to `Mat_VarWrite'

../lib/libcaffe.so.1.0.0-rc3: undefined reference to `Mat_VarReadInfo'

../lib/libcaffe.so.1.0.0-rc3: undefined reference to `Mat_Close'

解决方法:

下载FindMATIO.cmake.zip文件,解压缩后拷贝到./cmake/Modules目录中。

文件下载路径

并添加以下代码至./cmake/Dependencies.cmake文件中

# ---[ MATIO--add

find_package(MATIO REQUIRED)

include_directories(${MATIO_INCLUDE_DIR})

list(APPEND Caffe_LINKER_LIBS ${MATIO_LIBRARIES})

这样基本能正常编译caffe了。

以上基本就是整个环境配置的全过程,接下来就是开始把deeplab_v2跑起来了。

编译deeplab_v2

之前提到过编译caffe的脚本可以直接下载,但是需要才能连上:

我下载下来的文件如下:包括三个sh和一个sed,放在了voc12文件夹里,接下来就是修改run_pascal.sh里的一些文件地址,然后运行即可。

还有一个list,需要放进list文件夹:

我的文件如下:

#!/bin/sh

## MODIFY PATH for YOUR SETTING

ROOT_DIR=~/Documents/data/deeplab/DL_dataset #此处为voc数据集主路径

CAFFE_DIR=../deeplab-public-ver2 #此处为官方caffe源码文件夹

CAFFE_BIN=${CAFFE_DIR}/build/tools/caffe #需要修改源文件

EXP=.

if [ "${EXP}" = "." ]; then

NUM_LABELS=21

DATA_ROOT=${ROOT_DIR}/VOC_aug/dataset/

else

NUM_LABELS=0

echo "Wrong exp name"

fi

## Specify which model to train

########### voc12 ################

NET_ID=deeplab_largeFOV

## Variables used for weakly or semi-supervisedly training

#TRAIN_SET_SUFFIX=

TRAIN_SET_SUFFIX=_aug

#TRAIN_SET_STRONG=train

#TRAIN_SET_STRONG=train200

#TRAIN_SET_STRONG=train500

#TRAIN_SET_STRONG=train1000

#TRAIN_SET_STRONG=train750

#TRAIN_SET_WEAK_LEN=5000

DEV_ID=0

#####

## Create dirs

CONFIG_DIR=${EXP}/config/${NET_ID}

MODEL_DIR=${EXP}/model/${NET_ID}

mkdir -p ${MODEL_DIR}

LOG_DIR=${EXP}/log/${NET_ID}

mkdir -p ${LOG_DIR}

export GLOG_log_dir=${LOG_DIR}

## Run

RUN_TRAIN=1

RUN_TEST=0

RUN_TRAIN2=0

RUN_TEST2=0

## Training #1 (on train_aug)

if [ ${RUN_TRAIN} -eq 1 ]; then

#

LIST_DIR=${EXP}/list

TRAIN_SET=train${TRAIN_SET_SUFFIX}

if [ -z ${TRAIN_SET_WEAK_LEN} ]; then

TRAIN_SET_WEAK=${TRAIN_SET}_diff_${TRAIN_SET_STRONG}

comm -3 ${LIST_DIR}/${TRAIN_SET}.txt ${LIST_DIR}/${TRAIN_SET_STRONG}.txt > ${LIST_DIR}/${TRAIN_SET_WEAK}.txt

else

TRAIN_SET_WEAK=${TRAIN_SET}_diff_${TRAIN_SET_STRONG}_head${TRAIN_SET_WEAK_LEN}

comm -3 ${LIST_DIR}/${TRAIN_SET}.txt ${LIST_DIR}/${TRAIN_SET_STRONG}.txt | head -n ${TRAIN_SET_WEAK_LEN} > ${LIST_DIR}/${TRAIN_SET_WEAK}.txt

fi

#

MODEL=${EXP}/model/${NET_ID}/init.caffemodel

#

echo Training net ${EXP}/${NET_ID}

for pname in train solver; do

sed "$(eval echo $(cat sub.sed))" \

${CONFIG_DIR}/${pname}.prototxt > ${CONFIG_DIR}/${pname}_${TRAIN_SET}.prototxt

done

CMD="${CAFFE_BIN} train \

--solver=${CONFIG_DIR}/solver_${TRAIN_SET}.prototxt \

--gpu=${DEV_ID}"

if [ -f ${MODEL} ]; then

CMD="${CMD} --weights=${MODEL}"

fi

echo Running ${CMD} && ${CMD}

fi

## Test #1 specification (on val or test)

if [ ${RUN_TEST} -eq 1 ]; then

#

for TEST_SET in val; do

TEST_ITER=`cat ${EXP}/list/${TEST_SET}.txt | wc -l`

MODEL=${EXP}/model/${NET_ID}/test.caffemodel

if [ ! -f ${MODEL} ]; then

MODEL=`ls -t ${EXP}/model/${NET_ID}/train_iter_*.caffemodel | head -n 1`

fi

#

echo Testing net ${EXP}/${NET_ID}

FEATURE_DIR=${EXP}/features/${NET_ID}

mkdir -p ${FEATURE_DIR}/${TEST_SET}/fc8

mkdir -p ${FEATURE_DIR}/${TEST_SET}/fc9

mkdir -p ${FEATURE_DIR}/${TEST_SET}/seg_score

sed "$(eval echo $(cat sub.sed))" \

${CONFIG_DIR}/test.prototxt > ${CONFIG_DIR}/test_${TEST_SET}.prototxt

CMD="${CAFFE_BIN} test \

--model=${CONFIG_DIR}/test_${TEST_SET}.prototxt \

--weights=${MODEL} \

--gpu=${DEV_ID} \

--iterations=${TEST_ITER}"

echo Running ${CMD} && ${CMD}

done

fi

## Training #2 (finetune on trainval_aug)

if [ ${RUN_TRAIN2} -eq 1 ]; then

#

LIST_DIR=${EXP}/list

TRAIN_SET=trainval${TRAIN_SET_SUFFIX}

if [ -z ${TRAIN_SET_WEAK_LEN} ]; then

TRAIN_SET_WEAK=${TRAIN_SET}_diff_${TRAIN_SET_STRONG}

comm -3 ${LIST_DIR}/${TRAIN_SET}.txt ${LIST_DIR}/${TRAIN_SET_STRONG}.txt > ${LIST_DIR}/${TRAIN_SET_WEAK}.txt

else

TRAIN_SET_WEAK=${TRAIN_SET}_diff_${TRAIN_SET_STRONG}_head${TRAIN_SET_WEAK_LEN}

comm -3 ${LIST_DIR}/${TRAIN_SET}.txt ${LIST_DIR}/${TRAIN_SET_STRONG}.txt | head -n ${TRAIN_SET_WEAK_LEN} > ${LIST_DIR}/${TRAIN_SET_WEAK}.txt

fi

#

MODEL=${EXP}/model/${NET_ID}/init2.caffemodel

if [ ! -f ${MODEL} ]; then

MODEL=`ls -t ${EXP}/model/${NET_ID}/train_iter_*.caffemodel | head -n 1`

fi

#

echo Training2 net ${EXP}/${NET_ID}

for pname in train solver2; do

sed "$(eval echo $(cat sub.sed))" \

${CONFIG_DIR}/${pname}.prototxt > ${CONFIG_DIR}/${pname}_${TRAIN_SET}.prototxt

done

CMD="${CAFFE_BIN} train \

--solver=${CONFIG_DIR}/solver2_${TRAIN_SET}.prototxt \

--weights=${MODEL} \

--gpu=${DEV_ID}"

echo Running ${CMD} && ${CMD}

fi

## Test #2 on official test set

if [ ${RUN_TEST2} -eq 1 ]; then

#

for TEST_SET in val test; do

TEST_ITER=`cat ${EXP}/list/${TEST_SET}.txt | wc -l`

MODEL=${EXP}/model/${NET_ID}/test2.caffemodel

if [ ! -f ${MODEL} ]; then

MODEL=`ls -t ${EXP}/model/${NET_ID}/train2_iter_*.caffemodel | head -n 1`

fi

#

echo Testing2 net ${EXP}/${NET_ID}

FEATURE_DIR=${EXP}/features2/${NET_ID}

mkdir -p ${FEATURE_DIR}/${TEST_SET}/fc8

mkdir -p ${FEATURE_DIR}/${TEST_SET}/crf

sed "$(eval echo $(cat sub.sed))" \

${CONFIG_DIR}/test.prototxt > ${CONFIG_DIR}/test_${TEST_SET}.prototxt

CMD="${CAFFE_BIN} test \

--model=${CONFIG_DIR}/test_${TEST_SET}.prototxt \

--weights=${MODEL} \

--gpu=${DEV_ID} \

--iterations=${TEST_ITER}"

echo Running ${CMD} && ${CMD}

done

fi

详细文件可从github上获得,包括脚本和py文件。

欢迎关注个人公号:ThuerStory,讲述毕业后的带娃生活。