Assignment 4: Cats vs. Dogs Challenge

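This lab broadened my knowledge once again and gave me my first exposure to an AI competition.

I first read through the instructor's code and looked closely at how each data structure is put together, which gave me a rough idea of how VGG is used in practice.

Then I carefully read the instructions on the competition website but was still at a loss, so in the end I had to consult blog posts from previous years to get my first submission going.

By this point I was already comfortable with the various convolutional layers as well as training and testing (after all, I had typed out the code for every earlier lab). But because my numpy fundamentals are weak, most of my problems came from things like writing data into a CSV file.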

Round 1

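The code is essentially the same as the instructor's, except that during training I added the following, which saves every model whose accuracy is high enough:

if epoch_acc >= 0.96:  # save the model once accuracy passes a threshold
    localtime = time.strftime('%Y-%m-%d_%H:%M:%S')
    path = log_dir + str(epoch) + '_' + str(epoch_acc) + '_' + localtime
    torch.save(model, path)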

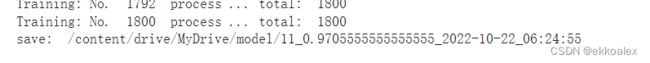

As you can see, I saved a model with 0.972 accuracy. I then loaded it back and used it to predict on the test data:

# Load the model saved earlier
model_vgg_new = torch.load(r'/content/drive/MyDrive/model/19_0.9722222222222222_2022-10-21_09:16:21')
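The save and load calls above pickle the whole model object. A common alternative is to store only the `state_dict`. The sketch below shows that round trip on a small stand-in network and an in-memory buffer; both are assumptions made for illustration, not the VGG model or the Drive paths used in this post. As far as I know, PyTorch 2.6 and later default `torch.load` to `weights_only=True`, so loading a fully pickled model as above may need `weights_only=False` there, while a `state_dict` loads either way.

```python
import io

import torch
import torch.nn as nn

# Small stand-in model (an assumption for illustration, not the VGG used above)
model = nn.Sequential(nn.Linear(4, 8), nn.ReLU(), nn.Linear(8, 2))

# Save only the parameters; a BytesIO buffer stands in for a file path
buffer = io.BytesIO()
torch.save(model.state_dict(), buffer)
buffer.seek(0)

# To load, rebuild the same architecture first, then restore the weights
restored = nn.Sequential(nn.Linear(4, 8), nn.ReLU(), nn.Linear(8, 2))
restored.load_state_dict(torch.load(buffer))
restored.eval()

# Both models should now produce identical outputs
x = torch.randn(3, 4)
same_output = torch.allclose(model.eval()(x), restored(x))
print(same_output)
```

The trade-off is that the architecture has to be rebuilt in code before loading, but the saved file no longer depends on pickling the class definitions.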

The predictions were then written to a CSV file:

with open("/content/drive/MyDrive/csv/result.csv", 'a+') as f:
    for key in range(2000):
        # The path below is where my test images are stored; change it as needed
        f.write("{},{}\n".format(key, dic["/content/drive/MyDrive/data/cat_dog/test/" + str(key)]))

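The submission ended up scoring 97.

This was only my first attempt, and I still didn't understand many of the underlying principles, so next I went through the code line by line.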
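Since writing the CSV was where most of my trouble came from, here is a minimal sketch of that step using the standard `csv` module. The function name `write_predictions`, the temporary path, and the three sample predictions are hypothetical; the only thing taken from the code above is the `id,label` row format.

```python
import csv
import os
import tempfile


def write_predictions(predictions, csv_path):
    """Write {image_id: label} as 'id,label' rows, sorted by numeric id."""
    # 'w' overwrites any earlier file; 'a+' would append to old results instead
    with open(csv_path, "w", newline="") as f:
        writer = csv.writer(f)
        for image_id in sorted(predictions):
            writer.writerow([image_id, int(predictions[image_id])])


# Hypothetical predictions: image id -> class index (0 or 1)
predictions = {2: 1, 0: 0, 1: 1}
csv_path = os.path.join(tempfile.mkdtemp(), "result.csv")
write_predictions(predictions, csv_path)

with open(csv_path) as f:
    rows = f.read().splitlines()
print(rows)
```

Opening with `'w'` means a rerun replaces the file, whereas `'a+'` keeps adding rows to whatever is already there.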

Round 2

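A fairly detailed explanation of transforms:

PyTorch 学习笔记(三):transforms的二十二个方法_TensorSense的博客-CSDN博客

About ImageFolder: I had always assumed the directory you pass in is the folder directly above the images, but it is actually two levels above them, because each class has its own subfolder in between.

torchvision.datasets.ImageFolder_平凡的久月的博客-CSDN博客_datasets.imagefolder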
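To make the "two levels up" point concrete, the sketch below mimics how ImageFolder derives its classes: every immediate subfolder of the root becomes one class, in sorted order. `find_classes` here is my own stand-in written with the standard library, not torchvision's code, and the `cats`/`dogs` folder names are assumptions.

```python
import os
import tempfile


def find_classes(root):
    """Mimic ImageFolder's class discovery: one class per subfolder of root."""
    classes = sorted(e.name for e in os.scandir(root) if e.is_dir())
    return {name: idx for idx, name in enumerate(classes)}


# Build a throwaway layout: root/train/{cats,dogs}/<image files>
root = tempfile.mkdtemp()
for cls in ("cats", "dogs"):
    os.makedirs(os.path.join(root, "train", cls))
    open(os.path.join(root, "train", cls, "0.jpg"), "w").close()

# Correct root: the folder that holds the class folders
print(find_classes(os.path.join(root, "train")))
# One level too high: 'train' itself is treated as the only class
print(find_classes(root))
```

The same rule explains the test set later on: pointing ImageFolder at a folder that contains only `test` gives every image class index 0.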

from google.colab import drive
drive.mount('/content/drive')

import numpy as np
import matplotlib.pyplot as plt
import os
import torch
import torch.nn as nn
import torchvision
from torchvision import models, transforms, datasets
import time
import json

# Check whether a GPU is available
device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
print('Using gpu: %s ' % torch.cuda.is_available())

normalize = transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])
vgg_format = transforms.Compose([
    transforms.CenterCrop(224),
    transforms.ToTensor(),
    normalize,
])

data_dir = './dogscats'
# dsets is a dict keyed by the strings 'train' and 'valid'.
# os.path.join(data_dir, x) is just './dogscats' + '/train' = './dogscats/train'
dsets = {x: datasets.ImageFolder(os.path.join(data_dir, x), vgg_format)
         for x in ['train', 'valid']}
dset_sizes = {x: len(dsets[x]) for x in ['train', 'valid']}

model_vgg = models.vgg16(pretrained=True)
model_vgg = model_vgg.to(device)

# dsets['train'] is an ImageFolder object.
# dsets['train'][0] is a tuple with exactly two elements: a multi-dimensional tensor and the class index.
# After a, b = dsets['train'][0], a is a tensor of torch.Size([3, 224, 224]); len(dsets['train']) is 1800.
loader_train = torch.utils.data.DataLoader(dsets['train'], batch_size=64, shuffle=True, num_workers=6)
loader_valid = torch.utils.data.DataLoader(dsets['valid'], batch_size=5, shuffle=False, num_workers=6)

model_vgg_new = model_vgg
for param in model_vgg_new.parameters():
    param.requires_grad = False
model_vgg_new.classifier._modules['6'] = nn.Linear(4096, 2)
model_vgg_new.classifier._modules['7'] = torch.nn.LogSoftmax(dim=1)
# The layers added above are created on the CPU, so move the model to the device again
model_vgg_new = model_vgg_new.to(device)

criterion = nn.NLLLoss()

# Learning rate
lr = 0.001

# Stochastic gradient descent
optimizer_vgg = torch.optim.SGD(model_vgg_new.classifier[6].parameters(), lr=lr)

'''
Step 2: train the model
'''
log_dir = '/content/drive/MyDrive/model/'

def train_model(model, dataloader, size, epochs=1, optimizer=None):
    model.train()

    for epoch in range(epochs):
        running_loss = 0.0
        running_corrects = 0
        count = 0
        for inputs, classes in dataloader:
            inputs = inputs.to(device)  # inputs is a Tensor, torch.Size([64, 3, 224, 224])
            classes = classes.to(device)
            outputs = model(inputs)
            loss = criterion(outputs, classes)  # outputs is a tensor, torch.Size([64, 2])
            optimizer.zero_grad()
            loss.backward()  # loss is a tensor
            optimizer.step()
            # outputs.data is also a tensor, so why not just use outputs directly?
            _, preds = torch.max(outputs.data, 1)  # preds is a tensor
            running_loss += loss.data.item()  # loss.data.item() is a float
            running_corrects += torch.sum(preds == classes.data)
            count += len(inputs)
            print('Training: No. ', count, ' process ... total: ', size)
        epoch_loss = running_loss / size
        epoch_acc = running_corrects.data.item() / size
        if epoch_acc >= 0.96:  # save the model once accuracy passes a threshold
            localtime = time.strftime('%Y-%m-%d_%H:%M:%S')
            path = log_dir + str(epoch) + '_' + str(epoch_acc) + '_' + localtime
            torch.save(model, path)
            print("save: ", path, "\n")

# Train the model
train_model(model_vgg_new, loader_train, size=dset_sizes['train'], epochs=1,
            optimizer=optimizer_vgg)

# Prediction script: load the saved model and label the competition test set
import numpy as np

import matplotlib.pyplot as plt
import os
import torch
import torch.nn as nn
import torchvision
from torchvision import models, transforms, datasets
import time
import json
from tqdm import tqdm

device = torch.device("cuda:0")

normalize = transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])
vgg_format = transforms.Compose([
    transforms.CenterCrop(224),
    transforms.ToTensor(),
    normalize,
])

# This is where I keep the competition dataset; note that this directory contains only test
dsets_mine = datasets.ImageFolder(r"/content/drive/MyDrive/data/cat_dog", vgg_format)
loader_test = torch.utils.data.DataLoader(dsets_mine, batch_size=1, shuffle=False, num_workers=0)
# dsets_mine.imgs[0][0] is /content/drive/MyDrive/data/cat_dog/test/0.jpg (help() is no use on it)
# dsets_mine.imgs[1][0] is /content/drive/MyDrive/data/cat_dog/test/1.jpg
# dsets_mine.imgs[0][1] is 0, the class index

# Load the model saved earlier
model_vgg_new = torch.load(r'/content/drive/MyDrive/model/19_0.9722222222222222_2022-10-21_09:16:21')
model_vgg_new = model_vgg_new.to(device)
print(dsets_mine.imgs[0][1])

dic = {}

def test(model, dataloader, size):
    model.eval()
    predictions = np.zeros(size)
    print(predictions)
    cnt = 0
    for inputs, _ in tqdm(dataloader):  # inputs is a tensor, torch.Size([1, 3, 224, 224])
        inputs = inputs.to(device)
        outputs = model(inputs)
        _, preds = torch.max(outputs.data, 1)  # preds is a tensor: tensor([0], device='cuda:0')
        # Split the path to get a key, because the samples in dset are not ordered 1-2000.
        # On Colab the paths use '/', so split("\\") leaves the path whole and the key is
        # the full path without ".jpg"; the lookup when writing the CSV relies on that.
        key = dsets_mine.imgs[cnt][0].split("\\")[-1].split('.')[0]
        dic[key] = preds[0]  # preds[0] is a tensor: tensor(0, device='cuda:0')
        cnt = cnt + 1

test(model_vgg_new, loader_test, size=2000)

with open("/content/drive/MyDrive/csv/result.csv", 'a+') as f:
    for key in range(2000):
        f.write("{},{}\n".format(key, dic["/content/drive/MyDrive/data/cat_dog/test/" + str(key)]))
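The reason the samples are not in numeric order is that ImageFolder lists files in sorted string order. The sketch below shows that ordering and a more direct way to pull the numeric id out of a path with `os.path`. The shortened `/data/cat_dog/test/` paths and the helper `image_id` are hypothetical, for illustration only.

```python
import os

# Hypothetical test-image paths (shortened stand-ins for the Drive paths above)
paths = [f"/data/cat_dog/test/{i}.jpg" for i in (0, 1, 2, 10, 11, 100)]

# String sorting puts 10 and 100 before 2, which is the order ImageFolder yields
print(sorted(paths))


def image_id(path):
    """Return the numeric id from a path like '.../test/17.jpg'."""
    stem, _ext = os.path.splitext(os.path.basename(path))
    return int(stem)


# A '/'-separated path contains no backslash, so split("\\") returns it whole
print(paths[0].split("\\")[-1].split(".")[0])

# Ids in the order the dataset would visit the files
print([image_id(p) for p in sorted(paths)])
```

With integer ids as dictionary keys, the CSV loop could look up `dic[key]` directly instead of rebuilding the full path string.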

Improvements

My first idea was to change the optimizer, or to modify the two fully connected layers.

I expected switching to Adam to improve things:

optimizer_vgg = torch.optim.Adam(model_vgg_new.classifier[6].parameters(), lr=lr)

Or add more fully connected layers:

model_vgg_new.classifier._modules['6'] = nn.Linear(4096, 4096)
model_vgg_new.classifier._modules['7'] = nn.ReLU(inplace=True)
model_vgg_new.classifier._modules['8'] = nn.Dropout(p=0.5, inplace=False)
model_vgg_new.classifier._modules['9'] = nn.Linear(4096, 2)
model_vgg_new.classifier._modules['10'] = torch.nn.LogSoftmax(dim=1)

I also tried replacing the parameters of one more layer:

model_vgg_new.classifier._modules['3'] = nn.Linear(4096, 4096)

With that change I could not get even one model to reach 96% accuracy (I later found out the problem was with model.parameters()).
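One plausible reading of the model.parameters() problem is that the optimizer was still given only the last layer's parameters, so a freshly replaced earlier layer stayed at its random initialization. That is my assumption about the cause; the sketch below only illustrates the mechanism on a small stand-in network, not on VGG.

```python
import torch
import torch.nn as nn

# Small stand-in for a pretrained network (an assumption, not VGG itself)
model = nn.Sequential(
    nn.Linear(8, 8), nn.ReLU(),
    nn.Linear(8, 8), nn.ReLU(),
    nn.Linear(8, 2),
)

# Freeze everything, then swap in two fresh layers;
# newly created layers have requires_grad=True by default
for param in model.parameters():
    param.requires_grad = False
model[2] = nn.Linear(8, 8)
model[4] = nn.Linear(8, 2)

# Passing only the last layer to the optimizer would leave model[2] untrained
last_only = list(model[4].parameters())

# Collecting every parameter that still requires gradients covers both new layers
trainable = [p for p in model.parameters() if p.requires_grad]
optimizer = torch.optim.SGD(trainable, lr=0.001)

print(len(last_only), len(trainable))
```

Each `nn.Linear` contributes a weight and a bias, so the last layer alone gives two tensors while the filtered list gives four.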

RESNET

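Next I tried ResNet.

The best run:

Well, the result is not very satisfying.

The code is below. I did not rename many of the variables, so they still carry the vgg names.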

from google.colab import drive
drive.mount('/content/drive')

import numpy as np
import matplotlib.pyplot as plt
import os
import torch
import torch.nn as nn
import torchvision
from torchvision import models, transforms, datasets
import time
import json

# Check whether a GPU is available
device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
print('Using gpu: %s ' % torch.cuda.is_available())

normalize = transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])
vgg_format = transforms.Compose([
    transforms.CenterCrop(224),
    transforms.ToTensor(),
    normalize,
])

data_dir = './dogscats'
# dsets is a dict keyed by the strings 'train' and 'valid'.
# os.path.join(data_dir, x) is just './dogscats' + '/train' = './dogscats/train'
dsets = {x: datasets.ImageFolder(os.path.join(data_dir, x), vgg_format)
         for x in ['train', 'valid']}
dset_sizes = {x: len(dsets[x]) for x in ['train', 'valid']}

model_vgg = torchvision.models.resnet18(pretrained=True)
num_ftrs = model_vgg.fc.in_features
model_vgg = model_vgg.to(device)

# dsets['train'] is an ImageFolder object.
# dsets['train'][0] is a tuple with exactly two elements: a multi-dimensional tensor and the class index.
# After a, b = dsets['train'][0], a is a tensor of torch.Size([3, 224, 224]); len(dsets['train']) is 1800.
loader_train = torch.utils.data.DataLoader(dsets['train'], batch_size=64, shuffle=True, num_workers=6)
loader_valid = torch.utils.data.DataLoader(dsets['valid'], batch_size=5, shuffle=False, num_workers=6)

model_vgg_new = model_vgg
for param in model_vgg_new.parameters():
    param.requires_grad = False
model_vgg.fc = nn.Linear(num_ftrs, 2)
model_vgg_new = model_vgg_new.to(device)

criterion = torch.nn.CrossEntropyLoss()

# Learning rate
lr = 0.01

# Stochastic gradient descent
optimizer_vgg = torch.optim.SGD(model_vgg.fc.parameters(), lr=lr, weight_decay=0.001)

'''
Step 2: train the model
'''
log_dir = '/content/drive/MyDrive/model/'

def train_model(model, dataloader, size, epochs=1, optimizer=None):
    model.train()

    for epoch in range(epochs):
        running_loss = 0.0
        running_corrects = 0
        count = 0
        for inputs, classes in dataloader:
            inputs = inputs.to(device)  # inputs is a Tensor, torch.Size([64, 3, 224, 224])
            classes = classes.to(device)
            outputs = model(inputs)
            loss = criterion(outputs, classes)  # outputs is a tensor, torch.Size([64, 2])
            optimizer.zero_grad()
            loss.backward()  # loss is a tensor
            optimizer.step()
            # outputs.data is also a tensor, so why not just use outputs directly?
            _, preds = torch.max(outputs.data, 1)  # preds is a tensor
            running_loss += loss.data.item()  # loss.data.item() is a float
            running_corrects += torch.sum(preds == classes.data)
            count += len(inputs)
            print('Training: No. ', count, ' process ... total: ', size)
        epoch_loss = running_loss / size
        epoch_acc = running_corrects.data.item() / size
        if epoch_acc >= 0.96:  # save the model once accuracy passes a threshold
            localtime = time.strftime('%Y-%m-%d_%H:%M:%S')
            path = log_dir + str(epoch) + '_' + str(epoch_acc) + '_' + localtime
            torch.save(model, path)
            print("save: ", path, "\n")

# Train the model
train_model(model_vgg_new, loader_train, size=dset_sizes['train'], epochs=20,
            optimizer=optimizer_vgg)

help && dir

dic_imagenet is a list.

for data in loader_valid:  # data here is actually a list containing inputs and labels
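The note about `data` can be checked on a toy loader. The sketch below uses a `TensorDataset` of random tensors as a stand-in for `dsets['valid']` (an assumption for illustration; the shapes are made up) and unpacks each batch into inputs and labels.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

# Toy dataset standing in for dsets['valid']: 10 fake "images" with labels
inputs = torch.randn(10, 3, 4, 4)
labels = torch.randint(0, 2, (10,))
loader = DataLoader(TensorDataset(inputs, labels), batch_size=5, shuffle=False)

for data in loader:
    # Each batch holds two elements: the stacked inputs and the stacked labels
    batch_inputs, batch_labels = data
    print(type(data).__name__, batch_inputs.shape, batch_labels.shape)
```

Writing `for inputs, classes in dataloader`, as the training loop does, performs the same unpacking in one step.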