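A First Model Example with NNoM

Contents

I. Setting up the Keras development environment

II. Installing Visual Studio 2019

1. Download and install

2. Configure the MSVC compiler for command-line use

III. Building the first NNoM demo

1. Download the source code

2. Install the dependencies

3. Build auto_test

IV. Porting

1. Create a new VS project

2. Copy the relevant source files

3. Configure the project

4. Build and run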

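I. Setting up the Keras development environment

Reference: keras环境搭建 (Keras environment setup), from 卡卡6's blog on CSDN.

II. Installing Visual Studio 2019

1. Download and install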

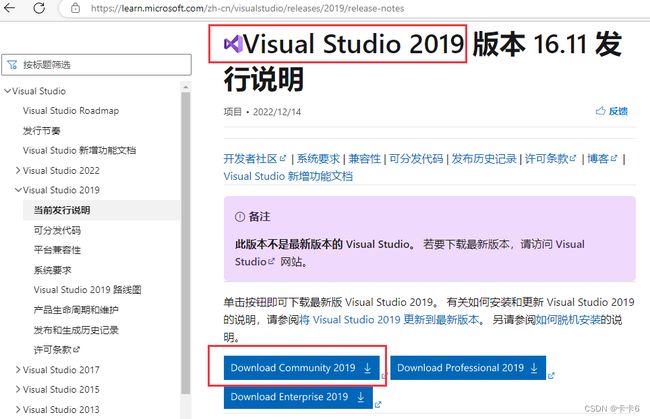

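Install the Community edition of VS2019. The official page is:

Visual Studio 2019 version 16.11 Release Notes | Microsoft Learn

Note: (1) be sure to use the default installation path on drive C:, because the environment variables configured in the next step assume it; (2) selecting only the "Desktop development with C++" workload is enough.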


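When the installation finishes, restart the computer.

Optionally, create a simple C++ project to check that the installation works (steps omitted here).

2. Configure the MSVC compiler for command-line use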

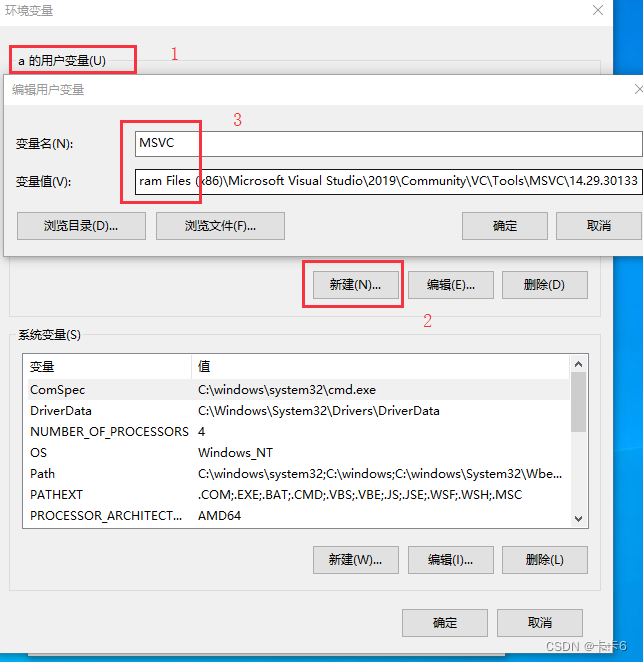

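Create the following variable under the current user's environment variables:

    MSVC: C:\Program Files (x86)\Microsoft Visual Studio\2019\Community\VC\Tools\MSVC\14.29.30133

As shown in the figure:

In the same way, add the following environment variables one by one:

    WK10_BIN: C:\Program Files (x86)\Windows Kits\10\bin\10.0.19041.0
    WK10_LIB: C:\Program Files (x86)\Windows Kits\10\Lib\10.0.19041.0
    WK10_INCLUDE: C:\Program Files (x86)\Windows Kits\10\Include\10.0.19041.0
    INCLUDE: %WK10_INCLUDE%\ucrt;%WK10_INCLUDE%\um;%WK10_INCLUDE%\shared;%MSVC%\include;
    LIB: %WK10_LIB%\um\x64;%WK10_LIB%\ucrt\x64;%MSVC%\lib\x64;

The version directories (14.29.30133 and 10.0.19041.0) depend on the installed toolset and Windows SDK, so adjust them to match what is on your machine.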

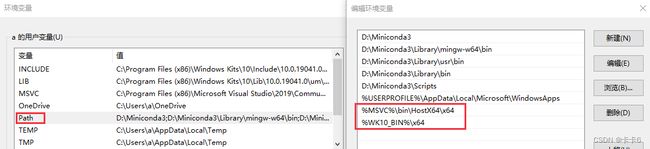

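Finally, add the following entries to the Path environment variable:

    %MSVC%\bin\HostX64\x64
    %WK10_BIN%\x64

As shown in the figure:

To test the setup, create a file named hello.cpp:

    #include <stdio.h>

    int main()
    {
        printf("hello msvc\n");
        return 0;
    }

Open a Cmd window in that directory, compile the source file with the cl command (for example, cl hello.cpp), and then run the resulting hello.exe.

If hello.exe prints its message, the cl command is working. The later build of the Keras model demo depends on cl, so make sure this step succeeds before continuing.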
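The same check can be scripted. The following is a minimal sketch, not part of the original setup, that reports which of the variables defined above are missing and whether cl can be found through Path. The helper names `missing_vars` and `missing_dirs` are made up for this illustration.

```python
import os
import shutil

# Variable names taken from the configuration steps above.
REQUIRED_VARS = ["MSVC", "WK10_BIN", "WK10_LIB", "WK10_INCLUDE", "INCLUDE", "LIB"]


def missing_vars(env):
    """Return the names from REQUIRED_VARS that are unset or empty in env."""
    return [name for name in REQUIRED_VARS if not env.get(name)]


def missing_dirs(env, names=("MSVC", "WK10_BIN", "WK10_LIB", "WK10_INCLUDE")):
    """Return (name, path) pairs whose value is set but is not an existing directory."""
    return [(n, env[n]) for n in names if env.get(n) and not os.path.isdir(env[n])]


if __name__ == "__main__":
    env = os.environ
    print("missing variables:", missing_vars(env))
    print("missing directories:", missing_dirs(env))
    # shutil.which searches Path the same way the Cmd prompt does.
    print("cl found at:", shutil.which("cl"))
```

Run it from a newly opened Cmd window, since changes to environment variables only apply to processes started afterwards.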

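III. Building the first NNoM demo

1. Download the source code

Repository: GitHub - majianjia/nnom: A higher-level Neural Network library for microcontrollers.

2. Install the dependencies

Building and compiling the Keras model depends on scons and the machine learning library scikit-learn:

    pip install scons
    pip install scikit-learn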
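To confirm that the packages are visible to the interpreter that will run main.py, a quick check along these lines may help. It is only a sketch: the import names `SCons` and `sklearn` are the usual import names for the scons and scikit-learn packages, and `tensorflow` is included as an assumption because the build log of this demo prints TensorFlow messages.

```python
import importlib.util

# Import names differ from the pip package names:
#   scons -> SCons, scikit-learn -> sklearn.
# tensorflow is an assumption based on the TensorFlow lines in the build log.
MODULES = ["SCons", "sklearn", "tensorflow"]


def missing_modules(names):
    """Return the top-level module names that cannot be found by the import system."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules(MODULES)
    print("missing modules:", missing if missing else "none")
```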

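3. Build auto_test

Change into the demo directory (examples\auto_test in the source tree) and run: python main.py

The build produces the following output: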

    (base) C:\Users\a\Desktop\nnom-master\examples\auto_test>python main.py
    ['C:\\Users\\a\\Desktop\\nnom-master\\examples\\auto_test', 'D:\\Miniconda3\\python37.zip', 'D:\\Miniconda3\\DLLs', 'D:\\Miniconda3\\lib', 'D:\\Miniconda3', 'D:\\Miniconda3\\lib\\site-packages', 'D:\\Miniconda3\\lib\\site-packages\\win32', 'D:\\Miniconda3\\lib\\site-packages\\win32\\lib', 'D:\\Miniconda3\\lib\\site-packages\\Pythonwin', 'C:\\Users\\a\\Desktop\\nnom-master\\scripts']
    60000 train samples
    10000 test samples
    x_train shape: (60000, 28, 28, 1)
    data range 0.0 1.0
    2022-12-27 16:39:09.432602: I tensorflow/core/platform/cpu_feature_guard.cc:142] This TensorFlow binary is optimized with oneAPI Deep Neural Network Library (oneDNN)to use the following CPU instructions in performance-critical operations: AVX2
    To enable them in other operations, rebuild TensorFlow with the appropriate compiler flags.
    2022-12-27 16:39:09.442539: I tensorflow/compiler/xla/service/service.cc:168] XLA service 0x24e46b20410 initialized for platform Host (this does not guarantee that XLA will be used). Devices:
    2022-12-27 16:39:09.442681: I tensorflow/compiler/xla/service/service.cc:176] StreamExecutor device (0): Host, Default Version
    Model: "functional_1"
    _________________________________________________________________
    Layer (type)                 Output Shape              Param #
    =================================================================
    input_1 (InputLayer)         [(None, 28, 28, 1)]       0
    _________________________________________________________________
    conv2d (Conv2D)              (None, 26, 26, 16)        160
    _________________________________________________________________
    batch_normalization (BatchNo (None, 26, 26, 16)        64
    _________________________________________________________________
    conv2d_1 (Conv2D)            (None, 22, 22, 16)        6416
    _________________________________________________________________
    batch_normalization_1 (Batch (None, 22, 22, 16)        64
    _________________________________________________________________
    leaky_re_lu (LeakyReLU)      (None, 22, 22, 16)        0
    _________________________________________________________________
    max_pooling2d (MaxPooling2D) (None, 11, 11, 16)        0
    _________________________________________________________________
    dropout (Dropout)            (None, 11, 11, 16)        0
    _________________________________________________________________
    depthwise_conv2d (DepthwiseC (None, 7, 7, 32)          320
    _________________________________________________________________
    batch_normalization_2 (Batch (None, 7, 7, 32)          128
    _________________________________________________________________
    re_lu (ReLU)                 (None, 7, 7, 32)          0
    _________________________________________________________________
    dropout_1 (Dropout)          (None, 7, 7, 32)          0
    _________________________________________________________________
    conv2d_2 (Conv2D)            (None, 7, 7, 16)          528
    _________________________________________________________________
    batch_normalization_3 (Batch (None, 7, 7, 16)          64
    _________________________________________________________________
    re_lu_1 (ReLU)               (None, 7, 7, 16)          0
    _________________________________________________________________
    max_pooling2d_1 (MaxPooling2 (None, 4, 4, 16)          0
    _________________________________________________________________
    dropout_2 (Dropout)          (None, 4, 4, 16)          0
    _________________________________________________________________
    flatten (Flatten)            (None, 256)               0
    _________________________________________________________________
    dense (Dense)                (None, 64)                16448
    _________________________________________________________________
    re_lu_2 (ReLU)               (None, 64)                0
    _________________________________________________________________
    dropout_3 (Dropout)          (None, 64)                0
    _________________________________________________________________
    dense_1 (Dense)              (None, 10)                650
    _________________________________________________________________
    softmax (Softmax)            (None, 10)                0
    =================================================================
    Total params: 24,842
    Trainable params: 24,682
    Non-trainable params: 160
    _________________________________________________________________
    Epoch 1/2
    938/938 - 120s - loss: 0.5140 - accuracy: 0.8334 - val_loss: 0.1132 - val_accuracy: 0.9654
    Epoch 2/2
    938/938 - 117s - loss: 0.1902 - accuracy: 0.9421 - val_loss: 0.0777 - val_accuracy: 0.9749
    binary test file generated: test_data.bin
    test data length: 1000
    32/32 - 0s - loss: 0.0960 - accuracy: 0.9700
    Test loss: 0.09596335142850876
    Top 1: 0.9700000286102295
    [[ 84   0   0   0   0   1   0   0   0   0]
     [  0 126   0   0   0   0   0   0   0   0]
     [  1   1 110   0   0   0   0   3   1   0]
     [  0   0   0 103   0   3   0   1   0   0]
     [  0   1   0   0 104   0   1   0   0   4]
     [  0   0   0   0   0  86   1   0   0   0]
     [  3   0   0   0   0   0  84   0   0   0]
     [  0   0   0   0   0   1   0  98   0   0]
     [  2   0   1   0   1   0   0   1  83   1]
     [  0   0   0   0   0   0   0   2   0  92]]
    input_1 Quantized method: max-min Values max: 1.0 min: 0.0 dec bit 7
    conv2d Quantized method: max-min Values max: 0.8345297 min: -0.5735731 dec bit 7
    batch_normalization Quantized method: max-min Values max: 6.404142 min: -6.462939 dec bit 4
    conv2d_1 Quantized method: max-min Values max: 19.733093 min: -25.782127 dec bit 2
    batch_normalization_1 Quantized method: max-min Values max: 3.6588879 min: -4.0082974 dec bit 5
    leaky_re_lu Quantized method: max-min Values max: 3.6588879 min: -4.0082974 dec bit 5
    max_pooling2d Quantized method: max-min Values max: 3.6588879 min: -4.0082974 dec bit 5
    dropout Quantized method: max-min Values max: 3.6588879 min: -4.0082974 dec bit 5
    depthwise_conv2d Quantized method: max-min Values max: 1.4940153 min: -1.1628311 dec bit 6
    batch_normalization_2 Quantized method: max-min Values max: 6.617676 min: -5.725276 dec bit 4
    re_lu Quantized method: max-min Values max: 6.617676 min: -5.725276 dec bit 4
    dropout_1 Quantized method: max-min Values max: 6.617676 min: -5.725276 dec bit 4
    conv2d_2 Quantized method: max-min Values max: 5.6870685 min: -3.9420059 dec bit 4
    batch_normalization_3 Quantized method: max-min Values max: 5.288866 min: -3.8065898 dec bit 4
    re_lu_1 Quantized method: max-min Values max: 5.288866 min: -3.8065898 dec bit 4
    max_pooling2d_1 Quantized method: max-min Values max: 5.288866 min: -3.8065898 dec bit 4
    dropout_2 Quantized method: max-min Values max: 5.288866 min: -3.8065898 dec bit 4
    flatten Quantized method: max-min Values max: 5.288866 min: -3.8065898 dec bit 4
    dense Quantized method: max-min Values max: 9.351792 min: -8.989536 dec bit 3
    re_lu_2 Quantized method: max-min Values max: 9.351792 min: -8.989536 dec bit 3
    dropout_3 Quantized method: max-min Values max: 9.351792 min: -8.989536 dec bit 3
    dense_1 Quantized method: max-min Values max: 17.520363 min: -14.6242285 dec bit 2
    softmax Quantized method: max-min Values max: 0.9999988 min: 1.5207032e-12 dec bit 7
    quantisation list {'input_1': [7, 0], 'conv2d': [4, 0], 'batch_normalization': [4, 0], 'conv2d_1': [5, 0], 'batch_normalization_1': [5, 0], 'leaky_re_lu': [5, 0], 'max_pooling2d': [5, 0], 'dropout': [5, 0], 'depthwise_conv2d': [4, 0], 'batch_normalization_2': [4, 0], 're_lu': [4, 0], 'dropout_1': [4, 0], 'conv2d_2': [4, 0], 'batch_normalization_3': [4, 0], 're_lu_1': [4, 0], 'max_pooling2d_1': [4, 0], 'dropout_2': [4, 0], 'flatten': [4, 0], 'dense': [3, 0], 're_lu_2': [3, 0], 'dropout_3': [3, 0], 'dense_1': [2, 0], 'softmax': [7, 0]}
    fusing batch normalization to conv2d
    original weight max 0.2417007 min -0.20782447
    original bias max 0.10783075 min -0.08728593
    fused weight max 3.562787 min -3.2020652
    fused bias max 0.51197034 min -0.49423537
    quantizing weights for layer conv2d
    tensor_conv2d_kernel_0 dec bit 5
    tensor_conv2d_bias_0 dec bit 7
    quantizing weights for layer batch_normalization
    fusing batch normalization to conv2d_1
    original weight max 0.19523335 min -0.1901753
    original bias max 0.029084973 min -0.0711878
    fused weight max 0.043349944 min -0.044777423
    fused bias max -0.16451724 min -0.49612433
    quantizing weights for layer conv2d_1
    tensor_conv2d_1_kernel_0 dec bit 11
    tensor_conv2d_1_bias_0 dec bit 8
    quantizing weights for layer batch_normalization_1
    fusing batch normalization to depthwise_conv2d
    original weight max 0.35383728 min -0.23351322
    original bias max 0.042270824 min -0.033526164
    fused weight max 4.7558317 min -2.1644483
    fused bias max 0.95259607 min -1.1018924
    quantizing weights for layer depthwise_conv2d
    tensor_depthwise_conv2d_depthwise_kernel_0 dec bit 4
    tensor_depthwise_conv2d_bias_0 dec bit 6
    quantizing weights for layer batch_normalization_2
    fusing batch normalization to conv2d_2
    original weight max 0.5379859 min -0.4994891
    original bias max 0.014815442 min -0.011116973
    fused weight max 0.62768686 min -0.4733633
    fused bias max 0.5063162 min -0.5095656
    quantizing weights for layer conv2d_2
    tensor_conv2d_2_kernel_0 dec bit 7
    tensor_conv2d_2_bias_0 dec bit 7
    quantizing weights for layer batch_normalization_3
    quantizing weights for layer dense
    tensor_dense_kernel_0 dec bit 8
    tensor_dense_bias_0 dec bit 11
    quantizing weights for layer dense_1
    tensor_dense_1_kernel_0 dec bit 8
    tensor_dense_1_bias_0 dec bit 10
    scons: Reading SConscript files ...
    scons: done reading SConscript files.
    scons: Building targets ...
    CC main.c
    cl: 命令行 warning D9002 :忽略未知选项“-std=c99”
    main.c
    CC C:\Users\a\Desktop\nnom-master\src\core\nnom.c
    cl: 命令行 warning D9002 :忽略未知选项“-std=c99”
    nnom.c
    CC C:\Users\a\Desktop\nnom-master\src\core\nnom_layers.c
    cl: 命令行 warning D9002 :忽略未知选项“-std=c99”
    nnom_layers.c
    CC C:\Users\a\Desktop\nnom-master\src\core\nnom_tensor.c
    cl: 命令行 warning D9002 :忽略未知选项“-std=c99”
    nnom_tensor.c
    CC C:\Users\a\Desktop\nnom-master\src\core\nnom_utils.c
    cl: 命令行 warning D9002 :忽略未知选项“-std=c99”
    nnom_utils.c
    CC C:\Users\a\Desktop\nnom-master\src\layers\nnom_activation.c
    cl: 命令行 warning D9002 :忽略未知选项“-std=c99”
    nnom_activation.c
    CC C:\Users\a\Desktop\nnom-master\src\layers\nnom_avgpool.c
    cl: 命令行 warning D9002 :忽略未知选项“-std=c99”
    nnom_avgpool.c
    CC C:\Users\a\Desktop\nnom-master\src\layers\nnom_baselayer.c
    cl: 命令行 warning D9002 :忽略未知选项“-std=c99”
    nnom_baselayer.c
    CC C:\Users\a\Desktop\nnom-master\src\layers\nnom_concat.c
    cl: 命令行 warning D9002 :忽略未知选项“-std=c99”
    nnom_concat.c
    CC C:\Users\a\Desktop\nnom-master\src\layers\nnom_conv2d.c
    cl: 命令行 warning D9002 :忽略未知选项“-std=c99”
    nnom_conv2d.c
    CC C:\Users\a\Desktop\nnom-master\src\layers\nnom_conv2d_trans.c
    cl: 命令行 warning D9002 :忽略未知选项“-std=c99”
    nnom_conv2d_trans.c
    CC C:\Users\a\Desktop\nnom-master\src\layers\nnom_cropping.c
    cl: 命令行 warning D9002 :忽略未知选项“-std=c99”
    nnom_cropping.c
    CC C:\Users\a\Desktop\nnom-master\src\layers\nnom_dense.c
    cl: 命令行 warning D9002 :忽略未知选项“-std=c99”
    nnom_dense.c
    CC C:\Users\a\Desktop\nnom-master\src\layers\nnom_dw_conv2d.c
    cl: 命令行 warning D9002 :忽略未知选项“-std=c99”
    nnom_dw_conv2d.c
    CC C:\Users\a\Desktop\nnom-master\src\layers\nnom_flatten.c
    cl: 命令行 warning D9002 :忽略未知选项“-std=c99”
    nnom_flatten.c
    CC C:\Users\a\Desktop\nnom-master\src\layers\nnom_global_pool.c
    cl: 命令行 warning D9002 :忽略未知选项“-std=c99”
    nnom_global_pool.c
    CC C:\Users\a\Desktop\nnom-master\src\layers\nnom_gru_cell.c
    cl: 命令行 warning D9002 :忽略未知选项“-std=c99”
    nnom_gru_cell.c
    CC C:\Users\a\Desktop\nnom-master\src\layers\nnom_input.c
    cl: 命令行 warning D9002 :忽略未知选项“-std=c99”
    nnom_input.c
    CC C:\Users\a\Desktop\nnom-master\src\layers\nnom_lambda.c
    cl: 命令行 warning D9002 :忽略未知选项“-std=c99”
    nnom_lambda.c
    CC C:\Users\a\Desktop\nnom-master\src\layers\nnom_lstm_cell.c
    cl: 命令行 warning D9002 :忽略未知选项“-std=c99”
    nnom_lstm_cell.c
    CC C:\Users\a\Desktop\nnom-master\src\layers\nnom_matrix.c
    cl: 命令行 warning D9002 :忽略未知选项“-std=c99”
    nnom_matrix.c
    CC C:\Users\a\Desktop\nnom-master\src\layers\nnom_maxpool.c
    cl: 命令行 warning D9002 :忽略未知选项“-std=c99”
    nnom_maxpool.c
    CC C:\Users\a\Desktop\nnom-master\src\layers\nnom_output.c
    cl: 命令行 warning D9002 :忽略未知选项“-std=c99”
    nnom_output.c
    CC C:\Users\a\Desktop\nnom-master\src\layers\nnom_reshape.c
    cl: 命令行 warning D9002 :忽略未知选项“-std=c99”
    nnom_reshape.c
    CC C:\Users\a\Desktop\nnom-master\src\layers\nnom_rnn.c
    cl: 命令行 warning D9002 :忽略未知选项“-std=c99”
    nnom_rnn.c
    CC C:\Users\a\Desktop\nnom-master\src\layers\nnom_simple_cell.c
    cl: 命令行 warning D9002 :忽略未知选项“-std=c99”
    nnom_simple_cell.c
    CC C:\Users\a\Desktop\nnom-master\src\layers\nnom_softmax.c
    cl: 命令行 warning D9002 :忽略未知选项“-std=c99”
    nnom_softmax.c
    CC C:\Users\a\Desktop\nnom-master\src\layers\nnom_sumpool.c
    cl: 命令行 warning D9002 :忽略未知选项“-std=c99”
    nnom_sumpool.c
    CC C:\Users\a\Desktop\nnom-master\src\layers\nnom_upsample.c
    cl: 命令行 warning D9002 :忽略未知选项“-std=c99”
    nnom_upsample.c
    CC C:\Users\a\Desktop\nnom-master\src\layers\nnom_zero_padding.c
    cl: 命令行 warning D9002 :忽略未知选项“-std=c99”
    nnom_zero_padding.c
    CC C:\Users\a\Desktop\nnom-master\src\backends\nnom_local.c
    cl: 命令行 warning D9002 :忽略未知选项“-std=c99”
    nnom_local.c
    CC C:\Users\a\Desktop\nnom-master\src\backends\nnom_local_q15.c
    cl: 命令行 warning D9002 :忽略未知选项“-std=c99”
    nnom_local_q15.c
    LINK mnist.exe
    scons: done building targets.
    validation size: 785024
    Model version: 0.4.3
    NNoM version 0.4.3
    To disable logs, please void the marco 'NNOM_LOG(...)' in 'nnom_port.h'.
    Data format: Channel last (HWC)
    Start compiling model...
    Layer(#)         Activation    output shape    ops(MAC)   mem(in, out, buf)      mem blk lifetime
    -------------------------------------------------------------------------------------------------
    #1   Input      -          - (  28,  28,   1,)          (   784,   784,     0)    1 - - -  - - - -
    #2   Conv2D     -          - (  26,  26,  16,)      97k (   784, 10816,     0)    1 1 - -  - - - -
    #3   Conv2D     - LkyReLU  - (  22,  22,  16,)    3.09M ( 10816,  7744,     0)    1 1 - -  - - - -
    #4   MaxPool    -          - (  11,  11,  16,)          (  7744,  1936,     0)    1 1 1 -  - - - -
    #5   DW_Conv2D  - AdvReLU  - (   7,   7,  32,)      14k (  1936,  1568,     0)    1 - 1 -  - - - -
    #6   Conv2D     - ReLU     - (   7,   7,  16,)      25k (  1568,   784,     0)    1 1 - -  - - - -
    #7   MaxPool    -          - (   4,   4,  16,)          (   784,   256,     0)    1 1 1 -  - - - -
    #8   Flatten    -          - ( 256,          )          (   256,   256,     0)    - - 1 -  - - - -
    #9   Dense      - ReLU     - (  64,          )      16k (   256,    64,   512)    1 1 1 -  - - - -
    #10  Dense      -          - (  10,          )      640 (    64,    10,   128)    1 1 1 -  - - - -
    #11  Softmax    -          - (  10,          )          (    10,    10,     0)    1 - 1 -  - - - -
    #12  Output     -          - (  10,          )          (    10,    10,     0)    1 - - -  - - - -
    -------------------------------------------------------------------------------------------------
    Memory cost by each block:
     blk_0:7744 blk_1:10816 blk_2:1936 blk_3:0 blk_4:0 blk_5:0 blk_6:0 blk_7:0
    Memory cost by network buffers: 20496 bytes
    Total memory occupied: 24536 bytes
    Processing 12%
    Processing 25%
    Processing 38%
    Processing 51%
    Processing 63%
    Processing 76%
    Processing 89%
    Processing 100%
    Prediction summary:
    Test frames: 1000
    Test running time: 0 sec
    Model running time: 0 ms
    Average prediction time: 0 us
    Top 1 Accuracy: 97.20%
    Top 2 Accuracy: 98.70%
    Top 3 Accuracy: 98.80%
    Top 4 Accuracy: 99.20%
    Confusion matrix:
    predict     0     1     2     3     4     5     6     7     8     9
    actual
       0 |     84     0     0     0     0     1     0     0     0     0   |  98%
       1 |      0   126     0     0     0     0     0     0     0     0   | 100%
       2 |      2     0   110     0     0     0     1     3     0     0   |  94%
       3 |      0     0     0   104     0     2     0     1     0     0   |  97%
       4 |      0     1     0     0   105     0     0     0     0     4   |  95%
       5 |      0     0     0     0     0    86     1     0     0     0   |  98%
       6 |      3     0     0     0     0     0    84     0     0     0   |  96%
       7 |      0     0     0     0     0     1     0    98     0     0   |  98%
       8 |      2     0     1     0     1     0     0     1    83     1   |  93%
       9 |      0     0     0     0     0     0     0     2     0    92   |  97%
    Print running stat..
    Layer(#)        -   Time(us)     ops(MACs)   ops/us
    --------------------------------------------------------
    #1   Input      -         0
    #2   Conv2D     -         0           97k
    #3   Conv2D     -         0         3.09M
    #4   MaxPool    -         0
    #5   DW_Conv2D  -         0           14k
    #6   Conv2D     -         0           25k
    #7   MaxPool    -         0
    #8   Flatten    -         0
    #9   Dense      -         0           16k
    #10  Dense      -         0           640
    #11  Softmax    -         0
    #12  Output     -         0
    Summary:
    Total ops (MAC): 3251168(3.25M)
    Prediction time :0us
    Total memory:24536
    Top 1 Accuracy on Keras 97.00%
    Top 1 Accuracy on NNoM 97.20%

    (base) C:\Users\a\Desktop\nnom-master\examples\auto_test>
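The "dec bit" values in the log are the number of fractional bits of an 8-bit fixed-point format chosen from each layer's max and min values. The sketch below shows one formula that reproduces the numbers printed above. The small saturation allowance and the function names `dec_bits_max_min` and `quantize` are assumptions made for this illustration, not something stated in this post, so check NNoM's own scripts for the authoritative version.

```python
import math


def dec_bits_max_min(max_val, min_val, bit_width=8):
    """Fractional ("dec") bits for a signed fixed-point value of bit_width bits."""
    # Assumption: shrink the range by 1/2**bit_width so that a value sitting
    # exactly on a power of two (such as 1.0) is allowed to saturate slightly.
    scale = 1 - 1 / 2 ** bit_width
    largest = max(abs(max_val) * scale, abs(min_val) * scale)
    if largest <= 0:
        # All-zero data: every non-sign bit can be fractional.
        return bit_width - 1
    int_bits = math.ceil(math.log2(largest))
    return (bit_width - 1) - int_bits


def quantize(value, dec_bits, bit_width=8):
    """Generic Q-format rounding with saturation to the signed integer range."""
    q = round(value * 2 ** dec_bits)
    limit = 2 ** (bit_width - 1)
    return max(-limit, min(limit - 1, q))


if __name__ == "__main__":
    # (layer, max, min) triples copied from the log above.
    samples = [
        ("input_1", 1.0, 0.0),
        ("conv2d_1", 19.733093, -25.782127),
        ("batch_normalization_1", 3.6588879, -4.0082974),
        ("dense", 9.351792, -8.989536),
    ]
    for name, hi, lo in samples:
        print(name, "dec bit", dec_bits_max_min(hi, lo))
```

With 7 fractional bits the representable range is roughly -1 to 1, which suits the normalized input. A layer whose outputs reach about ±25 keeps only 2 fractional bits, trading resolution for range.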

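After a few minutes, the output ends with:

Top 1 Accuracy on Keras 97.00%

Top 1 Accuracy on NNoM 97.20%

These lines show that training has finished and that the compiled NNoM build of the model has been evaluated on the generated test data.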
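Both percentages can be recomputed from the two confusion matrices printed in the log, since top-1 accuracy is the sum of the diagonal divided by the number of test frames. The snippet below is a small worked example. The matrices are copied from the log, and the function name `top1_accuracy` is chosen only for this illustration.

```python
# Confusion matrix printed after the Keras evaluation (rows: actual, columns: predicted).
KERAS_CM = [
    [84, 0, 0, 0, 0, 1, 0, 0, 0, 0],
    [0, 126, 0, 0, 0, 0, 0, 0, 0, 0],
    [1, 1, 110, 0, 0, 0, 0, 3, 1, 0],
    [0, 0, 0, 103, 0, 3, 0, 1, 0, 0],
    [0, 1, 0, 0, 104, 0, 1, 0, 0, 4],
    [0, 0, 0, 0, 0, 86, 1, 0, 0, 0],
    [3, 0, 0, 0, 0, 0, 84, 0, 0, 0],
    [0, 0, 0, 0, 0, 1, 0, 98, 0, 0],
    [2, 0, 1, 0, 1, 0, 0, 1, 83, 1],
    [0, 0, 0, 0, 0, 0, 0, 2, 0, 92],
]

# Confusion matrix printed by the compiled NNoM model.
NNOM_CM = [
    [84, 0, 0, 0, 0, 1, 0, 0, 0, 0],
    [0, 126, 0, 0, 0, 0, 0, 0, 0, 0],
    [2, 0, 110, 0, 0, 0, 1, 3, 0, 0],
    [0, 0, 0, 104, 0, 2, 0, 1, 0, 0],
    [0, 1, 0, 0, 105, 0, 0, 0, 0, 4],
    [0, 0, 0, 0, 0, 86, 1, 0, 0, 0],
    [3, 0, 0, 0, 0, 0, 84, 0, 0, 0],
    [0, 0, 0, 0, 0, 1, 0, 98, 0, 0],
    [2, 0, 1, 0, 1, 0, 0, 1, 83, 1],
    [0, 0, 0, 0, 0, 0, 0, 2, 0, 92],
]


def top1_accuracy(cm):
    """Fraction of samples on the diagonal, i.e. predicted class equals actual class."""
    correct = sum(cm[i][i] for i in range(len(cm)))
    total = sum(sum(row) for row in cm)
    return correct / total


if __name__ == "__main__":
    print(f"Keras: {top1_accuracy(KERAS_CM):.2%}")  # prints "Keras: 97.00%"
    print(f"NNoM:  {top1_accuracy(NNOM_CM):.2%}")   # prints "NNoM:  97.20%"
```

The quantized model differs from the Keras model on only a handful of the 1000 test frames. In this run the differences happen to favor NNoM by two frames.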

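Pay particular attention to the following files:

| File | Description |
| --- | --- |
| main.c | Model initialization, execution, and inference |
| weights.h | Model structure and parameters, produced by the training step above |
| test_data.bin | Input data set used for testing |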

IV. Porting

1. Create a new VS project

The project can be given any name; here it is called auto_test. The creation steps are omitted.

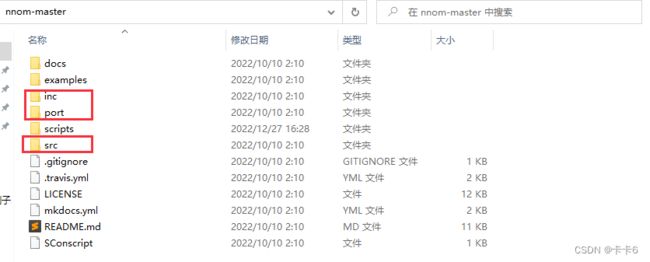

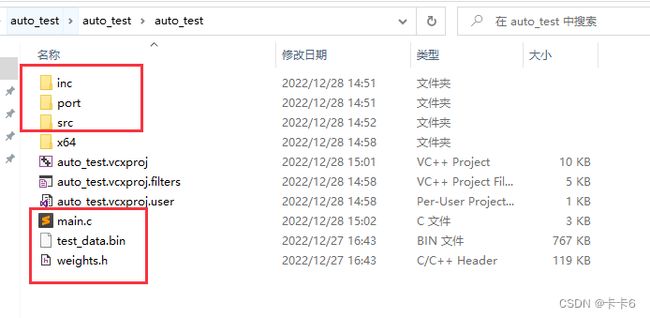

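2. Copy the relevant source files

Copy three directories from the NNoM source tree into the VS project directory: inc, port, and src (the ones used in the project configuration in step 3). This is the NNoM source tree:

Also copy the three files from the previous section into the VS project directory: main.c, weights.h, and test_data.bin.

The final VS project directory looks like this: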

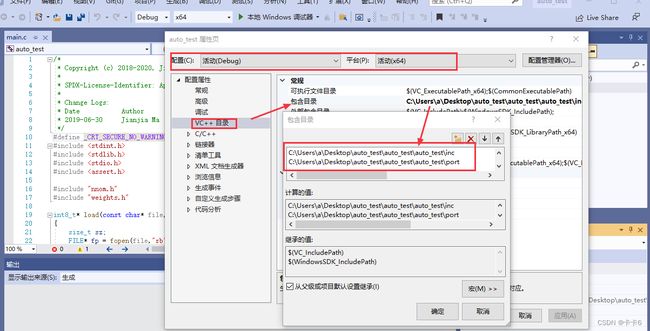
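The copy step can also be scripted. The following is a sketch under stated assumptions: the three directories are taken to be inc, port, and src (the ones referenced in the project configuration), and the function name `copy_port_files` and its parameters are hypothetical. It avoids `dirs_exist_ok`, which requires Python 3.8, because the log above shows Python 3.7.

```python
import shutil
from pathlib import Path


def copy_port_files(nnom_root, demo_dir, project_dir):
    """Copy the NNoM sources and the generated demo files into a VS project directory."""
    nnom_root, demo_dir, project_dir = Path(nnom_root), Path(demo_dir), Path(project_dir)
    project_dir.mkdir(parents=True, exist_ok=True)

    # Library sources and headers (assumed directory names: inc, port, src).
    for name in ("inc", "port", "src"):
        dest = project_dir / name
        if not dest.exists():  # copytree needs a destination that does not exist yet
            shutil.copytree(nnom_root / name, dest)

    # Files produced by running main.py in the demo directory.
    for name in ("main.c", "weights.h", "test_data.bin"):
        shutil.copy2(demo_dir / name, project_dir / name)

    return sorted(p.name for p in project_dir.iterdir())


# Example call (paths are placeholders, adjust them to your machine):
# copy_port_files(r"C:\path\to\nnom-master",
#                 r"C:\path\to\nnom-master\examples\auto_test",
#                 r"C:\path\to\auto_test")
```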

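3. Configure the project

(1) Add main.c and the entire src directory to the project.

(2) Configure the include paths

Right-click the project ---> Properties ---> VC++ Directories ---> Include Directories: add the inc and port directories.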

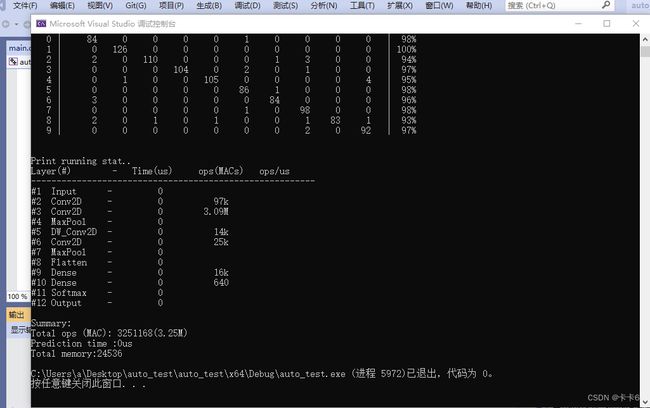
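For comparison, the same configuration (main.c plus every .c file under src, with inc and port as include directories) can be expressed as a cl command line. The sketch below only builds the argument list. `/I` and `/Fe` are standard cl options, but the function name `cl_command`, the output name, and the idea of building outside the IDE are assumptions for illustration rather than steps from this post.

```python
from pathlib import Path


def cl_command(project_dir, exe_name="auto_test.exe"):
    """Build a cl argument list mirroring the VS project settings described above."""
    project = Path(project_dir)
    # main.c plus all C sources found recursively under src.
    sources = [project / "main.c"] + sorted((project / "src").rglob("*.c"))
    cmd = ["cl", "/I", str(project / "inc"), "/I", str(project / "port")]
    cmd += [str(s) for s in sources]
    cmd.append("/Fe:" + exe_name)  # name of the executable to produce
    return cmd


# Example use from a Cmd window where cl works (not executed here):
# import subprocess
# subprocess.run(cl_command("."), check=True)
```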

4. Build and run

The output is shown below. The model runs successfully and depends only on a C environment:

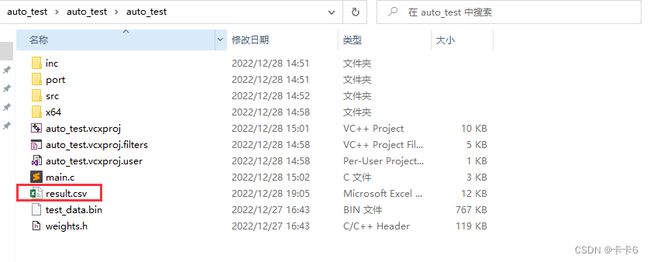

A result file is also generated in the project directory: