【Sparse-to-Dense】《Sparse-to-Dense:Depth Prediction from Sparse Depth Samples and a Single Image》

ICRA-2018

文章目录

- 1 Background and Motivation

- 2 Related Work

- 3 Advantages / Contributions

- 4 Method

- 5 Experiments

-

- 5.1 Datasets

- 5.2 RESULTS

- 6 Conclusion(own) / Future work

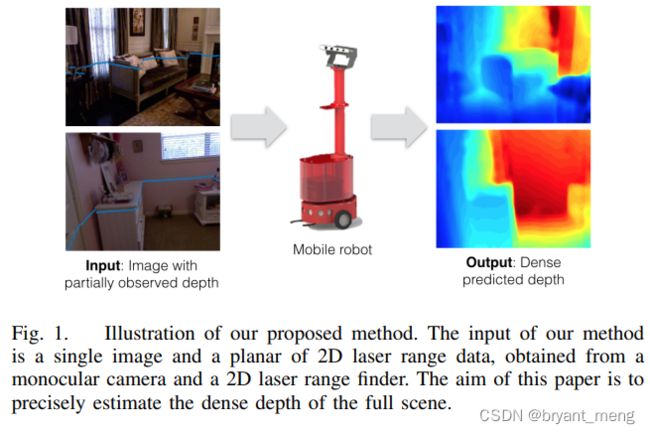

1 Background and Motivation

深度感知和深度估计在 robotics, autonomous driving, augmented reality (AR) and 3D mapping 等工程应用中至关重要!

然而现有的深度估计手段在落地时或多或少有着它的局限性:

1)3D LiDARs are cost-prohibitive

2)Structured-light-based depth sensors (e.g. Kinect) are sunlight-sensitive and power-consuming

3)stereo cameras require a large baseline and careful calibration for accurate triangulation, and usually fails at featureless regions

单目摄像头由于其体积小,成本低,节能,在消费电子产品中无处不在等特点,单目深度估计方法也成为了人们探索的兴趣点!

然而,the accuracy and reliability of such methods is still far from being practical(尽管这些年有了显著的提升)

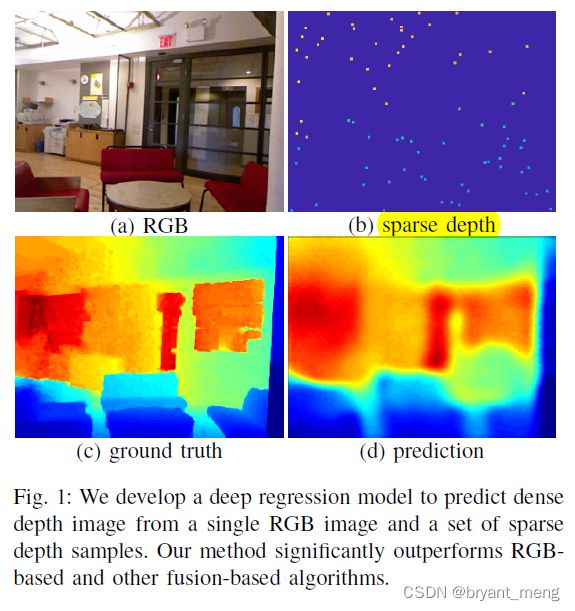

作者在 rgb 图像的基础上,配合 sparse depth measurements,来进行深度估计,a few sparse depth samples drastically improves depth reconstruction performance

2 Related Work

- RGB-based depth prediction

- hand-crafted features

- probabilistic graphical models

- Non-parametric approaches

- Semi-supervised learning

- unsupervised learning

- Depth reconstruction from sparse samples

- Sensor fusion

3 Advantages / Contributions

rgb + sparse depth 进行单目深度预测

ps:网络结构没啥创新,sparse depth 这种多模态也是借鉴别人的思想(当然,采样方式不一样)

4 Method

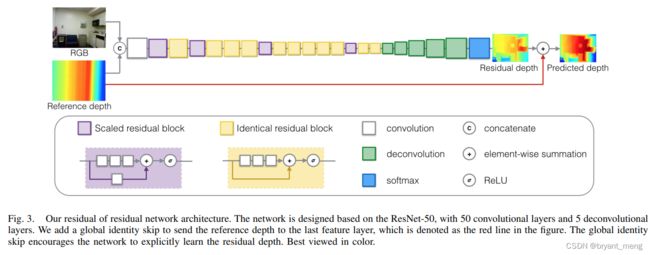

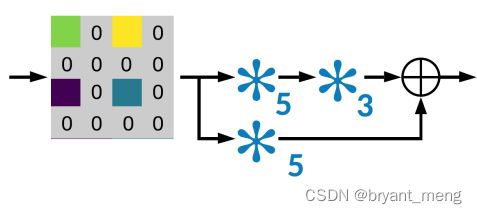

整体结构

采用的是 encoder 和 decoder 的形式

UpProj 的形式如下:

2)Depth Sampling

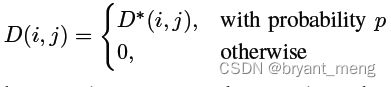

根据 Bernoulli probability 采样(eg:抛硬币,每次结果不相关), p = m n p = \frac{m}{n} p=nm

伯努利试验(Bernoulli experiment)是在同样的条件下重复地、相互独立地进行的一种随机试验,其特点是该随机试验只有两种可能结果:发生或者不发生。我们假设该项试验独立重复地进行了n次,那么就称这一系列重复独立的随机试验为n重伯努利试验,或称为伯努利概型。

D ∗ D* D∗ 完整的深度图,dense depth map

D D D sparse depth map

3)Data Augmentation

Scale / Rotation / Color Jitter / Color Normalization / Flips

scale 和 rotation 的时候采用的是 Nearest neighbor interpolation 以避免 creating spurious sparse depth points

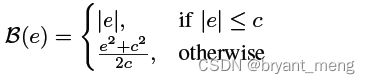

4)loss function

- l1

- l2:sensitive to outliers,over-smooth boundaries instead of sharp transitions

- berHu

berHu 综合了 l1 和 l2

作者”事实说话”采用的是 l1

5 Experiments

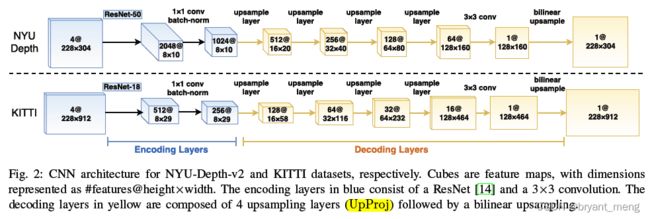

5.1 Datasets

-

NYU-Depth-v2

464 different indoor scenes,249 Train + 215 test

the small labeled test dataset with 654 images is used for evaluating the final performance

-

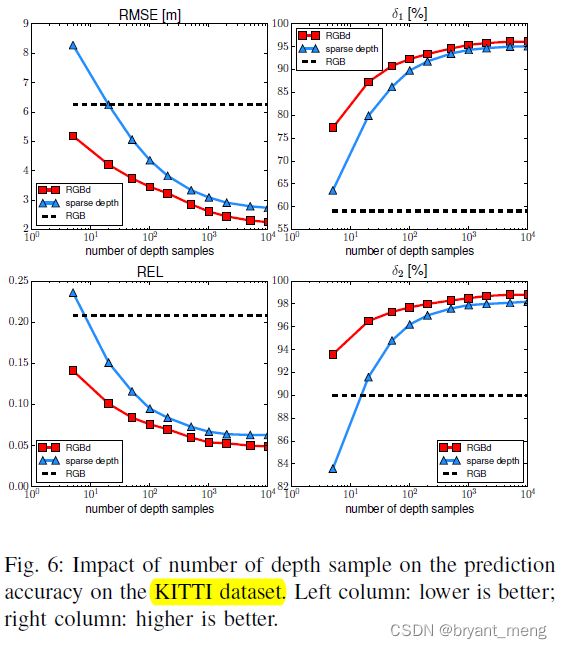

KITTI Odometry Dataset

The KITTI dataset is more challenging for depth prediction, since the maximum distance is 100 meters as opposed to only 10 meters in the NYU-Depth-v2 dataset.

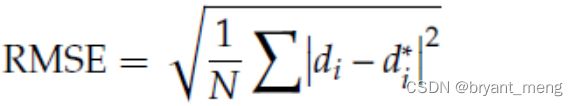

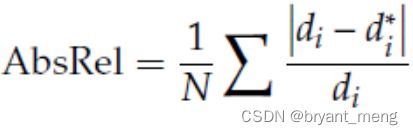

评价指标

RMSE: root mean squared error

REL: mean absolute relative error

δ i \delta_i δi:

- card:is the cardinality of a set(可简单理解为对元素个数计数)

- y ^ \hat{y} y^:prediction

- y y y:GT

更多相关评价指标参考 单目深度估计指标:SILog, SqRel, AbsRel, RMSE, RMSE(log)

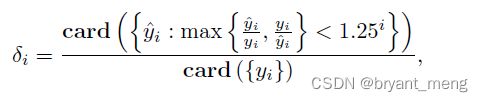

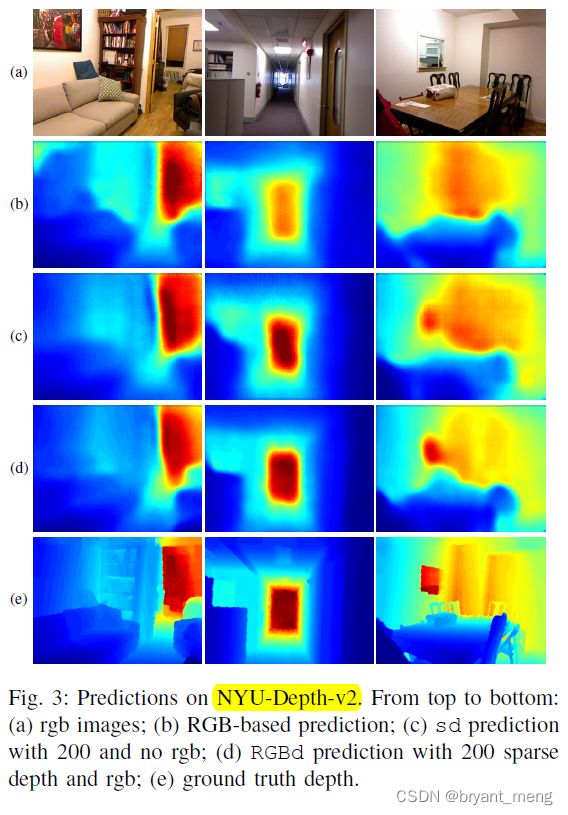

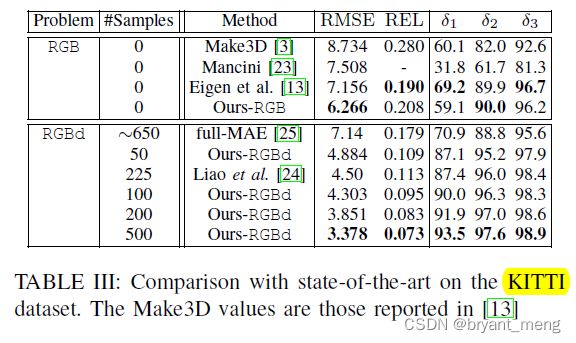

5.2 RESULTS

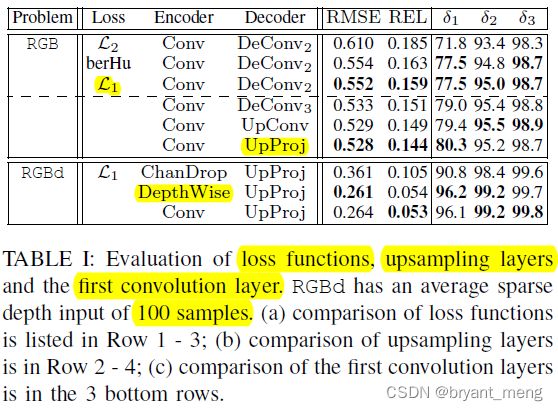

1)Architecture Evaluation

DeConv3 比 DeConv2 好,

UpProj 比 DeConv3 好(even larger receptive field of 4x4, the UpProj module outperforms the others)

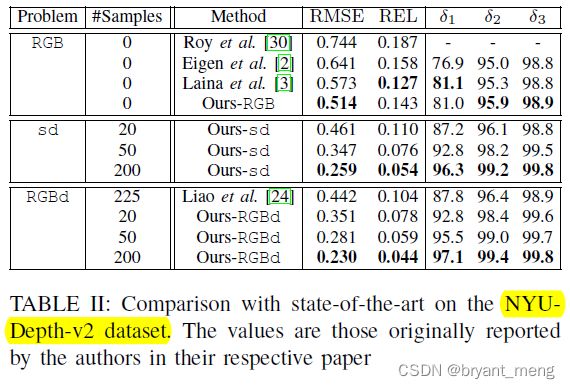

2)Comparison with the State-of-the-Art

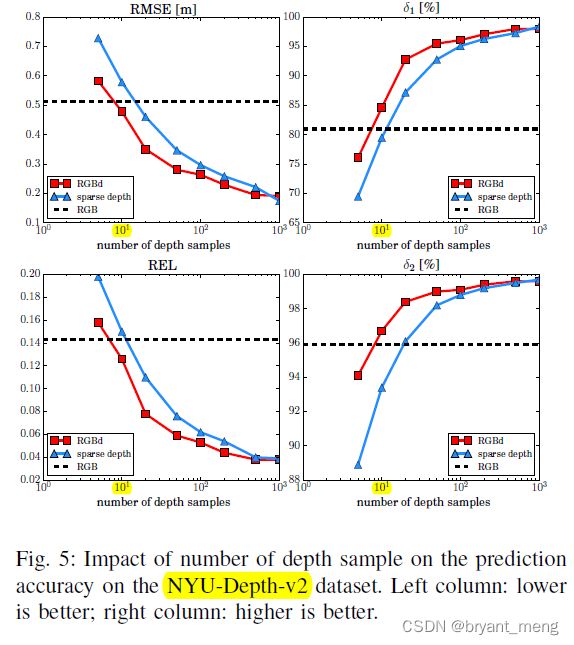

NYU-Depth-v2 Dataset

sd 是 sparse-depth 的缩写,也即输入没有 rgb

sparse 1 0 1 10^1 101 这个数量级就可以和 rgb 媲美, 1 0 2 10^2 102 飞跃,

采样越多,和 rgb 关系就不大了(performance gap between RGBd and sd shrinks as the sample size increases),哈哈哈

This observation indicates that the information extracted from the sparse sample set dominates the prediction when the sample size is sufficiently large, and in this case the color cue becomes almost irrelevant. (全采样,怎么输入我就怎么给你输出出来,别说跟 rgb 关系不大,跟神经网络关系也不大了,哈哈哈)

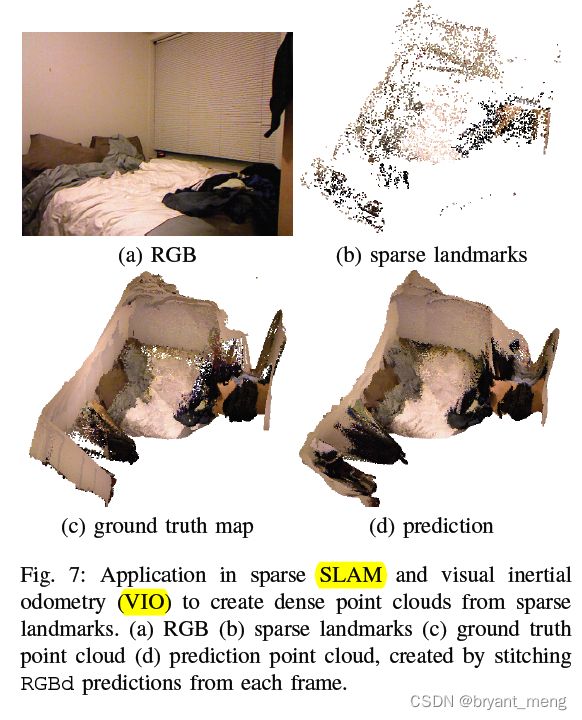

4)Application: Dense Map from Visual Odometry Features

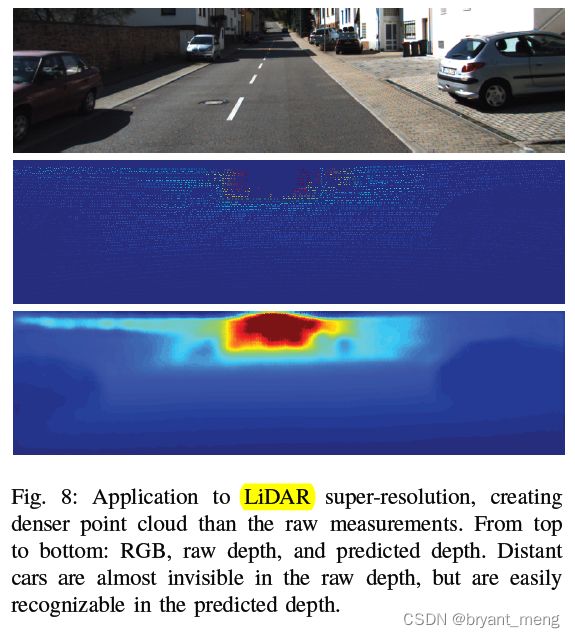

5)Application: LiDAR Super-Resolution

6 Conclusion(own) / Future work

-

presentation

https://www.bilibili.com/video/av66343637/

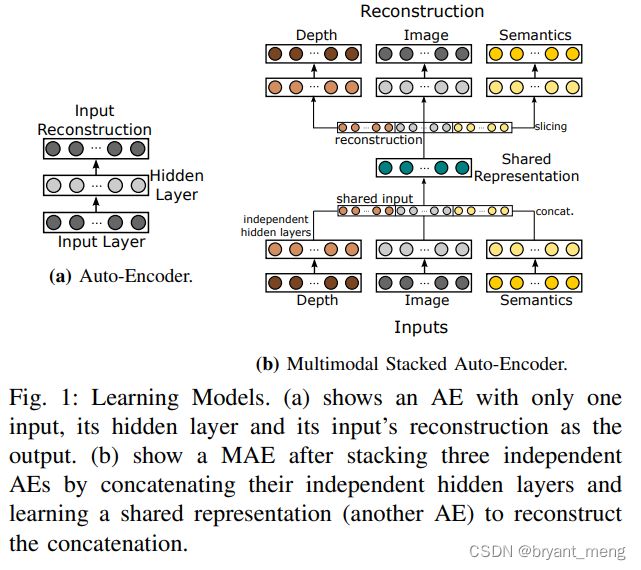

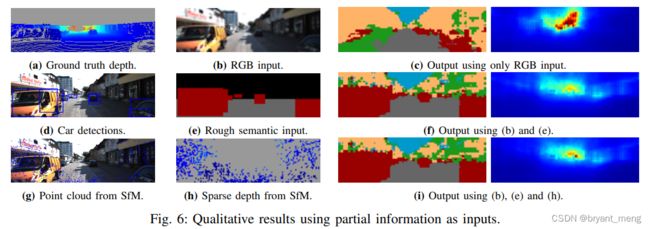

下面看看另外一些多模态的单目深度预测方法