python - Machine Learning - Predicting House Prices: House Price Advanced Regression Techniques


1. Introduction

1.1 Requirements Analysis

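Ask home buyers to describe their dream house, and they probably won't start with the height of the basement ceiling or the proximity to an east-west railroad. But the dataset of this playground competition shows that price negotiations are influenced by much more than the number of bedrooms or a white picket fence. The project provides 79 explanatory variables describing (almost) every aspect of residential homes in Ames, Iowa, and the task is to predict the final sale price of each home. For each Id in the test set, we must predict the value of the SalePrice variable. Submissions are evaluated on the root mean squared error (RMSE) between the logarithm of the predicted value and the logarithm of the observed sale price. Taking logs means that errors in predicting expensive houses and cheap houses affect the result equally.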
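A minimal sketch of the evaluation metric described above, assuming NumPy; the helper name `rmse_log` is hypothetical. It shows why taking logs makes errors count equally across price levels: predicting twice the true price costs the same for a cheap house as for an expensive one.

```python
import numpy as np


def rmse_log(y_true, y_pred):
    """Hypothetical helper: RMSE between log(predicted) and log(observed) prices."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return float(np.sqrt(np.mean((np.log(y_pred) - np.log(y_true)) ** 2)))


# Predicting double the true price costs the same for a cheap and an expensive house.
cheap = rmse_log([100_000], [200_000])
expensive = rmse_log([1_000_000], [2_000_000])
print(cheap, expensive)
```

Both values equal log(2), about 0.693, so a relative error is penalized the same way regardless of the house's price.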

1.2 Development Environment

PyCharm is a Python IDE built by JetBrains, the company behind ReSharper, the refactoring plugin for Visual Studio 2010. It provides a full set of tools that make Python development more efficient, such as debugging, syntax highlighting, project management, code navigation, smart suggestions, auto-completion, unit testing, and version control. It also offers advanced features for professional web development with the Django framework, and it supports Google App Engine and IronPython. Backed by its code-analysis engine, PyCharm is a capable tool for both professional Python developers and beginners.

1.3 Related Theory

Data cleaning:

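As the name suggests, data cleaning means "washing out" the "dirty" data. It is the final step of finding and correcting identifiable errors in a data file, including checking data consistency and handling invalid and missing values. A data warehouse holds subject-oriented data extracted from multiple business systems, including historical data, so some records are inevitably wrong and some conflict with each other. Such erroneous or conflicting records are what we call "dirty data", and we remove them according to defined rules. The task of data cleaning is to filter out the data that does not meet requirements and hand the filtered results to the responsible business department, which decides whether to discard them or to have the business unit correct them before they are extracted again. Non-conforming data falls into three main categories: incomplete data, erroneous data, and duplicate data. Unlike questionnaire review, cleaning data after it has been entered is generally done by computer rather than by hand.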
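A minimal pandas sketch of the three kinds of non-conforming data named above (duplicate, erroneous, incomplete), on a made-up table. The validity rule `SalePrice > 0` and the median imputation are illustrative assumptions, not rules taken from this project.

```python
import numpy as np
import pandas as pd

# Made-up records: a duplicated row, a missing LotArea, and an invalid (negative) price.
raw = pd.DataFrame({
    "Id": [1, 2, 2, 3, 4],
    "LotArea": [8000.0, 9600.0, 9600.0, np.nan, 11000.0],
    "SalePrice": [200000, 180000, 180000, 220000, -1],
})

clean = raw.drop_duplicates()                      # duplicate data
clean = clean[clean["SalePrice"] > 0]              # erroneous data (assumed rule)
clean = clean.assign(                              # incomplete data: impute the median
    LotArea=clean["LotArea"].fillna(clean["LotArea"].median())
)
print(clean)
```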

Feature engineering:

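Data and features determine the upper bound of what machine learning can achieve; models and algorithms only approach that bound. That is why feature engineering is so important. Its main work is processing features: data collection, data preprocessing, feature selection, and even dimensionality reduction, along with any other feature-related work.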

Modeling:

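Modeling means building a model: an abstraction of something, made in order to understand it, in the form of an unambiguous written description. The process of building a model of a system is also called modelization. Modeling is an important means of studying a system and a prerequisite for doing so. Any process that uses a model to describe the causal or mutual relationships within a system counts as modeling. Because the relationships being described differ, the methods used are also varied: one can model from the mechanism of the system by analyzing the laws that govern it, or from experimental or statistical data combined with existing knowledge and experience of the system, or combine several of these approaches.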

Advanced regression techniques:

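Regression analysis is a predictive modeling technique that studies the relationship between independent variables and a dependent variable. It is used for forecasting, time-series modeling, and finding relationships between variables; for example, regression can be used to study the relationship between speeding and the number of traffic accidents. Regression analysis has several benefits: it indicates which independent variables have a significant relationship with the dependent variable, and it indicates how strongly multiple independent variables affect the dependent variable. It also lets us compare the effects of variables measured on different scales, such as the effect of price changes versus the number of promotional campaigns. These benefits help market researchers and data analysts eliminate variables and evaluate which ones to use when building predictive models.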
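A minimal sketch of the idea on synthetic data, assuming NumPy: an ordinary least-squares fit of price on living area recovers the intercept and slope that were used to generate the data. All numbers here are invented for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
area = rng.uniform(500, 3000, size=200)                        # synthetic living area
price = 50_000 + 100 * area + rng.normal(0, 5_000, size=200)   # synthetic prices

# Ordinary least squares: solve for [intercept, slope] in price ≈ intercept + slope * area.
X = np.column_stack([np.ones_like(area), area])
coef, *_ = np.linalg.lstsq(X, price, rcond=None)
intercept, slope = coef
print(f"intercept={intercept:.0f}, slope={slope:.2f}")
```

The fitted slope reads directly as "extra price per extra unit of area", which is the kind of relationship the paragraph above describes.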

2. Design and Implementation

2.1 High-Level Design

Data import → data processing → model training → analysis and house-price prediction

2.2 Detailed Design and Implementation

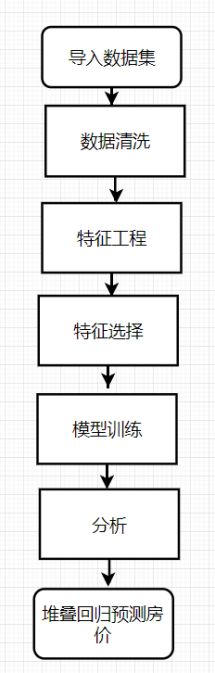

Figure 2.2-1 Overall system flowchart

Load the training and test sets into pandas DataFrames:

train = pd.read_csv('train.csv')

test = pd.read_csv('test.csv')

Check the number of samples and features:

print("The train data size before dropping Id feature is : {} ".format(train.shape))

print("The test data size before dropping Id feature is : {} ".format(test.shape))

Save the 'Id' column:

train_ID = train['Id']

test_ID = test['Id']

Now drop the 'Id' column, since it is unnecessary for the prediction process:

train.drop("Id", axis=1, inplace=True)

test.drop("Id", axis=1, inplace=True)

Check the data size again after dropping the 'Id' variable:

print("\nThe train data size after dropping Id feature is : {} ".format(train.shape))

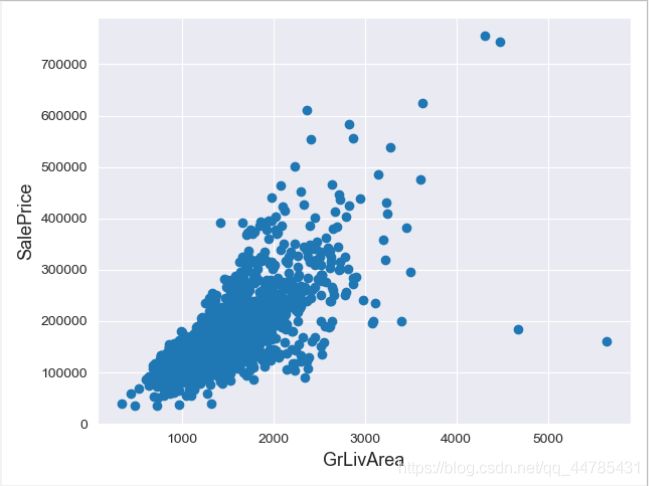

print("The test data size after dropping Id feature is : {} ".format(test.shape))

The documentation for the Ames Housing data indicates that there are outliers in the training data. Let's explore them:

fig, ax = plt.subplots()

ax.scatter(x=train['GrLivArea'], y=train['SalePrice'])

plt.ylabel('SalePrice', fontsize=13)

plt.xlabel('GrLivArea', fontsize=13)

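plt.show()

In the lower-right corner there are two houses with a very large living area (GrLivArea) but a very low price. These are clear outliers, so we can safely delete them: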
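To make the filter concrete, here is the same boolean-mask pattern on a made-up DataFrame: only rows that are both very large (GrLivArea > 4000) and cheap (SalePrice < 300000) are dropped, while a large but expensive house is kept.

```python
import pandas as pd

# Made-up houses: rows 2 and 3 are huge but cheap; row 4 is huge and expensive.
df = pd.DataFrame({
    "GrLivArea": [1500, 2500, 4500, 5600, 4200],
    "SalePrice": [180000, 260000, 160000, 185000, 550000],
})
mask = (df["GrLivArea"] > 4000) & (df["SalePrice"] < 300000)
kept = df.drop(df[mask].index)
print(kept)
```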

train = train.drop(train[(train['GrLivArea']>4000) & (train['SalePrice']<300000)].index)

#Check the graphic again

fig, ax = plt.subplots()

ax.scatter(train['GrLivArea'], train['SalePrice'])

plt.ylabel('SalePrice', fontsize=13)

plt.xlabel('GrLivArea', fontsize=13)

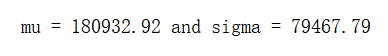

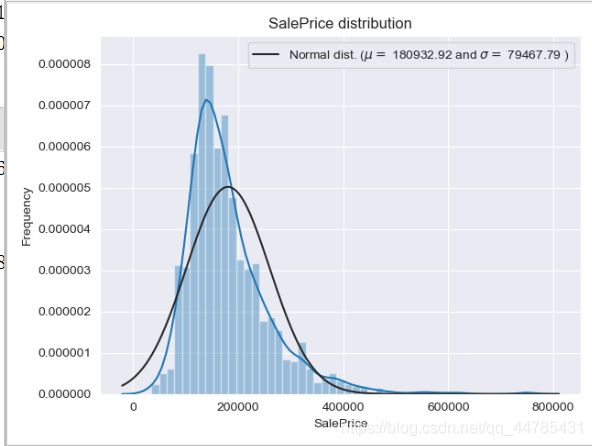

plt.show()

SalePrice is the variable we need to predict, so let's analyze it first:

sns.distplot(train['SalePrice'], fit=norm)

(mu, sigma) = norm.fit(train['SalePrice'])

print('\n mu = {:.2f} and sigma = {:.2f}\n'.format(mu, sigma))

plt.legend([r'Normal dist. ($\mu=$ {:.2f} and $\sigma=$ {:.2f} )'.format(mu, sigma)], loc='best')

plt.ylabel('Frequency')

plt.title('SalePrice distribution')

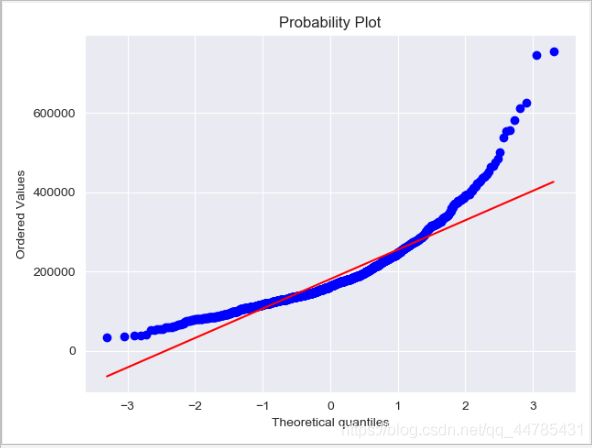

# Get also the QQ-plot

fig = plt.figure()

res = stats.probplot(train['SalePrice'], plot=plt)

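plt.show()

The target variable is right-skewed. Because (linear) models work best with normally distributed data, we need to transform this variable to make it more normally distributed. Apply a log transform to the target: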
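A small sketch of what this transform does, using synthetic log-normally distributed prices as an assumed stand-in for the real SalePrice column: `np.log1p` computes log(1 + x), which pulls in the long right tail so the skewness drops close to zero, and `np.expm1` inverts it exactly. This is also why the final predictions are passed through `np.expm1` before submission.

```python
import numpy as np
from scipy.stats import skew

rng = np.random.default_rng(42)
# Synthetic right-skewed "prices" (log-normal), standing in for SalePrice.
prices = rng.lognormal(mean=12.0, sigma=0.4, size=5_000)

log_prices = np.log1p(prices)      # log(1 + x)
restored = np.expm1(log_prices)    # exp(x) - 1, the exact inverse

print(f"skew before: {skew(prices):.2f}, after: {skew(log_prices):.2f}")
```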

train["SalePrice"] = np.log1p(train["SalePrice"])

sns.distplot(train['SalePrice'], fit=norm)

(mu, sigma) = norm.fit(train['SalePrice'])

print( '\n mu = {:.2f} and sigma = {:.2f}\n'.format(mu, sigma))

plt.legend([r'Normal dist. ($\mu=$ {:.2f} and $\sigma=$ {:.2f} )'.format(mu, sigma)], loc='best')

plt.ylabel('Frequency')

plt.title('SalePrice distribution')

#Get also the QQ-plot

fig = plt.figure()

res = stats.probplot(train['SalePrice'], plot=plt)

plt.show()

Concatenate the training and test data so that feature engineering is applied to both together:

ntrain = train.shape[0]

ntest = test.shape[0]

y_train = train.SalePrice.values

all_data = pd.concat((train, test)).reset_index(drop=True)

all_data.drop(['SalePrice'], axis=1, inplace=True)

print("all_data size is : {}".format(all_data.shape))

Analyze missing data: visualize the percentage of missing values per feature:
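The missing-ratio computation below, run on a tiny made-up frame: `isnull().sum()` counts NaNs per column, dividing by the number of rows and multiplying by 100 gives a percentage, and columns with no missing values are dropped before sorting.

```python
import numpy as np
import pandas as pd

all_data = pd.DataFrame({
    "PoolQC": [np.nan, np.nan, np.nan, "Ex"],
    "LotFrontage": [65.0, np.nan, 80.0, 70.0],
    "GrLivArea": [1500, 1600, 1700, 1800],
})
na_pct = all_data.isnull().sum() / len(all_data) * 100      # percent missing per column
na_pct = na_pct[na_pct > 0].sort_values(ascending=False)     # keep only columns with gaps
print(na_pct)
```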

all_data_na = (all_data.isnull().sum() / len(all_data)) * 100

all_data_na = all_data_na.drop(all_data_na[all_data_na == 0].index).sort_values(ascending=False)[:30]

missing_data = pd.DataFrame({'Missing Ratio' :all_data_na})

missing_data.head(20)

f, ax = plt.subplots(figsize=(15, 12))

plt.xticks(rotation=90)

sns.barplot(x=all_data_na.index, y=all_data_na)

plt.xlabel('Features', fontsize=15)

plt.ylabel('Percent of missing values', fontsize=15)

plt.title('Percent missing data by feature', fontsize=15)

Correlation map to see how the features are correlated with SalePrice:

corrmat = train.corr(numeric_only=True)

plt.subplots(figsize=(12,9))

sns.heatmap(corrmat, vmax=0.9, square=True)

Fill in the missing values based on the correlations above and the actual meaning of each feature:
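The fill rules that follow use three patterns: a "None" category where NaN means the feature is absent (for example, no pool), 0 for the matching numeric columns, and the median LotFrontage of the same Neighborhood. A sketch of all three on made-up rows:

```python
import numpy as np
import pandas as pd

df = pd.DataFrame({
    "Neighborhood": ["A", "A", "A", "B", "B"],
    "LotFrontage": [60.0, 80.0, np.nan, 100.0, np.nan],
    "PoolQC": [np.nan, "Gd", np.nan, np.nan, "Ex"],
    "GarageArea": [400.0, np.nan, 500.0, 0.0, 300.0],
})

df["PoolQC"] = df["PoolQC"].fillna("None")        # NaN means "no pool"
df["GarageArea"] = df["GarageArea"].fillna(0)     # no garage -> zero area
df["LotFrontage"] = df.groupby("Neighborhood")["LotFrontage"].transform(
    lambda x: x.fillna(x.median())                # median of the same neighborhood
)
print(df)
```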

all_data["PoolQC"] = all_data["PoolQC"].fillna("None")

# MiscFeature:

all_data["MiscFeature"] = all_data["MiscFeature"].fillna("None")

# Alley:

all_data["Alley"] = all_data["Alley"].fillna("None")

# Fence:

all_data["Fence"] = all_data["Fence"].fillna("None")

# FireplaceQu

all_data["FireplaceQu"] = all_data["FireplaceQu"].fillna("None")

# LotFrontage :

all_data["LotFrontage"] = all_data.groupby("Neighborhood")["LotFrontage"].transform(lambda x: x.fillna(x.median()))

for col in ('GarageType', 'GarageFinish', 'GarageQual', 'GarageCond'):

    all_data[col] = all_data[col].fillna('None')

# GarageYrBlt, GarageArea and GarageCars :

for col in ('GarageYrBlt', 'GarageArea', 'GarageCars'):

    all_data[col] = all_data[col].fillna(0)

# BsmtFinSF1, BsmtFinSF2, BsmtUnfSF, TotalBsmtSF, BsmtFullBath and BsmtHalfBath

for col in ('BsmtFinSF1', 'BsmtFinSF2', 'BsmtUnfSF', 'TotalBsmtSF', 'BsmtFullBath', 'BsmtHalfBath'):

    all_data[col] = all_data[col].fillna(0)

# BsmtQual, BsmtCond, BsmtExposure, BsmtFinType1 and BsmtFinType2 :

for col in ('BsmtQual', 'BsmtCond', 'BsmtExposure', 'BsmtFinType1', 'BsmtFinType2'):

    all_data[col] = all_data[col].fillna('None')

# MasVnrArea and MasVnrType :

all_data["MasVnrType"] = all_data["MasVnrType"].fillna("None")

all_data["MasVnrArea"] = all_data["MasVnrArea"].fillna(0)

# MSZoning (The general zoning classification) :

all_data['MSZoning'] = all_data['MSZoning'].fillna(all_data['MSZoning'].mode()[0])

# Utilities

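# For 'Utilities', all records are "AllPub" except for one "NoSeWa" and 2 NA. Since the only house with 'NoSeWa' is in the training set,

# this feature won't help in predictive modelling, so we can safely remove it.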

all_data = all_data.drop(['Utilities'], axis=1)

# Functional :

all_data["Functional"] = all_data["Functional"].fillna("Typ")

# Electrical

all_data['Electrical'] = all_data['Electrical'].fillna(all_data['Electrical'].mode()[0])

# KitchenQual

all_data['KitchenQual'] = all_data['KitchenQual'].fillna(all_data['KitchenQual'].mode()[0])

# Exterior1st and Exterior2nd

all_data['Exterior1st'] = all_data['Exterior1st'].fillna(all_data['Exterior1st'].mode()[0])

all_data['Exterior2nd'] = all_data['Exterior2nd'].fillna(all_data['Exterior2nd'].mode()[0])

# SaleType :

all_data['SaleType'] = all_data['SaleType'].fillna(all_data['SaleType'].mode()[0])

# MSSubClass

all_data['MSSubClass'] = all_data['MSSubClass'].fillna("None")

all_data.isnull().sum()

Convert some numerical features that are really categorical into categorical (string) features:

all_data['MSSubClass'] = all_data['MSSubClass'].apply(str)

all_data['OverallCond'] = all_data['OverallCond'].astype(str)

all_data['YrSold'] = all_data['YrSold'].astype(str)

all_data['MoSold'] = all_data['MoSold'].astype(str)

Apply LabelEncoder to some categorical features, since their order may carry information:
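A caveat worth checking on the label-encoding step: LabelEncoder assigns integer codes in sorted (alphabetical) order of the labels, not in quality order. The sketch below shows this on a made-up column of quality grades, plus an explicit ordinal mapping as one possible alternative. The mapping dict is an assumption based on the Po/Fa/TA/Gd/Ex grades in the Ames data description, with "None" standing for the filled-in missing values.

```python
from sklearn.preprocessing import LabelEncoder

quality = ["TA", "Gd", "Ex", "Fa", "Gd"]       # made-up KitchenQual-style grades
lbl = LabelEncoder().fit(quality)
codes = lbl.transform(quality)
print(list(lbl.classes_), codes.tolist())    # codes follow alphabetical order

# Hypothetical alternative: an explicit ordinal mapping from worst to best.
order = {"None": 0, "Po": 1, "Fa": 2, "TA": 3, "Gd": 4, "Ex": 5}
ordinal = [order[q] for q in quality]
print(ordinal)
```

With LabelEncoder, "Ex" (excellent) gets code 0 and "TA" (typical) gets 3, so the integers do not follow the quality scale; tree models can still split on them, but linear models see a scrambled order.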

from sklearn.preprocessing import LabelEncoder

cols = ('FireplaceQu', 'BsmtQual', 'BsmtCond', 'GarageQual', 'GarageCond',

'ExterQual', 'ExterCond', 'HeatingQC', 'PoolQC', 'KitchenQual', 'BsmtFinType1',

'BsmtFinType2', 'Functional', 'Fence', 'BsmtExposure', 'GarageFinish', 'LandSlope',

'LotShape', 'PavedDrive', 'Street', 'Alley', 'CentralAir', 'MSSubClass', 'OverallCond',

'YrSold', 'MoSold')

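for c in cols:

    lbl = LabelEncoder()

    lbl.fit(list(all_data[c].values))

    all_data[c] = lbl.transform(list(all_data[c].values))

print('Shape all_data: {}'.format(all_data.shape))

Create more features. Next we add an important feature: when actually buying a house, people consider its total floor area, but the dataset does not include it. Total area = basement area + first-floor area + second-floor area:

all_data['TotalSF'] = all_data['TotalBsmtSF'] + all_data['1stFlrSF'] + all_data['2ndFlrSF']

Skewed features: check the skewness of each numerical feature, and apply a normalizing transform to those above a threshold: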
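The Box-Cox step can be checked by hand: `scipy.special.boxcox1p(x, lam)` computes ((1 + x)^lam - 1) / lam, and reduces to log1p(x) when lam = 0. The sketch also shows, on made-up skewness values, a pandas subtlety relevant to the threshold step: indexing a DataFrame with a boolean DataFrame, as in `skewness[abs(skewness) > 0.75]`, masks values with NaN but keeps every row, so `shape[0]` still counts all features. Selecting rows needs a boolean Series such as `abs(skewness['Skew']) > 0.75`.

```python
import numpy as np
import pandas as pd
from scipy.special import boxcox1p

lam = 0.15
x = np.array([0.0, 1.0, 10.0, 1000.0])
via_scipy = boxcox1p(x, lam)
by_hand = ((1 + x) ** lam - 1) / lam               # Box-Cox of (1 + x)
print(np.allclose(via_scipy, by_hand))
print(np.allclose(boxcox1p(x, 0.0), np.log1p(x)))  # lam = 0 reduces to log1p

# Made-up skewness table illustrating DataFrame vs. Series boolean indexing.
skewness = pd.DataFrame({"Skew": [2.5, 0.3, -1.1]},
                        index=["LotArea", "YrSold", "GarageYrBlt"])
masked = skewness[abs(skewness) > 0.75]            # keeps all 3 rows, 0.3 becomes NaN
filtered = skewness[abs(skewness["Skew"]) > 0.75]  # keeps only the 2 skewed rows
print(masked.shape[0], filtered.index.tolist())
```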

numeric_feats = all_data.dtypes[all_data.dtypes != "object"].index

skewed_feats = all_data[numeric_feats].apply(lambda x: skew(x.dropna())).sort_values(ascending=False)

print("\nSkew in numerical features: \n")

skewness = pd.DataFrame({'Skew': skewed_feats})

skewness.head(10)

skewness = skewness[abs(skewness) > 0.75]

print("There are {} skewed numerical features to Box Cox transform".format(skewness.shape[0]))

from scipy.special import boxcox1p

skewed_features = skewness.index

lam = 0.15

for feat in skewed_features:

    # all_data[feat] += 1

    all_data[feat] = boxcox1p(all_data[feat], lam)

Convert the categorical features into dummy (one-hot) variables:
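A sketch of `pd.get_dummies` on a made-up frame: each category of an object column becomes its own indicator column, while numeric columns pass through unchanged. Encoding the concatenated `all_data`, rather than train and test separately, keeps the dummy columns identical in both sets. `dtype=int` is added here only to make the output easier to read; recent pandas versions return booleans by default.

```python
import pandas as pd

df = pd.DataFrame({"MSZoning": ["RL", "RM", "RL"], "LotArea": [8000, 6000, 9000]})
dummies = pd.get_dummies(df, dtype=int)   # object columns -> one indicator column per category
print(dummies)
```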

all_data = pd.get_dummies(all_data)

print(all_data.shape)

Feature engineering is complete; split the data back into the training and test sets:

train = all_data[:ntrain]

test = all_data[ntrain:]

Model training:

from sklearn.linear_model import ElasticNet, Lasso, BayesianRidge, LassoLarsIC

from sklearn.ensemble import RandomForestRegressor, GradientBoostingRegressor

from sklearn.kernel_ridge import KernelRidge

from sklearn.pipeline import make_pipeline

from sklearn.preprocessing import RobustScaler

from sklearn.base import BaseEstimator, TransformerMixin, RegressorMixin, clone

from sklearn.model_selection import KFold, cross_val_score, train_test_split

from sklearn.metrics import mean_squared_error

import xgboost as xgb

import lightgbm as lgb

n_folds = 5

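def rmsle_cv(model):

    kf = KFold(n_folds, shuffle=True, random_state=42).get_n_splits(train.values)

    rmse = np.sqrt(-cross_val_score(model, train.values, y_train, scoring="neg_mean_squared_error", cv=kf))

    return rmse

Baseline models. LASSO can be very sensitive to outliers, so we need to make it more robust; to do so, we put sklearn's RobustScaler() in front of it in a pipeline (and do the same for Elastic Net):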
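A small sketch of two details of this setup, on made-up numbers. RobustScaler centers each feature by its median and scales it by the interquartile range, so a single extreme value barely changes how the other rows are scaled. Separately, `KFold(...).get_n_splits(...)`, as used in `rmsle_cv`, returns only the integer number of folds; when an integer is passed as `cv`, `cross_val_score` builds its own unshuffled KFold for a regressor, so the `shuffle=True, random_state=42` settings are not applied. Passing the `KFold` object itself would apply them, which may shift the cross-validation scores slightly.

```python
import numpy as np
from sklearn.model_selection import KFold
from sklearn.preprocessing import RobustScaler

x = np.array([[1.0], [2.0], [3.0], [4.0], [1000.0]])   # one extreme value
scaled = RobustScaler().fit_transform(x)               # (x - median) / IQR
print(scaled.ravel())   # median 3, IQR 4 - 2 = 2

n_splits = KFold(5, shuffle=True, random_state=42).get_n_splits()
print(type(n_splits), n_splits)   # just an int: shuffle settings are lost if this is passed as cv
```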

lasso = make_pipeline(RobustScaler(), Lasso(alpha=0.0005, random_state=1))

ENet = make_pipeline(RobustScaler(), ElasticNet(alpha=0.0005, l1_ratio=.9, random_state=3))

# Kernel Ridge Regression

KRR = KernelRidge(alpha=0.6, kernel='polynomial', degree=2, coef0=2.5)

# Gradient Boosting Regression: the huber loss makes it robust to outliers

GBoost = GradientBoostingRegressor(n_estimators=3000, learning_rate=0.05,

max_depth=4, max_features='sqrt',

min_samples_leaf=15, min_samples_split=10,

loss='huber', random_state=5)

# xgb

model_xgb = xgb.XGBRegressor(colsample_bytree=0.4603, gamma=0.0468,

learning_rate=0.05, max_depth=3,

min_child_weight=1.7817, n_estimators=2200,

reg_alpha=0.4640, reg_lambda=0.8571,

subsample=0.5213, silent=1,

nthread=-1)

# lgb

model_lgb = lgb.LGBMRegressor(objective='regression', num_leaves=5,

learning_rate=0.05, n_estimators=720,

max_bin=55, bagging_fraction=0.8,

bagging_freq=5, feature_fraction=0.2319,

feature_fraction_seed=9, bagging_seed=9,

min_data_in_leaf=6, min_sum_hessian_in_leaf=11)

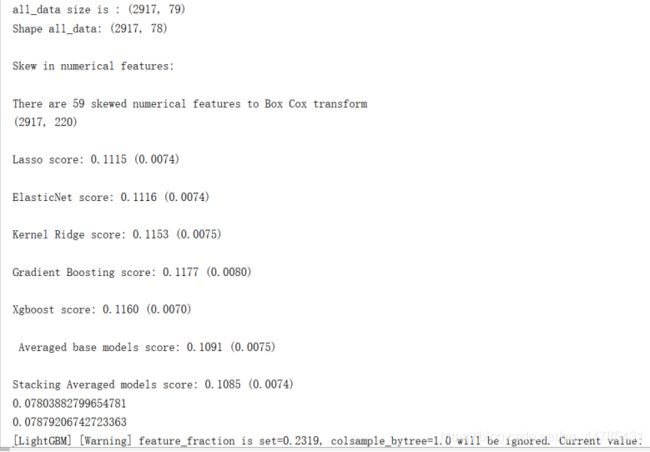

Baseline model scores:

score = rmsle_cv(lasso)

print("\nLasso score: {:.4f} ({:.4f})\n".format(score.mean(), score.std()))

# Lasso score: 0.1115 (0.0074)

score = rmsle_cv(ENet)

print("ElasticNet score: {:.4f} ({:.4f})\n".format(score.mean(), score.std()))

# ElasticNet score: 0.1116 (0.0074)

score = rmsle_cv(KRR)

print("Kernel Ridge score: {:.4f} ({:.4f})\n".format(score.mean(), score.std()))

# Kernel Ridge score: 0.1153 (0.0075)

score = rmsle_cv(GBoost)

print("Gradient Boosting score: {:.4f} ({:.4f})\n".format(score.mean(), score.std()))

# Gradient Boosting score: 0.1177 (0.0080)

score = rmsle_cv(model_xgb)

print("Xgboost score: {:.4f} ({:.4f})\n".format(score.mean(), score.std()))

# Xgboost score: 0.1151 (0.0060)

The simplest ensembling approach: average the predictions of the base models:
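For reference, scikit-learn ships an estimator with the same averaging behavior: `sklearn.ensemble.VotingRegressor` fits each estimator on the full data and returns the (optionally weighted) mean of their predictions. A minimal sketch on synthetic data, with LinearRegression and Ridge as stand-ins for the models used in this post:

```python
import numpy as np
from sklearn.ensemble import VotingRegressor
from sklearn.linear_model import LinearRegression, Ridge

rng = np.random.default_rng(0)
X = rng.normal(size=(100, 3))
y = X @ np.array([1.0, -2.0, 0.5]) + rng.normal(scale=0.1, size=100)

vote = VotingRegressor([("lr", LinearRegression()), ("ridge", Ridge(alpha=1.0))]).fit(X, y)

# The same thing by hand: fit each model and take the mean of the prediction columns.
manual = np.column_stack([
    LinearRegression().fit(X, y).predict(X),
    Ridge(alpha=1.0).fit(X, y).predict(X),
]).mean(axis=1)
print(np.allclose(vote.predict(X), manual))
```

The hand-written `AveragingModels` class does the same thing; writing it by hand mainly makes the mechanics explicit.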

class AveragingModels(BaseEstimator, RegressorMixin, TransformerMixin):

    def __init__(self, models):

        self.models = models

    def fit(self, X, y):

        self.models_ = [clone(x) for x in self.models]

        for model in self.models_:

            model.fit(X, y)

        return self

    def predict(self, X):

        predictions = np.column_stack([

            model.predict(X) for model in self.models_

        ])

        return np.mean(predictions, axis=1)

For now, we simply average four models: ENet, GBoost, KRR and lasso:

averaged_models = AveragingModels(models=(ENet, GBoost, KRR, lasso))

score = rmsle_cv(averaged_models)

print(" Averaged base models score: {:.4f} ({:.4f})\n".format(score.mean(), score.std()))

A more advanced stacking approach: use the predictions of the base models as features to train a meta-model:
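A sketch of the same idea with scikit-learn's built-ins, on synthetic data: `cross_val_predict` produces the out-of-fold predictions that serve as meta-features, and `sklearn.ensemble.StackingRegressor` wraps the whole procedure. One difference from the `StackingAveragedModels` class that follows: at prediction time StackingRegressor uses base models refit on the full training set rather than averaging the per-fold models. LinearRegression and Ridge stand in for ENet, GBoost and KRR.

```python
import numpy as np
from sklearn.ensemble import StackingRegressor
from sklearn.linear_model import Lasso, LinearRegression, Ridge
from sklearn.model_selection import KFold, cross_val_predict

rng = np.random.default_rng(0)
X = rng.normal(size=(200, 3))
y = X @ np.array([1.0, -2.0, 0.5]) + rng.normal(scale=0.1, size=200)

base = [("lr", LinearRegression()), ("ridge", Ridge(alpha=1.0))]
kf = KFold(n_splits=5, shuffle=True, random_state=156)

# Out-of-fold predictions: each row is predicted by a model that never saw it.
oof = np.column_stack([cross_val_predict(est, X, y, cv=kf) for _, est in base])
meta = Lasso(alpha=0.0005).fit(oof, y)          # second-layer model on the meta-features

# scikit-learn's built-in version of the whole procedure.
stack = StackingRegressor(estimators=base, final_estimator=Lasso(alpha=0.0005), cv=kf).fit(X, y)
rmse = np.sqrt(np.mean((stack.predict(X) - y) ** 2))
print(oof.shape, round(float(rmse), 3))
```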

class StackingAveragedModels(BaseEstimator, RegressorMixin, TransformerMixin):

    def __init__(self, base_models, meta_model, n_folds=5):

        self.base_models = base_models

        self.meta_model = meta_model

        self.n_folds = n_folds

    # We again fit the data on clones of the original models

    def fit(self, X, y):

        self.base_models_ = [list() for x in self.base_models]

        self.meta_model_ = clone(self.meta_model)

        kfold = KFold(n_splits=self.n_folds, shuffle=True, random_state=156)

        # Train cloned base models then create out-of-fold predictions

        # that are needed to train the cloned meta-model

        out_of_fold_predictions = np.zeros((X.shape[0], len(self.base_models)))

        for i, model in enumerate(self.base_models):

            for train_index, holdout_index in kfold.split(X, y):

                instance = clone(model)

                self.base_models_[i].append(instance)

                instance.fit(X[train_index], y[train_index])

                y_pred = instance.predict(X[holdout_index])

                out_of_fold_predictions[holdout_index, i] = y_pred

        # Now train the cloned meta-model using the out-of-fold predictions as new feature

        self.meta_model_.fit(out_of_fold_predictions, y)

        return self

    # Do the predictions of all base models on the test data and use the averaged predictions as

    # meta-features for the final prediction which is done by the meta-model

    def predict(self, X):

        meta_features = np.column_stack([

            np.column_stack([model.predict(X) for model in base_models]).mean(axis=1)

            for base_models in self.base_models_])

        return self.meta_model_.predict(meta_features)

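stacked_averaged_models = StackingAveragedModels(base_models=(ENet, GBoost, KRR), meta_model=lasso)

To keep the results comparable, we use the same models as before: ENet, GBoost and KRR as base models, with lasso as the second-layer (meta) model.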

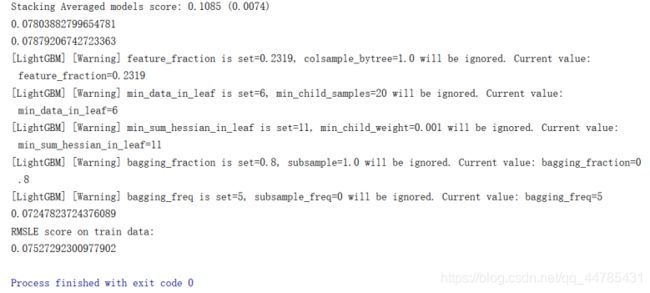

score = rmsle_cv(stacked_averaged_models)

print("Stacking Averaged models score: {:.4f} ({:.4f})".format(score.mean(), score.std()))

Ensembling StackedRegressor, XGBoost and LightGBM. First, define an RMSLE evaluation function:

def rmsle(y, y_pred):

    return np.sqrt(mean_squared_error(y, y_pred))

Final training and prediction, starting with the stacked regressor:

stacked_averaged_models.fit(train.values, y_train)

stacked_train_pred = stacked_averaged_models.predict(train.values)

stacked_pred = np.expm1(stacked_averaged_models.predict(test.values))

print(rmsle(y_train, stacked_train_pred))

XGBoost

model_xgb.fit(train, y_train)

xgb_train_pred = model_xgb.predict(train)

xgb_pred = np.expm1(model_xgb.predict(test))

print(rmsle(y_train, xgb_train_pred))

LightGBM

model_lgb.fit(train, y_train)

lgb_train_pred = model_lgb.predict(train)

lgb_pred = np.expm1(model_lgb.predict(test.values))

print(rmsle(y_train, lgb_train_pred))

RMSLE of the weighted average on the full training data:
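The final blend is a weighted average with weights 0.70, 0.15 and 0.15, which sum to 1, applied to predictions already converted back to dollars with `np.expm1`. A minimal sketch with a hypothetical helper `blend` and made-up predictions. Note also that the "RMSLE score on train data" is measured on the same data the models were fit on, so it is more optimistic than the cross-validated scores reported earlier.

```python
import numpy as np


def blend(preds, weights):
    """Hypothetical helper: weighted average of several prediction arrays."""
    weights = np.asarray(weights, dtype=float)
    if not np.isclose(weights.sum(), 1.0):
        raise ValueError("weights should sum to 1")
    return np.column_stack(preds) @ weights


# Made-up predictions (already back on the dollar scale via np.expm1).
stacked_pred = np.array([200_000.0, 150_000.0])
xgb_pred = np.array([210_000.0, 140_000.0])
lgb_pred = np.array([190_000.0, 160_000.0])

ensemble = blend([stacked_pred, xgb_pred, lgb_pred], [0.70, 0.15, 0.15])
print(ensemble)
```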

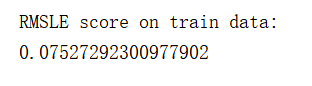

print('RMSLE score on train data:')

print(rmsle(y_train, stacked_train_pred * 0.70 +

xgb_train_pred * 0.15 + lgb_train_pred * 0.15))

Ensemble prediction:

ensemble = stacked_pred * 0.70 + xgb_pred * 0.15 + lgb_pred * 0.15

Submission:

sub = pd.DataFrame()

sub['Id'] = test_ID

sub['SalePrice'] = ensemble

sub.to_csv('submission.csv', index=False)

3. System Testing

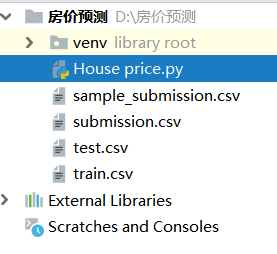

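Training set, test set, sample submission and final submission

Figure 3-1 Provided source data

Checking the number of samples and features

Figure 3-2 Checking the number of samples and features

Outliers in the training data

Figure 3-3 Testing the training data

Removing the outliers from the training data

Figure 3-4 Removing the outliers from the training data

mu and sigma

Figure 3-5 mu and sigma

SalePrice vs. frequency

Theoretical quantiles vs. ordered values (QQ-plot)

Figure 3-7 Theoretical quantiles vs. ordered values (QQ-plot)

Log transform of the target variable to make it normally distributed

Figure 3-8 Log transform of the target variable to make it normally distributed

Percentage of missing data by feature

Figure 3-9 Percentage of missing data by feature

Data correlations

Figure 3-10 Data correlations

Final output data

Figure 3-11 Final output data

Root mean squared error (RMSE) between the log of the predicted values and the log of the observed sale prices

Figure 3-12 Root mean squared error (RMSE) between the log of the predicted values and the log of the observed sale prices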

4. Summary

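Features of the system: it shows in some detail how the predicted price relates to the relevant features. When I started building this machine-learning house-price prediction system (House Price Advanced Regression Techniques), I had never worked on a similar design, so I had no clear idea of what the system should look like, and I did not know how to write the code for it. I consulted many references and examples found online, and through study and imitation I gained a rough understanding of the relevant machine learning frameworks: the scikit-learn framework and the TensorFlow deep learning framework. In this project I used stacking: I first built four baseline models and made predictions with each of them. The first, simple form of stacking averages their predictions; the second uses the predictions of the trained baseline models as features for a further model. I was also not very familiar with the relevant syntax, so I learned as I designed the code and the plots, and the system still has many shortcomings. After studying many examples found online I was able to do most of the design myself. The system has the basic functionality, but it is not yet complete. I will keep studying this area, gradually improve the system's functionality and design, and try to apply what I have learned to solve practical problems.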


Notes

Source code: House price.py

Training set: train.csv

Test set: test.csv

Sample submission: sample_submission.csv

Final submission: submission.csv

Source code of House price.py:

import numpy as np # linear algebra

import pandas as pd # data processing, CSV file I/O (e.g. pd.read_csv)

import matplotlib.pyplot as plt # Matlab-style plotting

import seaborn as sns

color = sns.color_palette()

sns.set_style('darkgrid')

import warnings

def ignore_warn(*args, **kwargs):

    pass

warnings.warn = ignore_warn # ignore annoying warning (from sklearn and seaborn)

from scipy import stats

from scipy.stats import norm, skew # for some statistics

pd.set_option('display.float_format', lambda x: '{:.3f}'.format(x)) # Limiting floats output to 3 decimal points

train = pd.read_csv('train.csv')

test = pd.read_csv('test.csv')

print("The train data size before dropping Id feature is : {} ".format(train.shape))

print("The test data size before dropping Id feature is : {} ".format(test.shape))

# Save the 'Id' column

train_ID = train['Id']

test_ID = test['Id']

# Now drop the 'Id' column since it's unnecessary for the prediction process.

train.drop("Id", axis=1, inplace=True)

test.drop("Id", axis=1, inplace=True)

# check again the data size after dropping the 'Id' variable

print("\nThe train data size after dropping Id feature is : {} ".format(train.shape))

print("The test data size after dropping Id feature is : {} ".format(test.shape))

# Outliers: plot GrLivArea against SalePrice

fig, ax = plt.subplots()

ax.scatter(x=train['GrLivArea'], y=train['SalePrice'])

plt.ylabel('SalePrice', fontsize=13)

plt.xlabel('GrLivArea', fontsize=13)

plt.show()

# Delete the two outliers in the lower-right corner

# Identify outliers by plotting each feature against the target (SalePrice), then remove them.

train = train.drop(train[(train['GrLivArea'] > 4000) & (train['SalePrice'] < 300000)].index)

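# Analyze the target

# Target processing: analyze the distribution of y; linear models work best when the target is normally distributed. Use probplot to draw a normal probability plot.

# The normal probability plot shows a right-skewed distribution (the bulk of the data sits to the left with a long right tail) and large skewness, so we log-transform the target to restore normality.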

sns.distplot(train['SalePrice'], fit=norm)

(mu, sigma) = norm.fit(train['SalePrice'])

print('\n mu = {:.2f} and sigma = {:.2f}\n'.format(mu, sigma))

plt.legend([r'Normal dist. ($\mu=$ {:.2f} and $\sigma=$ {:.2f} )'.format(mu, sigma)], loc='best')

plt.ylabel('Frequency')

plt.title('SalePrice distribution')

# Get also the QQ-plot

fig = plt.figure()

res = stats.probplot(train['SalePrice'], plot=plt)

plt.show()

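# The target variable is right-skewed. Because (linear) models work best with normally distributed data, we transform this variable to make it more normally distributed.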

train["SalePrice"] = np.log1p(train["SalePrice"])

# Concatenate the training and test data for joint feature engineering

ntrain = train.shape[0]

ntest = test.shape[0]

y_train = train.SalePrice.values

all_data = pd.concat((train, test)).reset_index(drop=True)

all_data.drop(['SalePrice'], axis=1, inplace=True)

print("all_data size is : {}".format(all_data.shape))

# Analyze missing data: visualize missing values

all_data_na = (all_data.isnull().sum() / len(all_data)) * 100

all_data_na = all_data_na.drop(all_data_na[all_data_na == 0].index).sort_values(ascending=False)[:30]

missing_data = pd.DataFrame({'Missing Ratio': all_data_na})

f, ax = plt.subplots(figsize=(15, 12))

plt.xticks(rotation=90)

sns.barplot(x=all_data_na.index, y=all_data_na)

plt.xlabel('Features', fontsize=15)

plt.ylabel('Percent of missing values', fontsize=15)

plt.title('Percent missing data by feature', fontsize=15)

# PoolQC: fill missing values based on the correlations above and the actual meaning of each feature

all_data["PoolQC"] = all_data["PoolQC"].fillna("None")

# MiscFeature:

all_data["MiscFeature"] = all_data["MiscFeature"].fillna("None")

# Alley:

all_data["Alley"] = all_data["Alley"].fillna("None")

# Fence:

all_data["Fence"] = all_data["Fence"].fillna("None")

# FireplaceQu

all_data["FireplaceQu"] = all_data["FireplaceQu"].fillna("None")

# LotFrontage :

all_data["LotFrontage"] = all_data.groupby("Neighborhood")["LotFrontage"].transform(lambda x: x.fillna(x.median()))

# GarageType, GarageFinish, GarageQual and GarageCond :

for col in ('GarageType', 'GarageFinish', 'GarageQual', 'GarageCond'):

    all_data[col] = all_data[col].fillna('None')

# GarageYrBlt, GarageArea and GarageCars :

for col in ('GarageYrBlt', 'GarageArea', 'GarageCars'):

    all_data[col] = all_data[col].fillna(0)

# BsmtFinSF1, BsmtFinSF2, BsmtUnfSF, TotalBsmtSF, BsmtFullBath and BsmtHalfBath

for col in ('BsmtFinSF1', 'BsmtFinSF2', 'BsmtUnfSF', 'TotalBsmtSF', 'BsmtFullBath', 'BsmtHalfBath'):

    all_data[col] = all_data[col].fillna(0)

# BsmtQual, BsmtCond, BsmtExposure, BsmtFinType1 and BsmtFinType2 :

for col in ('BsmtQual', 'BsmtCond', 'BsmtExposure', 'BsmtFinType1', 'BsmtFinType2'):

    all_data[col] = all_data[col].fillna('None')

# MasVnrArea and MasVnrType :

all_data["MasVnrType"] = all_data["MasVnrType"].fillna("None")

all_data["MasVnrArea"] = all_data["MasVnrArea"].fillna(0)

# MSZoning (The general zoning classification) :

all_data['MSZoning'] = all_data['MSZoning'].fillna(all_data['MSZoning'].mode()[0])

# Utilities

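# For 'Utilities', all records are "AllPub" except for one "NoSeWa" and 2 NA. Since the only house with 'NoSeWa' is in the training set,

# this feature won't help in predictive modelling, so we can safely remove it.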

all_data = all_data.drop(['Utilities'], axis=1)

# Functional :

all_data["Functional"] = all_data["Functional"].fillna("Typ")

# Electrical

all_data['Electrical'] = all_data['Electrical'].fillna(all_data['Electrical'].mode()[0])

# KitchenQual

all_data['KitchenQual'] = all_data['KitchenQual'].fillna(all_data['KitchenQual'].mode()[0])

# Exterior1st and Exterior2nd

all_data['Exterior1st'] = all_data['Exterior1st'].fillna(all_data['Exterior1st'].mode()[0])

all_data['Exterior2nd'] = all_data['Exterior2nd'].fillna(all_data['Exterior2nd'].mode()[0])

# SaleType :

all_data['SaleType'] = all_data['SaleType'].fillna(all_data['SaleType'].mode()[0])

# MSSubClass

all_data['MSSubClass'] = all_data['MSSubClass'].fillna("None")

all_data.isnull().sum()

# Convert some numerical features that are really categorical into categorical (string) features

all_data['MSSubClass'] = all_data['MSSubClass'].apply(str)

all_data['OverallCond'] = all_data['OverallCond'].astype(str)

all_data['YrSold'] = all_data['YrSold'].astype(str)

all_data['MoSold'] = all_data['MoSold'].astype(str)

# Label-encode some categorical features, since their order may carry information

from sklearn.preprocessing import LabelEncoder

cols = ('FireplaceQu', 'BsmtQual', 'BsmtCond', 'GarageQual', 'GarageCond',

'ExterQual', 'ExterCond', 'HeatingQC', 'PoolQC', 'KitchenQual', 'BsmtFinType1',

'BsmtFinType2', 'Functional', 'Fence', 'BsmtExposure', 'GarageFinish', 'LandSlope',

'LotShape', 'PavedDrive', 'Street', 'Alley', 'CentralAir', 'MSSubClass', 'OverallCond',

'YrSold', 'MoSold')

for c in cols:

    lbl = LabelEncoder()

    lbl.fit(list(all_data[c].values))

    all_data[c] = lbl.transform(list(all_data[c].values))

print('Shape all_data: {}'.format(all_data.shape))

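# Create more features: when actually buying a house, people consider its total floor area, but the dataset does not include it. Total area = basement area + first-floor area + second-floor area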

all_data['TotalSF'] = all_data['TotalBsmtSF'] + all_data['1stFlrSF'] + all_data['2ndFlrSF']

# Skewed features: check the skewness of each numerical feature and apply a normalizing transform to those above a threshold

numeric_feats = all_data.dtypes[all_data.dtypes != "object"].index

skewed_feats = all_data[numeric_feats].apply(lambda x: skew(x.dropna())).sort_values(ascending=False)

print("\nSkew in numerical features: \n")

skewness = pd.DataFrame({'Skew': skewed_feats})

skewness.head(10)

skewness = skewness[abs(skewness) > 0.75]

print("There are {} skewed numerical features to Box Cox transform".format(skewness.shape[0]))

from scipy.special import boxcox1p

skewed_features = skewness.index

lam = 0.15

for feat in skewed_features:

    # all_data[feat] += 1

    all_data[feat] = boxcox1p(all_data[feat], lam)

# Convert the categorical features into dummy (one-hot) variables

all_data = pd.get_dummies(all_data)

print(all_data.shape)

# Feature engineering is complete; split the data back into the training and test sets

train = all_data[:ntrain]

test = all_data[ntrain:]

# Model training

from sklearn.linear_model import ElasticNet, Lasso, BayesianRidge, LassoLarsIC

from sklearn.ensemble import RandomForestRegressor, GradientBoostingRegressor

from sklearn.kernel_ridge import KernelRidge

from sklearn.pipeline import make_pipeline

from sklearn.preprocessing import RobustScaler

from sklearn.base import BaseEstimator, TransformerMixin, RegressorMixin, clone

from sklearn.model_selection import KFold, cross_val_score, train_test_split

from sklearn.metrics import mean_squared_error

import xgboost as xgb

import lightgbm as lgb

n_folds = 5

def rmsle_cv(model):

    kf = KFold(n_folds, shuffle=True, random_state=42).get_n_splits(train.values)

    rmse = np.sqrt(-cross_val_score(model, train.values, y_train, scoring="neg_mean_squared_error", cv=kf))

    return (rmse)

# Baseline models

# LASSO Regression: this model may be very sensitive to outliers, so we make it more robust by adding sklearn's RobustScaler() to the pipeline

lasso = make_pipeline(RobustScaler(), Lasso(alpha=0.0005, random_state=1))

# Elastic Net Regression: same as above

ENet = make_pipeline(RobustScaler(), ElasticNet(alpha=0.0005, l1_ratio=.9, random_state=3))

# Kernel Ridge Regression

KRR = KernelRidge(alpha=0.6, kernel='polynomial', degree=2, coef0=2.5)

# Gradient Boosting Regression: the huber loss makes it robust to outliers

GBoost = GradientBoostingRegressor(n_estimators=3000, learning_rate=0.05,

max_depth=4, max_features='sqrt',

min_samples_leaf=15, min_samples_split=10,

loss='huber', random_state=5)

# xgb

model_xgb = xgb.XGBRegressor(colsample_bytree=0.4603, gamma=0.0468,

learning_rate=0.05, max_depth=3,

min_child_weight=1.7817, n_estimators=2200,

reg_alpha=0.4640, reg_lambda=0.8571,

subsample=0.5213, silent=1,

nthread=-1)

# lgb

model_lgb = lgb.LGBMRegressor(objective='regression', num_leaves=5,

learning_rate=0.05, n_estimators=720,

max_bin=55, bagging_fraction=0.8,

bagging_freq=5, feature_fraction=0.2319,

feature_fraction_seed=9, bagging_seed=9,

min_data_in_leaf=6, min_sum_hessian_in_leaf=11)

# Baseline model scores

score = rmsle_cv(lasso)

print("\nLasso score: {:.4f} ({:.4f})\n".format(score.mean(), score.std()))

# Lasso score: 0.1115 (0.0074)

score = rmsle_cv(ENet)

print("ElasticNet score: {:.4f} ({:.4f})\n".format(score.mean(), score.std()))

# ElasticNet score: 0.1116 (0.0074)

score = rmsle_cv(KRR)

print("Kernel Ridge score: {:.4f} ({:.4f})\n".format(score.mean(), score.std()))

# Kernel Ridge score: 0.1153 (0.0075)

score = rmsle_cv(GBoost)

print("Gradient Boosting score: {:.4f} ({:.4f})\n".format(score.mean(), score.std()))

# Gradient Boosting score: 0.1177 (0.0080)

score = rmsle_cv(model_xgb)

print("Xgboost score: {:.4f} ({:.4f})\n".format(score.mean(), score.std()))

# Xgboost score: 0.1151 (0.0060)

# stacking model

# The simplest ensembling approach: averaging the base models' predictions

class AveragingModels(BaseEstimator, RegressorMixin, TransformerMixin):

    def __init__(self, models):

        self.models = models

    def fit(self, X, y):

        self.models_ = [clone(x) for x in self.models]

        for model in self.models_:

            model.fit(X, y)

        return self

    def predict(self, X):

        predictions = np.column_stack([

            model.predict(X) for model in self.models_

        ])

        return np.mean(predictions, axis=1)

# For now, we simply average the following four models:

# ENet, GBoost, KRR and lasso

averaged_models = AveragingModels(models=(ENet, GBoost, KRR, lasso))

score = rmsle_cv(averaged_models)

print(" Averaged base models score: {:.4f} ({:.4f})\n".format(score.mean(), score.std()))

# Averaged base models score: 0.1091 (0.0075)

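# Even the simplest stacking approach improves the score, which encourages us to explore further.

# Advanced stacking: add a meta-model.

# Use the predictions of the base models as features to train a meta-model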

# Stacking averaged Models Class

class StackingAveragedModels(BaseEstimator, RegressorMixin, TransformerMixin):

    def __init__(self, base_models, meta_model, n_folds=5):

        self.base_models = base_models

        self.meta_model = meta_model

        self.n_folds = n_folds

    # We again fit the data on clones of the original models

    def fit(self, X, y):

        self.base_models_ = [list() for x in self.base_models]

        self.meta_model_ = clone(self.meta_model)

        kfold = KFold(n_splits=self.n_folds, shuffle=True, random_state=156)

        # Train cloned base models then create out-of-fold predictions

        # that are needed to train the cloned meta-model

        out_of_fold_predictions = np.zeros((X.shape[0], len(self.base_models)))

        for i, model in enumerate(self.base_models):

            for train_index, holdout_index in kfold.split(X, y):

                instance = clone(model)

                self.base_models_[i].append(instance)

                instance.fit(X[train_index], y[train_index])

                y_pred = instance.predict(X[holdout_index])

                out_of_fold_predictions[holdout_index, i] = y_pred

        # Now train the cloned meta-model using the out-of-fold predictions as new feature

        self.meta_model_.fit(out_of_fold_predictions, y)

        return self

    # Do the predictions of all base models on the test data and use the averaged predictions as

    # meta-features for the final prediction which is done by the meta-model

    def predict(self, X):

        meta_features = np.column_stack([

            np.column_stack([model.predict(X) for model in base_models]).mean(axis=1)

            for base_models in self.base_models_])

        return self.meta_model_.predict(meta_features)

stacked_averaged_models = StackingAveragedModels(base_models=(ENet, GBoost, KRR),

meta_model=lasso)

# To keep the results comparable, we use the same models as before, with lasso as the second-layer (meta) model

score = rmsle_cv(stacked_averaged_models)

print("Stacking Averaged models score: {:.4f} ({:.4f})".format(score.mean(), score.std()))

# Stacking Averaged models score: 0.1085 (0.0074)

# Ensembling StackedRegressor, XGBoost and LightGBM

def rmsle(y, y_pred):

return np.sqrt(mean_squared_error(y, y_pred))

# StackedRegressor:

stacked_averaged_models.fit(train.values, y_train)

stacked_train_pred = stacked_averaged_models.predict(train.values)

stacked_pred = np.expm1(stacked_averaged_models.predict(test.values))

print(rmsle(y_train, stacked_train_pred))

# 0.0781571937916

# XGBoost:

model_xgb.fit(train, y_train)

xgb_train_pred = model_xgb.predict(train)

xgb_pred = np.expm1(model_xgb.predict(test))

print(rmsle(y_train, xgb_train_pred))

# 0.0785366834315

# LightGBM:

model_lgb.fit(train, y_train)

lgb_train_pred = model_lgb.predict(train)

lgb_pred = np.expm1(model_lgb.predict(test.values))

print(rmsle(y_train, lgb_train_pred))

# 0.0734374313099

print('RMSLE score on train data:')

print(rmsle(y_train, stacked_train_pred * 0.70 +

xgb_train_pred * 0.15 + lgb_train_pred * 0.15))

ensemble = stacked_pred * 0.70 + xgb_pred * 0.15 + lgb_pred * 0.15

sub = pd.DataFrame()

sub['Id'] = test_ID

sub['SalePrice'] = ensemble

sub.to_csv('submission.csv', index=False)


I will upload all the files separately; anyone who needs them is welcome to get in touch.