第四天:paddlehub的应用

**

第四天:paddlehub的应用

**

深度学习的难点,通过大数据和小样本的局限,建立模型,通过大模型和模型设计的门槛设计损失函数,通过大算力和计算资源限制来进行参数学习

总结:先导入paddlehub包第二布输入对应模型代码,第三步找到相应的路径图片。

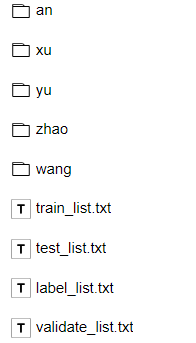

训练集:训练模型,量最多

测试集:模型未见过的数据进行测试

验证集:类似测试机,训练过程中输出的准确率。

总占比为8:1:1

模型规范化,生成一个数据读取器,data_reader

上述图片的代码实现

作业:

1导包

#CPU环境启动请务必执行该指令

%set_env CPU_NUM=1

#安装paddlehub

!pip install paddlehub==1.6.0 -i https://pypi.tuna.tsinghua.edu.cn/simple

2.加载训练模型

module = hub.Module(name="mobilenet_v3_large_imagenet_ssld")

from paddlehub.dataset.base_cv_dataset import BaseCVDataset

class DemoDataset(BaseCVDataset):

def __init__(self):

# 数据集存放位置

self.dataset_dir = "data"

super(DemoDataset, self).__init__(

base_path=self.dataset_dir,

train_list_file="train_list.txt",

validate_list_file="validate_list.txt",

test_list_file="test_list.txt",

label_list_file="label_list.txt",

)

dataset = DemoDataset()

4.格式规范化,生成数据读取器

data_reader = hub.reader.ImageClassificationReader(

image_width=module.get_expected_image_width(),

image_height=module.get_expected_image_height(),

images_mean=module.get_pretrained_images_mean(),

images_std=module.get_pretrained_images_std(),

dataset=dataset)

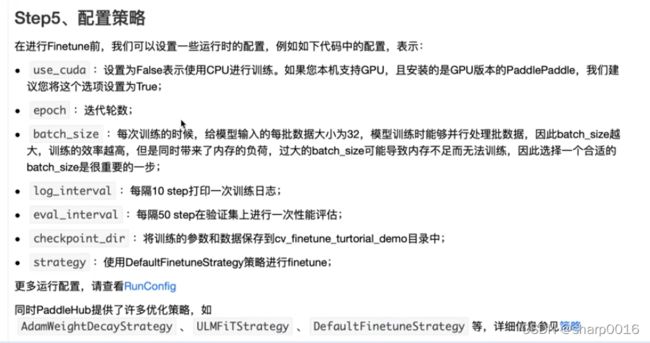

5.配置策略

config = hub.RunConfig(

use_cuda=True, #是否使用GPU训练,默认为False;

num_epoch=100, #Fine-tune的轮数;

checkpoint_dir="cv_finetune_turtorial_demo",#模型checkpoint保存路径, 若用户没有指定,程序会自动生成;

batch_size=32, #训练的批大小,如果使用GPU,请根据实际情况调整batch_size;

eval_interval=10, #模型评估的间隔,默认每100个step评估一次验证集;

strategy=hub.finetune.strategy.DefaultFinetuneStrategy()) #Fine-tune优化策略;

6.组件Finetune Task

input_dict, output_dict, program = module.context(trainable=True)

img = input_dict["image"]

feature_map = output_dict["feature_map"]

feed_list = [img.name]

task = hub.ImageClassifierTask(

data_reader=data_reader,

feed_list=feed_list,

feature=feature_map,

num_classes=dataset.num_labels,

config=config)

选择finetune_and_eval接口来进行模型训练

run_states = task.finetune_and_eval()

进行图片预测

import numpy as np

import matplotlib.pyplot as plt

import matplotlib.image as mpimg

with open("dataset/test_list.txt","r") as f:

filepath = f.readlines()

data = [filepath[0].split(" ")[0],filepath[1].split(" ")[0],filepath[2].split(" ")[0],filepath[3].split(" ")[0],filepath[4].split(" ")[0]]

label_map = dataset.label_dict()

index = 0

run_states = task.predict(data=data)

results = [run_state.run_results for run_state in run_states]

for batch_result in results:

print(batch_result)

batch_result = np.argmax(batch_result, axis=2)[0]

print(batch_result)

for result in batch_result:

index += 1

result = label_map[result]

print("input %i is %s, and the predict result is %s" %

(index, data[index - 1], result))

运行结果:

[array([[0.0576836 , 0.0284046 , 0.03645257, 0.8068382 , 0.07062104],

[0.26035282, 0.2416725 , 0.2682499 , 0.02967251, 0.20005229],

[0.12643923, 0.06227767, 0.28906223, 0.15370262, 0.36851835],

[0.6775572 , 0.07749658, 0.13289577, 0.08125971, 0.03079086],

[0.01687081, 0.9575028 , 0.00494054, 0.00224534, 0.01844056]],

dtype=float32)]

[3 2 4 0 1]

input 1 is dataset/test/yushuxin.jpg, and the predict result is 虞书欣

input 2 is dataset/test/xujiaqi.jpg, and the predict result is 许佳琪

input 3 is dataset/test/zhaoxiaotang.jpg, and the predict result is 赵小棠

input 4 is dataset/test/anqi.jpg, and the predict result is 安崎

input 5 is dataset/test/wangchengxuan.jpg, and the predict result is 王承渲