奥德赛修改器

At Speech Odyssey 2020 IQT Labs sponsored a special session on applications of VOiCES, a dataset targeting acoustically challenging and reverberant environments with robust labels and truth data for transcription, denoising, and speaker identification. In the next two blog posts we will be surveying the papers that were accepted to this session.

在2020年语音奥德赛上, IQT实验室赞助了关于VOiCES应用的特别会议, VOiCES是针对声学挑战性和混响环境的数据集,具有可靠的标签和用于转录,降噪和说话人识别的真相数据。 在接下来的两个博客文章中,我们将调查本届会议接受的论文。

Following the overall theme of Speaker Odyssey, the accepted papers focused on improving the robustness of speaker verification (SV) systems, with respect to environment noise and reverberation. Speaker verification (also referred to as speaker authentication) is the task of deciding whether or not a segment of speech audio, or utterance, was spoken by a specific individual (target speaker). Thus SV is a binary classification problem and performance can be characterized in terms of the rate of type I and type II errors, false rejections and false acceptances respectively, or visually summarized in plots of the false rejection rate vs. the false acceptance rate for different values of the decision threshold, known as detection error tradeoff (DET) curves. In the biometrics field, two statistics are commonly used to reduce the information of a DET curve to a scalar performance metric:

遵循Speaker Odyssey的总体主题,被接受的论文集中于提高扬声器验证 (SV)系统在环境噪声和混响方面的鲁棒性。 说话者验证(也称为说话者身份验证)是确定特定个人(目标说话者)是否说出一段语音音频或话语的任务。 因此,SV是一个二元分类问题,其性能可以分别根据I型和II型错误的发生率 ,错误拒绝和错误接受的程度来表征,或者在不同的错误拒绝率与错误接受率的关系图中直观地进行总结决策阈值的值,称为检测误差折衷(DET)曲线 。 在生物识别领域,通常使用两种统计量将DET曲线的信息简化为标量性能度量:

- Equal Error Rate (EER): The intersection of the DET-curve with the diagonal, or the value of the false rejection rate and false error rate when the two are equal. 等错误率(EER):DET曲线与对角线的交点,或者当两者相等时的假拒绝率和假错误率的值。

minDCF: The detection cost function (DCF) is a weighted sum of the false rejection and acceptance rates, where each rate is weighted by a prescribed penalty for that type of error and the probability of the target speaker being/not being present. minDCF is the value of DCF when the decision threshold is set optimally. See here (section 2.3) for more discussion.

minDCF:检测成本函数(DCF)是错误拒绝率和接受率的加权总和,其中,每种错误率均由针对该类型错误和目标说话者存在/不存在的概率的规定损失加权。 minDCF是最佳设置决策阈值时的DCF值。 有关更多讨论,请参见此处(第2.3节) 。

Typical text-independent speaker verification systems operate by computing a “score” that measures how similar an utterance is to speech audio obtained from the target speaker, also known as enrollment data. This is done in two parts: A front end that produces a fixed-length vector representation of variable length utterances, known as a speaker embedding, and a back end that computes the score between the test embedding and embedding of the enrollment data, typically using cosine similarity. Prediction is then a matter of thresholding the score. However, for systems that are designed to work for many different target speakers and in varying environments the score distribution can vary wildly between speakers and conditions. To counteract this, score normalization is used to standardize the score distribution between speakers, usually by normalizing the raw scores with the statistics of the score between the test/enrollment embedding and the embeddings of many other target speakers. Similarly, domain adaptation is used to match the statistics between embeddings produced in differing acoustic conditions.

典型的独立于文本的说话者验证系统通过计算“分数”来运行,该“分数”测量的话语与从目标说话者获得的语音音频有多相似,也称为注册数据。 这分为两个部分:产生可变长度话语的固定长度矢量表示的前端(称为说话者嵌入),以及后端(通常使用)计算测试嵌入和注册数据嵌入之间的分数余弦相似度 。 因此,预测只是对分数进行阈值处理。 但是,对于设计用于许多不同目标演讲者并在不同环境中工作的系统,分数分布在演讲者和条件之间可能会发生巨大变化。 为了解决这个问题,通常使用分数归一化来标准化演讲者之间的分数分布,通常是通过使用测试/注册嵌入与许多其他目标演讲者嵌入之间的分数统计来标准化原始分数。 类似地, 域自适应用于匹配在不同声学条件下产生的嵌入之间的统计信息。

The front end itself is composed of several steps including voice activity detection, feature extraction, and statistics pooling. Recent performance advances have come from training deep neural networks to perform some or all of these steps, using large datasets of speaker-tagged utterances, producing deep speaker embeddings. In this post we will summarize the three papers accepted to this session focused on techniques for improving deep speaker embeddings.

前端本身由几个步骤组成,包括语音活动检测,功能提取和统计池。 最近的性能进步来自训练深度神经网络来执行某些或所有这些步骤,其中使用了带有说话者标签的话语的大型数据集,产生了深度的说话者嵌入。 在这篇文章中,我们将总结本次会议接受的三篇论文,这些论文的重点是改善深层演讲者嵌入的技术。

说话人嵌入的混合表示 (Mixture Representations for Speaker Embeddings)

The first paper, “Learning Mixture Representation for Deep Speaker Embedding Using Attention” by Lin, et al. addresses the statistics pooling step of speaker embeddings. Most speaker embedding systems, particularly those involving deep neural networks, extract a fixed-dimensional feature vector for each frame (e.g., time window in a spectrogram) of an utterance. Because utterances vary in length, the lengths of these feature vector sequences will also vary. As speaker embeddings are elements of a vector space, there must necessarily be a point in the algorithm where the sequence of frame level features is reduced to a vector of constant dimension, which is called statistics pooling. Many state-of-the-art (SOTA) speaker verification systems are based around the X-vector approach which uses deep neural networks for feature extraction and performs statistics pooling by concatenating the mean and (diagonal) standard deviation of feature vectors over the course of the utterance.

Lin等人的第一篇论文“ 使用注意力学习深度说话者嵌入的混合表示法 ”。 解决了说话人嵌入的统计数据合并步骤。 大多数说话者嵌入系统,特别是涉及深度神经网络的系统,都会为发声的每个帧(例如,声谱图中的时间窗口)提取固定维特征向量。 因为发声的长度不同,所以这些特征向量序列的长度也将变化。 由于说话人嵌入是向量空间的元素,因此算法中必须有一点必须将帧级特征的序列简化为恒定维数的向量,这称为统计池。 许多最先进的(SOTA)说话人验证系统都基于X向量方法,该方法使用深度神经网络进行特征提取,并通过在整个过程中将特征向量的均值和(对角)标准偏差进行级联来执行统计汇总话语。

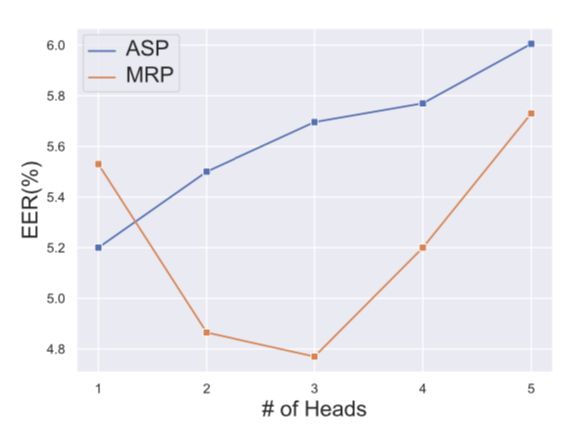

Because some frames in an utterance may be more speaker-discriminative than others, previous authors have suggested augmenting the X-vector approach using attention to weight the frame level features before computing the mean and standard deviation. This approach, attentive statistics pooling (ASP), can be generalized to the case of multiple attention heads, with a separate mean and standard deviation vector for each head. Lin et al claim that multi-headed ASP alone does not create a richer representation with more attention heads and instead propose a modification where the attention scores are normalized across heads at the frame level. After some normalization, the resulting mean and standard deviation vectors produced at the statistics pooling step are analogous to the component parameters of a gaussian mixture model with one component for each attention head. They denote this method as mixture representation pooling (MRP).

由于发声中的某些帧可能比其他帧更具说话人辨别力,因此以前的作者建议在计算均值和标准差之前使用注意力加权帧级别特征来增强X向量方法。 这种方法,即专心统计池(ASP),可以推广到多个关注头的情况,每个头都有独立的均值和标准差向量。 Lin等人声称,单用多头ASP并不能创建具有更多注意力头的更丰富的表示,而是提出了一种修改方法,其中在框架级别将注意力得分在各个头之间进行了归一化。 在进行一些归一化之后,在统计汇总步骤中生成的结果均值和标准差矢量类似于高斯混合模型的组成参数,每个注意头具有一个组成部分。 他们将此方法表示为混合物表示池(MRP)。

The authors ran several experiments to demonstrate the effectiveness of MRP over ASP and X-vector. They use the Densenet architecture as a feature extractor network for ASP and MRP, as well as for a baseline mean and standard deviation statistics pooling. The EER and minDCF are evaluated for each approach on VoxCeleb1, VOiCES19-dev, and VOiCES19-eval. Importantly, for multi-headed ASP and MRP, they scale the dimensionality of mean and standard deviation vectors inversely with the number of heads for a fairer comparison in terms of the number of parameters. They find that in almost all cases, MRP with three attention heads performs the best.

作者进行了一些实验,以证明MRP在ASP和X-vector上的有效性。 他们使用Densenet体系结构作为ASP和MRP以及基线均值和标准差统计池的特征提取器网络。 在VoxCeleb1,VOiCES19-dev和VOiCES19-eval上针对每种方法评估EER和minDCF。 重要的是,对于多头ASP和MRP,它们与头数成反比地缩放均值和标准差向量的维数,以便在参数数量方面进行更公平的比较。 他们发现,几乎在所有情况下,具有三个关注头的MRP都表现最佳。

Furthermore, to reinforce the author’s intuition that multi-headed ASP doesn’t make proper use of extra attention heads they compare the performance of ASP and MRP on VOiCES19-eval as a function of the number of heads. They find that ASP performs best with just one head, whereas the optimal number of heads for MRP is three.

此外,为了增强作者的直觉,即多头ASP没有适当地使用额外的关注头,他们将VOiCES19-eval上ASP和MRP的性能作为头数的函数进行了比较。 他们发现ASP的最佳表现是只有一个头,而MRP的最佳头数是三个。

EER as a function of attention heads for ASP and MRP. Dimensionality of pooling statistics is scaled inversely with number of heads so that total dimensionality is fixed. EER是ASP和MRP注意事项的函数。 合并统计信息的维数与磁头数成反比,因此总维数是固定的。改善深度扬声器嵌入的管道 (Improving Pipelines for Deep Speaker Embeddings)

The second paper “Deep Speaker Embeddings for Far-Field Speaker Recognition on Short Utterances” by Gusev, et al. explores a variety of choices of system architecture, loss functions, and data preprocessing with the aim of improving the performance of SV systems on both distant speech and short utterances. They evaluate different combinations of these design choices on the VOiCES and VoxCeleb test sets, for both full utterances and utterances that have been shortened to 1, 2, and 5 seconds, and arrive at several conclusions:

Gusev等人的第二篇论文“ 用于短话语的远场说话人识别的深度说话人嵌入 ”。 探索了系统架构,损失函数和数据预处理的多种选择,旨在提高SV系统在远距离语音和短话语下的性能。 他们在VOiCES和VoxCeleb测试仪上评估了这些设计选择的不同组合,包括完整发声和已缩短为1、2和5秒的发声,并得出以下结论:

Performing voiced activity with a U-net based model that is trained end-to-end outperforms the standard energy-detection based model in the popular automatic speech recognition toolkit Kaldi.

使用经过端到端训练的基于U-net的模型执行语音活动,胜过流行的自动语音识别工具包Kaldi中基于标准能量检测的模型。

- Data augmentation improves performance. In particular, when adding noise and reverb to clean utterances, it is important to apply reverb to both the noise and speech. 数据扩充可提高性能。 尤其是,在将杂音和混响添加到干净的语音时,将混响应用于杂音和语音非常重要。

- It is better to perform frame-level feature extraction with a neural network based on the ResNet architecture than the X-vector architecture, which is based around time-delay neural networks. This holds true even when the X-vector network is augmented with recurrent connections. 与基于时延神经网络的X向量架构相比,使用基于ResNet体系结构的神经网络执行帧级特征提取效果更好。 即使X向量网络增加了经常性的连接,也是如此。

- Higher dimensional acoustic input features increase performance. Specifically 80 dimensional Log Mel-filter Bank Energies lead to better performing models than 40 dimensional Mel Frequency Cepstral Coefficients. 高维声学输入功能可提高性能。 具体地说,与40维梅尔频率倒谱系数相比,80维对数梅尔滤波器组能量可产生更好的模型。

- Finetuning SV models on reduced duration utterances decreases their performance on full length utterances, so there is a fundamental tradeoff in performance between these two application domains. 在减少持续时间话语的情况下对SV模型进行微调会降低其在全长话语中的性能,因此在这两个应用程序域之间的性能之间存在根本的权衡。

选择性嵌入增强 (Selective Embedding Enhancement)

The final paper to touch on deep speaker embeddings was “Selective Deep Speaker Embedding Enhancement for Speaker Verification” by Jung et al. While deep speaker embeddings have led to high SV performance on utterances recorded at close range and in clear conditions, their performance degrades considerably on speech with reverberation and environmental noise. At the same time, systems trained to compensate for noisy and distant speech underperform on clean and close speech, as we reported in a previous blog post for automatic speech recognition. Furthermore, every newly proposed system for speaker embeddings has to be separately fine-tuned and calibrated for noisy utterances. In light of these concerns, Jung et al. propose two systems to enhance speaker embeddings that satisfy the following three desiderata:

关于深度演讲者嵌入的最后一篇论文是Jung等人的“ 用于演讲者验证的选择性深度演讲者嵌入增强功能 ”。 虽然深层的扬声器嵌入可以在近距离和清晰条件下录制的语音中实现较高的SV性能,但在具有混响和环境噪声的语音中,它们的性能会大大降低。 同时,如我们在之前的博客文章中自动语音识别中所报道的那样 ,经过训练以补偿嘈杂和远距离语音的系统在干净和封闭的语音上表现不佳。 此外,每个新提出的用于扬声器嵌入的系统都必须分别进行微调和校准,以实现嘈杂的语音效果。 鉴于这些担忧,Jung等人。 提出了两个系统来增强发言人嵌入,使其满足以下三个需求:

- The systems can be applied to utterance examples that range from close talk to distant speech and are adaptive to the level of distortion present. 该系统可以应用于发声示例,其范围从近距离讲话到远距离讲话,并且适合于出现的失真水平。

- These systems operate directly on the embeddings produced by front-end speaker embedding systems and treat the speaker embedding systems as a black box model. 这些系统直接在前端扬声器嵌入系统产生的嵌入上运行,并将扬声器嵌入系统视为黑匣子模型。

- The architectural complexity and computational cost of inference are minimized. 体系结构的复杂性和推理的计算成本被最小化。

To train and test the proposed systems, Jung et al. used the VOiCES dataset because it contains many different distant speech utterances corresponding to a given close-talk (source) utterance and the underlying source utterances are also available for training/testing data.

为了训练和测试所提出的系统,Jung等人。 之所以使用VOiCES数据集,是因为它包含许多与给定的近距离(源)话语相对应的远距离语音话语,并且潜在的源话语也可用于训练/测试数据。

The first system they propose, skip connection-based selective enhancement (SCSE), utilizes two deep neural networks to perform distortion-adaptive enhancement of speaker embeddings from the front-end, represented as x in the figure. The first network, SDDNN, is trained as a binary classifier to distinguish source utterances from distant utterances. The second network, SEDNN, outputs a “denoised” version of the embedding. The original embedding x is multiplied by either the output of SDDNN (at test time) or a binary source/distant label (during training) and added to the output of SEDNN to produce the enhanced embedding x’. To encourage SEDNN to behave like a denoising system, the training includes a mean squared error loss between x’ and either x, if x came from a source utterance, or the embedding of the corresponding source utterance if x came from a distant utterance. Finally the whole system is trained with categorical cross entropy to ensure that the enhanced embeddings are still informative about speaker identity.

他们提出的第一个系统是基于跳过连接的选择性增强(SCSE),它利用两个深度神经网络从前端对说话人嵌入进行失真自适应增强,在图中用x表示。 第一个网络SDDNN被训练为二进制分类器,以区分源话语和远方话语。 第二个网络SEDNN输出嵌入的“去噪”版本。 原始嵌入x乘以SDDNN的输出(在测试时)或二进制源/远距离标签(在训练过程中),然后加到SEDNN的输出以产生增强的嵌入x' 。 为了鼓励SEDNN表现得像去噪系统,训练包括x'和x之间的均方误差损失(如果x来自源话语,或者嵌入相应的源话语,如果x来自远方话语)。 最后,对整个系统进行分类交叉熵训练,以确保增强的嵌入内容仍能提供有关说话人身份的信息。

The second system is based on the discriminative auto-encoder framework, and denoted as selective enhancement discriminative auto-encoder (SEDA). The input embedding, x, is passed through a pair of encoder and decoder networks producing the reconstruction y. Like in SCSE, the reconstruction target for y depends on whether x is the embedding of a source or target utterance. Additionally, during training, y is trained to be speaker-discriminative with a categorical cross entropy loss, though y is not used as the enhanced embedding. The encoding of x produced by the encoder network is partitioned into two components, x’ and n, where x’ is the enhanced embedding used at test time and n contains information about nuisance variables such as noise and reverberation. The distribution of enhanced embeddings x’ is regularized with two loss functions to make sure that embeddings information about the speaker and minimal nuisance information. The first loss function, center loss, penalizes the average euclidean distance between an embedding and the mean vector of all embeddings corresponding to the same speaker. The second regularizer, internal dispersion loss, maximizes the average distance between an embedding and the average vector over all embeddings. When combined, these two loss functions minimize intra-class variance and maximize inter-class variance.

第二系统基于判别式自动编码器框架 ,并表示为选择性增强判别式自动编码器(SEDA)。 输入的嵌入x穿过一对编码器和解码器网络,产生重构y 。 像在SCSE中一样, y的重建目标取决于x是源话语还是目标话语的嵌入。 另外,在训练过程中,尽管y未被用作增强的嵌入,但y被训练为具有判别性交叉熵损失的说话人区分性。 编码器网络产生的x的编码分为两个分量x'和n ,其中x'是在测试时使用的增强嵌入,并且n包含有关有害变量(如噪声和混响)的信息。 增强嵌入x'的分布通过两个损失函数进行正则化,以确保嵌入有关扬声器的信息和最小的干扰信息。 第一个损失函数,即中心损失,惩罚了嵌入和对应于同一说话者的所有嵌入的均值矢量之间的平均欧几里德距离。 第二个正则化函数,内部色散损失,使所有嵌入中嵌入和平均矢量之间的平均距离最大化。 当组合时,这两个损失函数将类别内方差最小化并将类别间方差最大化。

The enhanced embedding x’ is regularized with two loss functions to make sure that it contains information about the speaker and minimal nuisance information: Center loss and internal dispersion loss.

增强的嵌入x'通过两个损失函数进行正则化,以确保它包含有关扬声器的信息和最小的有害信息:中心损失和内部色散损失。

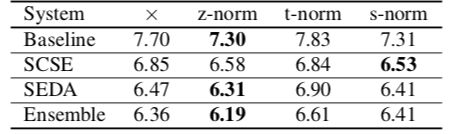

Jung et al. used a slightly modified version of the RawNet speaker embedding architecture as the embedding front-end for all their experiments. RawNet operates on raw waveforms, performs feature extraction with a series of convolutional and residual layers, and does statistics pooling with a gated recurrent unit (GRU) layer. The authors performed extensive hyper-parameter optimization for SCSE and SEDA, and compared the performance of the two systems to a baseline that uses the embeddings produced by RawNet with no enhancement. Additionally, they experiment with multiple types of score normalization and with ensembling the two systems by summing the embedding scores. They find that z-norm score normalization and ensembling result in the optimal performance.

Jung等。 使用RawNet扬声器嵌入架构的略微修改版本作为所有实验的嵌入前端。 RawNet对原始波形进行操作,通过一系列卷积和残差层执行特征提取,并通过门控循环单元(GRU)层进行统计汇总。 作者对SCSE和SEDA进行了广泛的超参数优化,并将这两个系统的性能与使用RawNet生成的嵌入进行了增强的基线进行了比较。 此外,他们使用多种类型的分数归一化进行实验,并通过对嵌入分数求和来整合两个系统。 他们发现z范数得分归一化和集合可产生最佳性能。

Selective Deep Speaker Embedding Enhancement for Speaker Verification. 选择性深说话者嵌入增强用于说话者验证的不同选择性增强系统的性能。Conclusion

结论

Though none of their approaches match state of the art EER on VOiCES, compared to the performance of teams in the Interspeech 2019 VOiCES from a Distance Challenge, achieving competitive performance on noisy data without sophisticated denoising preprocessing while also maintaining performance on clean data is important for systems deployed in the real world.

尽管他们的方法都无法与VOiCES上最先进的EER相匹配,但与Interspeech 2019 VOiCES上的团队在远距离挑战赛中的表现相比,在嘈杂的数据上实现竞争性能而无需复杂的去噪预处理,同时还要保持干净数据上的性能对于在现实世界中部署的系统。

Speaker verification systems based on deep speaker embeddings have recently demonstrated significant advances in performance. However, as all three papers surveyed in this post have demonstrated, different choices in architecture, data preprocessing, and inference procedure can strongly impact how a speaker verification system performs in the presence of noise. Developing robust systems requires data that reflect the realistic conditions they will be deployed in, such as the VOiCES dataset. We are excited to see how advances in deep speaker embedding will push forward the state of the art in speaker verification. In the next blog post we will review other papers from this session that explored other aspects of the SV pipeline, such as beamforming and learnable scoring rules.

最近,基于深度说话者嵌入的说话者验证系统在性能上已取得了重大进步。 但是,正如本文中调查的所有三篇论文都表明的那样,体系结构,数据预处理和推理过程中的不同选择会严重影响说话者验证系统在存在噪声的情况下的性能。 开发健壮的系统需要反映其部署实际条件的数据,例如VOiCES数据集。 我们很高兴看到演讲者深层嵌入技术的进步将如何推动演讲者验证的最新发展。 在下一篇博客文章中,我们将审阅本次会议的其他论文,这些论文探讨了SV管道的其他方面,例如波束成形和可学习的评分规则。

IQT Labs uses applied research to help the Intelligence Community better understand and de-risk both strategic and tactical technology challenges. We use agile, open-source collaborations across academia, industry and government to explore specific facets of larger problems — often gleaning valuable insights in “fast-failure” as well as catalyzing incremental advances in capabilities. Follow us on Twitter @_lab41

IQT Labs通过应用研究来帮助情报界更好地理解和降低战略和战术技术挑战的风险。 我们使用跨学术界,行业和政府的敏捷,开放源代码合作来探索更大问题的特定方面-经常在“快速失败”中收集有价值的见解,并促进能力的逐步提高。 在Twitter @ _lab41上关注我们

翻译自: https://gab41.lab41.org/voices-at-speech-odyssey-2020-advances-in-speaker-embeddings-13dd5d14f753

奥德赛修改器