SENet: Squeeze-and-Excitation Networks

Contents

Steps

What Squeeze-and-Excitation does

SE-Inception Module and SE-ResNet Module

Additional parameters

Ablation experiments

Implementation

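Steps

- The input feature map $U \in \mathbb{R}^{H \times W \times C}$ passes through global average pooling, which outputs a feature map $z \in \mathbb{R}^{1 \times 1 \times C}$.

- $z$ then passes through fc, relu, fc and sigmoid in turn, giving the output $s \in \mathbb{R}^{1 \times 1 \times C}$.

- $U$ and $s$ are combined by channel-wise multiplication to give the final output $\tilde{X}$.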
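For reference, the three steps can be written compactly as equations. The symbols ($u_c$ for channel $c$ of the input, $W_1$ and $W_2$ for the two fc weight matrices, $\delta$ for relu, $\sigma$ for sigmoid, $r$ for the reduction ratio) follow the notation of the SE paper and are assumed here rather than taken from the text above.

```latex
\begin{aligned}
z_c &= \frac{1}{H \times W} \sum_{i=1}^{H} \sum_{j=1}^{W} u_c(i, j) && \text{(global average pooling)} \\
s &= \sigma\bigl(W_2\, \delta(W_1 z)\bigr), \quad W_1 \in \mathbb{R}^{\frac{C}{r} \times C},\ W_2 \in \mathbb{R}^{C \times \frac{C}{r}} && \text{(fc, relu, fc, sigmoid)} \\
\tilde{x}_c &= s_c \cdot u_c && \text{(channel-wise multiplication)}
\end{aligned}
```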

What Squeeze-and-Excitation does

- Squeeze: global information embedding. Global average pooling encodes the global information of each channel into a single value.

- Excitation: adaptive recalibration. Two fully connected layers learn the channel-wise dependencies. To keep model complexity down, the first fully connected layer maps the $C$ input channels to $C/r$ according to the reduction ratio $r$, followed by a relu; the second fully connected layer maps back to $C$, followed by a sigmoid. This step can be viewed as an attention mechanism: through it the model "can learn to use global information to selectively emphasise informative features and suppress less useful ones."

- The original input is then multiplied by the channel-wise weights learned in the Excitation step to give the final output.
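The squeeze, excitation and multiplication steps can be traced shape by shape in a few lines of NumPy. This is only a minimal sketch under stated assumptions: the function name `se_block`, the random weights, and the sizes `C = 32`, `H = W = 7`, `r = 16` are all made up for illustration, and the feature map is stored channels-first as `(C, H, W)`.

```python
import numpy as np


def se_block(u, w1, b1, w2, b2):
    """Apply squeeze, excitation and channel-wise scaling to u of shape (C, H, W)."""
    z = u.mean(axis=(1, 2))                    # squeeze: one value per channel, (C,)
    h = np.maximum(w1 @ z + b1, 0.0)           # first fc + relu: (C // r,)
    s = 1.0 / (1.0 + np.exp(-(w2 @ h + b2)))   # second fc + sigmoid: (C,), each in (0, 1)
    return u * s[:, None, None], s             # rescale every channel by its weight


# Hypothetical sizes and random weights, for illustration only.
rng = np.random.default_rng(0)
C, H, W, r = 32, 7, 7, 16
u = rng.standard_normal((C, H, W))
w1 = rng.standard_normal((C // r, C)) * 0.1
b1 = np.zeros(C // r)
w2 = rng.standard_normal((C, C // r)) * 0.1
b2 = np.zeros(C)

out, s = se_block(u, w1, b1, w2, b2)
print(out.shape, s.shape)
```

The output keeps the shape of the input; only the per-channel scale changes, which is why the block can be dropped into an existing network without touching the surrounding layers.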

SE-Inception Module and SE-ResNet Module

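Additional parameters

Each SE block adds two fully connected layers of $C \cdot C/r$ weights each (biases ignored), so the total number of additional parameters is

$$\frac{2}{r} \sum_{s=1}^{S} N_s \cdot C_s^2$$

where $r$ is the reduction ratio, $S$ is the number of stages, $C_s$ is the number of output channels of stage $s$, and $N_s$ is the number of blocks in stage $s$. All of these additional parameters come from the two fully connected layers.

Compared with ResNet-50, SE-ResNet-50 adds about 10% more parameters. Most of them come from the last stage, because that stage has the most channels. The authors found that removing the SE blocks from the last stage cuts the overhead to about 4%, while increasing top-5 error on ImageNet by less than 0.1%.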
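The percentages above can be sanity-checked with a short calculation. The sketch below assumes the usual ResNet-50 layout (3, 4, 6 and 3 bottleneck blocks with 256, 512, 1024 and 2048 output channels) and counts only the weights of the two fully connected layers per SE block, $2C^2/r$, ignoring biases; the function name `se_extra_params` is made up for illustration.

```python
def se_extra_params(blocks_per_stage, channels_per_stage, r=16):
    """Weights added by the SE fully connected layers: (2 / r) * sum of N_s * C_s^2."""
    return sum(2 * n * c * c // r
               for n, c in zip(blocks_per_stage, channels_per_stage))


# Assumed ResNet-50 layout: blocks and output channels of the four stages.
blocks = [3, 4, 6, 3]
channels = [256, 512, 1024, 2048]

all_stages = se_extra_params(blocks, channels)
without_last = se_extra_params(blocks[:-1], channels[:-1])
last_stage_share = (all_stages - without_last) / all_stages

print(all_stages)                  # 2514944
print(without_last)                # 942080
print(f"{last_stage_share:.1%}")   # 62.5%
```

Against a ResNet-50 of roughly 25 million parameters, about 2.5 million extra weights is close to 10%, and about 0.94 million is close to 4%, which matches the figures quoted above.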

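Ablation experiments

Besides showing on public datasets for classification, detection and scene classification that SE improves accuracy, the authors ran a number of ablation experiments to check that the current SE design is the best of the variants they tried.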

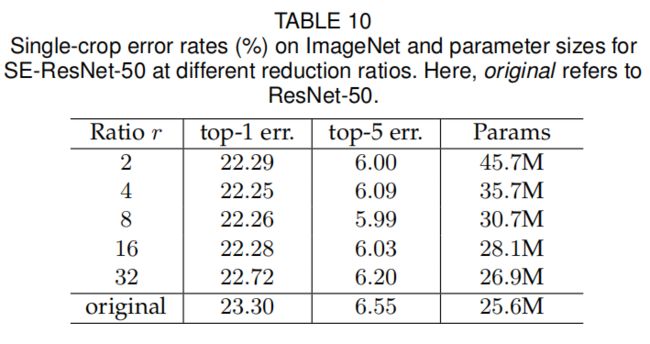

- reduction ratio

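With r = 16 the model strikes a good balance between accuracy and complexity. The authors also point out that, because different layers play different roles, using the same r at every depth of the network may not be optimal, and tuning r per layer could bring further accuracy gains.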
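The complexity side of this trade-off is easy to quantify, since the number of extra weights is inversely proportional to r. A minimal sketch, assuming the usual ResNet-50 stage layout and counting only the fully connected weights (the names `STAGES` and `se_extra_params` are made up for illustration; accuracy is not modelled here):

```python
# (blocks, output channels) per stage; assumed ResNet-50 bottleneck layout.
STAGES = [(3, 256), (4, 512), (6, 1024), (3, 2048)]


def se_extra_params(r, stages=STAGES):
    """Extra weights from the SE fully connected layers for reduction ratio r."""
    return sum(2 * n * c * c // r for n, c in stages)


# Halving r doubles the number of extra weights.
sweep = {r: se_extra_params(r) for r in (2, 4, 8, 16, 32)}
for r, extra in sweep.items():
    print(f"r={r:>2}: {extra / 1e6:.2f}M extra parameters")
```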

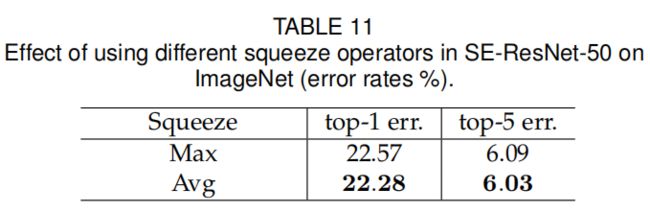

- squeeze operator

For the squeeze stage, max pooling is compared with average pooling; the experiments show that average pooling works better.

- excitation operator

For the excitation stage, sigmoid is compared with relu and tanh; the experiments show that sigmoid works better.
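To make the compared operators concrete, the toy example below shows what each squeeze operator extracts from a single channel and what range of channel weights each gate can produce. All values are made up for illustration, and the sketch says nothing about why one choice performs better in training.

```python
import math

# One toy 2x2 channel (values made up for illustration).
channel = [[0.0, 1.0], [2.0, 9.0]]
values = [v for row in channel for v in row]

avg_squeeze = sum(values) / len(values)   # global average pooling
max_squeeze = max(values)                 # global max pooling


def sigmoid(t):
    return 1.0 / (1.0 + math.exp(-t))


def relu(t):
    return max(0.0, t)


# Candidate gates applied to the same pre-activation values.
logits = [-2.0, 0.0, 2.0]
gates = {
    "sigmoid": [sigmoid(t) for t in logits],  # always inside (0, 1)
    "tanh": [math.tanh(t) for t in logits],   # inside (-1, 1), can flip the sign
    "relu": [relu(t) for t in logits],        # zero or unbounded above
}

print(avg_squeeze, max_squeeze)
for name, weights in gates.items():
    print(name, [round(w, 3) for w in weights])
```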

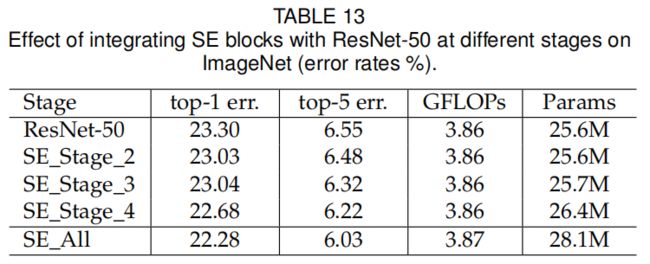

- different stages

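This experiment compares the effect of inserting SE blocks at different stages of ResNet-50. Inserting SE blocks at any single stage improves accuracy, and the gains are complementary: inserting them at all stages improves accuracy further.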

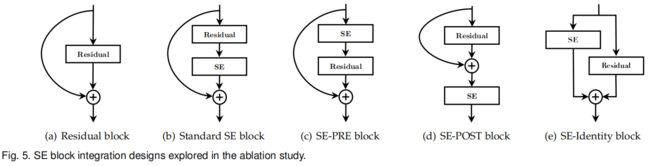

- integration strategy

In all of the designs above, SE sits outside the residual unit. The authors also tried placing SE inside the residual unit, directly after the 3×3 convolution.

Implementation

    # Imports assumed from the mmcv 1.x API that this snippet relies on.
    import mmcv
    import torch.nn as nn
    from mmcv.cnn import ConvModule
    from mmcv.runner import BaseModule

    # make_divisible is a rounding helper that the snippet uses without
    # showing; it is assumed to live next to this module.
    from .make_divisible import make_divisible


    class SELayer(BaseModule):
        """Squeeze-and-Excitation Module.

        Args:
            channels (int): The input (and output) channels of the SE layer.
            squeeze_channels (None or int): The intermediate channel number of
                SELayer. Default: None, means the value of ``squeeze_channels``
                is ``make_divisible(channels // ratio, divisor)``.
            ratio (int): Squeeze ratio in SELayer, the intermediate channel will
                be ``make_divisible(channels // ratio, divisor)``. Only used when
                ``squeeze_channels`` is None. Default: 16.
            divisor (int): The divisor to true divide the channel number. Only
                used when ``squeeze_channels`` is None. Default: 8.
            bias (bool or str): Passed through to both ``ConvModule`` layers.
                Default: 'auto'.
            conv_cfg (None or dict): Config dict for convolution layer. Default:
                None, which means using conv2d.
            act_cfg (dict or Sequence[dict]): Config dict for activation layer.
                If act_cfg is a dict, two activation layers will be configured
                by this dict. If act_cfg is a sequence of dicts, the first
                activation layer will be configured by the first dict and the
                second activation layer will be configured by the second dict.
                Default: (dict(type='ReLU'), dict(type='Sigmoid'))
            init_cfg (None or dict): Initialization config passed to
                ``BaseModule``. Default: None.
        """

        def __init__(self,
                     channels,
                     squeeze_channels=None,
                     ratio=16,
                     divisor=8,
                     bias='auto',
                     conv_cfg=None,
                     act_cfg=(dict(type='ReLU'), dict(type='Sigmoid')),
                     init_cfg=None):
            super().__init__(init_cfg)
            # A single dict means the same activation for both layers.
            if isinstance(act_cfg, dict):
                act_cfg = (act_cfg, act_cfg)
            assert len(act_cfg) == 2
            assert mmcv.is_tuple_of(act_cfg, dict)

            # Squeeze: global average pooling down to a 1x1 map per channel.
            self.global_avgpool = nn.AdaptiveAvgPool2d(1)

            if squeeze_channels is None:
                squeeze_channels = make_divisible(channels // ratio, divisor)
            assert isinstance(squeeze_channels, int) and squeeze_channels > 0, \
                '"squeeze_channels" should be a positive integer, but get ' + \
                f'{squeeze_channels} instead.'

            # Excitation: the two fully connected layers are implemented as
            # 1x1 convolutions acting on the pooled (N, C, 1, 1) tensor.
            self.conv1 = ConvModule(
                in_channels=channels,
                out_channels=squeeze_channels,
                kernel_size=1,
                stride=1,
                bias=bias,
                conv_cfg=conv_cfg,
                act_cfg=act_cfg[0])
            self.conv2 = ConvModule(
                in_channels=squeeze_channels,
                out_channels=channels,
                kernel_size=1,
                stride=1,
                bias=bias,
                conv_cfg=conv_cfg,
                act_cfg=act_cfg[1])

        def forward(self, x):
            out = self.global_avgpool(x)  # (N, C, H, W) -> (N, C, 1, 1)
            out = self.conv1(out)         # C -> squeeze_channels, then ReLU
            out = self.conv2(out)         # squeeze_channels -> C, then Sigmoid
            return x * out                # scale each channel of x by its weight
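The listing calls `make_divisible` without showing it. A minimal sketch of what such a helper typically does, assuming the rounding rule common in MobileNet-style code (round to the nearest multiple of `divisor`, never go below `min_value`, and never end up more than 10% below the requested value); the parameters `min_value` and `min_ratio` are assumptions, and the helper actually used by the listing may differ.

```python
def make_divisible(value, divisor, min_value=None, min_ratio=0.9):
    """Round value to a multiple of divisor (assumed behaviour, see lead-in)."""
    if min_value is None:
        min_value = divisor
    # Round to the nearest multiple of divisor, but never below min_value.
    new_value = max(min_value, int(value + divisor / 2) // divisor * divisor)
    # If rounding down lost more than (1 - min_ratio), go one step up.
    if new_value < min_ratio * value:
        new_value += divisor
    return new_value


# Squeeze widths for a few channel counts with ratio=16 and divisor=8.
for channels in (64, 256, 2048):
    print(channels, make_divisible(channels // 16, 8))
```

Under this rule a 64-channel layer gets a squeeze width of 8 rather than 64 // 16 = 4, so for narrow layers the effective reduction ratio is smaller than the nominal `ratio`.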