PyTorch--Model Pruning Case Study

I. Basics:

1. Model pruning:

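Informally, model pruning sets the weights of certain redundant connections in a neural network to 0. This makes the model sparser, which can reduce its size and computational cost, ideally with little loss of accuracy.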

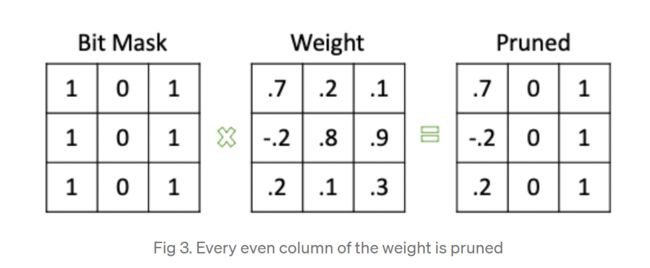

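The figure above illustrates this with a mask: wherever the mask is 0, the value at the same position in the weight matrix is replaced with 0.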
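A minimal sketch of the masking idea in plain PyTorch. The 3x3 weight matrix and the hand-written mask are hypothetical (not taken from the figure); the point is only that pruning amounts to an element-wise multiplication of the weights by a 0/1 mask.

```python
import torch

torch.manual_seed(0)

# Hypothetical 3x3 weight matrix and a hand-written 0/1 mask (1 = keep, 0 = prune)
weight = torch.randn(3, 3)
mask = torch.tensor([[1., 0., 1.],
                     [0., 1., 1.],
                     [1., 1., 0.]])

# Element-wise multiplication zeroes every weight whose mask entry is 0
pruned = weight * mask

print(weight)
print(pruned)
sparsity = (pruned == 0).float().mean().item()
print(f"sparsity: {sparsity:.2f}")  # 3 of the 9 entries are zero
```

PyTorch's pruning utilities used later follow the same principle: the mask is stored separately, and the pruned weight is recomputed as the original weight times the mask.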

2. Types of pruning:

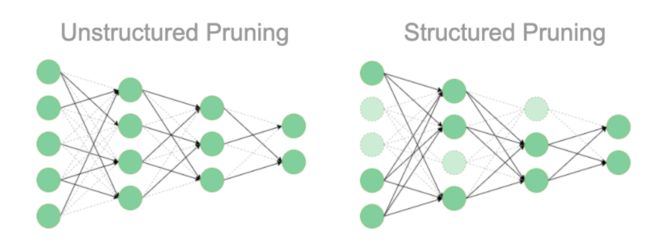

Pruning can be divided into unstructured pruning and structured pruning:

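Unstructured pruning:

As in the figure above (left): the number of neurons stays the same, and only some of the connection weights of certain neurons are set to zero (dashed lines).

Structured pruning:

As in the figure above (right): all connection weights of certain neurons are set to zero (dashed lines). The layer keeps its shape, but those neurons are effectively removed as a whole.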
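To make the difference concrete, here is a sketch in plain PyTorch that builds both kinds of mask for a small matrix shaped like an `nn.Linear` weight (`out_features x in_features`, so row i holds all incoming weights of output neuron i). The helpers `unstructured_mask` and `structured_mask` are hypothetical, and ranking by |w| and by row L2 norm is one common criterion assumed here, not the only one.

```python
import torch

def unstructured_mask(w: torch.Tensor, amount: float) -> torch.Tensor:
    """Zero the `amount` fraction of individual weights with the smallest |w|."""
    k = round(amount * w.numel())
    mask = torch.ones_like(w)
    if k > 0:
        idx = torch.topk(w.abs().flatten(), k, largest=False).indices
        mask.view(-1)[idx] = 0
    return mask

def structured_mask(w: torch.Tensor, amount: float) -> torch.Tensor:
    """Zero whole rows (all weights of one output neuron) with the smallest L2 norm."""
    k = round(amount * w.shape[0])
    mask = torch.ones_like(w)
    if k > 0:
        rows = torch.topk(w.norm(p=2, dim=1), k, largest=False).indices
        mask[rows] = 0
    return mask

torch.manual_seed(0)
w = torch.randn(4, 6)  # 4 output neurons with 6 inputs each

u = unstructured_mask(w, 0.5)
s = structured_mask(w, 0.5)
print(u)  # 12 zeros scattered across the matrix
print(s)  # 12 zeros forming 2 complete rows
```

Both masks remove the same number of weights, but only the structured one removes entire neurons, which is what can later allow a layer to be physically shrunk.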

II. Model pruning with PyTorch's official API:

1. Local pruning

1. First, build a network model. Following the official PyTorch pruning tutorial, we use LeNet:

```python
'''
https://pytorch.org/tutorials/intermediate/pruning_tutorial.html
'''
import torch
from torch import nn
import torch.nn.utils.prune as prune
import torch.nn.functional as F

# Select the device
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# Build the LeNet model
class LeNet(nn.Module):
    def __init__(self):
        super(LeNet, self).__init__()
        self.conv1 = nn.Conv2d(in_channels=1, out_channels=6, kernel_size=3)
        self.conv2 = nn.Conv2d(6, 16, 3)
        self.fc1 = nn.Linear(16 * 5 * 5, 120)  # 5x5 feature map, which assumes 28x28 inputs (e.g. MNIST)
        self.fc2 = nn.Linear(120, 84)
        self.fc3 = nn.Linear(84, 10)

    def forward(self, x):
        x = F.max_pool2d(F.relu(self.conv1(x)), (2, 2))
        x = F.max_pool2d(F.relu(self.conv2(x)), 2)
        x = x.view(-1, int(x.nelement() / x.shape[0]))  # flatten everything except the batch dimension
        x = F.relu(self.fc1(x))
        x = F.relu(self.fc2(x))
        x = self.fc3(x)
        return x

model = LeNet().to(device=device)  # move the model to the CPU/GPU
```

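```python
module = model.conv1  # select the first convolutional layer as the module to prune
```

Inspect the parameters of the first convolutional layer, which consist of weight and bias. Pruning never overwrites the values stored in named_parameters() unless you delete them manually; it only renames the pruned parameter by adding an `_orig` suffix, as the later outputs show.

```python
print(list(module.named_parameters()))
```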

```
[('weight', Parameter containing:
tensor([[[[ 1.5996e-01, 1.0775e-01, 2.6948e-01],
          [-6.4919e-02, -1.8932e-01, 2.2985e-02],
          [ 8.5653e-02, 2.9077e-01, 2.0981e-01]]],
        [[[-2.0450e-01, 5.4691e-02, 2.4475e-01],
          [ 3.1142e-01, 1.9070e-01, -1.7687e-01],
          [-3.9149e-02, 3.1566e-01, 1.8814e-02]]],
        [[[ 3.1525e-01, 6.9234e-03, 2.1742e-01],
          [ 1.2825e-02, -1.4790e-01, 1.2469e-01],
          [ 2.0399e-01, -1.1661e-01, 2.5957e-01]]],
        [[[-8.3796e-02, -2.3485e-01, -2.3683e-01],
          [-3.1888e-01, -2.5757e-01, 9.3788e-02],
          [-2.6058e-01, -1.6827e-01, 3.0230e-02]]],
        [[[-1.4831e-02, -2.8372e-04, -2.2037e-01],
          [-2.6850e-01, -1.9531e-01, -3.0480e-01],
          [ 2.1453e-02, 1.0219e-02, 1.0799e-01]]],
        [[[ 6.6237e-03, -1.1856e-02, 2.1806e-01],
          [-1.2661e-01, -1.2371e-01, 2.9971e-01],
          [ 4.9645e-03, 7.6150e-02, -1.2875e-01]]]], device='cuda:0',
       requires_grad=True)), ('bias', Parameter containing:
tensor([ 0.1880, -0.3062, -0.3171, -0.1479, 0.2673, -0.1545], device='cuda:0',
       requires_grad=True))]
```

2. Pruning weight and bias

(1) Pruning the weight:

```python
# Choose the parameter to prune and randomly prune 30% of its entries (unstructured)
prune.random_unstructured(module, name="weight", amount=0.3)
```
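A self-contained check of what `prune.random_unstructured` does to a module. It uses a fresh stand-in `nn.Conv2d(1, 6, 3)` with the same shape as LeNet's `conv1` (not the model above; variable names are illustrative). The original weight moves to `weight_orig`, the mask goes into the `weight_mask` buffer, and `weight` becomes a plain tensor equal to their product. With 54 weights and `amount=0.3`, round(0.3 * 54) = 16 entries are pruned.

```python
import torch
from torch import nn
import torch.nn.utils.prune as prune

torch.manual_seed(0)
conv = nn.Conv2d(1, 6, 3)  # weight shape 6x1x3x3 = 54 entries, like LeNet's conv1

prune.random_unstructured(conv, name="weight", amount=0.3)

param_names = [n for n, _ in conv.named_parameters()]  # ['bias', 'weight_orig']
buffer_names = [n for n, _ in conv.named_buffers()]    # ['weight_mask']
n_pruned = int((conv.weight_mask == 0).sum())          # round(0.3 * 54) = 16

print(param_names, buffer_names, n_pruned)
print(prune.is_pruned(conv))  # True: a pruning forward pre-hook is registered
# The visible weight equals weight_orig * weight_mask and is no longer a Parameter
print(torch.equal(conv.weight, conv.weight_orig * conv.weight_mask))
print(isinstance(conv.weight, nn.Parameter))
```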

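After pruning, inspect named_parameters() again. The stored values are unchanged, but `weight` has been replaced by `weight_orig` (now listed after `bias`):

```python
print(list(module.named_parameters()))  # parameters of the first conv layer: bias and weight_orig
```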

```
[('bias', Parameter containing:
tensor([ 0.1880, -0.3062, -0.3171, -0.1479, 0.2673, -0.1545], device='cuda:0',
       requires_grad=True)), ('weight_orig', Parameter containing:
tensor([[[[ 1.5996e-01, 1.0775e-01, 2.6948e-01],
          [-6.4919e-02, -1.8932e-01, 2.2985e-02],
          [ 8.5653e-02, 2.9077e-01, 2.0981e-01]]],
        [[[-2.0450e-01, 5.4691e-02, 2.4475e-01],
          [ 3.1142e-01, 1.9070e-01, -1.7687e-01],
          [-3.9149e-02, 3.1566e-01, 1.8814e-02]]],
        [[[ 3.1525e-01, 6.9234e-03, 2.1742e-01],
          [ 1.2825e-02, -1.4790e-01, 1.2469e-01],
          [ 2.0399e-01, -1.1661e-01, 2.5957e-01]]],
        [[[-8.3796e-02, -2.3485e-01, -2.3683e-01],
          [-3.1888e-01, -2.5757e-01, 9.3788e-02],
          [-2.6058e-01, -1.6827e-01, 3.0230e-02]]],
        [[[-1.4831e-02, -2.8372e-04, -2.2037e-01],
          [-2.6850e-01, -1.9531e-01, -3.0480e-01],
          [ 2.1453e-02, 1.0219e-02, 1.0799e-01]]],
        [[[ 6.6237e-03, -1.1856e-02, 2.1806e-01],
          [-1.2661e-01, -1.2371e-01, 2.9971e-01],
          [ 4.9645e-03, 7.6150e-02, -1.2875e-01]]]], device='cuda:0',
       requires_grad=True))]
```

Inspect the mask produced by this pruning step. The masks of all pruning operations are stored in named_buffers(), where 0 means the parameter at that position is dropped and 1 means it is kept:

```python
print(list(module.named_buffers()))
```

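```
[('weight_mask', tensor([[[[1., 1., 0.],
          [1., 0., 0.],
          [1., 0., 1.]]],
        [[[1., 1., 1.],
          [0., 0., 1.],
          [0., 1., 1.]]],
        [[[1., 0., 0.],
          [1., 0., 1.],
          [1., 1., 1.]]],
        [[[1., 1., 1.],
          [1., 1., 0.],
          [1., 1., 1.]]],
        [[[1., 0., 1.],
          [1., 0., 0.],
          [1., 1., 0.]]],
        [[[1., 1., 1.],
          [0., 1., 1.],
          [1., 1., 1.]]]], device='cuda:0'))]
```

Inspect the pruned weight: every entry whose mask value is 0 has been replaced with 0. `module.weight` is now a plain tensor computed as `weight_orig * weight_mask`, and a forward pre-hook recomputes it before each forward pass:

```python
print(module.weight)
```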

```
tensor([[[[ 0.1600, 0.1078, 0.0000],
          [-0.0649, -0.0000, 0.0000],
          [ 0.0857, 0.0000, 0.2098]]],
        [[[-0.2045, 0.0547, 0.2447],
          [ 0.0000, 0.0000, -0.1769],
          [-0.0000, 0.3157, 0.0188]]],
        [[[ 0.3153, 0.0000, 0.0000],
          [ 0.0128, -0.0000, 0.1247],
          [ 0.2040, -0.1166, 0.2596]]],
        [[[-0.0838, -0.2349, -0.2368],
          [-0.3189, -0.2576, 0.0000],
          [-0.2606, -0.1683, 0.0302]]],
        [[[-0.0148, -0.0000, -0.2204],
          [-0.2685, -0.0000, -0.0000],
          [ 0.0215, 0.0102, 0.0000]]],
        [[[ 0.0066, -0.0119, 0.2181],
          [-0.0000, -0.1237, 0.2997],
          [ 0.0050, 0.0762, -0.1288]]]], device='cuda:0',
       grad_fn=<MulBackward0>)
```

(2) Pruning the bias:

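```python
# Prune the bias with L1-norm unstructured pruning: the 3 bias values with the
# smallest absolute value are set to 0 (an integer amount is an absolute count)
prune.l1_unstructured(module, name="bias", amount=3)
```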
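With an integer `amount`, `prune.l1_unstructured` treats it as a count rather than a fraction. The sketch below, again on a fresh stand-in `nn.Conv2d(1, 6, 3)` with illustrative variable names, checks that the 3 zeroed bias entries are exactly the 3 with the smallest absolute value, found independently with `torch.topk`.

```python
import torch
from torch import nn
import torch.nn.utils.prune as prune

torch.manual_seed(0)
conv = nn.Conv2d(1, 6, 3)
original_bias = conv.bias.detach().clone()

prune.l1_unstructured(conv, name="bias", amount=3)  # int amount = number of entries to prune

# Indices of the 3 smallest |b|, computed by hand for comparison
expected = sorted(torch.topk(original_bias.abs(), k=3, largest=False).indices.tolist())
actual = sorted((conv.bias_mask == 0).nonzero().flatten().tolist())
print(expected, actual)  # the two index lists match
print(conv.bias)         # zeros at those indices, other values unchanged
```

Because masks from repeated calls are combined, running the same `l1_unstructured(..., amount=3)` a second time would prune 3 of the remaining 3 unpruned entries as well, zeroing the whole bias.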

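Inspect the bias before and after pruning. named_parameters() now holds the untouched original values in `bias_orig`, while `module.bias` is the pruned result:

```python
print("bias before pruning:", list(module.named_parameters()))
print("bias after pruning:", module.bias)
```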

```
bias before pruning: [('weight_orig', Parameter containing:
tensor([[[[ 1.5996e-01, 1.0775e-01, 2.6948e-01],
          [-6.4919e-02, -1.8932e-01, 2.2985e-02],
          [ 8.5653e-02, 2.9077e-01, 2.0981e-01]]],
        [[[-2.0450e-01, 5.4691e-02, 2.4475e-01],
          [ 3.1142e-01, 1.9070e-01, -1.7687e-01],
          [-3.9149e-02, 3.1566e-01, 1.8814e-02]]],
        [[[ 3.1525e-01, 6.9234e-03, 2.1742e-01],
          [ 1.2825e-02, -1.4790e-01, 1.2469e-01],
          [ 2.0399e-01, -1.1661e-01, 2.5957e-01]]],
        [[[-8.3796e-02, -2.3485e-01, -2.3683e-01],
          [-3.1888e-01, -2.5757e-01, 9.3788e-02],
          [-2.6058e-01, -1.6827e-01, 3.0230e-02]]],
        [[[-1.4831e-02, -2.8372e-04, -2.2037e-01],
          [-2.6850e-01, -1.9531e-01, -3.0480e-01],
          [ 2.1453e-02, 1.0219e-02, 1.0799e-01]]],
        [[[ 6.6237e-03, -1.1856e-02, 2.1806e-01],
          [-1.2661e-01, -1.2371e-01, 2.9971e-01],
          [ 4.9645e-03, 7.6150e-02, -1.2875e-01]]]], device='cuda:0',
       requires_grad=True)), ('bias_orig', Parameter containing:
tensor([ 0.1880, -0.3062, -0.3171, -0.1479, 0.2673, -0.1545], device='cuda:0',
       requires_grad=True))]
bias after pruning: tensor([ 0.0000, -0.3062, -0.3171, -0.0000, 0.2673, -0.0000], device='cuda:0',
       grad_fn=<MulBackward0>)
```

(3) Iterative pruning:

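```python
# Structured pruning of the already-pruned weight along dim=0 (output channels), ranked by L2 norm (n=2):
# amount=0.5 zeroes 50% of the channels, i.e. 3 of conv1's 6 channels,
# and the new mask is combined with the existing random mask
prune.ln_structured(module, name="weight", amount=0.5, n=2, dim=0)
```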
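A sketch of the same two-step sequence on a fresh stand-in `nn.Conv2d(1, 6, 3)` (variable names are illustrative): random unstructured pruning followed by `prune.ln_structured`. It checks two properties that also hold in the output below: entries zeroed by the first step stay zero, because the masks are combined rather than replaced, and at least 3 of the 6 output channels end up entirely zero. The check uses `>= 3` as a precaution: if the random step happened to empty a channel, the structured step only counts the channels that still have nonzero entries.

```python
import torch
from torch import nn
import torch.nn.utils.prune as prune

torch.manual_seed(0)
conv = nn.Conv2d(1, 6, 3)

# Step 1: random unstructured pruning of 30% of the weights
prune.random_unstructured(conv, name="weight", amount=0.3)
mask_before = conv.weight_mask.clone()

# Step 2: structured pruning of 50% of the output channels (dim=0), ranked by L2 norm (n=2)
prune.ln_structured(conv, name="weight", amount=0.5, n=2, dim=0)
mask_after = conv.weight_mask

# Entries pruned in step 1 must still be pruned after step 2
kept_old_zeros = bool((mask_after[mask_before == 0] == 0).all())
# Output channels whose whole 1x3x3 kernel is now zero
zero_channels = int((mask_after.flatten(1).sum(dim=1) == 0).sum())

print(kept_old_zeros, zero_channels)
print([n for n, _ in conv.named_buffers()])  # still a single combined 'weight_mask'
```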

Inspect the weight after pruning:

```python
print(module.weight)
```

```
tensor([[[[ 0.0000, 0.0000, 0.0000],
          [-0.0000, -0.0000, 0.0000],
          [ 0.0000, 0.0000, 0.0000]]],
        [[[-0.2045, 0.0547, 0.2447],
          [ 0.0000, 0.0000, -0.1769],
          [-0.0000, 0.3157, 0.0188]]],
        [[[ 0.3153, 0.0000, 0.0000],
          [ 0.0128, -0.0000, 0.1247],
          [ 0.2040, -0.1166, 0.2596]]],
        [[[-0.0838, -0.2349, -0.2368],
          [-0.3189, -0.2576, 0.0000],
          [-0.2606, -0.1683, 0.0302]]],
        [[[-0.0000, -0.0000, -0.0000],
          [-0.0000, -0.0000, -0.0000],
          [ 0.0000, 0.0000, 0.0000]]],
        [[[ 0.0000, -0.0000, 0.0000],
          [-0.0000, -0.0000, 0.0000],
          [ 0.0000, 0.0000, -0.0000]]]], device='cuda:0',
       grad_fn=<MulBackward0>)
```

(4) Pruning the whole model (each layer is still pruned locally, with its own amount):

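```python
new_model = LeNet()
for name, module in new_model.named_modules():
    # Prune 20% of the weights in every 2D convolutional layer with L1-norm unstructured pruning
    if isinstance(module, torch.nn.Conv2d):
        prune.l1_unstructured(module, name='weight', amount=0.2)
    # Prune 40% of the weights in every linear layer with L1-norm unstructured pruning
    elif isinstance(module, torch.nn.Linear):
        prune.l1_unstructured(module, name='weight', amount=0.4)

# One weight_mask buffer per pruned layer (conv1, conv2, fc1, fc2, fc3)
print(dict(new_model.named_buffers()).keys())
```

References: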

Part 1: What is Pruning in Machine Learning? - Neural Magic

Pruning deep neural networks to make them fast and small

https://towardsdatascience.com/model-compression-via-pruning-ac9b730a7c7b#:~:text=Pruning%20is%20one%20model%20compression,models%20across%20various%20different%20architectures.

https://www.reddit.com/r/deeplearning/comments/dw8x42/dropout_and_pruning/

Weights & Biases

Official site: Pruning Tutorial — PyTorch Tutorials 1.11.0+cu102 documentation