- Kafka 消息丢失如何处理?

架构文摘JGWZ

学习

今天给大家分享一个在面试中经常遇到的问题:Kafka消息丢失该如何处理?这个问题啊,看似简单,其实里面藏着很多“套路”。来,咱们先讲一个面试的“真实”案例。面试官问:“Kafka消息丢失如何处理?”小明一听,反问:“你是怎么发现消息丢失了?”面试官顿时一愣,沉默了片刻后,可能有点不耐烦,说道:“这个你不用管,反正现在发现消息丢失了,你就说如何处理。”小明一头雾水:“问题是都不知道怎么丢的,处理起来

- nosql数据库技术与应用知识点

皆过客,揽星河

NoSQLnosql数据库大数据数据分析数据结构非关系型数据库

Nosql知识回顾大数据处理流程数据采集(flume、爬虫、传感器)数据存储(本门课程NoSQL所处的阶段)Hdfs、MongoDB、HBase等数据清洗(入仓)Hive等数据处理、分析(Spark、Flink等)数据可视化数据挖掘、机器学习应用(Python、SparkMLlib等)大数据时代存储的挑战(三高)高并发(同一时间很多人访问)高扩展(要求随时根据需求扩展存储)高效率(要求读写速度快)

- 【六】阿伟开始搭建Kafka学习环境

能源恒观

中间件学习kafkaspring

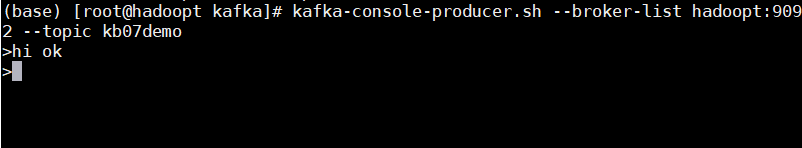

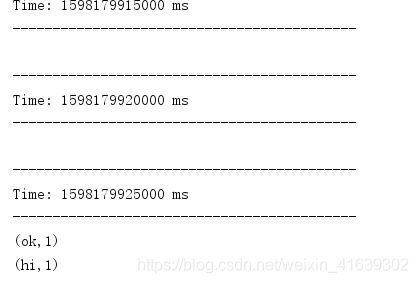

阿伟开始搭建Kafka学习环境概述上一篇文章阿伟学习了Kafka的核心概念,并且把市面上流行的消息中间件特性进行了梳理和对比,方便大家在学习过程中进行对比学习,最后梳理了一些Kafka使用中经常遇到的Kafka难题以及解决思路,经过上一篇的学习我相信大家对Kafka有了初步的认识,本篇将继续学习Kafka。一、安装和配置学习一项技术首先要搭建一套服务,而Kafka的运行主要需要部署jdk、zook

- Java面试题精选:消息队列(二)

芒果不是芒

Java面试题精选javakafka

一、Kafka的特性1.消息持久化:消息存储在磁盘,所以消息不会丢失2.高吞吐量:可以轻松实现单机百万级别的并发3.扩展性:扩展性强,还是动态扩展4.多客户端支持:支持多种语言(Java、C、C++、GO、)5.KafkaStreams(一个天生的流处理):在双十一或者销售大屏就会用到这种流处理。使用KafkaStreams可以快速的把销售额统计出来6.安全机制:Kafka进行生产或者消费的时候会

- Kafka是如何保证数据的安全性、可靠性和分区的

喜欢猪猪

kafka分布式

Kafka作为一个高性能、可扩展的分布式流处理平台,通过多种机制来确保数据的安全性、可靠性和分区的有效管理。以下是关于Kafka如何保证数据安全性、可靠性和分区的详细解析:一、数据安全性SSL/TLS加密:Kafka支持SSL/TLS协议,通过配置SSL证书和密钥来加密数据传输,确保数据在传输过程中不会被窃取或篡改。这一机制有效防止了中间人攻击,保护了数据的安全性。SASL认证:Kafka支持多种

- svg图片兼容性和用法优缺点

独行侠_ef93

svg图片的使用方法第一次来认认真真的研究了下svg图片,之前只是在网上见过,但都是一晃而过也没当回事,最近网站改版看到同事有用到svg格式的图片,想想自己干了几年的重构也没用过,这些细节的知识是应该好好研究研究了。暂时还没研究得完全透切,先记下目前为止所看到的吧不然又给忘了。svg可缩放矢量图形(ScalableVectorGraphics),顾名思义就是任意改变其大小也不会变形,是基于可扩展标

- Kafka详细解析与应用分析

芊言芊语

kafka分布式

Kafka是一个开源的分布式事件流平台(EventStreamingPlatform),由LinkedIn公司最初采用Scala语言开发,并基于ZooKeeper协调管理。如今,Kafka已经被Apache基金会纳入其项目体系,广泛应用于大数据实时处理领域。Kafka凭借其高吞吐量、持久化、分布式和可靠性的特点,成为构建实时流数据管道和流处理应用程序的重要工具。Kafka架构Kafka的架构主要由

- 分享一个基于python的电子书数据采集与可视化分析 hadoop电子书数据分析与推荐系统 spark大数据毕设项目(源码、调试、LW、开题、PPT)

计算机源码社

Python项目大数据大数据pythonhadoop计算机毕业设计选题计算机毕业设计源码数据分析spark毕设

作者:计算机源码社个人简介:本人八年开发经验,擅长Java、Python、PHP、.NET、Node.js、Android、微信小程序、爬虫、大数据、机器学习等,大家有这一块的问题可以一起交流!学习资料、程序开发、技术解答、文档报告如需要源码,可以扫取文章下方二维码联系咨询Java项目微信小程序项目Android项目Python项目PHP项目ASP.NET项目Node.js项目选题推荐项目实战|p

- Kafka 基础与架构理解

StaticKing

KAFKAkafka

目录前言Kafka基础概念消息队列简介:Kafka与传统消息队列(如RabbitMQ、ActiveMQ)的对比Kafka的组件Kafka的工作原理:消息的生产、分发、消费流程Kafka系统架构Kafka的分布式架构设计Leader-Follower机制与数据复制Log-basedStorage和持久化Broker间通信协议Zookeeper在Kafka中的角色总结前言Kafka是一个分布式的消息系

- Spark 组件 GraphX、Streaming

叶域

大数据sparkspark大数据分布式

Spark组件GraphX、Streaming一、SparkGraphX1.1GraphX的主要概念1.2GraphX的核心操作1.3示例代码1.4GraphX的应用场景二、SparkStreaming2.1SparkStreaming的主要概念2.2示例代码2.3SparkStreaming的集成2.4SparkStreaming的应用场景SparkGraphX用于处理图和图并行计算。Graph

- 探索未来,大规模分布式深度强化学习——深入解析IMPALA架构

汤萌妮Margaret

探索未来,大规模分布式深度强化学习——深入解析IMPALA架构scalable_agent项目地址:https://gitcode.com/gh_mirrors/sc/scalable_agent在当今的人工智能研究前沿,深度强化学习(DRL)因其在复杂任务中的卓越表现而备受瞩目。本文要介绍的是一个开源于GitHub的重量级项目:“ScalableDistributedDeep-RLwithImp

- 全面指南:用户行为从前端数据采集到实时处理的最佳实践

数字沉思

营销流量运营系统架构前端内容运营大数据

引言在当今的数据驱动世界,实时数据采集和处理已经成为企业做出及时决策的重要手段。本文将详细介绍如何通过前端JavaScript代码采集用户行为数据、利用API和Kafka进行数据传输、通过Flink实时处理数据的完整流程。无论你是想提升产品体验还是做用户行为分析,这篇文章都将为你提供全面的解决方案。设计一个通用的ClickHouse表来存储用户事件时,需要考虑多种因素,包括事件类型、时间戳、用户信

- 车载以太网之SOME/IP

IT_码农

车载以太网车载以太网SOME/IP

整体介绍SOME/IP(全称为:Scalableservice-OrientedMiddlewarEoverIP),是运行在车载以太网协议栈基础之上的中间件,或者也可以称为应用层软件。发展历程AUTOSAR4.0-完成宝马SOME/IP消息的初步集成;AUTOSAR4.1-支持SOME/IP-SD及其发布/订阅功能;AUTOSAR4.2-添加transformer用于序列化以及其他相关优化;AUT

- 大数据毕业设计hadoop+spark+hive知识图谱租房数据分析可视化大屏 租房推荐系统 58同城租房爬虫 房源推荐系统 房价预测系统 计算机毕业设计 机器学习 深度学习 人工智能

2401_84572577

程序员大数据hadoop人工智能

做了那么多年开发,自学了很多门编程语言,我很明白学习资源对于学一门新语言的重要性,这些年也收藏了不少的Python干货,对我来说这些东西确实已经用不到了,但对于准备自学Python的人来说,或许它就是一个宝藏,可以给你省去很多的时间和精力。别在网上瞎学了,我最近也做了一些资源的更新,只要你是我的粉丝,这期福利你都可拿走。我先来介绍一下这些东西怎么用,文末抱走。(1)Python所有方向的学习路线(

- Docker安装Kafka和Kafka-Manager

阿靖哦

本文介绍如何通过Docker安装kafka与kafka界面管理界面一、拉取zookeeper由于kafka需要依赖于zookeeper,因此这里先运行zookeeper1、拉取镜像dockerpullwurstmeister/zookeeper2、启动dockerrun-d--namezookeeper-p2181:2181-eTZ="Asia/Shanghai"--restartalwayswu

- 主流行架构

rainbowcheng

架构架构

nexus,gitlab,svn,jenkins,sonar,docker,apollo,catteambition,axure,蓝湖,禅道,WCP;redis,kafka,es,zookeeper,dubbo,shardingjdbc,mysql,InfluxDB,Telegraf,Grafana,Nginx,xxl-job,Neo4j,NebulaGraph是一个高性能的,NOSQL图形数据库

- Spark集群的三种模式

MelodyYN

#Sparksparkhadoopbigdata

文章目录1、Spark的由来1.1Hadoop的发展1.2MapReduce与Spark对比2、Spark内置模块3、Spark运行模式3.1Standalone模式部署配置历史服务器配置高可用运行模式3.2Yarn模式安装部署配置历史服务器运行模式4、WordCount案例1、Spark的由来定义:Hadoop主要解决,海量数据的存储和海量数据的分析计算。Spark是一种基于内存的快速、通用、可

- 月度总结 | 2022年03月 | 考研与就业的抉择 | 确定未来走大数据开发路线

「已注销」

个人总结hadoop

一、时间线梳理3月3日,寻找到同专业的就业伙伴3月5日,着手准备Java八股文,决定先走Java后端路线3月8月,申请到了校图书馆的考研专座,决定暂时放弃就业,先准备考研,买了数学和408的资料书3月9日-3月13日,因疫情原因,宿舍区暂封,这段时间在准备考研,发现内容特别多3月13日-3月19日,大部分时间在刷Hadoop、Zookeeper、Kafka的视频,同时在准备实习的项目3月20日,退

- 分布式消息队列Kafka

叶域

大数据分布式kafkascalaspark

分布式消息队列Kafka简介:Kafka是一个分布式消息队列系统,用于处理实时数据流。消息按照主题(Topic)进行分类存储,发送消息的实体称为Producer,接收消息的实体称为Consumer。Kafka集群由多个Kafka实例(Server)组成,每个实例称为Broker。主要用途:广泛应用于构建实时数据管道和流应用程序,适用于需要高吞吐量和低延迟的数据处理场景依赖:Kafka集群和消费者依

- Java中的大数据处理框架对比分析

省赚客app开发者

java开发语言

Java中的大数据处理框架对比分析大家好,我是微赚淘客系统3.0的小编,是个冬天不穿秋裤,天冷也要风度的程序猿!今天,我们将深入探讨Java中常用的大数据处理框架,并对它们进行对比分析。大数据处理框架是现代数据驱动应用的核心,它们帮助企业处理和分析海量数据,以提取有价值的信息。本文将重点介绍ApacheHadoop、ApacheSpark、ApacheFlink和ApacheStorm这四种流行的

- K8S学习之PV&&PVC

david161

部署mysql之前我们需要先了解一个概念有状态服务。这是一种特殊的服务,简单的归纳下就是会产生需要持久化的数据,并且有很强的I/O需求,且重启需要依赖上次存储到磁盘的数据。如典型的mysql,kafka,zookeeper等等。在我们有比较优秀的商业存储的前提下,非常推荐使用有状态服务进行部署,计算和存储分离那是相当的爽的。在实际生产中如果没有这种存储,localPV也是不错的选择,当然local

- PostgreSQL进阶教程

爱分享的码瑞哥

postgresql

PostgreSQL进阶教程目录事务和并发控制事务事务隔离级别锁高级查询联合查询窗口函数子查询CTE(公用表表达式)数据类型自定义数据类型数组JSON高级索引部分索引表达式索引GIN和GiST索引性能调优查询优化配置优化备份与恢复物理备份逻辑备份扩展与插件PostGISpg_cron集群与高可用StreamingReplicationPatroni事务和并发控制事务事务是一个或多个SQL语句的组合

- ExoPlayer简单使用

csdn_zxw

安卓视频播放android

ExoPlayerLibrary概述ExoPlayer是运行在YouTubeappAndroid版本上的视频播放器ExoPlayer是构建在Android低水平媒体API之上的一个应用层媒体播放器。和Android内置的媒体播放器相比,ExoPlayer有许多优点。ExoPlayer支持内置的媒体播放器支持的所有格式外加自适应格式DASH和SmoothStreaming。ExoPlayer可以被高

- 写出渗透测试信息收集详细流程

卿酌南烛_b805

一、扫描域名漏洞:域名漏洞扫描工具有AWVS、APPSCAN、Netspark、WebInspect、Nmap、Nessus、天镜、明鉴、WVSS、RSAS等。二、子域名探测:1、dns域传送漏洞2、搜索引擎查找(通过Google、bing、搜索c段)3、通过ssl证书查询网站:https://myssl.com/ssl.html和https://www.chinassl.net/ssltools

- Scala学习之旅-对Option友好的flatMap

喝冰咖啡

scala学习

聊点什么OptionflatMapvs.OptionOption的作用在Java/Scala中,Optional/Option(本文还是以scala代码为例)是用来表示某个对象存在或者不存在,也就是说,Option是某个类型T的Wrapper,如果T!=null,Option(T).isDefined==true如果T==null,Option(T).isEmpty==true有了Option这层

- Spark MLlib模型训练—推荐算法 ALS(Alternative Least Squares)

不二人生

SparkML实战spark-ml推荐算法算法

SparkMLlib模型训练—推荐算法ALS(AlternativeLeastSquares)如果你平时爱刷抖音,或者热衷看电影,不知道有没有过这样的体验:这类影视App你用得越久,它就好像会读心术一样,总能给你推荐对胃口的内容。其实这种迎合用户喜好的推荐,离不开机器学习中的推荐算法。在今天这一讲,我们就结合两个有趣的电影推荐场景,为你讲解SparkMLlib支持的协同过滤与频繁项集算法电影推荐场

- Kafka系列之:kafka命令详细总结

快乐骑行^_^

日常分享专栏KafkaKafka系列kafka命令详细总结

Kafka系列之:kafka命令详细总结一、添加和删除topic二、修改topic三、平衡领导者四、检查消费者位置五、管理消费者群体一、添加和删除topicbin/kafka-topics.sh--bootstrap-serverbroker_host:port--create--topicmy_topic_name\--partitions20--replication-factor3--con

- 「经济学人」Streaming-video wars

英语学习社

GameofphonesHBOwillleadAT&T’schallengetoNetflixTimeWarner’scrownjewelmustscaleupwhilemaintainingqualityINLATE2012,justbeforethereleaseof“HouseofCards”,TedSarandos,chiefcontentofficerofNetflix,declared

- 搭建Kafka+zookeeper集群调度

krb___

kafka分布式

前言硬件环境172.18.0.5kafkazk1Kafka+zookeeperKafkaBroker集群172.18.0.6kafkazk2Kafka+zookeeperKafkaBroker集群172.18.0.7kafkazk3Kafka+zookeeperKafkaBroker集群软件环境zookeeper3.5.9资源调度、写作Kafka2.8.0消息通信中间件安装JDK1.8安装搭建zo

- 【HDFS】【HDFS架构】【HDFS Architecture】【架构】

资源存储库

hdfs架构hadoop

目录1Introduction介绍2AssumptionsandGoals假设和目标HardwareFailure硬件故障StreamingDataAccess流式数据访问LargeDataSets大型数据集SimpleCoherencyModel简单凝聚力模型“MovingComputationisCheaperthanMovingData”“移动计算比移动数据更便宜”PortabilityAc

- 算法 单链的创建与删除

换个号韩国红果果

c算法

先创建结构体

struct student {

int data;

//int tag;//标记这是第几个

struct student *next;

};

// addone 用于将一个数插入已从小到大排好序的链中

struct student *addone(struct student *h,int x){

if(h==NULL) //??????

- 《大型网站系统与Java中间件实践》第2章读后感

白糖_

java中间件

断断续续花了两天时间试读了《大型网站系统与Java中间件实践》的第2章,这章总述了从一个小型单机构建的网站发展到大型网站的演化过程---整个过程会遇到很多困难,但每一个屏障都会有解决方案,最终就是依靠这些个解决方案汇聚到一起组成了一个健壮稳定高效的大型系统。

看完整章内容,

- zeus持久层spring事务单元测试

deng520159

javaDAOspringjdbc

今天把zeus事务单元测试放出来,让大家指出他的毛病,

1.ZeusTransactionTest.java 单元测试

package com.dengliang.zeus.webdemo.test;

import java.util.ArrayList;

import java.util.List;

import org.junit.Test;

import

- Rss 订阅 开发

周凡杨

htmlxml订阅rss规范

RSS是 Really Simple Syndication的缩写(对rss2.0而言,是这三个词的缩写,对rss1.0而言则是RDF Site Summary的缩写,1.0与2.0走的是两个体系)。

RSS

- 分页查询实现

g21121

分页查询

在查询列表时我们常常会用到分页,分页的好处就是减少数据交换,每次查询一定数量减少数据库压力等等。

按实现形式分前台分页和服务器分页:

前台分页就是一次查询出所有记录,在页面中用js进行虚拟分页,这种形式在数据量较小时优势比较明显,一次加载就不必再访问服务器了,但当数据量较大时会对页面造成压力,传输速度也会大幅下降。

服务器分页就是每次请求相同数量记录,按一定规则排序,每次取一定序号直接的数据

- spring jms异步消息处理

510888780

jms

spring JMS对于异步消息处理基本上只需配置下就能进行高效的处理。其核心就是消息侦听器容器,常用的类就是DefaultMessageListenerContainer。该容器可配置侦听器的并发数量,以及配合MessageListenerAdapter使用消息驱动POJO进行消息处理。且消息驱动POJO是放入TaskExecutor中进行处理,进一步提高性能,减少侦听器的阻塞。具体配置如下:

- highCharts柱状图

布衣凌宇

hightCharts柱图

第一步:导入 exporting.js,grid.js,highcharts.js;第二步:写controller

@Controller@RequestMapping(value="${adminPath}/statistick")public class StatistickController { private UserServi

- 我的spring学习笔记2-IoC(反向控制 依赖注入)

aijuans

springmvcSpring 教程spring3 教程Spring 入门

IoC(反向控制 依赖注入)这是Spring提出来了,这也是Spring一大特色。这里我不用多说,我们看Spring教程就可以了解。当然我们不用Spring也可以用IoC,下面我将介绍不用Spring的IoC。

IoC不是框架,她是java的技术,如今大多数轻量级的容器都会用到IoC技术。这里我就用一个例子来说明:

如:程序中有 Mysql.calss 、Oracle.class 、SqlSe

- TLS java简单实现

antlove

javasslkeystoretlssecure

1. SSLServer.java

package ssl;

import java.io.FileInputStream;

import java.io.InputStream;

import java.net.ServerSocket;

import java.net.Socket;

import java.security.KeyStore;

import

- Zip解压压缩文件

百合不是茶

Zip格式解压Zip流的使用文件解压

ZIP文件的解压缩实质上就是从输入流中读取数据。Java.util.zip包提供了类ZipInputStream来读取ZIP文件,下面的代码段创建了一个输入流来读取ZIP格式的文件;

ZipInputStream in = new ZipInputStream(new FileInputStream(zipFileName));

&n

- underscore.js 学习(一)

bijian1013

JavaScriptunderscore

工作中需要用到underscore.js,发现这是一个包括了很多基本功能函数的js库,里面有很多实用的函数。而且它没有扩展 javascript的原生对象。主要涉及对Collection、Object、Array、Function的操作。 学

- java jvm常用命令工具——jstatd命令(Java Statistics Monitoring Daemon)

bijian1013

javajvmjstatd

1.介绍

jstatd是一个基于RMI(Remove Method Invocation)的服务程序,它用于监控基于HotSpot的JVM中资源的创建及销毁,并且提供了一个远程接口允许远程的监控工具连接到本地的JVM执行命令。

jstatd是基于RMI的,所以在运行jstatd的服务

- 【Spring框架三】Spring常用注解之Transactional

bit1129

transactional

Spring可以通过注解@Transactional来为业务逻辑层的方法(调用DAO完成持久化动作)添加事务能力,如下是@Transactional注解的定义:

/*

* Copyright 2002-2010 the original author or authors.

*

* Licensed under the Apache License, Version

- 我(程序员)的前进方向

bitray

程序员

作为一个普通的程序员,我一直游走在java语言中,java也确实让我有了很多的体会.不过随着学习的深入,java语言的新技术产生的越来越多,从最初期的javase,我逐渐开始转变到ssh,ssi,这种主流的码农,.过了几天为了解决新问题,webservice的大旗也被我祭出来了,又过了些日子jms架构的activemq也开始必须学习了.再后来开始了一系列技术学习,osgi,restful.....

- nginx lua开发经验总结

ronin47

使用nginx lua已经两三个月了,项目接开发完毕了,这几天准备上线并且跟高德地图对接。回顾下来lua在项目中占得必中还是比较大的,跟PHP的占比差不多持平了,因此在开发中遇到一些问题备忘一下 1:content_by_lua中代码容量有限制,一般不要写太多代码,正常编写代码一般在100行左右(具体容量没有细心测哈哈,在4kb左右),如果超出了则重启nginx的时候会报 too long pa

- java-66-用递归颠倒一个栈。例如输入栈{1,2,3,4,5},1在栈顶。颠倒之后的栈为{5,4,3,2,1},5处在栈顶

bylijinnan

java

import java.util.Stack;

public class ReverseStackRecursive {

/**

* Q 66.颠倒栈。

* 题目:用递归颠倒一个栈。例如输入栈{1,2,3,4,5},1在栈顶。

* 颠倒之后的栈为{5,4,3,2,1},5处在栈顶。

*1. Pop the top element

*2. Revers

- 正确理解Linux内存占用过高的问题

cfyme

linux

Linux开机后,使用top命令查看,4G物理内存发现已使用的多大3.2G,占用率高达80%以上:

Mem: 3889836k total, 3341868k used, 547968k free, 286044k buffers

Swap: 6127608k total,&nb

- [JWFD开源工作流]当前流程引擎设计的一个急需解决的问题

comsci

工作流

当我们的流程引擎进入IRC阶段的时候,当循环反馈模型出现之后,每次循环都会导致一大堆节点内存数据残留在系统内存中,循环的次数越多,这些残留数据将导致系统内存溢出,并使得引擎崩溃。。。。。。

而解决办法就是利用汇编语言或者其它系统编程语言,在引擎运行时,把这些残留数据清除掉。

- 自定义类的equals函数

dai_lm

equals

仅作笔记使用

public class VectorQueue {

private final Vector<VectorItem> queue;

private class VectorItem {

private final Object item;

private final int quantity;

public VectorI

- Linux下安装R语言

datageek

R语言 linux

命令如下:sudo gedit /etc/apt/sources.list1、deb http://mirrors.ustc.edu.cn/CRAN/bin/linux/ubuntu/ precise/ 2、deb http://dk.archive.ubuntu.com/ubuntu hardy universesudo apt-key adv --keyserver ke

- 如何修改mysql 并发数(连接数)最大值

dcj3sjt126com

mysql

MySQL的连接数最大值跟MySQL没关系,主要看系统和业务逻辑了

方法一:进入MYSQL安装目录 打开MYSQL配置文件 my.ini 或 my.cnf查找 max_connections=100 修改为 max_connections=1000 服务里重起MYSQL即可

方法二:MySQL的最大连接数默认是100客户端登录:mysql -uusername -ppass

- 单一功能原则

dcj3sjt126com

面向对象的程序设计软件设计编程原则

单一功能原则[

编辑]

SOLID 原则

单一功能原则

开闭原则

Liskov代换原则

接口隔离原则

依赖反转原则

查

论

编

在面向对象编程领域中,单一功能原则(Single responsibility principle)规定每个类都应该有

- POJO、VO和JavaBean区别和联系

fanmingxing

VOPOJOjavabean

POJO和JavaBean是我们常见的两个关键字,一般容易混淆,POJO全称是Plain Ordinary Java Object / Plain Old Java Object,中文可以翻译成:普通Java类,具有一部分getter/setter方法的那种类就可以称作POJO,但是JavaBean则比POJO复杂很多,JavaBean是一种组件技术,就好像你做了一个扳子,而这个扳子会在很多地方被

- SpringSecurity3.X--LDAP:AD配置

hanqunfeng

SpringSecurity

前面介绍过基于本地数据库验证的方式,参考http://hanqunfeng.iteye.com/blog/1155226,这里说一下如何修改为使用AD进行身份验证【只对用户名和密码进行验证,权限依旧存储在本地数据库中】。

将配置文件中的如下部分删除:

<!-- 认证管理器,使用自定义的UserDetailsService,并对密码采用md5加密-->

- mac mysql 修改密码

IXHONG

mysql

$ sudo /usr/local/mysql/bin/mysqld_safe –user=root & //启动MySQL(也可以通过偏好设置面板来启动)$ sudo /usr/local/mysql/bin/mysqladmin -uroot password yourpassword //设置MySQL密码(注意,这是第一次MySQL密码为空的时候的设置命令,如果是修改密码,还需在-

- 设计模式--抽象工厂模式

kerryg

设计模式

抽象工厂模式:

工厂模式有一个问题就是,类的创建依赖于工厂类,也就是说,如果想要拓展程序,必须对工厂类进行修改,这违背了闭包原则。我们采用抽象工厂模式,创建多个工厂类,这样一旦需要增加新的功能,直接增加新的工厂类就可以了,不需要修改之前的代码。

总结:这个模式的好处就是,如果想增加一个功能,就需要做一个实现类,

- 评"高中女生军训期跳楼”

nannan408

首先,先抛出我的观点,各位看官少点砖头。那就是,中国的差异化教育必须做起来。

孔圣人有云:有教无类。不同类型的人,都应该有对应的教育方法。目前中国的一体化教育,不知道已经扼杀了多少创造性人才。我们出不了爱迪生,出不了爱因斯坦,很大原因,是我们的培养思路错了,我们是第一要“顺从”。如果不顺从,我们的学校,就会用各种方法,罚站,罚写作业,各种罚。军

- scala如何读取和写入文件内容?

qindongliang1922

javajvmscala

直接看如下代码:

package file

import java.io.RandomAccessFile

import java.nio.charset.Charset

import scala.io.Source

import scala.reflect.io.{File, Path}

/**

* Created by qindongliang on 2015/

- C语言算法之百元买百鸡

qiufeihu

c算法

中国古代数学家张丘建在他的《算经》中提出了一个著名的“百钱买百鸡问题”,鸡翁一,值钱五,鸡母一,值钱三,鸡雏三,值钱一,百钱买百鸡,问翁,母,雏各几何?

代码如下:

#include <stdio.h>

int main()

{

int cock,hen,chick; /*定义变量为基本整型*/

for(coc

- Hadoop集群安全性:Hadoop中Namenode单点故障的解决方案及详细介绍AvatarNode

wyz2009107220

NameNode

正如大家所知,NameNode在Hadoop系统中存在单点故障问题,这个对于标榜高可用性的Hadoop来说一直是个软肋。本文讨论一下为了解决这个问题而存在的几个solution。

1. Secondary NameNode

原理:Secondary NN会定期的从NN中读取editlog,与自己存储的Image进行合并形成新的metadata image

优点:Hadoop较早的版本都自带,