使用EfficientNetB0网络分类乳腺癌图片

一、数据集选取

本文进行使用EfficientNetB0网络对乳腺癌图片进行分类,分为无肿瘤,良性肿瘤,恶性肿瘤。

数据集为:数据集|阿里·法米 (cu.edu.eg)

Al-Dhabyani W, Gomaa M, Khaled H, Fahmy A. Dataset of breast ultrasound images. Data in Brief. 2020 Feb;28:104863. DOI: 10.1016/j.dib.2019.104863.

二、数据处理

数据处理借鉴了此篇博客:

基于VGGNet乳腺超声图像数据集分析_欸嘿哟吼的博客-CSDN博客_busi数据集

此文引用部分代码:

import numpy as np

import matplotlib.pyplot as plt

import glob, os

import tensorflow as tf

from tensorflow.keras import layers, activations, optimizers, losses, metrics, initializers

from tensorflow.keras.preprocessing import image, image_dataset_from_directory

os.environ["CUDA_VISIBLE_DEVICES"] = "1"

dir_path = 'C:/Users/Administrator/Desktop/SRP_1/image_1/'

IMAGE_SHAPE = (224, 224)

directories = os.listdir(dir_path)

files = []

labels = []

for folder in directories:

fileList = glob.glob(dir_path + '/' + folder + '/*')

labels.extend([folder for l in fileList])

files.extend(fileList)

selected_files = []

selected_labels = []

for file, label in zip(files, labels):

if 'mask' not in file:

selected_files.append(file)

selected_labels.append(label)

def prepare_image(file):

img = image.load_img(file, target_size=IMAGE_SHAPE)

img_array = image.img_to_array(img)

return tf.keras.applications.efficientnet.preprocess_input(img_array)

images = {

'image': [],

'target': []

}

for i, (file, label) in enumerate(zip(selected_files,selected_labels)):

images['image'].append(prepare_image(file))

images['target'].append(label)

images['image'] = np.array(images['image'])

images['target'] = np.array(images['target'])

from sklearn.preprocessing import LabelEncoder

le = LabelEncoder()

images['target'] = le.fit_transform(images['target'])

classes = le.classes_

print(f'标签数据(target)有: {classes}')此数据集中,normal的图片数量最少,只有一百多张(不包括掩模图),为了保证学习效果,我将加入模型中训练的benign类和malignant类图片也都减少到一百多。使用以上方法分割数据会得到有序数据集。

三、模型搭建

1、首先进行训练集和测试集的分类,此分类还可以保证得到的是打乱重排的数据集,以避免sparse_categorical_accuracy和val_sparse_categorical_accuracy出现极度不匹配情况。

from sklearn.model_selection import train_test_split

x_train, x_test, y_train, y_test = train_test_split(images['image'], images['target'],test_size=0.15)2、模型搭建

(1)、调用了keras自带的EfficientNetB0,并进行自己训练层的添加。

(2)、进行归一化,添加BatchNormalization层

(3)、使用switch激活函数

(4)、进行全局平均池化,添加GlobalAveragePooling2D层

(5)、进行正则化,添加Dropout层

(6)、添加1000个神经元,使用relu激活函数

(7)、输出3类,使用softmax激活函数

from tensorflow.keras.applications import EfficientNetB0

from tensorflow.keras import models, layers

def modelEfficientNetB0():

model = models.Sequential()

model.add(EfficientNetB0(include_top=False, weights="imagenet",

input_shape=(*IMAGE_SHAPE, 3)))

model.add(layers.BatchNormalization())

model.add(layers.Activation('swish'))

model.add(layers.GlobalAveragePooling2D())

model.add(layers.Dropout(rate=0.5))

model.add(layers.Dense(1000, activation='relu'))

model.add(layers.Dense(3, activation="softmax"))

return model

model = modelEfficientNetB0()

model.summary()3、优化器、损失函数等的配置

model.compile(optimizer=optimizers.SGD(0.1), loss=losses.sparse_categorical_crossentropy,

metrics=[metrics.SparseCategoricalAccuracy()])4、查看训练过程

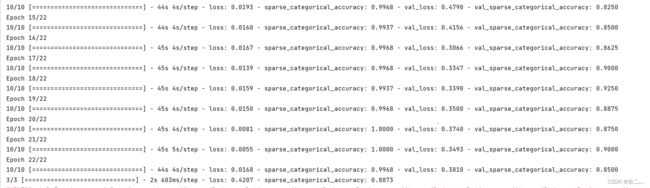

history = model.fit(x=x_train,y=y_train,epochs=20,validation_split=0.2,batch_size=32)

val_loss,val_sparse_categorical_accuracy=model.evaluate(x_test,y_test)5、保存模型

model.save("model", include_optimizer=True)6、打印loss,acc等

plt.plot(history.history['sparse_categorical_accuracy'])

plt.plot(history.history['val_sparse_categorical_accuracy'])

plt.title('Model Accuracy')

plt.ylabel('acc')

plt.xlabel('epoch')

plt.legend(['train', 'test'], loc='upper left')

plt.show()

plt.plot(history.history['loss'])

plt.plot(history.history['val_loss'])

plt.title('Model Loss')

plt.ylabel('loss')

plt.xlabel('epoch')

plt.legend(['train', 'test'], loc='upper left')

plt.show()四、完整代码

import numpy as np

import matplotlib.pyplot as plt

import glob, os

import tensorflow as tf

from tensorflow.keras import layers, activations, optimizers, losses, metrics, initializers

from tensorflow.keras.preprocessing import image, image_dataset_from_directory

os.environ["CUDA_VISIBLE_DEVICES"] = "1"

dir_path = 'C:/Users/Administrator/Desktop/SRP_1/image_1/'

IMAGE_SHAPE = (224, 224)

directories = os.listdir(dir_path)

files = []

labels = []

for folder in directories:

fileList = glob.glob(dir_path + '/' + folder + '/*')

labels.extend([folder for l in fileList])

files.extend(fileList)

selected_files = []

selected_labels = []

for file, label in zip(files, labels):

if 'mask' not in file:

selected_files.append(file)

selected_labels.append(label)

def prepare_image(file):

img = image.load_img(file, target_size=IMAGE_SHAPE)

img_array = image.img_to_array(img)

return tf.keras.applications.efficientnet.preprocess_input(img_array)

images = {

'image': [],

'target': []

}

for i, (file, label) in enumerate(zip(selected_files,selected_labels)):

images['image'].append(prepare_image(file))

images['target'].append(label)

images['image'] = np.array(images['image'])

images['target'] = np.array(images['target'])

from sklearn.preprocessing import LabelEncoder

le = LabelEncoder()

images['target'] = le.fit_transform(images['target'])

classes = le.classes_

print(f'标签数据(target)有: {classes}')

from sklearn.model_selection import train_test_split

x_train, x_test, y_train, y_test = train_test_split(images['image'], images['target'],test_size=0.15)

#print("x_train",x_train, "x_test",x_test, "y_train",y_train, "y_test",y_test)

from tensorflow.keras.applications import EfficientNetB0

from tensorflow.keras import models, layers

def modelEfficientNetB0():

model = models.Sequential()

model.add(EfficientNetB0(include_top=False, weights="imagenet",

input_shape=(*IMAGE_SHAPE, 3)))

model.add(layers.BatchNormalization())

model.add(layers.Activation('swish'))

model.add(layers.GlobalAveragePooling2D())

model.add(layers.Dropout(rate=0.5))

model.add(layers.Dense(1000, activation='relu'))

model.add(layers.Dense(3, activation="softmax"))

return model

model = modelEfficientNetB0()

#model.summary()

# compile the model

model.compile(optimizer=optimizers.SGD(0.1), loss=losses.sparse_categorical_crossentropy,

metrics=[metrics.SparseCategoricalAccuracy()])

history = model.fit(x=x_train,y=y_train,epochs=20,validation_split=0.2,batch_size=32)

val_loss,val_sparse_categorical_accuracy=model.evaluate(x_test,y_test)

model.save("model_g_1", include_optimizer=True)

plt.plot(history.history['sparse_categorical_accuracy'])

plt.plot(history.history['val_sparse_categorical_accuracy'])

plt.title('Model Accuracy')

plt.ylabel('acc')

plt.xlabel('epoch')

plt.legend(['train', 'test'], loc='upper left')

plt.show()

plt.plot(history.history['loss'])

plt.plot(history.history['val_loss'])

plt.title('Model Loss')

plt.ylabel('loss')

plt.xlabel('epoch')

plt.legend(['train', 'test'], loc='upper left')

plt.show()五、准确率

最终训练准确率可以达到80%以上,效果还是可以的