spark第二章:sparkcore实例

系列文章目录

spark第一章:环境安装

spark第二章:sparkcore实例

文章目录

- 系列文章目录

- 前言

- 一、idea创建项目

- 二、编写实例

-

- 1.WordCount

- 2.RDD实例

- 3.Spark实例

- 总结

前言

上次我们搭建了环境,现在就要开始上实例,这次拖了比较长的时间,实在是sparkcore的知识点有点多,而且例子有些复杂,尽自己最大的能力说清楚,说不清楚也没办法了。

一、idea创建项目

这个可以参考我搭建scala环境

Scala第一章:环境搭建

pom添加需要的依赖

org.apache.spark

spark-core_2.12

3.2.3

二、编写实例

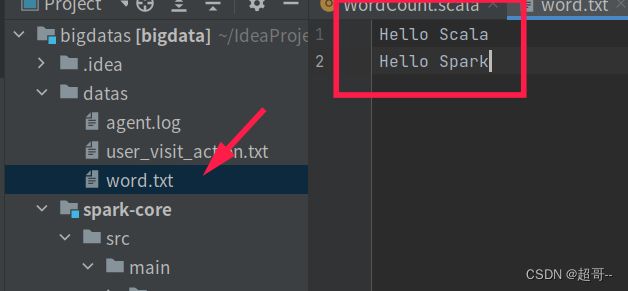

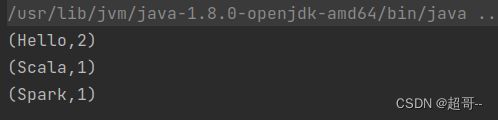

1.WordCount

package com.atguigu.bigdata.spark.core.rdd.wc

import org.apache.spark.rdd.RDD

import org.apache.spark.{SparkConf, SparkContext}

object WordCount {

def main(args: Array[String]): Unit = {

// 创建 Spark 运行配置对象

val sparkConf: SparkConf = new SparkConf().setMaster("local[*]").setAppName("WordCount")

// 创建 Spark 上下文环境对象(连接对象)

val sc : SparkContext = new SparkContext(sparkConf)

// 读取文件 获取一行一行的数据

val lines: RDD[String] = sc.textFile("datas/word.txt")

// 将一行数据进行拆分

val words: RDD[String] = lines.flatMap(_.split(" "))

// 将数据根据单次进行分组,便于统计

val wordToOne: RDD[(String, Int)] = words.map(word => (word, 1))

// 对分组后的数据进行转换

val wordToSum: RDD[(String, Int)] = wordToOne.reduceByKey(_ + _)

// 打印输出

val array: Array[(String, Int)] = wordToSum.collect()

array.foreach(println)

sc.stop()

}

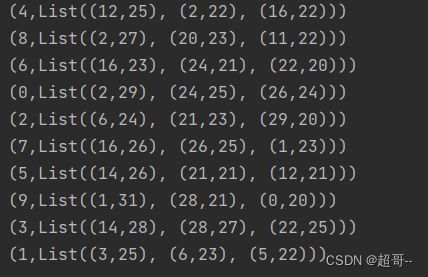

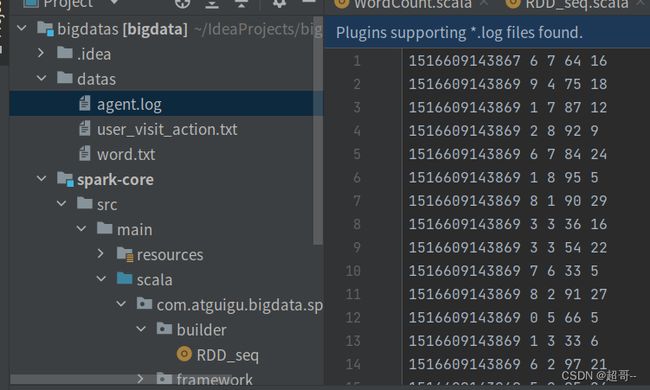

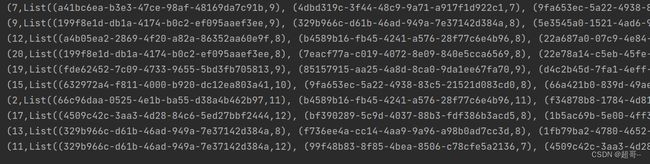

2.RDD实例

1.数据准备

agent.log:时间戳,省份,城市,用户,广告,中间字段使用空格分隔。

实验数据可以到尚硅谷的平台寻找。

RDD_seq.scala

package com.atguigu.bigdata.spark.core.rdd.builder

import org.apache.spark.rdd.RDD

import org.apache.spark.{SparkConf, SparkContext}

object RDD_seq {

def main(args: Array[String]): Unit = {

val sparkConf: SparkConf = new SparkConf().setMaster("local[*]").setAppName("Operator")

val sc = new SparkContext(sparkConf)

//1.获取原始数据

val dataRDD: RDD[String] = sc.textFile("datas/agent.log")

//2.将原始数据进行结构的转换。方便统计

// 时间戳 省份 城市 用户 广告 =>

// ((省份,广告),1)

val mapRDD: RDD[((String, String), Int)] = dataRDD.map(

(line: String) => {

val datas: Array[String] = line.split(" ")

((datas(1), datas(4)), 1)

}

)

//3.将转换结构厚的数据进行分组聚合

// ((省份,广告),1)=>((省份,广告),sum)

val reduceRDD: RDD[((String, String), Int)] = mapRDD.reduceByKey((_: Int) + (_: Int))

//4.将聚合的结果进行结构的转换

// ((省份,广告),sum) =>(省份,(广告,sum))

val newMapRDD: RDD[(String, (String, Int))] = reduceRDD.map {

case ((prv, ad), sum) =>

(prv, (ad, sum))

}

//5.将转换结构后的数据根据省份进行分组

//(省份,[(广告A,sumA)(广告B,sumB)])

val groupRDD: RDD[(String, Iterable[(String, Int)])] = newMapRDD.groupByKey()

//6.将分组后的数据组内排序(降序) 取前三名

val resultRDD: RDD[(String, List[(String, Int)])] = groupRDD.mapValues(

(iter: Iterable[(String, Int)]) => {

iter.toList.sortBy((_: (String, Int))._2)(Ordering.Int.reverse).take(3)

}

)

//7.采集数据打印在控制台

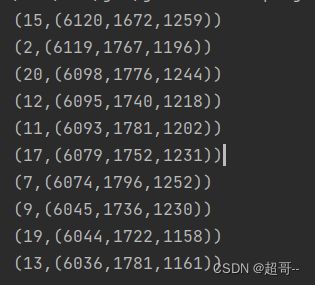

resultRDD.collect().foreach(println)

sc.stop()

}

}

3.Spark实例

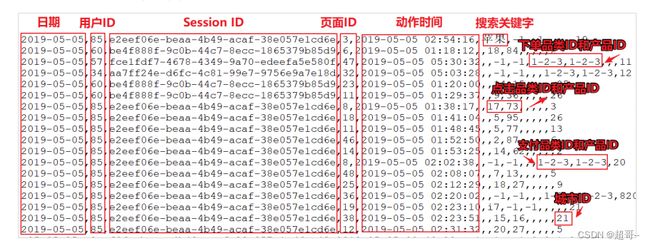

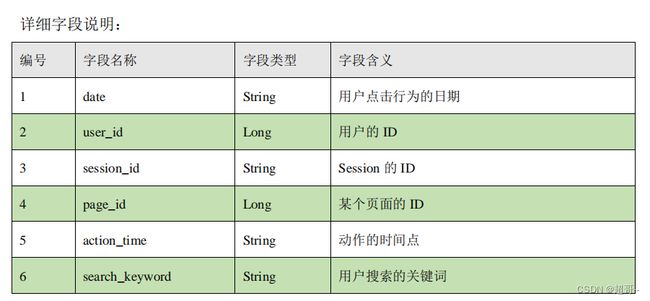

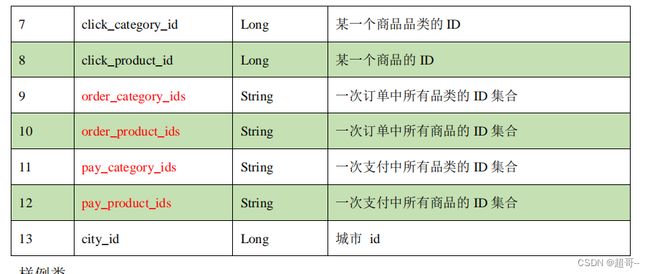

1.数据准备

user_visit_action.txt

主要包含用户的 4 种行为:搜索,点击,下单,支付。数据规则如下:

主要包含用户的 4 种行为:搜索,点击,下单,支付。数据规则如下:

- 数据文件中每行数据采用下划线分隔数据

- 每一行数据表示用户的一次行为,这个行为只能是 4 种行为的一种

- 如果搜索关键字为 null,表示数据不是搜索数据

- 如果点击的品类 ID 和产品 ID 为-1,表示数据不是点击数据

- 针对于下单行为,一次可以下单多个商品,所以品类 ID 和产品 ID 可以是多个,id 之间采用逗号分隔,如果本次不是下单行为,则数据采用null 表示

- 支付行为和下单行为类似

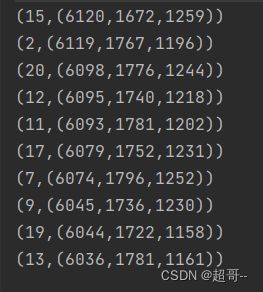

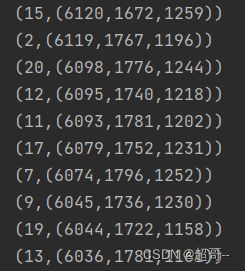

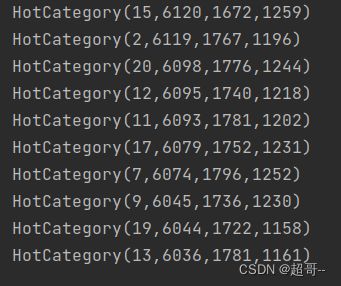

需求 1:Top10 热门品类

品类是指产品的分类,大型电商网站品类分多级,咱们的项目中品类只有一级,不同的

公司可能对热门的定义不一样。我们按照每个品类的点击、下单、支付的量来统计热门品类。

鞋 点击数 下单数 支付数

衣服 点击数 下单数 支付数

电脑 点击数 下单数 支付数

例如,综合排名 = 点击数20%+下单数30%+支付数*50%

本项目需求优化为:先按照点击数排名,靠前的就排名高;如果点击数相同,再比较下

单数;下单数再相同,就比较支付数

HotCategoryTop10Analysis.scala

package com.atguigu.bigdata.spark.core.rdd.seq

import org.apache.spark.rdd.RDD

import org.apache.spark.{SparkConf, SparkContext}

object HotCategoryTop10Analysis {

def main(args: Array[String]): Unit = {

val sparkConf: SparkConf =

new SparkConf().setMaster("local[*]").setAppName("HotCategoryTop10Analysis")

val sc = new SparkContext(sparkConf)

//1.读取原始日志数据

val actionRDD: RDD[String] = sc.textFile("datas/user_visit_action.txt")

//2.统计品类的点击数量:(品类ID,点击数量)

val clickActionRDD: RDD[String] = actionRDD.filter(

(action: String) => {

val datas: Array[String] = action.split("_")

datas(6) != "-1"

}

)

val clickCountRDD: RDD[(String, Int)] = clickActionRDD.map(

(action: String) => {

val datas: Array[String] = action.split("_")

(datas(6), 1)

}

).reduceByKey((_: Int) + (_: Int))

//3.统计品类的下单数量:(品类ID,下单数量)

val orderActionRDD: RDD[String] = actionRDD.filter(

(action: String) => {

val datas: Array[String] = action.split("_")

datas(8) != "null"

}

)

val orderCountRDD: RDD[(String, Int)] = orderActionRDD.flatMap(

(action: String) => {

val datas: Array[String] = action.split("_")

val cid: String = datas(8)

val cids: Array[String] = cid.split(",")

cids.map((id: String) => (id, 1))

}

).reduceByKey((_: Int) + (_: Int))

//4.统计品类的支付数量:(品类ID,支付数量)

val payActionRDD: RDD[String] = actionRDD.filter(

(action: String) => {

val datas: Array[String] = action.split("_")

datas(10) != "null"

}

)

val payCountRDD: RDD[(String, Int)] = payActionRDD.flatMap(

(action: String) => {

val datas: Array[String] = action.split("_")

val cid: String = datas(10)

val cids: Array[String] = cid.split(",")

cids.map((id: String) => (id, 1))

}

).reduceByKey((_: Int) + (_: Int))

//5.将品类进行排序,并且取钱10名

val cogroupRDD: RDD[(String, (Iterable[Int], Iterable[Int], Iterable[Int]))] =

clickCountRDD.cogroup(orderCountRDD, payCountRDD)

val analysisRDD: RDD[(String, (Int, Int, Int))] = cogroupRDD.mapValues {

case (clickIter, orderIter, payIter) =>

var clickCnt = 0

val iter1: Iterator[Int] = clickIter.iterator

if (iter1.hasNext) {

clickCnt = iter1.next()

}

var orderCnt = 0

val iter2: Iterator[Int] = orderIter.iterator

if (iter2.hasNext) {

orderCnt = iter2.next()

}

var payCnt = 0

val iter3: Iterator[Int] = payIter.iterator

if (iter3.hasNext) {

payCnt = iter3.next()

}

(clickCnt, orderCnt, payCnt)

}

val resultRDD: Array[(String, (Int, Int, Int))] = analysisRDD.sortBy((_: (String, (Int, Int, Int)))._2, ascending = false).take(10)

//6.将结果采集到控制台打印出来

resultRDD.foreach(println)

sc.stop()

}

}

HotCategoryTop10Analysis1.scala

package com.atguigu.bigdata.spark.core.rdd.seq

import org.apache.spark.rdd.RDD

import org.apache.spark.{SparkConf, SparkContext}

object HotCategoryTop10Analysis1 {

def main(args: Array[String]): Unit = {

val sparkConf: SparkConf = new SparkConf().setMaster("local[*]").setAppName("HotCategoryTop10Analysis")

val sc = new SparkContext(sparkConf)

//1.读取原始日志数据

val actionRDD: RDD[String] = sc.textFile("datas/user_visit_action.txt")

actionRDD.cache()

//2.统计品类的点击数量:(品类ID,点击数量)

val clickActionRDD: RDD[String] = actionRDD.filter(

(action: String) => {

val datas: Array[String] = action.split("_")

datas(6) != "-1"

}

)

val clickCountRDD: RDD[(String, Int)] = clickActionRDD.map(

(action: String) => {

val datas: Array[String] = action.split("_")

(datas(6), 1)

}

).reduceByKey((_: Int) + (_: Int))

//3.统计品类的下单数量:(品类ID,下单数量)

val orderActionRDD: RDD[String] = actionRDD.filter(

(action: String) => {

val datas: Array[String] = action.split("_")

datas(8) != "null"

}

)

val orderCountRDD: RDD[(String, Int)] = orderActionRDD.flatMap(

(action: String) => {

val datas: Array[String] = action.split("_")

val cid: String = datas(8)

val cids: Array[String] = cid.split(",")

cids.map((id: String) => (id, 1))

}

).reduceByKey((_: Int) + (_: Int))

//4.统计品类的支付数量:(品类ID,支付数量)

val payActionRDD: RDD[String] = actionRDD.filter(

(action: String) => {

val datas: Array[String] = action.split("_")

datas(10) != "null"

}

)

val payCountRDD: RDD[(String, Int)] = payActionRDD.flatMap(

(action: String) => {

val datas: Array[String] = action.split("_")

val cid: String = datas(10)

val cids: Array[String] = cid.split(",")

cids.map((id: String) => (id, 1))

}

).reduceByKey((_: Int) + (_: Int))

//5.将品类进行排序,并且取钱10名

val rdd1: RDD[(String, (Int, Int, Int))] = clickCountRDD.map {

case (cid, cnt) =>

(cid, (cnt, 0, 0))

}

val rdd2: RDD[(String, (Int, Int, Int))] = orderCountRDD.map {

case (cid, cnt) =>

(cid, (0, cnt, 0))

}

val rdd3: RDD[(String, (Int, Int, Int))] = payCountRDD.map {

case (cid, cnt) =>

(cid, (0, 0, cnt))

}

//将三个数据源合并在一起,统一进行聚合计算

val sourceRDD: RDD[(String, (Int, Int, Int))] = rdd1.union(rdd2).union(rdd3)

val analysisRDD: RDD[(String, (Int, Int, Int))] = sourceRDD.reduceByKey(

(t1: (Int, Int, Int), t2: (Int, Int, Int)) => {

(t1._1 + t2._1, t1._2 + t2._2 ,t1._3 + t2._3)

}

)

val resultRDD: Array[(String, (Int, Int, Int))] = analysisRDD.sortBy((_: (String, (Int, Int, Int)))._2, ascending = false).take(10)

//6.将结果采集到控制台打印出来

resultRDD.foreach(println)

sc.stop()

}

}

HotCategoryTop10Analysis2.scala

package com.atguigu.bigdata.spark.core.rdd.seq

import org.apache.spark.rdd.RDD

import org.apache.spark.{SparkConf, SparkContext}

object HotCategoryTop10Analysis2 {

def main(args: Array[String]): Unit = {

val sparkConf: SparkConf = new SparkConf().setMaster("local[*]").setAppName("HotCategoryTop10Analysis")

val sc = new SparkContext(sparkConf)

//1.读取原始日志数据

val actionRDD: RDD[String] = sc.textFile("datas/user_visit_action.txt")

//2.将数据转换结构

val flatRDD: RDD[(String, (Int, Int, Int))] = actionRDD.flatMap(

action => {

val datas = action.split("_")

if (datas(6) != "-1") {

//点击

List((datas(6), (1, 0, 0)))

} else if (datas(8) != "null") {

//下单

val ids = datas(8).split(",")

ids.map(id => (id, (0, 1, 0)))

} else if (datas(10) != "null") {

//支付

val ids = datas(10).split(",")

ids.map(id => (id, (0, 0, 1)))

} else {

Nil

}

}

)

//3.将相同的品类ID的数据进行分组聚合

val analysisRDD=flatRDD.reduceByKey(

(t1,t2)=>

(t1._1 + t2._1, t1._2 + t2._2 ,t1._3 + t2._3)

)

//4.将统计结果根据数据进行降序出来,去前10名

val resultRDD: Array[(String, (Int, Int, Int))] = analysisRDD.sortBy((_: (String, (Int, Int, Int)))._2, ascending = false).take(10)

//5.将结果采集到控制台打印出来

resultRDD.foreach(println)

sc.stop()

}

}

HotCategoryTop10Analysis3.scala

package com.atguigu.bigdata.spark.core.rdd.seq

import org.apache.spark.rdd.RDD

import org.apache.spark.util.AccumulatorV2

import org.apache.spark.{SparkConf, SparkContext}

import scala.collection.mutable

object HotCategoryTop10Analysis3 {

def main(args: Array[String]): Unit = {

val sparkConf: SparkConf = new SparkConf().setMaster(“local[*]”).setAppName(“HotCategoryTop10Analysis”)

val sc = new SparkContext(sparkConf)

//1.读取原始日志数据

val actionRDD: RDD[String] = sc.textFile("datas/user_visit_action.txt")

val acc =new HotCategoryAccumulator

sc.register(acc,"hotCategory")

//2.将数据转换结构

actionRDD.foreach(

action => {

val datas = action.split("_")

if (datas(6) != "-1") {

//点击

acc.add((datas(6),"click"))

} else if (datas(8) != "null") {

//下单

val ids = datas(8).split(",")

ids.foreach(

id=>{

acc.add(id,"order")

}

)

} else if (datas(10) != "null") {

//支付

val ids = datas(10).split(",")

ids.foreach(

id=>{

acc.add(id,"pay")

}

)

}

}

)

val accVal: mutable.Map[String, HotCategory] = acc.value

val categories: mutable.Iterable[HotCategory] = accVal.map(_._2)

val sort=categories.toList.sortWith(

(left,right)=>{

if (left.clickCnt>right.clickCnt) {

true

} else if (left.clickCnt==right.clickCnt){

if (left.orderCnt>right.orderCnt) {

true

} else if(left.orderCnt==right.orderCnt) {

left.payCnt>right.payCnt

}else {

false

}

}else{

false

}

}

)

//5.将结果采集到控制台打印出来

sort.take(10).foreach(println)

sc.stop()

}

case class HotCategory(cid:String,var clickCnt:Int,var orderCnt :Int,var payCnt:Int)

class HotCategoryAccumulator extends AccumulatorV2[(String,String),mutable.Map[String,HotCategory]]{

private val hcMap=mutable.Map[String,HotCategory]()

override def isZero: Boolean = {

hcMap.isEmpty

}

override def copy(): AccumulatorV2[(String, String), mutable.Map[String, HotCategory]] = {

new HotCategoryAccumulator

}

override def reset(): Unit = {

hcMap.clear()

}

override def add(v: (String, String)): Unit = {

val cid= v._1

val actionType= v._2

val category: HotCategory = hcMap.getOrElse(cid, HotCategory(cid, 0, 0, 0))

if (actionType =="click"){

category.clickCnt+=1

}else if (actionType == "order") {

category.orderCnt+=1

}else if (actionType =="pay"){

category.payCnt+=1

}

hcMap.update(cid,category)

}

override def merge(other: AccumulatorV2[(String, String), mutable.Map[String, HotCategory]]): Unit = {

val map1=this.hcMap

val map2=other.value

map2.foreach{

case (cid,hc)=>{

val category: HotCategory = map1.getOrElse(cid, HotCategory(cid, 0, 0, 0))

category.clickCnt+=hc.clickCnt

category.orderCnt+=hc.orderCnt

category.payCnt+=hc.payCnt

map1.update(cid,category)

}

}

}

override def value: mutable.Map[String, HotCategory] = hcMap

}

}

需求 2:Top10 热门品类中每个品类的 Top10 活跃 Session 统计

在需求一的基础上,增加每个品类用户 session 的点击统计

HotCategoryTop10SessionAnalysis.scala

package com.atguigu.bigdata.spark.core.rdd.seq

import org.apache.spark.rdd.RDD

import org.apache.spark.{SparkConf, SparkContext}

object HotCategoryTop10SessionAnalysis {

def main(args: Array[String]): Unit = {

val sparkConf: SparkConf = new SparkConf().setMaster("local[*]").setAppName("HotCategoryTop10Analysis")

val sc = new SparkContext(sparkConf)

val actionRDD: RDD[String] = sc.textFile("datas/user_visit_action.txt")

actionRDD.cache()

val top10Ids: Array[String] = top10Category(actionRDD)

//1.过滤原始数据,保留点击和前10品类ID

val filterActionRDD: RDD[String] = actionRDD.filter(

action => {

val datas: Array[String] = action.split("_")

if (datas(6) != "-1") {

top10Ids.contains(datas(6))

} else {

false

}

}

)

//2.根据评类ID和session进行点击量的统计

val reduceRDD: RDD[((String, String), Int)] = filterActionRDD.map(

action => {

val datas: Array[String] = action.split("_")

((datas(6), datas(2)), 1)

}

) reduceByKey (_ + _)

//3.将统计的结果进行结构的转换

val mapRDD: RDD[(String, (String, Int))] = reduceRDD.map {

case ((cid, sid), sum) => {

(cid, (sid, sum))

}

}

//4.相同的品类进行分组

val groupRDD: RDD[(String, Iterable[(String, Int)])] = mapRDD.groupByKey()

//5.将分组后的数据进行点击量的排序,取前10名

val resultRDD: RDD[(String, List[(String, Int)])] = groupRDD.mapValues(

iter => {

iter.toList.sortBy(_._2)(Ordering.Int.reverse).take(10)

}

)

resultRDD.foreach(println)

sc.stop()

}

def top10Category(actionRDD: RDD[String]) ={

val flatRDD: RDD[(String, (Int, Int, Int))] = actionRDD.flatMap(

action => {

val datas = action.split("_")

if (datas(6) != "-1") {

List((datas(6), (1, 0, 0)))

} else if (datas(8) != "null") {

val ids = datas(8).split(",")

ids.map(id => (id, (0, 1, 0)))

} else if (datas(10) != "null") {

val ids = datas(10).split(",")

ids.map(id => (id, (0, 0, 1)))

} else {

Nil

}

}

)

val analysisRDD=flatRDD.reduceByKey(

(t1,t2)=>

(t1._1 + t2._1, t1._2 + t2._2 ,t1._3 + t2._3)

)

analysisRDD.sortBy((_: (String, (Int, Int, Int)))._2, ascending = false).take(10).map(_._1)

}

}

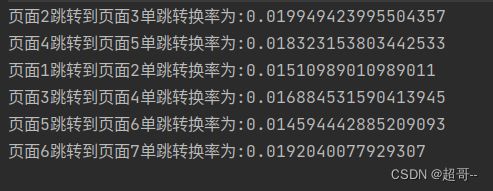

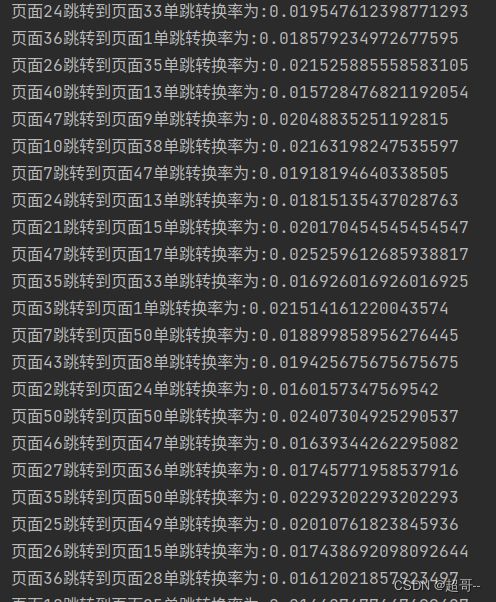

需求 3:页面单跳转换率统计

页面单跳转化率

计算页面单跳转化率,什么是页面单跳转换率,比如一个用户在一次 Session 过程中

访问的页面路径 3,5,7,9,10,21,那么页面 3 跳到页面 5 叫一次单跳,7-9 也叫一次单跳,

那么单跳转化率就是要统计页面点击的概率。

比如:计算 3-5 的单跳转化率,先获取符合条件的 Session 对于页面 3 的访问次数(PV) 为 A,然后获取符合条件的 Session 中访问了页面 3 又紧接着访问了页面 5 的次数为 B,

那么 B/A 就是 3-5 的页面单跳转化率

PageflowAnalysis.scala

package com.atguigu.bigdata.spark.core.rdd.seq

import org.apache.spark.rdd.RDD

import org.apache.spark.{SparkConf, SparkContext}

object PageflowAnalysis {

def main(args: Array[String]): Unit = {

val sparkConf: SparkConf = new SparkConf().setMaster("local[*]").setAppName("HotCategoryTop10Analysis")

val sc = new SparkContext(sparkConf)

val actionRDD: RDD[String] = sc.textFile("datas/user_visit_action.txt")

val actionDataRDD=actionRDD.map(

action=>{

val datas: Array[String] = action.split("_")

UserVisitAction(

datas(0),

datas(1).toLong,

datas(2),

datas(3).toLong,

datas(4),

datas(5),

datas(6).toLong,

datas(7).toLong,

datas(8),

datas(9),

datas(10),

datas(11),

datas(12).toLong,

)

}

)

actionDataRDD.cache()

val pageidToCountMap: Map[Long, Long] = actionDataRDD.map(

action => {

(action.page_id, 1L)

}

).reduceByKey(_ + _).collect().toMap

val sessionRDD: RDD[(String, Iterable[UserVisitAction])] = actionDataRDD.groupBy(_.session_id)

val mvRDD: RDD[(String, List[((Long, Long), Int)])] = sessionRDD.mapValues(

iter => {

val sortList: List[UserVisitAction] = iter.toList.sortBy(_.action_time)

val flowIds: List[Long] = sortList.map(_.page_id)

val pageflowIds: List[(Long, Long)] = flowIds.zip(flowIds.tail)

pageflowIds.map(

t => {

(t, 1)

}

)

}

)

val flatRDD: RDD[((Long, Long), Int)] = mvRDD.map(_._2).flatMap(list => list)

val dataRDD: RDD[((Long, Long), Int)] = flatRDD.reduceByKey(_ + _)

dataRDD.foreach {

case ((pageid1, pageid2), sum) => {

val lon: Long = pageidToCountMap.getOrElse(pageid1, 0L)

println(s"页面${pageid1}跳转到页面${pageid2}单跳转换率为:"+(sum.toDouble/lon))

}

}

sc.stop()

}

case class UserVisitAction(

date: String,//用户点击行为的日期

user_id: Long,//用户的 ID

session_id: String,//Session 的 ID

page_id: Long,//某个页面的 ID

action_time: String,//动作的时间点

search_keyword: String,//用户搜索的关键词

click_category_id: Long,//某一个商品品类的 ID

click_product_id: Long,//某一个商品的 ID

order_category_ids: String,//一次订单中所有品类的 ID 集合

order_product_ids: String,//一次订单中所有商品的 ID 集合

pay_category_ids: String,//一次支付中所有品类的 ID 集合

pay_product_ids: String,//一次支付中所有商品的 ID 集合

city_id: Long

)//城市 id

}

可以看到这个数据非常多,所以我们精简一下,只求出几个特定页面的跳出率。

PageflowAnalysis2.scala

package com.atguigu.bigdata.spark.core.rdd.seq

import org.apache.spark.rdd.RDD

import org.apache.spark.{SparkConf, SparkContext}

object PageflowAnalysis {

def main(args: Array[String]): Unit = {

val sparkConf: SparkConf = new SparkConf().setMaster("local[*]").setAppName("HotCategoryTop10Analysis")

val sc = new SparkContext(sparkConf)

val actionRDD: RDD[String] = sc.textFile("datas/user_visit_action.txt")

val actionDataRDD=actionRDD.map(

action=>{

val datas: Array[String] = action.split("_")

UserVisitAction(

datas(0),

datas(1).toLong,

datas(2),

datas(3).toLong,

datas(4),

datas(5),

datas(6).toLong,

datas(7).toLong,

datas(8),

datas(9),

datas(10),

datas(11),

datas(12).toLong,

)

}

)

actionDataRDD.cache()

val pageidToCountMap: Map[Long, Long] = actionDataRDD.map(

action => {

(action.page_id, 1L)

}

).reduceByKey(_ + _).collect().toMap

val sessionRDD: RDD[(String, Iterable[UserVisitAction])] = actionDataRDD.groupBy(_.session_id)

val mvRDD: RDD[(String, List[((Long, Long), Int)])] = sessionRDD.mapValues(

iter => {

val sortList: List[UserVisitAction] = iter.toList.sortBy(_.action_time)

val flowIds: List[Long] = sortList.map(_.page_id)

val pageflowIds: List[(Long, Long)] = flowIds.zip(flowIds.tail)

pageflowIds.map(

t => {

(t, 1)

}

)

}

)

val flatRDD: RDD[((Long, Long), Int)] = mvRDD.map(_._2).flatMap(list => list)

val dataRDD: RDD[((Long, Long), Int)] = flatRDD.reduceByKey(_ + _)

dataRDD.foreach {

case ((pageid1, pageid2), sum) => {

val lon: Long = pageidToCountMap.getOrElse(pageid1, 0L)

println(s"页面${pageid1}跳转到页面${pageid2}单跳转换率为:"+(sum.toDouble/lon))

}

}

sc.stop()

}

case class UserVisitAction(

date: String,//用户点击行为的日期

user_id: Long,//用户的 ID

session_id: String,//Session 的 ID

page_id: Long,//某个页面的 ID

action_time: String,//动作的时间点

search_keyword: String,//用户搜索的关键词

click_category_id: Long,//某一个商品品类的 ID

click_product_id: Long,//某一个商品的 ID

order_category_ids: String,//一次订单中所有品类的 ID 集合

order_product_ids: String,//一次订单中所有商品的 ID 集合

pay_category_ids: String,//一次支付中所有品类的 ID 集合

pay_product_ids: String,//一次支付中所有商品的 ID 集合

city_id: Long

)//城市 id

}

总结

这次的spark实例就到这里