使用自己的数据训练MobileNet SSD v2目标检测--TensorFlow object detection

使用自己的数据训练MobileNet SSD v2目标检测--TensorFlow object detection

- 1. 配置

-

- 1.1 下载models-1.12.0

- 2. 准备数据集

- 3. 配置文件和模型

-

- 3.1 下载预训练模型

- 3.2 修改配置文件

- 4. 训练

-

- 4.1 使用tensorboard查看训练过程

- 5. 冻结模型参数

- 6. 调用pb文件进行预测

1. 配置

| 基本配置 | 版本号 |

|---|---|

| CPU | Intel® Core™ i5-8400 CPU @ 2.80GHz × 6 |

| GPU | GeForce RTX 2070 SUPER/PCIe/SSE2 |

| OS | Ubuntu18.04 |

| openjdk | 1.8.0_242 |

| python | 3.6.9 |

1.1 下载models-1.12.0

https://github.com/tensorflow/models/tree/v1.12.0

在~/.bashrc中加入配置

export PYTHONPATH=$PYTHONPATH:pwd:pwd/slim

2. 准备数据集

我是检测鸽子的头部,颈部和尾部. 将自己的数据集图片存放在/models/research/object_detection下新建的images文件夹内,images文件夹下新建dove_three和test_three两个文件夹,分别将训练和测试的图片放进文件夹.

接下来使用 LabelImg 这款小软件(自行百度如何安装), 对dove_three和test_three里的图片进行人工标注, 如下图所示。

对于每个标注框都要进行标签的命名.

标注完成后保存为同名的xml文件,并存在原文件夹中。

对于Tensorflow,需要输入专门的 TFRecords Format 格式。

写两个小python脚本文件,第一个将文件夹内的xml文件内的信息统一记录到.csv表格中,第二个从.csv表格中创建tfrecord格式。

附上对应代码:

xml转换为csv

# xml2csv.py

import os

import glob

import pandas as pd

import xml.etree.ElementTree as ET

os.chdir('/home/ying/usb/models/models-1.12.0/research/object_detection/images/test_three')

path = '/home/ying/usb/models/models-1.12.0/research/object_detection/images/test_three'

def xml_to_csv(path):

xml_list = []

for xml_file in glob.glob(path + '/*.xml'):

tree = ET.parse(xml_file)

root = tree.getroot()

for member in root.findall('object'):

value = (root.find('filename').text,

int(root.find('size')[0].text),

int(root.find('size')[1].text),

member[0].text,

int(member[4][0].text),

int(member[4][1].text),

int(member[4][2].text),

int(member[4][3].text)

)

xml_list.append(value)

column_name = ['filename', 'width', 'height', 'class', 'xmin', 'ymin', 'xmax', 'ymax']

xml_df = pd.DataFrame(xml_list, columns=column_name)

return xml_df

def main():

image_path = path

xml_df = xml_to_csv(image_path)

xml_df.to_csv('test.csv', index=None) #需要更改

print('Successfully converted xml to csv.')

main()

csv转换为tfrecord

命令:

python csv2tfrecord.py --csv_input=images/test_three/test.csv --output_path=images/test.record

# csv2tfrecord.py

# -*- coding: utf-8 -*-

"""

Usage:

# From tensorflow/models/

# Create train data:

python csv2tfrecord.py --csv_input=images/dove_three/train.csv --output_path=images/train.record

# Create test data:

python csv2tfrecord.py --csv_input=images/test_three/test.csv --output_path=images/test.record

"""

import os

import io

import pandas as pd

import tensorflow as tf

from PIL import Image

from object_detection.utils import dataset_util

from collections import namedtuple, OrderedDict

os.chdir('/home/ying/usb/models/models-1.12.0/research/object_detection')

flags = tf.app.flags

flags.DEFINE_string('csv_input', '', 'Path to the CSV input')

flags.DEFINE_string('output_path', '', 'Path to output TFRecord')

FLAGS = flags.FLAGS

# TO-DO replace this with label map

def class_text_to_int(row_label): # 根据自己的标签修改

if row_label == 'head':

return 1

elif row_label == 'neck':

return 2

elif row_label == 'tail':

return 3

else:

None

def split(df, group):

data = namedtuple('data', ['filename', 'object'])

gb = df.groupby(group)

return [data(filename, gb.get_group(x)) for filename, x in zip(gb.groups.keys(), gb.groups)]

def create_tf_example(group, path):

with tf.gfile.GFile(os.path.join(path, '{}'.format(group.filename)), 'rb') as fid:

encoded_jpg = fid.read()

encoded_jpg_io = io.BytesIO(encoded_jpg)

image = Image.open(encoded_jpg_io)

width, height = image.size

filename = group.filename.encode('utf8')

image_format = b'jpg'

xmins = []

xmaxs = []

ymins = []

ymaxs = []

classes_text = []

classes = []

for index, row in group.object.iterrows():

xmins.append(row['xmin'] / width)

xmaxs.append(row['xmax'] / width)

ymins.append(row['ymin'] / height)

ymaxs.append(row['ymax'] / height)

classes_text.append(row['class'].encode('utf8'))

classes.append(class_text_to_int(row['class']))

tf_example = tf.train.Example(features=tf.train.Features(feature={

'image/height': dataset_util.int64_feature(height),

'image/width': dataset_util.int64_feature(width),

'image/filename': dataset_util.bytes_feature(filename),

'image/source_id': dataset_util.bytes_feature(filename),

'image/encoded': dataset_util.bytes_feature(encoded_jpg),

'image/format': dataset_util.bytes_feature(image_format),

'image/object/bbox/xmin': dataset_util.float_list_feature(xmins),

'image/object/bbox/xmax': dataset_util.float_list_feature(xmaxs),

'image/object/bbox/ymin': dataset_util.float_list_feature(ymins),

'image/object/bbox/ymax': dataset_util.float_list_feature(ymaxs),

'image/object/class/text': dataset_util.bytes_list_feature(classes_text),

'image/object/class/label': dataset_util.int64_list_feature(classes),

}))

return tf_example

def main(_):

writer = tf.python_io.TFRecordWriter(FLAGS.output_path)

path = os.path.join(os.getcwd(), 'images/test_three') # 需改动

examples = pd.read_csv(FLAGS.csv_input)

grouped = split(examples, 'filename')

for group in grouped:

tf_example = create_tf_example(group, path)

writer.write(tf_example.SerializeToString())

writer.close()

output_path = os.path.join(os.getcwd(), FLAGS.output_path)

print('Successfully created the TFRecords: {}'.format(output_path))

if __name__ == '__main__':

tf.app.run()

3. 配置文件和模型

为了方便,我把images下的dove_three和test_three的csv和record文件都放到object_detection/data目录下.

在object_detection新建training文件夹, 用于一会存放config文件.

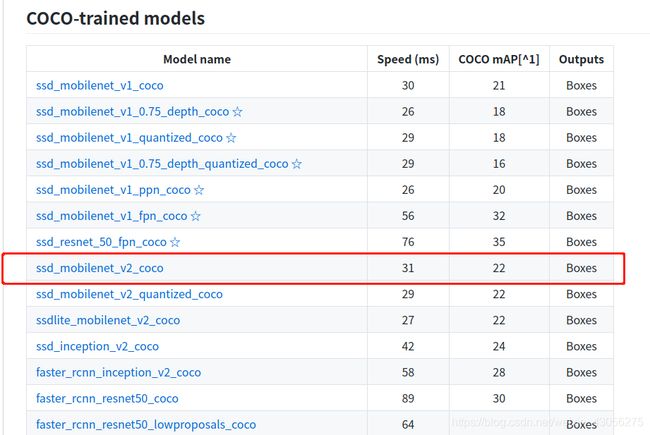

3.1 下载预训练模型

https://github.com/tensorflow/models/blob/master/research/object_detection/g3doc/detection_model_zoo.md

3.2 修改配置文件

- 在object_detection/data下新建three.pbtxt记录标签, 内容如下:

item {

name: "head"

id: 1

display_name: "head"

}

item {

name: "neck"

id: 2

display_name: "neck"

}

item {

name: "tail"

id: 3

display_name: "tail"

}

- 关于配置文件,我们能够在/research/object_detection/samples/configs/ 文件夹下找到很多配置文件模板。由于我们下载的是ssd_mobilenet_v2_coco模型,那么我们就找ssd_mobilenet_v2_coco.config文件,将这个文件复制到training文件夹下

开始进行配置.

model {

ssd {

num_classes: 3

image_resizer {

fixed_shape_resizer {

height: 300

width: 300

}

}

feature_extractor {

type: "ssd_mobilenet_v2"

depth_multiplier: 1.0

min_depth: 16

conv_hyperparams {

regularizer {

l2_regularizer {

weight: 3.99999989895e-05

}

}

initializer {

truncated_normal_initializer {

mean: 0.0

stddev: 0.0299999993294

}

}

activation: RELU_6

batch_norm {

decay: 0.999700009823

center: true

scale: true

epsilon: 0.0010000000475

train: true

}

}

#batch_norm_trainable: true

use_depthwise: true

}

box_coder {

faster_rcnn_box_coder {

y_scale: 10.0

x_scale: 10.0

height_scale: 5.0

width_scale: 5.0

}

}

matcher {

argmax_matcher {

matched_threshold: 0.5

unmatched_threshold: 0.5

ignore_thresholds: false

negatives_lower_than_unmatched: true

force_match_for_each_row: true

}

}

similarity_calculator {

iou_similarity {

}

}

box_predictor {

convolutional_box_predictor {

conv_hyperparams {

regularizer {

l2_regularizer {

weight: 3.99999989895e-05

}

}

initializer {

truncated_normal_initializer {

mean: 0.0

stddev: 0.0299999993294

}

}

activation: RELU_6

batch_norm {

decay: 0.999700009823

center: true

scale: true

epsilon: 0.0010000000475

train: true

}

}

min_depth: 0

max_depth: 0

num_layers_before_predictor: 0

use_dropout: false

dropout_keep_probability: 0.800000011921

kernel_size: 3

box_code_size: 4

apply_sigmoid_to_scores: false

}

}

anchor_generator {

ssd_anchor_generator {

num_layers: 6

min_scale: 0.20000000298

max_scale: 0.949999988079

aspect_ratios: 1.0

aspect_ratios: 2.0

aspect_ratios: 0.5

aspect_ratios: 3.0

aspect_ratios: 0.333299994469

}

}

post_processing {

batch_non_max_suppression {

score_threshold: 0.300000011921

iou_threshold: 0.600000023842

max_detections_per_class: 100

max_total_detections: 100

}

score_converter: SIGMOID

}

normalize_loss_by_num_matches: true

loss {

localization_loss {

weighted_smooth_l1 {

}

}

classification_loss {

weighted_sigmoid {

}

}

hard_example_miner {

num_hard_examples: 3000

iou_threshold: 0.990000009537

loss_type: CLASSIFICATION

max_negatives_per_positive: 3

min_negatives_per_image: 3

}

classification_weight: 1.0

localization_weight: 1.0

}

}

}

train_config {

batch_size: 4

data_augmentation_options {

random_horizontal_flip {

}

}

data_augmentation_options {

ssd_random_crop {

}

}

optimizer {

rms_prop_optimizer {

learning_rate {

exponential_decay_learning_rate {

initial_learning_rate: 0.00400000018999

decay_steps: 800720

decay_factor: 0.949999988079

}

}

momentum_optimizer_value: 0.899999976158

decay: 0.899999976158

epsilon: 1.0

}

}

fine_tune_checkpoint: "/home/ying/usb/models/models-1.12.0/research/object_detection/ssd_mobilenet_v2_coco_2018_03_29/model.ckpt"

num_steps: 200000

fine_tune_checkpoint_type: "detection"

from_detection_checkpoint: true

}

train_input_reader {

label_map_path: "/home/ying/usb/models/models-1.12.0/research/object_detection/data/three.pbtxt"

tf_record_input_reader {

input_path: "/home/ying/usb/models/models-1.12.0/research/object_detection/data/train_three.record"

}

}

eval_config {

num_examples: 24

max_evals: 10

use_moving_averages: false

metrics_set: "coco_detection_metrics"

}

eval_input_reader {

label_map_path: "/home/ying/usb/models/models-1.12.0/research/object_detection/data/three.pbtxt"

shuffle: false

num_readers: 1

tf_record_input_reader {

input_path: "/home/ying/usb/models/models-1.12.0/research/object_detection/data/test_three.record"

}

}

由于配置文件参数很多,我就讲几个只要简单改动后就能训练的参数:

1)num_classes: 这个参数就是你所训练模型的检测目标数(我的是三类)

2)decay_steps: 这个参数的意义是每训练decay_steps步数,就进行将学习率将低一次(new_learning_rate = old_learnning_rate * decay_factor)这个根据个人训练情况改,因为这里并不是从头训练(我使用的迁移学习方法),而且我就准备迭代20000步,所以就加快降低学习率的速度(学习率过大会导致准确率一直震荡,很难收敛)。

3)fine_tune_checkpoint: 这个参数就是加载预训练模型的路径,也就是我们下载解压的ssd_mobilenet_v2_coco路径。

4)from_detection_checkpoint: 这个参数是选择加载全部模型参数true,还是只加载前置网络模型参数(分类网络)false。

5)num_steps: 20000 这个参数就是总迭代步数

6)input_path: 这个参数就是读取tfrecord文件的路径,在train_input_reader中填pascal_train.record路径,在eval_input_reader中填pascal_val.record路径。

7)label_map_path: 这个参数就是pascal_label_map.pbtxt字典文件的路径。

8)num_examples: 这个参数就是你验证集的图像数目。

9)metrics_set: 这个参数指定在验证时使用哪种评价标准,这里使用的是"coco_detection_metrics"

关于如何控制验证频率的问题:

在之前的版本中可以在eval_config中设置eval_interval_secs的值来改变验证之间的间隔,但现在已经失效了。官方也有说要解决这个问题,但到现在还未解决。下面给出一个控制验证间隔的方法:

打开 research/object_detection/model_lib.py文件在create_train_and_eval_specs函数中,大概750行左右的位置有如下代码:

tf.estimator.EvalSpec(

name=eval_spec_name,

input_fn=eval_input_fn,

steps=None,

exporters=exporter)

在tf.estimator.EvalSpec函数中添加如下一行(throttle_secs=3600,代表间隔1个小时验证一次,在Tensorflow文档,tf.estimator.EvalSpec函数中,默认参数start_delay_secs=120,throttle_secs=600代表默认从训练开始的第120秒开始第一次验证,之后每隔600秒验证一次):

tf.estimator.EvalSpec(

name=eval_spec_name,

input_fn=eval_input_fn,

steps=None,

exporters=exporter,

throttle_secs=3600)

修改完配置文件后,我们就可以开始训练模型了

4. 训练

我们回到 models-1.12.0/research文件夹下,建立一个训练模型的脚本文件(train.sh):

PIPELINE_CONFIG_PATH=/home/ying/usb/models/models-1.12.0/research/object_detection/training/pipeline.config

MODEL_DIR=/home/ying/usb/models/models-1.12.0/research/object_detection/train

NUM_TRAIN_STEPS=20000

SAMPLE_1_OF_N_EVAL_EXAMPLES=1

python object_detection/model_main.py \

--pipeline_config_path=${PIPELINE_CONFIG_PATH} \

--model_dir=${MODEL_DIR} \

--num_train_steps=${NUM_TRAIN_STEPS} \

--sample_1_of_n_eval_examples=$SAMPLE_1_OF_N_EVAL_EXAMPLES \

--logtostderr

其中PIPELINE_CONFIG_PATH是我们的config配置文件路径;MODEL_DIR是训练模型的保存位置;NUM_TRAIN_STEPS是总共训练的步数;SAMPLE_1_OF_N_EVAL_EXAMPLES是验证过程中对样本的采样频率(在之前说过,假设为n则表示只对验证样本中的n分一的样本进行采样验证),若为1表示将验证所有样本,一般设为1。建立好脚本文件后通过sh train_VOC.sh开始执行。

如果需要在后台运行可通过以下指令,通过该指令将终端输出信息定向输出到train.log文件中:

nohup sh train_VOC.sh > train.log 2>&1 &

通过下面指令查看log中的信息:

tail -f train.log

若需要提前终止程序,可通过查询对应程序的pid进行终止。

ps -ef | grep python # 查找后台运行的所有pyhton程序的pid

kill -9 9208 # 终端pid为9208的程序

在coco的检测标准中IOU=0.50:0.95对应的Average Precision为0.367,IOU=0.50(IOU=0.5可以理解为pscal voc的评价标准)对应的Average Precision为0.604。

4.1 使用tensorboard查看训练过程

在训练过程中,所有的训练文件都保存在我们的train文件夹下,打开train文件夹你会发现里面有个eval_0文件夹,这个文件里保存是在整个训练过程中在验证集上的准确率变化。我们通过终端进入train文件夹,接着输入以下指令调用tensorboard:

tensorboard --logdir=eval_0/

输入指令后终端会打印出一个本地网页链接,复制该链接,打开你的浏览器输入该链接就能看到整个过程的训练结果。在SCALARS栏中有DetectionBoxes_Precision、DetectionBoxes_Pecall、Loss、leaning_rate等曲线,在IMAGES栏中有使用模型在验证集上预测的图像,左侧是预测的结果右侧是标准检测结果。GRAPHS是整个训练的流程图。

5. 冻结模型参数

模型训练完成后所有的参数是以model.ckpt.data、model.ckpt.meta和model.ckpt.index三个文件共同保存,在我们使用的ssd_mobilenet_v2模型中,模型ckpt文件大小约78M,如果将模型参数冻结后只有20M。当然,不论是ckpt文件还是冻结后的pb文件我们都能够进行调用,但ckpt文件更适合在训练模型等实验过程中使用,而pb文件是根据我们需要的输出节点反向查找将ckpt中不需要的节点全部删除后获得的文件(还将模型参数的保存形式进行了改变)由于该文件占用内存更小,更适合部署在你所需要使用的设备上。(tensorflow还有很多方法能够将模型的大小进一步缩减占用更少的内存)

我们直接进入 models-1.12.0/research/ 文件夹下,建立冻结模型权重脚本(export_Pb.sh):

INPUT_TYPE=image_tensor

PIPELINE_CONFIG_PATH=/home/ying/usb/models/models-1.12.0/research/object_detection/training/pipeline.config

TRAINED_CKPT_PREFIX=/home/ying/usb/models/models-1.12.0/research/object_detection/train/model.ckpt-20000

EXPORT_DIR=/home/ying/usb/models/models-1.12.0/research/object_detection/eval

python object_detection/export_inference_graph.py \

--input_type=${INPUT_TYPE} \

--pipeline_config_path=${PIPELINE_CONFIG_PATH} \

--trained_checkpoint_prefix=${TRAINED_CKPT_PREFIX} \

--output_directory=${EXPORT_DIR}

PIPELINE_CONFIG_PATH就是模型的config配置文件路径;TRAINED_CKPT_PREFIX是训练模型的ckpt权重最后面的数字对应的训练步数(一般选择最后保存的一个ckpt就行);EXPORT_DIR就是冻结模型pb的输出位置。执行脚本后在/xxx/model/eval/目录下就会生成我们所需要的pb文件(frozen_inference_graph.pb)。

6. 调用pb文件进行预测

object_detection文件夹下的test_images文件夹中放测试图片

创建一个python文件main.py,将下面代码复制到py文件中,该代码是根据官网的object_detection_tutorial.ipynb教程简单修改得到的。代码中也有注释,便于理解。

import numpy as np

import os

import glob

import tensorflow as tf

import time

from distutils.version import StrictVersion

import matplotlib

from matplotlib import pyplot as plt

from PIL import Image

import keras

import tensorflow as tf

from object_detection.utils import ops as utils_ops

from object_detection.utils import label_map_util

from object_detection.utils import visualization_utils as vis_util

config = tf.ConfigProto()

config.gpu_options.allow_growth = True

keras.backend.tensorflow_backend.set_session(tf.Session(config=config))

# 防止backend='Agg'导致不显示图像的情况

#os.environ["CUDA_VISIBLE_DEVICES"] = "-1" #CPU

matplotlib.use('TkAgg')

if StrictVersion(tf.__version__) < StrictVersion('1.12.0'):

raise ImportError('Please upgrade your TensorFlow installation to v1.12.*.')

MODEL_NAME = 'eval'

# Path to frozen detection graph. This is the actual model that is used for the object detection.

PATH_TO_FROZEN_GRAPH = MODEL_NAME + '/frozen_inference_graph.pb'

# List of the strings that is used to add correct label for each box.

PATH_TO_LABELS = 'data/three.pbtxt'

detection_graph = tf.Graph()

with detection_graph.as_default():

od_graph_def = tf.GraphDef()

with tf.gfile.GFile(PATH_TO_FROZEN_GRAPH, 'rb') as fid:

serialized_graph = fid.read()

od_graph_def.ParseFromString(serialized_graph)

tf.import_graph_def(od_graph_def, name='')

category_index = label_map_util.create_category_index_from_labelmap(PATH_TO_LABELS, use_display_name=True)

def load_image_into_numpy_array(image):

im_width, im_height = image.size

return np.array(image.getdata()).reshape(

(im_height, im_width, 3)).astype(np.uint8)

# For the sake of simplicity we will use only 2 images:

# image1.jpg

# image2.jpg

# If you want to test the code with your images, just add path to the images to the TEST_IMAGE_PATHS.

PATH_TO_TEST_IMAGES_DIR = 'test_images/*.jpg'

TEST_IMAGE_PATHS = glob.glob(PATH_TO_TEST_IMAGES_DIR)

# Size, in inches, of the output images.

IMAGE_SIZE = (12, 8)

def run_inference_for_single_image(image, graph):

with graph.as_default():

with tf.Session() as sess:

# Get handles to input and output tensors

ops = tf.get_default_graph().get_operations()

all_tensor_names = {output.name for op in ops for output in op.outputs}

tensor_dict = {}

for key in [

'num_detections', 'detection_boxes', 'detection_scores',

'detection_classes', 'detection_masks'

]:

tensor_name = key + ':0'

if tensor_name in all_tensor_names:

tensor_dict[key] = tf.get_default_graph().get_tensor_by_name(

tensor_name)

if 'detection_masks' in tensor_dict:

# The following processing is only for single image

detection_boxes = tf.squeeze(tensor_dict['detection_boxes'], [0])

detection_masks = tf.squeeze(tensor_dict['detection_masks'], [0])

# Reframe is required to translate mask from box coordinates to image coordinates and fit the image size.

real_num_detection = tf.cast(tensor_dict['num_detections'][0], tf.int32)

detection_boxes = tf.slice(detection_boxes, [0, 0], [real_num_detection, -1])

detection_masks = tf.slice(detection_masks, [0, 0, 0], [real_num_detection, -1, -1])

detection_masks_reframed = utils_ops.reframe_box_masks_to_image_masks(

detection_masks, detection_boxes, image.shape[1], image.shape[2])

detection_masks_reframed = tf.cast(

tf.greater(detection_masks_reframed, 0.5), tf.uint8)

# Follow the convention by adding back the batch dimension

tensor_dict['detection_masks'] = tf.expand_dims(

detection_masks_reframed, 0)

image_tensor = tf.get_default_graph().get_tensor_by_name('image_tensor:0')

# Run inference

output_dict = sess.run(tensor_dict,

feed_dict={image_tensor: image})

# all outputs are float32 numpy arrays, so convert types as appropriate

output_dict['num_detections'] = int(output_dict['num_detections'][0])

output_dict['detection_classes'] = output_dict[

'detection_classes'][0].astype(np.int64)

output_dict['detection_boxes'] = output_dict['detection_boxes'][0]

output_dict['detection_scores'] = output_dict['detection_scores'][0]

if 'detection_masks' in output_dict:

output_dict['detection_masks'] = output_dict['detection_masks'][0]

return output_dict

for image_path in TEST_IMAGE_PATHS:

image = Image.open(image_path)

start = time.time()

# the array based representation of the image will be used later in order to prepare the

# result image with boxes and labels on it.

image_np = load_image_into_numpy_array(image)

# Expand dimensions since the model expects images to have shape: [1, None, None, 3]

image_np_expanded = np.expand_dims(image_np, axis=0)

# Actual detection.

output_dict = run_inference_for_single_image(image_np_expanded, detection_graph)

# Visualization of the results of a detection.

vis_util.visualize_boxes_and_labels_on_image_array(

image_np,

output_dict['detection_boxes'],

output_dict['detection_classes'],

output_dict['detection_scores'],

category_index,

instance_masks=output_dict.get('detection_masks'),

use_normalized_coordinates=True,

line_thickness=8)

print("class:",output_dict['detection_classes'])

print("score:",output_dict['detection_scores'])

end = time.time()

print("internel:",end-start)

plt.figure(figsize=IMAGE_SIZE)

plt.imshow(image_np)

plt.show()