word2vec原理

word2vec原理

- 1. 什么是独热编码(Onehot)?

-

- 1.1 为什么使用Onehot编码?

- 1.2 什么是Onehot编码?

- 1.3 python实现Onehot编码

- 1.4 Onehot编码的缺点

- 2. CBOW模型原理

-

- 2.1 模型预测形式

- 2.2 模型网络

- 3. skip-gram模型原理

-

- 3.1 模型预测形式

- 3.2 模型网络

- 4. word2vec结构总结

-

- 4.1 skip-gram与CBOW的区别

- 5. 代码简单实现

-

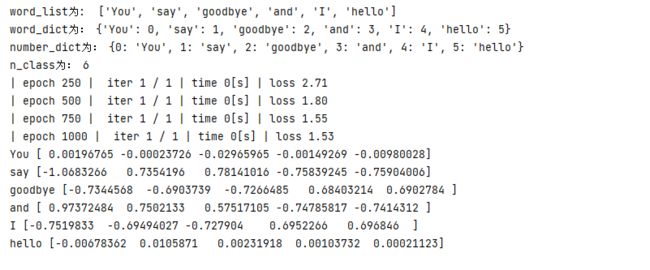

- 5.1 CBOW 代码简单实现

-

- 5.1.1 数据集创建代码

- 5.1.2 模型类创建

- 5.1.3 优化器

- 5.1.4 训练类

- 5.1.5 获取词向量

- 5.1.6 完整代码

- 5.2 skip-gram代码简单实现

-

- 5.2.1 `skip-gram模型类`

- 5.2.2 完整代码

- 总结

- 文章参考书籍:深度学习进阶:自然语言处理

1. 什么是独热编码(Onehot)?

1.1 为什么使用Onehot编码?

- 在自然语言处理中,数据集大多都为字或词,计算机只可识别二进制数值型的数据,如果把字或词给计算机,计算机不能识别 【如果不是数值型数据,识别不了】,把他们转化为计算机适合处理的数值类数据非常重要

1.2 什么是Onehot编码?

- 对角线值为1,其他位置全为0的单位矩阵

- 当有n个词的时候,那么会生成n*n大小的矩阵,只有自己对应的位置为1,其他位置为0

1.3 python实现Onehot编码

import pandas as pd

df = pd.DataFrame([

['green', 'M', 20],

['red', 'L', 21],

['blue', 'XL', 30]

])

data = pd.get_dummies(df[0]) # 将第一列变成one-hot编码

data.index = data.columns

print(data)

1.4 Onehot编码的缺点

-

无法解决词之间的相似性问题

- 使用余弦相似度计算向量间的相似度,任意两者向量的相似度结果都为 0

- 相关代码

import pandas as pd import numpy as np df = pd.DataFrame([['blue', 'XL', 30],['green', 'M', 20],['red', 'L', 21]]) data = pd.get_dummies(df[0]) # 将第一列变成one-hot编码 data.index = data.columns def cos_similarity(x, y, eps=1e-8): nx = x / (np.sqrt(np.sum(x ** 2)) + eps) ny = y / (np.sqrt(np.sum(y ** 2)) + eps) return np.dot(nx, ny) print(data) print(f'{data.iloc[0].name}与{data.iloc[1].name}的相似度为:{cos_similarity(data.iloc[0], data.iloc[1])}') print(f'{data.iloc[0].name}与{data.iloc[2].name}的相似度为:{cos_similarity(data.iloc[0], data.iloc[2])}') print(f'{data.iloc[1].name}与{data.iloc[2].name}的相似度为:{cos_similarity(data.iloc[1], data.iloc[2])}')

-

占用内存大且是稀疏矩阵

- 实际应用中,会有很多单词出现,如果有

n个词,会生成n*n大小的稀疏矩阵,浪费内存什么是稀疏矩阵和密集矩阵?

大多数值全为0的矩阵是稀疏矩阵

大多数值不为0的矩阵是密集矩阵

- 实际应用中,会有很多单词出现,如果有

-

one-hot编码之后出现了神经网络语言模型(NNLM)- 文章链接:https://blog.csdn.net/m0_46926492/article/details/130580187

-

之后发展为

word2vec

2. CBOW模型原理

2.1 模型预测形式

- 根据

上下文预测目标词的神经网络 - “目标词” 是指中间的单词

- 周围的单词是上下文

2.2 模型网络

- 两个输入层,因为根据上下文预测目标词

因为对上下文仅考虑两个单词,所以输入层有两个。如果对上下文考虑 N 个单词,则输入层会有 N 个。

- 过程

- 从

输入层到中间层的变换由相同的全连接层(权重为 W i n W_{in} Win)完成 - 从

中间层到输出层神经元的变换由另一个全连接层(权重为 W o u t W_{out} Wout)完成

- 从

- 一个 W i n W_{in} Win就是一个词的

one-hot编码- 使用几个上下文预测,就会有几个 W i n W_{in} Win,就是几个词的

one-hot编码

- 使用几个上下文预测,就会有几个 W i n W_{in} Win,就是几个词的

- 一个 W o u t W_{out} Wout,对应每个词的得分,那个得分高,预测的是哪个词

3. skip-gram模型原理

3.1 模型预测形式

- 根据

目标词预测上下文的神经网络 - “目标词” 是指中间的单词

- 周围的单词是上下文

3.2 模型网络

- 两个输出层,因为根据目标词预测,上文+下文

因为对上下文仅考虑两个单词,所以输出层有两个。如果对上下文考虑 N 个单词,则输出层会有 N 个。

- 过程

- W i n W_{in} Win就是目标词的

one-hot编码 - 一个 W o u t W_{out} Wout,对应预测一个上下文词得分,那个得分高,预测的是哪个上下文词

4. word2vec结构总结

4.1 skip-gram与CBOW的区别

skip-gram模型与CBOW模型是相反过程模型 输入 输出 预测方式 白话解释 CBOWcontextstarget上下文预测目标词多个老师教一个学生【多个词预测一个词】skip-gramtargetcontexts目标词预测上下文一个老师教多个学生【一个词预测多个词】- 窗口大小

- 确定了

CBOW模型输入层个数,skip-gram模型的输出层个数 - 确定

上下文个数

- 确定了

5. 代码简单实现

5.1 CBOW 代码简单实现

5.1.1 数据集创建代码

- 上下文与目标词数据创建

def preprocess(sentences_list,lis = []): """ 语料库预处理 :param text_list:句子列表 :return: word_list 是单词列表 word_dict:是单词到单词 ID 的字典 number_dict 是单词 ID 到单词的字典 n_class 单词数 """ for i in sentences_list: text = i.split('.')[0].split(' ') # 按照空格分词,统计 sentences的分词的个数 word_list = list({}.fromkeys(text).keys()) # 去重 统计词典个数 lis=lis+word_list word_list=list({}.fromkeys(lis).keys()) word_dict = {w: i for i, w in enumerate(word_list)} number_dict = {i: w for i, w in enumerate(word_list)} n_class = len(word_dict) # 词典的个数,也是softmax 最终分类的个数 return word_list, word_dict, number_dict,n_class def create_contexts_target(sentences_list, word_dict, window_size=1): """ 词向量编码函数 :param sentences_list:句子列表 :param word_dict: 字典{'You': 0,,,} key:单词,value:索引,为词向量做准备 :param windows_size: 上下文窗口大小 :return: contexts:数据集向量 target:标签值 """ contexts, target = [], [] # 上下文+目标词总列表 for sen in sentences_list: target_list = sen.split('.')[0].split(' ') # 句子按照空格分词 target_index=[word_dict[i] for i in sen.split(' ')[window_size:-window_size]] # 句子目标标签列表 target+=target_index text=[] # 上下文列表 for t_index in target_index: word_list = [] # 句子上下文列表 for t in range(-window_size, window_size + 1): # 上下文窗口 if t == 0: # 目标标签 continue word_list.append(word_dict[target_list[t_index + t]]) # 获取单词索引并加入列表 text.append(word_list) contexts+=text return np.array(contexts), np.array(target) def convert_one_hot(corpus, vocab_size): ''' 转换为one-hot表示 :param corpus: 单词ID列表(一维或二维的NumPy数组) :param vocab_size: 词汇个数 :return: one-hot表示(二维或三维的NumPy数组) ''' N = corpus.shape[0] if corpus.ndim == 1: one_hot = np.zeros((N, vocab_size), dtype=np.int32) for idx, word_id in enumerate(corpus): one_hot[idx, word_id] = 1 elif corpus.ndim == 2: C = corpus.shape[1] one_hot = np.zeros((N, C, vocab_size), dtype=np.int32) for idx_0, word_ids in enumerate(corpus): for idx_1, word_id in enumerate(word_ids): one_hot[idx_0, idx_1, word_id] = 1 return one_hot

5.1.2 模型类创建

MatMul层:矩阵相乘类class MatMul: # 初始化参数 def __init__(self, W): self.params = [W] self.grads = [np.zeros_like(W)] self.x = None # 正向传播,没有偏置项 def forward(self, x): W, = self.params out = np.dot(x, W) self.x = x return out # 反向传播 def backward(self, dout): W, = self.params dx = np.dot(dout, W.T) dW = np.dot(self.x.T, dout) self.grads[0][...] = dW return dxSoftmax损失类- softmax损失函数

def softmax(x): if x.ndim == 2: x = x - x.max(axis=1, keepdims=True) x = np.exp(x) x /= x.sum(axis=1, keepdims=True) elif x.ndim == 1: x = x - np.max(x) x = np.exp(x) / np.sum(np.exp(x)) return x - 交叉熵损失函数

def cross_entropy_error(y, t): if y.ndim == 1: t = t.reshape(1, t.size) y = y.reshape(1, y.size) # 在监督标签为one-hot-vector的情况下,转换为正确解标签的索引 if t.size == y.size: t = t.argmax(axis=1) batch_size = y.shape[0] return -np.sum(np.log(y[np.arange(batch_size), t] + 1e-7)) / batch_size - CBOW模型类

class SimpleCBOW: def __init__(self, vocab_size, hidden_size): V, H = vocab_size, hidden_size # 初始化权重 W_in = 0.01 * np.random.randn(V, H).astype('f') W_out = 0.01 * np.random.randn(H, V).astype('f') # 生成层 self.in_layer0 = MatMul(W_in) self.in_layer1 = MatMul(W_in) self.out_layer = MatMul(W_out) self.loss_layer = SoftmaxWithLoss() # 将所有的权重和梯度整理到列表中 layers = [self.in_layer0, self.in_layer1, self.out_layer] self.params, self.grads = [], [] for layer in layers: self.params += layer.params self.grads += layer.grads # 将单词的分布式表示设置为成员变量 self.word_vecs = W_in def forward(self, contexts, target): h0 = self.in_layer0.forward(contexts[:, 0]) h1 = self.in_layer1.forward(contexts[:, 1]) h = (h0 + h1) * 0.5 score = self.out_layer.forward(h) loss = self.loss_layer.forward(score, target) return loss def backward(self, dout=1): ds = self.loss_layer.backward(dout) da = self.out_layer.backward(ds) da *= 0.5 self.in_layer1.backward(da) self.in_layer0.backward(da) return None

- softmax损失函数

5.1.3 优化器

-

Adam类class Adam: def __init__(self, lr=0.001, beta1=0.9, beta2=0.999): self.lr = lr self.beta1 = beta1 self.beta2 = beta2 self.iter = 0 self.m = None self.v = None def update(self, params, grads): if self.m is None: self.m, self.v = [], [] for param in params: self.m.append(np.zeros_like(param)) self.v.append(np.zeros_like(param)) self.iter += 1 lr_t = self.lr * np.sqrt(1.0 - self.beta2 ** self.iter) / (1.0 - self.beta1 ** self.iter) for i in range(len(params)): self.m[i] += (1 - self.beta1) * (grads[i] - self.m[i]) self.v[i] += (1 - self.beta2) * (grads[i] ** 2 - self.v[i]) params[i] -= lr_t * self.m[i] / (np.sqrt(self.v[i]) + 1e-7)

5.1.4 训练类

- 相关函数

def remove_duplicate(params, grads): ''' 将参数列表中重复的权重整合为1个, 加上与该权重对应的梯度 ''' params, grads = params[:], grads[:] # copy list while True: find_flg = False L = len(params) for i in range(0, L - 1): for j in range(i + 1, L): # 在共享权重的情况下 if params[i] is params[j]: grads[i] += grads[j] # 加上梯度 find_flg = True params.pop(j) grads.pop(j) # 在作为转置矩阵共享权重的情况下(weight tying) elif params[i].ndim == 2 and params[j].ndim == 2 and \ params[i].T.shape == params[j].shape and np.all(params[i].T == params[j]): grads[i] += grads[j].T find_flg = True params.pop(j) grads.pop(j) if find_flg: break if find_flg: break if not find_flg: break return params, grads def clip_grads(grads, max_norm): total_norm = 0 for grad in grads: total_norm += np.sum(grad ** 2) total_norm = np.sqrt(total_norm) rate = max_norm / (total_norm + 1e-6) if rate < 1: for grad in grads: grad *= rate trainer类class Trainer: def __init__(self, model, optimizer): self.model = model self.optimizer = optimizer self.loss_list = [] self.eval_interval = None self.current_epoch = 0 def fit(self, x, t, max_epoch=10, batch_size=32, max_grad=None, eval_interval=20): data_size = len(x) max_iters = data_size // batch_size self.eval_interval = eval_interval model, optimizer = self.model, self.optimizer total_loss = 0 loss_count = 0 start_time = time.time() for epoch in range(max_epoch): # 打乱 idx = np.random.permutation(np.arange(data_size)) x = x[idx] t = t[idx] for iters in range(max_iters): batch_x = x[iters*batch_size:(iters+1)*batch_size] batch_t = t[iters*batch_size:(iters+1)*batch_size] # 计算梯度,更新参数 loss = model.forward(batch_x, batch_t) model.backward() params, grads = remove_duplicate(model.params, model.grads) # 将共享的权重整合为1个 if max_grad is not None: clip_grads(grads, max_grad) optimizer.update(params, grads) total_loss += loss loss_count += 1 # 评价 if (eval_interval is not None) and (iters % eval_interval) == 0: avg_loss = total_loss / loss_count elapsed_time = time.time() - start_time print('| epoch %d | iter %d / %d | time %d[s] | loss %.2f' % (self.current_epoch + 1, iters + 1, max_iters, elapsed_time, avg_loss)) self.loss_list.append(float(avg_loss)) total_loss, loss_count = 0, 0 self.current_epoch += 1 def plot(self, ylim=None): x = np.arange(len(self.loss_list)) if ylim is not None: plt.ylim(*ylim) plt.plot(x, self.loss_list, label='train') plt.xlabel('iterations (x' + str(self.eval_interval) + ')') plt.ylabel('loss') plt.show()

5.1.5 获取词向量

word_vecs = model.word_vecs

for word_id, word in number_dict.items():

print(word, word_vecs[word_id])

5.1.6 完整代码

import time

import numpy as np

import matplotlib.pyplot as plt

def preprocess(sentences_list,lis = []):

"""

语料库预处理

:param text_list:句子列表

:return:

word_list 是单词列表

word_dict:是单词到单词 ID 的字典

number_dict 是单词 ID 到单词的字典

n_class 单词数

"""

for i in sentences_list:

text = i.split('.')[0].split(' ') # 按照空格分词,统计 sentences的分词的个数

word_list = list({}.fromkeys(text).keys()) # 去重 统计词典个数

lis=lis+word_list

word_list=list({}.fromkeys(lis).keys())

word_dict = {w: i for i, w in enumerate(word_list)}

number_dict = {i: w for i, w in enumerate(word_list)}

n_class = len(word_dict) # 词典的个数,也是softmax 最终分类的个数

return word_list, word_dict, number_dict,n_class

def create_contexts_target(sentences_list, word_dict, window_size=1):

"""

词向量编码函数

:param sentences_list:句子列表

:param word_dict: 字典{'You': 0,,,} key:单词,value:索引,为词向量做准备

:param windows_size: 上下文窗口大小

:return:

contexts:数据集向量

target:标签值

"""

contexts, target = [], [] # 上下文+目标词总列表

for sen in sentences_list:

target_list = sen.split('.')[0].split(' ') # 句子按照空格分词

target_index=[word_dict[i] for i in sen.split(' ')[window_size:-window_size]] # 句子目标标签列表

target+=target_index

text=[] # 上下文列表

for t_index in target_index:

word_list = [] # 句子上下文列表

for t in range(-window_size, window_size + 1): # 上下文窗口

if t == 0: # 目标标签

continue

word_list.append(word_dict[target_list[t_index + t]]) # 获取单词索引并加入列表

text.append(word_list)

contexts+=text

return np.array(contexts), np.array(target)

def convert_one_hot(corpus, vocab_size):

'''

转换为one-hot表示

:param corpus: 单词ID列表(一维或二维的NumPy数组)

:param vocab_size: 词汇个数

:return: one-hot表示(二维或三维的NumPy数组)

'''

N = corpus.shape[0]

if corpus.ndim == 1:

one_hot = np.zeros((N, vocab_size), dtype=np.int32)

for idx, word_id in enumerate(corpus):

one_hot[idx, word_id] = 1

elif corpus.ndim == 2:

C = corpus.shape[1]

one_hot = np.zeros((N, C, vocab_size), dtype=np.int32)

for idx_0, word_ids in enumerate(corpus):

for idx_1, word_id in enumerate(word_ids):

one_hot[idx_0, idx_1, word_id] = 1

return one_hot

def softmax(x):

if x.ndim == 2:

x = x - x.max(axis=1, keepdims=True)

x = np.exp(x)

x /= x.sum(axis=1, keepdims=True)

elif x.ndim == 1:

x = x - np.max(x)

x = np.exp(x) / np.sum(np.exp(x))

return x

def cross_entropy_error(y, t):

if y.ndim == 1:

t = t.reshape(1, t.size)

y = y.reshape(1, y.size)

# 在监督标签为one-hot-vector的情况下,转换为正确解标签的索引

if t.size == y.size:

t = t.argmax(axis=1)

batch_size = y.shape[0]

return -np.sum(np.log(y[np.arange(batch_size), t] + 1e-7)) / batch_size

class MatMul:

def __init__(self, W):

self.params = [W]

self.grads = [np.zeros_like(W)]

self.x = None

def forward(self, x):

W, = self.params

out = np.dot(x, W)

self.x = x

return out

def backward(self, dout):

W, = self.params

dx = np.dot(dout, W.T)

dW = np.dot(self.x.T, dout)

self.grads[0][...] = dW

return dx

class SoftmaxWithLoss:

def __init__(self):

self.params, self.grads = [], []

self.y = None # softmax的输出

self.t = None # 监督标签

def forward(self, x, t):

self.t = t

self.y = softmax(x)

# 在监督标签为one-hot向量的情况下,转换为正确解标签的索引

if self.t.size == self.y.size:

self.t = self.t.argmax(axis=1)

loss = cross_entropy_error(self.y, self.t)

return loss

def backward(self, dout=1):

batch_size = self.t.shape[0]

dx = self.y.copy()

dx[np.arange(batch_size), self.t] -= 1

dx *= dout

dx = dx / batch_size

return dx

class SimpleCBOW:

def __init__(self, vocab_size, hidden_size):

V, H = vocab_size, hidden_size

# 初始化权重

W_in = 0.01 * np.random.randn(V, H).astype('f')

W_out = 0.01 * np.random.randn(H, V).astype('f')

# 生成层

self.in_layer0 = MatMul(W_in)

self.in_layer1 = MatMul(W_in)

self.out_layer = MatMul(W_out)

self.loss_layer = SoftmaxWithLoss()

# 将所有的权重和梯度整理到列表中

layers = [self.in_layer0, self.in_layer1, self.out_layer]

self.params, self.grads = [], []

for layer in layers:

self.params += layer.params

self.grads += layer.grads

# 将单词的分布式表示设置为成员变量

self.word_vecs = W_in

def forward(self, contexts, target):

h0 = self.in_layer0.forward(contexts[:, 0])

h1 = self.in_layer1.forward(contexts[:, 1])

h = (h0 + h1) * 0.5

score = self.out_layer.forward(h)

loss = self.loss_layer.forward(score, target)

return loss

def backward(self, dout=1):

ds = self.loss_layer.backward(dout)

da = self.out_layer.backward(ds)

da *= 0.5

self.in_layer1.backward(da)

self.in_layer0.backward(da)

return None

class Adam:

def __init__(self, lr=0.001, beta1=0.9, beta2=0.999):

self.lr = lr

self.beta1 = beta1

self.beta2 = beta2

self.iter = 0

self.m = None

self.v = None

def update(self, params, grads):

if self.m is None:

self.m, self.v = [], []

for param in params:

self.m.append(np.zeros_like(param))

self.v.append(np.zeros_like(param))

self.iter += 1

lr_t = self.lr * np.sqrt(1.0 - self.beta2 ** self.iter) / (1.0 - self.beta1 ** self.iter)

for i in range(len(params)):

self.m[i] += (1 - self.beta1) * (grads[i] - self.m[i])

self.v[i] += (1 - self.beta2) * (grads[i] ** 2 - self.v[i])

params[i] -= lr_t * self.m[i] / (np.sqrt(self.v[i]) + 1e-7)

def remove_duplicate(params, grads):

'''

将参数列表中重复的权重整合为1个,

加上与该权重对应的梯度

'''

params, grads = params[:], grads[:] # copy list

while True:

find_flg = False

L = len(params)

for i in range(0, L - 1):

for j in range(i + 1, L):

# 在共享权重的情况下

if params[i] is params[j]:

grads[i] += grads[j] # 加上梯度

find_flg = True

params.pop(j)

grads.pop(j)

# 在作为转置矩阵共享权重的情况下(weight tying)

elif params[i].ndim == 2 and params[j].ndim == 2 and \

params[i].T.shape == params[j].shape and np.all(params[i].T == params[j]):

grads[i] += grads[j].T

find_flg = True

params.pop(j)

grads.pop(j)

if find_flg: break

if find_flg: break

if not find_flg: break

return params, grads

def clip_grads(grads, max_norm):

total_norm = 0

for grad in grads:

total_norm += np.sum(grad ** 2)

total_norm = np.sqrt(total_norm)

rate = max_norm / (total_norm + 1e-6)

if rate < 1:

for grad in grads:

grad *= rate

class Trainer:

def __init__(self, model, optimizer):

self.model = model

self.optimizer = optimizer

self.loss_list = []

self.eval_interval = None

self.current_epoch = 0

def fit(self, x, t, max_epoch=10, batch_size=32, max_grad=None, eval_interval=20):

data_size = len(x)

max_iters = data_size // batch_size

self.eval_interval = eval_interval

model, optimizer = self.model, self.optimizer

total_loss = 0

loss_count = 0

start_time = time.time()

for epoch in range(max_epoch):

# 打乱

idx = np.random.permutation(np.arange(data_size))

x = x[idx]

t = t[idx]

for iters in range(max_iters):

batch_x = x[iters*batch_size:(iters+1)*batch_size]

batch_t = t[iters*batch_size:(iters+1)*batch_size]

# 计算梯度,更新参数

loss = model.forward(batch_x, batch_t)

model.backward()

params, grads = remove_duplicate(model.params, model.grads) # 将共享的权重整合为1个

if max_grad is not None:

clip_grads(grads, max_grad)

optimizer.update(params, grads)

total_loss += loss

loss_count += 1

# 评价

if (eval_interval is not None) and (iters % eval_interval) == 0:

avg_loss = total_loss / loss_count

elapsed_time = time.time() - start_time

if (self.current_epoch + 1) % 250 == 0:

print('| epoch %d | iter %d / %d | time %d[s] | loss %.2f'

% (self.current_epoch + 1, iters + 1, max_iters, elapsed_time, avg_loss))

self.loss_list.append(float(avg_loss))

total_loss, loss_count = 0, 0

self.current_epoch += 1

def plot(self, ylim=None):

x = np.arange(len(self.loss_list))

if ylim is not None:

plt.ylim(*ylim)

plt.plot(x, self.loss_list, label='train')

plt.xlabel('iterations (x' + str(self.eval_interval) + ')')

plt.ylabel('loss')

plt.show()

if __name__ == '__main__':

hidden_size = 5

batch_size = 3

max_epoch = 1000

sentences_list = ['You say goodbye and I say hello.'] # 训练数据,'You say goodbye and I say hello.'

word_list, word_dict, number_dict,n_class=preprocess(sentences_list)

print('word_list为: ',word_list)

print('word_dict为:',word_dict)

print('number_dict为:',number_dict)

print('n_class为:',n_class)

contexts, target = create_contexts_target(sentences_list, word_dict) # 获取上下文及标签值

# print('contexts',contexts)

# print('target',target)

# 上下文及标签值转为one-hot编码

target=convert_one_hot(target, n_class)

contexts = convert_one_hot(contexts, n_class)

# print('contexts', contexts)

# print('target', target)

model = SimpleCBOW(n_class, hidden_size)

optimizer = Adam()

trainer = Trainer(model, optimizer)

trainer.fit(contexts, target, max_epoch, batch_size)

trainer.plot()

word_vecs = model.word_vecs

for word_id, word in number_dict.items():

print(word, word_vecs[word_id])

5.2 skip-gram代码简单实现

5.2.1 skip-gram模型类

class SimpleSkipGram:

def __init__(self, vocab_size, hidden_size):

V, H = vocab_size, hidden_size

# 初始化权重

W_in = 0.01 * np.random.randn(V, H).astype('f')

W_out = 0.01 * np.random.randn(H, V).astype('f')

# 生成层

self.in_layer = MatMul(W_in)

self.out_layer = MatMul(W_out)

self.loss_layer1 = SoftmaxWithLoss()

self.loss_layer2 = SoftmaxWithLoss()

# 将所有的权重和梯度整理到列表中

layers = [self.in_layer, self.out_layer]

self.params, self.grads = [], []

for layer in layers:

self.params += layer.params

self.grads += layer.grads

# 将单词的分布式表示设置为成员变量

self.word_vecs = W_in

def forward(self, contexts, target):

h = self.in_layer.forward(target)

s = self.out_layer.forward(h)

l1 = self.loss_layer1.forward(s, contexts[:, 0])

l2 = self.loss_layer2.forward(s, contexts[:, 1])

loss = l1 + l2

return loss

def backward(self, dout=1):

dl1 = self.loss_layer1.backward(dout)

dl2 = self.loss_layer2.backward(dout)

ds = dl1 + dl2

dh = self.out_layer.backward(ds)

self.in_layer.backward(dh)

return None

- 其他代码与

CBOW模型一致- 只是

skip-gram输入是CBOW的输出 skip-gram输出是CBOW的输入

- 只是

5.2.2 完整代码

import time

import numpy as np

import matplotlib.pyplot as plt

def preprocess(sentences_list,lis = []):

"""

语料库预处理

:param text_list:句子列表

:return:

word_list 是单词列表

word_dict:是单词到单词 ID 的字典

number_dict 是单词 ID 到单词的字典

n_class 单词数

"""

for i in sentences_list:

text = i.split('.')[0].split(' ') # 按照空格分词,统计 sentences的分词的个数

word_list = list({}.fromkeys(text).keys()) # 去重 统计词典个数

lis=lis+word_list

word_list=list({}.fromkeys(lis).keys())

word_dict = {w: i for i, w in enumerate(word_list)}

number_dict = {i: w for i, w in enumerate(word_list)}

n_class = len(word_dict) # 词典的个数,也是softmax 最终分类的个数

return word_list, word_dict, number_dict,n_class

def create_contexts_target(sentences_list, word_dict, window_size=1):

"""

词向量编码函数

:param sentences_list:句子列表

:param word_dict: 字典{'You': 0,,,} key:单词,value:索引,为词向量做准备

:param windows_size: 上下文窗口大小

:return:

contexts:数据集向量

target:标签值

"""

contexts, target = [], [] # 上下文+目标词总列表

for sen in sentences_list:

target_list = sen.split('.')[0].split(' ') # 句子按照空格分词

target_index=[word_dict[i] for i in sen.split(' ')[window_size:-window_size]] # 句子目标标签列表

target+=target_index

text=[] # 上下文列表

for t_index in target_index:

word_list = [] # 句子上下文列表

for t in range(-window_size, window_size + 1): # 上下文窗口

if t == 0: continue# 目标标签

word_list.append(word_dict[target_list[t_index + t]]) # 获取单词索引并加入列表

text.append(word_list)

contexts+=text

return np.array(contexts), np.array(target)

def convert_one_hot(corpus, vocab_size):

'''

转换为one-hot表示

:param corpus: 单词ID列表(一维或二维的NumPy数组)

:param vocab_size: 词汇个数

:return: one-hot表示(二维或三维的NumPy数组)

'''

N = corpus.shape[0]

if corpus.ndim == 1:

one_hot = np.zeros((N, vocab_size), dtype=np.int32)

for idx, word_id in enumerate(corpus):

one_hot[idx, word_id] = 1

elif corpus.ndim == 2:

C = corpus.shape[1]

one_hot = np.zeros((N, C, vocab_size), dtype=np.int32)

for idx_0, word_ids in enumerate(corpus):

for idx_1, word_id in enumerate(word_ids):

one_hot[idx_0, idx_1, word_id] = 1

return one_hot

def softmax(x):

if x.ndim == 2:

x = x - x.max(axis=1, keepdims=True)

x = np.exp(x)

x /= x.sum(axis=1, keepdims=True)

elif x.ndim == 1:

x = x - np.max(x)

x = np.exp(x) / np.sum(np.exp(x))

return x

def cross_entropy_error(y, t):

if y.ndim == 1:

t = t.reshape(1, t.size)

y = y.reshape(1, y.size)

# 在监督标签为one-hot-vector的情况下,转换为正确解标签的索引

if t.size == y.size:

t = t.argmax(axis=1)

batch_size = y.shape[0]

return -np.sum(np.log(y[np.arange(batch_size), t] + 1e-7)) / batch_size

class MatMul:

def __init__(self, W):

self.params = [W]

self.grads = [np.zeros_like(W)]

self.x = None

def forward(self, x):

W, = self.params

out = np.dot(x, W)

self.x = x

return out

def backward(self, dout):

W, = self.params

dx = np.dot(dout, W.T)

dW = np.dot(self.x.T, dout)

self.grads[0][...] = dW

return dx

class SoftmaxWithLoss:

def __init__(self):

self.params, self.grads = [], []

self.y = None # softmax的输出

self.t = None # 监督标签

def forward(self, x, t):

self.t = t

self.y = softmax(x)

# 在监督标签为one-hot向量的情况下,转换为正确解标签的索引

if self.t.size == self.y.size:

self.t = self.t.argmax(axis=1)

loss = cross_entropy_error(self.y, self.t)

return loss

def backward(self, dout=1):

batch_size = self.t.shape[0]

dx = self.y.copy()

dx[np.arange(batch_size), self.t] -= 1

dx *= dout

dx = dx / batch_size

return dx

class Adam:

'''

Adam (http://arxiv.org/abs/1412.6980v8)

'''

def __init__(self, lr=0.001, beta1=0.9, beta2=0.999):

self.lr = lr

self.beta1 = beta1

self.beta2 = beta2

self.iter = 0

self.m = None

self.v = None

def update(self, params, grads):

if self.m is None:

self.m, self.v = [], []

for param in params:

self.m.append(np.zeros_like(param))

self.v.append(np.zeros_like(param))

self.iter += 1

lr_t = self.lr * np.sqrt(1.0 - self.beta2 ** self.iter) / (1.0 - self.beta1 ** self.iter)

for i in range(len(params)):

self.m[i] += (1 - self.beta1) * (grads[i] - self.m[i])

self.v[i] += (1 - self.beta2) * (grads[i] ** 2 - self.v[i])

params[i] -= lr_t * self.m[i] / (np.sqrt(self.v[i]) + 1e-7)

def remove_duplicate(params, grads):

'''

将参数列表中重复的权重整合为1个,

加上与该权重对应的梯度

'''

params, grads = params[:], grads[:] # copy list

while True:

find_flg = False

L = len(params)

for i in range(0, L - 1):

for j in range(i + 1, L):

# 在共享权重的情况下

if params[i] is params[j]:

grads[i] += grads[j] # 加上梯度

find_flg = True

params.pop(j)

grads.pop(j)

# 在作为转置矩阵共享权重的情况下(weight tying)

elif params[i].ndim == 2 and params[j].ndim == 2 and \

params[i].T.shape == params[j].shape and np.all(params[i].T == params[j]):

grads[i] += grads[j].T

find_flg = True

params.pop(j)

grads.pop(j)

if find_flg: break

if find_flg: break

if not find_flg: break

return params, grads

def clip_grads(grads, max_norm):

total_norm = 0

for grad in grads:

total_norm += np.sum(grad ** 2)

total_norm = np.sqrt(total_norm)

rate = max_norm / (total_norm + 1e-6)

if rate < 1:

for grad in grads:

grad *= rate

class Trainer:

def __init__(self, model, optimizer):

self.model = model

self.optimizer = optimizer

self.loss_list = []

self.eval_interval = None

self.current_epoch = 0

def fit(self, x, t, max_epoch=10, batch_size=32, max_grad=None, eval_interval=20):

data_size = len(x)

max_iters = data_size // batch_size

self.eval_interval = eval_interval

model, optimizer = self.model, self.optimizer

total_loss = 0

loss_count = 0

start_time = time.time()

for epoch in range(max_epoch):

# 打乱

idx = np.random.permutation(np.arange(data_size))

x = x[idx]

t = t[idx]

for iters in range(max_iters):

batch_x = x[iters*batch_size:(iters+1)*batch_size]

batch_t = t[iters*batch_size:(iters+1)*batch_size]

# 计算梯度,更新参数

loss = model.forward(batch_x, batch_t)

model.backward()

params, grads = remove_duplicate(model.params, model.grads) # 将共享的权重整合为1个

if max_grad is not None:

clip_grads(grads, max_grad)

optimizer.update(params, grads)

total_loss += loss

loss_count += 1

# 评价

if (eval_interval is not None) and (iters % eval_interval) == 0:

avg_loss = total_loss / loss_count

elapsed_time = time.time() - start_time

if (self.current_epoch + 1) % 250 == 0:

print('| epoch %d | iter %d / %d | time %d[s] | loss %.2f'

% (self.current_epoch + 1, iters + 1, max_iters, elapsed_time, avg_loss))

self.loss_list.append(float(avg_loss))

total_loss, loss_count = 0, 0

self.current_epoch += 1

def plot(self, ylim=None):

x = np.arange(len(self.loss_list))

if ylim is not None:

plt.ylim(*ylim)

plt.plot(x, self.loss_list, label='train')

plt.xlabel('iterations (x' + str(self.eval_interval) + ')')

plt.ylabel('loss')

plt.show()

class SimpleSkipGram:

def __init__(self, vocab_size, hidden_size):

V, H = vocab_size, hidden_size

# 初始化权重

W_in = 0.01 * np.random.randn(V, H).astype('f')

W_out = 0.01 * np.random.randn(H, V).astype('f')

# 生成层

self.in_layer = MatMul(W_in)

self.out_layer = MatMul(W_out)

self.loss_layer1 = SoftmaxWithLoss()

self.loss_layer2 = SoftmaxWithLoss()

# 将所有的权重和梯度整理到列表中

layers = [self.in_layer, self.out_layer]

self.params, self.grads = [], []

for layer in layers:

self.params += layer.params

self.grads += layer.grads

# 将单词的分布式表示设置为成员变量

self.word_vecs = W_in

def forward(self, contexts, target):

h = self.in_layer.forward(target)

s = self.out_layer.forward(h)

l1 = self.loss_layer1.forward(s, contexts[:, 0])

l2 = self.loss_layer2.forward(s, contexts[:, 1])

loss = l1 + l2

return loss

def backward(self, dout=1):

dl1 = self.loss_layer1.backward(dout)

dl2 = self.loss_layer2.backward(dout)

ds = dl1 + dl2

dh = self.out_layer.backward(ds)

self.in_layer.backward(dh)

return None

if __name__ == '__main__':

hidden_size = 5

batch_size = 3

max_epoch = 1000

sentences_list = ['You say goodbye and I say hello.'] # 训练数据

word_list, word_dict, number_dict,n_class=preprocess(sentences_list)

print('word_list为: ',word_list)

print('word_dict为:',word_dict)

print('number_dict为:',number_dict)

print('n_class为:',n_class)

contexts, target = create_contexts_target(sentences_list, word_dict) # 获取上下文及标签值

# print('contexts',contexts)

# print('target',target)

# 上下文及标签值转为one-hot编码

target=convert_one_hot(target, n_class)

contexts = convert_one_hot(contexts, n_class)

# print('contexts', contexts)

# print('target', target)

model = SimpleSkipGram(n_class, hidden_size)

optimizer = Adam()

trainer = Trainer(model, optimizer)

trainer.fit(contexts,target,max_epoch, batch_size)

trainer.plot()

word_vecs = model.word_vecs

for word_id, word in number_dict.items():

print(word, word_vecs[word_id])

总结

- 没有看代码之前不知道

输入与输出的数据格式,看完代码可以更好的理解原理 - 模型训练代码没有看,以后有时间再看,主要是不太理解

词向量编码与解码的过程 - 看代码可以更好地理解原理流程

- 现在主要看原理,以后读代码,现在的算法封装的很好,不需要看也可以跑项目,如果时间不急的话,可以深入研究,我急,所以我不研究