The Most Detailed Guide Ever: Installing a K8s Cluster from Binaries

1. Preparation

1.1 Base environment

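- 5 VMs, each with 2 CPU cores, 2 GB RAM, and a 50 GB disk (kernel 3.10 or later)

- CentOS 7.6

- SELinux and firewalld disabled

- Time synchronization

- Base and EPEL yum repositories switched to a mirror

- Swap disabled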

| Name | IP | OS | Remarks |
| --- | --- | --- | --- |
| proxy01 | 192.168.1.141 | CentOS 7.6 | proxy master |
| proxy02 | 192.168.1.142 | CentOS 7.6 | proxy standby |
| k8s01 | 192.168.1.131 | CentOS 7.6 | compute, storage, and management node |
| k8s02 | 192.168.1.132 | CentOS 7.6 | compute, storage, and management node |
| yunwei | 192.168.1.200 | CentOS 7.6 | ops host |

1.2 Installation order of the core components

- bind9 internal DNS

- Harbor private Docker registry

- cfssl certificate signing environment

- Control plane services: etcd, apiserver, controller-manager, scheduler

- Worker node services: kubelet, kube-proxy

1.3 What each component does

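- etcd

A distributed key-value store that holds the cluster state, such as Pod and Service objects.

- apiserver

Serves the Kubernetes API. It is the single entry point to the cluster and coordinates the other components through a RESTful interface. Every create, read, update, delete, and watch operation on an object goes through the apiserver, which then persists the result to etcd.

- controller-manager

Runs the cluster's routine background tasks. Each resource type has its own controller, and the controller-manager manages these controllers.

- scheduler

Picks a node for each newly created Pod according to its scheduling algorithm. The scheduler itself can be deployed anywhere: on the same node as the other control plane components or on a different one.

- kubelet

The control plane's agent on each node. It manages the lifecycle of the containers on its host, including creating containers, mounting Pod volumes, downloading Secrets, and reporting container and node status. kubelet turns each Pod into a set of containers.

- kube-proxy

Implements the Pod network proxy on each node, maintaining the network rules and doing layer-4 load balancing.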

2. Deployment architecture

3. System tuning (run on every machine)

3.1 Configure the Base and EPEL repositories

wget -O /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo

wget -O /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo

3.2 Install basic packages

yum install -y wget net-tools telnet tree nmap sysstat lrzsz dos2unix bind-utils vim less

3.3 Disable the firewall

systemctl stop firewalld

systemctl disable firewalld

3.4 Disable SELinux

setenforce 0

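# setenforce 0 only lasts until reboot; this makes the change permanent (-r enables extended regex so that .+ matches)
sed -ri '/^SELINUX=/s/=.+/=disabled/' /etc/selinux/config

3.5 Set the hostnames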

| Name | Hostname |
| --- | --- |
| proxy01 | proxy-master.host.com |
| proxy02 | proxy-standby.host.com |
| k8s01 | k8s01.host.com |
| k8s02 | k8s02.host.com |
| yunwei | yunwei.host.com |
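The commands that apply these names are not shown. Here is a minimal sketch. It assumes the names are set with the standard CentOS 7 tool `hostnamectl set-hostname`, and `fqdn_for` is a hypothetical helper that only encodes the table above:

```bash
#!/usr/bin/env bash
# Hypothetical helper: look up the FQDN for a short machine name from the table.
fqdn_for() {
  case "$1" in
    proxy01) echo "proxy-master.host.com" ;;
    proxy02) echo "proxy-standby.host.com" ;;
    k8s01)   echo "k8s01.host.com" ;;
    k8s02)   echo "k8s02.host.com" ;;
    yunwei)  echo "yunwei.host.com" ;;
    *)       echo "unknown host: $1" >&2; return 1 ;;
  esac
}
```

On each machine you would then run, for example, `hostnamectl set-hostname "$(fqdn_for proxy01)"` on proxy01. Afterwards, `hostname` should print the FQDN.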

3.6 Time synchronization

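Install ntpdate if it is not already present, then add an hourly sync job with crontab -e:

yum install -y ntpdate

crontab -e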

#ntp

00 * * * * /usr/sbin/ntpdate ntp6.aliyun.com
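Section 1.1 requires swap to be off, but this section gives no command for it. This sketch assumes the common approach: `swapoff -a` turns swap off on the running system, and commenting out the swap entries in /etc/fstab keeps it off after a reboot. It covers only the fstab part. `disable_swap_in_fstab` is a hypothetical helper that takes the file path as an argument, so you can try it on a copy first:

```bash
#!/usr/bin/env bash
# Hypothetical helper: comment out active swap entries in an fstab-style file, in place.
# A line counts as a swap entry when its third field (filesystem type) is "swap";
# lines that already start with "#" are left alone.
disable_swap_in_fstab() {
  local fstab=${1:-/etc/fstab}
  sed -ri 's/^([^#[:space:]]\S*\s+\S+\s+swap(\s.*)?)$/#\1/' "$fstab"
}
```

On a real host you would run `swapoff -a && disable_swap_in_fstab /etc/fstab`. Afterwards, `free -m` should report 0 swap.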

4. Install bind9

4.1 Plan

| Hostname | Role | IP |
| --- | --- | --- |
| proxy-master.host.com | bind9 | 192.168.1.141 |

4.2 Install

yum install -y bind

4.3 Edit the main configuration file

vim /etc/named.conf

options {

listen-on port 53 { 192.168.1.141; }; # this host's IP; also delete the listen-on-v6 line that follows in the default file

directory "/var/named";

dump-file "/var/named/data/cache_dump.db";

statistics-file "/var/named/data/named_stats.txt";

memstatistics-file "/var/named/data/named_mem_stats.txt";

recursing-file "/var/named/data/named.recursing";

secroots-file "/var/named/data/named.secroots";

allow-query { any; }; # allow queries from any host

forwarders { 192.168.1.254; }; # upstream DNS: the VM network gateway

/*

- If you are building an AUTHORITATIVE DNS server, do NOT enable recursion.

- If you are building a RECURSIVE (caching) DNS server, you need to enable

recursion.

- If your recursive DNS server has a public IP address, you MUST enable access

control to limit queries to your legitimate users. Failing to do so will

cause your server to become part of large scale DNS amplification

attacks. Implementing BCP38 within your network would greatly

reduce such attack surface

*/

recursion yes; # resolve other names recursively

dnssec-enable no; # lab environment: disable DNSSEC to save resources

dnssec-validation no; # lab environment: disable DNSSEC to save resources

/* Path to ISC DLV key */

bindkeys-file "/etc/named.root.key";

managed-keys-directory "/var/named/dynamic";

pid-file "/run/named/named.pid";

session-keyfile "/run/named/session.key";

};

logging {

channel default_debug {

file "data/named.run";

severity dynamic;

};

};

zone "." IN {

type hint;

file "named.ca";

};

include "/etc/named.rfc1912.zones";

include "/etc/named.root.key";

4.4 Check the main configuration file

named-checkconf # no output means the configuration is valid

4.5 Configure the zone declarations

vim /etc/named.rfc1912.zones

zone "localhost.localdomain" IN {

type master;

file "named.localhost";

allow-update { none; };

};

zone "localhost" IN {

type master;

file "named.localhost";

allow-update { none; };

};

zone "1.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.ip6.arpa" IN {

type master;

file "named.loopback";

allow-update { none; };

};

zone "1.0.0.127.in-addr.arpa" IN {

type master;

file "named.loopback";

allow-update { none; };

};

zone "0.in-addr.arpa" IN {

type master;

file "named.empty";

allow-update { none; };

};

# Add two zones: od.com is the business domain and host.com is the host domain (both names can be customized)

zone "host.com" IN {

type master;

file "host.com.zone";

allow-update { 192.168.1.141; };

};

zone "od.com" IN {

type master;

file "od.com.zone";

allow-update { 192.168.1.141; };

};
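Both zone files that follow (host.com.zone and od.com.zone) carry an SOA serial in YYYYMMDDNN form. Secondary servers compare serials to decide whether a zone has changed. The sketch below computes the next serial. `next_serial` is a hypothetical helper, and it assumes the two-digit counter never passes 99 in a single day:

```bash
#!/usr/bin/env bash
# Hypothetical helper: compute the next SOA serial in YYYYMMDDNN form.
#   $1: current serial, e.g. 2021062101
#   $2: today's date as YYYYMMDD (defaults to the system date)
next_serial() {
  local current=$1 today=${2:-$(date +%Y%m%d)}
  local day=${current:0:8} count=${current:8:2}
  if [ "$day" = "$today" ]; then
    # Same day: increment the two-digit counter (10# avoids octal parsing of 08/09).
    printf '%s%02d\n' "$today" $((10#$count + 1))
  elif [ "$today" -gt "$day" ]; then
    # New day: restart the counter at 01.
    printf '%s01\n' "$today"
  else
    # Serial is dated in the future: just add 1 so it never goes backwards.
    echo $((current + 1))
  fi
}
```

For example, editing od.com.zone again on 2021-06-21 would turn 2021062101 into 2021062102.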

4.6 Configure the host domain zone file

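The serial on line 4 uses the format YYYYMMDDNN (the date followed by a two-digit counter starting at 01). Increment it every time you edit the zone file.

vim /var/named/host.com.zone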

$ORIGIN host.com.

$TTL 600 ; 10 minutes (default TTL; in a zone file everything after ";" is a comment)

@ IN SOA dns.host.com. dnsadmin.host.com. (

2021062101 ; serial

10800 ; refresh (3 hours) SOA timer parameters

900 ; retry (15 minutes)

604800 ; expire (1 week)

86400 ; minimum (1 day)

)

                NS   dns.host.com. ; indented so the record inherits the owner @ from the SOA line

$TTL 60 ; 1 minute

dns A 192.168.1.141

proxy-master A 192.168.1.141

proxy-standby A 192.168.1.142

k8s01 A 192.168.1.131

k8s02 A 192.168.1.132

yunwei A 192.168.1.200

4.7 Configure the business domain zone file

vim /var/named/od.com.zone

$ORIGIN od.com.

$TTL 600 ; 10 minutes

@ IN SOA dns.od.com. dnsadmin.od.com. (

2021062101 ; serial

10800 ; refresh (3 hours)

900 ; retry (15 minutes)

604800 ; expire (1 week)

86400 ; minimum (1 day)

)

                NS   dns.od.com. ; indented so the record inherits the owner @ from the SOA line

$TTL 60 ; 1 minute

dns A 192.168.1.141

4.8 Check the configuration files

named-checkconf

4.9 Start the bind service

systemctl start named

systemctl enable named

4.10 Test that the name resolves to 192.168.1.141

dig -t A proxy-master.host.com @192.168.1.141 +short

4.11 Point every host at the new DNS server

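The example below is from proxy-master. On every other host, keep its own IPADDR and set DNS1 to 192.168.1.141:

vim /etc/sysconfig/network-scripts/ifcfg-ens33

TYPE="Ethernet"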

PROXY_METHOD="none"

BROWSER_ONLY="no"

BOOTPROTO="none"

DEFROUTE="yes"

IPV4_FAILURE_FATAL="no"

IPV6INIT="yes"

IPV6_AUTOCONF="yes"

IPV6_DEFROUTE="yes"

IPV6_FAILURE_FATAL="no"

IPV6_ADDR_GEN_MODE="stable-privacy"

NAME="ens33"

UUID="219a82ff-07e0-4280-b0a4-0e125cf0aedb"

DEVICE="ens33"

ONBOOT="yes"

IPADDR="192.168.1.141"

PREFIX="24"

GATEWAY="192.168.1.254"

DNS1="192.168.1.141"

IPV6_PRIVACY="no"

4.12 Configure /etc/resolv.conf on every host

vim /etc/resolv.conf

# Generated by NetworkManager

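# "search host.com" lets short names such as k8s01 resolve (and be pinged) directly; it is usually present after the network is restarted
search host.com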

nameserver 192.168.1.141

5. Prepare the root certificate

| Hostname | Role | IP |
| --- | --- | --- |
| yunwei.host.com | certificate signing | 192.168.1.200 |

5.1 Download the certificate tools

wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64 -O /usr/local/bin/cfssl

wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64 -O /usr/local/bin/cfssl-json

wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64 -O /usr/local/bin/cfssl-certinfo

5.2 Make the tools executable

chmod 755 /usr/local/bin/cfssl*

5.3 Issue the root certificate

mkdir /opt/certs/

cd /opt/certs/

vim /opt/certs/ca-csr.json

{

"CN": "vertexcloud",

"hosts": [

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "shanghai",

"L": "shanghai",

"O": "vt",

"OU": "vtc"

}

],

"ca": {

"expiry": "175200h"

}

}

cd /opt/certs/

cfssl gencert -initca ca-csr.json | cfssl-json -bare ca
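The root CA above is requested with "ca": {"expiry": "175200h"}, and ca-config.json later uses the same value for every signing profile. cfssl expresses lifetimes in hours, so a quick conversion helps when choosing a value. Here is a minimal sketch. The helper names are illustrative, and it assumes 365-day years and ignores leap days:

```bash
#!/usr/bin/env bash
# Illustrative helpers: convert a cfssl "expiry" value in hours (e.g. "175200h")
# to whole days and whole 365-day years.
expiry_days()  { local h=${1%h}; echo $(( h / 24 )); }
expiry_years() { local h=${1%h}; echo $(( h / 24 / 365 )); }

echo "175200h = $(expiry_days 175200h) days = $(expiry_years 175200h) years"
```

So 175200h is roughly 20 years and 87600h roughly 10. To confirm the lifetime of an issued certificate, `cfssl-certinfo -cert ca.pem` prints its `not_after` date.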

6. Install Docker

6.1 Plan

| Hostname | Role | IP |
| --- | --- | --- |
| k8s01.host.com | docker | 192.168.1.131 |
| k8s02.host.com | docker | 192.168.1.132 |
| yunwei.host.com | docker | 192.168.1.200 |

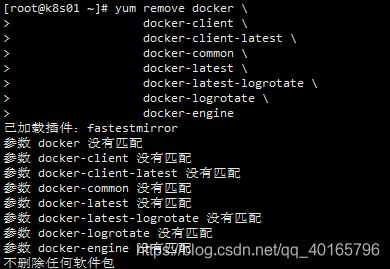

6.2 Remove old versions

yum remove docker \

docker-client \

docker-client-latest \

docker-common \

docker-latest \

docker-latest-logrotate \

docker-logrotate \

docker-engine

6.3 Install Docker with yum: install the yum-utils package (which provides the yum-config-manager utility) and add the stable repository

yum install -y yum-utils

yum-config-manager \

--add-repo \

https://download.docker.com/linux/centos/docker-ce.repo

yum install docker-ce docker-ce-cli containerd.io -y

6.4 Configure Docker

6.4.1 Create the Docker directories

mkdir -p /etc/docker/

mkdir -p /data/docker/

6.4.2 Configure daemon.json

Create the /etc/docker/daemon.json file:

vim /etc/docker/daemon.json

{

"data-root": "/data/docker",

"storage-driver": "overlay2",

"insecure-registries": ["registry.access.redhat.com","quay.io","harbor.od.com"],

"registry-mirrors": ["https://ktfzo0tc.mirror.aliyuncs.com"],

"bip": "172.7.131.1/24",

"exec-opts": ["native.cgroupdriver=systemd"],

"live-restore": true

}
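bip sets the address and subnet of the docker0 bridge, so it has to differ per host. 172.7.131.1/24 suits k8s01 (192.168.1.131): the third octet mirrors the host's last octet, and the /24 falls inside the 172.7.0.0/16 Pod range passed to the controller-manager later as --cluster-cidr. The sketch below assumes the same convention on the other hosts, giving 172.7.132.1/24 on k8s02 and 172.7.200.1/24 on yunwei. That is our reading of the pattern, not something the original spells out, and `bip_for_ip` is a hypothetical helper:

```bash
#!/usr/bin/env bash
# Hypothetical helper: derive a per-host docker0 "bip" from the host IP, assuming the
# 172.7.<last octet>.1/24 convention (k8s01 192.168.1.131 -> 172.7.131.1/24).
bip_for_ip() {
  local last=${1##*.}   # strip everything up to and including the last dot
  if ! [[ $last =~ ^[0-9]+$ ]] || [ "$last" -gt 255 ]; then
    echo "invalid IPv4 address: $1" >&2
    return 1
  fi
  echo "172.7.${last}.1/24"
}
```

Once daemon.json is in place, Docker still has to be started and enabled (`systemctl start docker`, `systemctl enable docker`). `ip addr show docker0` should then show the bip address.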

7. Install Harbor

7.1 Plan

| Hostname | Role | IP |
| --- | --- | --- |
| yunwei.host.com | harbor | 192.168.1.200 |

7.2 Official site

Official site: https://goharbor.io/

Downloads: https://github.com/goharbor/harbor/releases

Note: do not use a version earlier than 1.7.5, because older releases have known vulnerabilities.

Download the harbor-offline-installer-vx.x.x.tgz package (the offline installer).

7.3 Download

wget https://github.com/goharbor/harbor/releases/download/v1.9.4/harbor-offline-installer-v1.9.4.tgz

7.4 Extract

tar -xvf harbor-offline-installer-v1.9.4.tgz -C /opt/

7.5 Rename the directory

cd /opt/

mv harbor/ harbor-v1.9.4

7.6 Create a symlink (makes upgrades easier)

ln -s /opt/harbor-v1.9.4 /opt/harbor

7.7 Configure harbor.yml

vim /opt/harbor/harbor.yml

Set the following keys and leave everything else at its default:

hostname: harbor.od.com
http:
  port: 180
data_volume: /data/harbor
log:
  local:
    location: /data/harbor/logs

Create the /data/harbor/logs directory:

mkdir -p /data/harbor/logs

7.8 Harbor relies on docker-compose for single-host orchestration, so install it

yum install -y docker-compose

7.9 Install

cd /opt/harbor

./install.sh

7.10 Start Harbor at boot

vim /etc/rc.d/rc.local

# start harbor

cd /opt/harbor

docker-compose start

Then make rc.local executable:

chmod 755 /etc/rc.d/rc.local

7.11 Install nginx as a reverse proxy for Harbor

yum -y install nginx

vim /etc/nginx/conf.d/harbor.conf

server {

listen 80;

server_name harbor.od.com;

# allow large request bodies (image layers) so pushes do not fail

client_max_body_size 1000m;

location / {

proxy_pass http://127.0.0.1:180;

}

}

7.12 Start nginx

systemctl start nginx

systemctl enable nginx

7.13 On proxy-master (192.168.1.141), add a DNS record so Harbor can be reached by name

vim /var/named/od.com.zone

$ORIGIN od.com.

$TTL 600 ; 10 minutes

@ IN SOA dns.od.com. dnsadmin.od.com. (

2021062102 ; serial (incremented because this zone file changed)

10800 ; refresh (3 hours)

900 ; retry (15 minutes)

604800 ; expire (1 week)

86400 ; minimum (1 day)

)

                NS   dns.od.com. ; indented so the record inherits the owner @ from the SOA line

$TTL 60 ; 1 minute

dns A 192.168.1.141

harbor A 192.168.1.200

7.14 Restart the DNS service

systemctl restart named.service

7.15 Add a hosts entry on your local computer

Open the C:\Windows\System32\drivers\etc directory, edit the hosts file, and append this line:

192.168.1.200 harbor.od.com

Then open Harbor in a browser on the local computer:

http://harbor.od.com

Username: admin

Password: Harbor12345

7.16 Test Harbor

7.16.1 Create a project named public (the image below is pushed to harbor.od.com/public)

7.16.2 On yunwei (192.168.1.200), pull the nginx image and push it to Harbor

docker pull nginx:1.7.9

docker tag nginx:1.7.9 harbor.od.com/public/nginx:v1.7.9

docker login -u admin harbor.od.com

docker push harbor.od.com/public/nginx:v1.7.9

docker logout

8. Deploy the K8s control plane

8.1 Deploy the etcd cluster (etcd elects its leader by majority vote, so it should run an odd number of members, at least 3)
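The odd-number rule comes from quorum arithmetic. etcd (Raft) needs a strict majority of members, floor(n/2)+1, to elect a leader and commit writes. The small sketch below shows how many member failures each cluster size survives. `quorum` and `fault_tolerance` are illustrative helper names:

```bash
#!/usr/bin/env bash
# Illustrative helpers for Raft/etcd quorum arithmetic.
# Quorum for n members: a strict majority.
quorum() { echo $(( $1 / 2 + 1 )); }
# Members that can fail while a quorum still remains.
fault_tolerance() { echo $(( $1 - ($1 / 2 + 1) )); }

for n in 1 2 3 4 5; do
  printf 'members=%d quorum=%d tolerates=%d\n' "$n" "$(quorum "$n")" "$(fault_tolerance "$n")"
done
# prints:
# members=1 quorum=1 tolerates=0
# members=2 quorum=2 tolerates=0
# members=3 quorum=2 tolerates=1
# members=4 quorum=3 tolerates=1
# members=5 quorum=3 tolerates=2
```

A fourth member raises the quorum without tolerating any extra failure, which is why 3 or 5 members are the usual choices.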

8.1.1 Cluster plan

| Hostname | Role | IP |
| --- | --- | --- |
| proxy-standby | etcd leader | 192.168.1.142 |
| k8s01 | etcd follower | 192.168.1.131 |
| k8s02 | etcd follower | 192.168.1.132 |
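Every etcd startup script in 8.1.4 passes the same --initial-cluster value, built from the plan above as name=https://IP:2380 pairs. The sketch below derives that value so the three scripts cannot drift apart. `initial_cluster` is a hypothetical helper, and the member names follow the etcd-server-7-<last octet> pattern used in those scripts:

```bash
#!/usr/bin/env bash
# Hypothetical helper: build the etcd --initial-cluster value from "name=ip" pairs.
# Peer traffic uses HTTPS on port 2380, as in the startup scripts.
initial_cluster() {
  local out="" pair
  for pair in "$@"; do
    out+="${out:+,}${pair%%=*}=https://${pair#*=}:2380"
  done
  echo "$out"
}
```

Usage: `initial_cluster etcd-server-7-142=192.168.1.142 etcd-server-7-131=192.168.1.131 etcd-server-7-132=192.168.1.132`. Each member's --name must match its entry in this list.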

8.1.2 Issue the etcd certificates (on yunwei, 192.168.1.200)

Create the CA signing configuration /opt/certs/ca-config.json. Its profiles are:

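- server: the certificate a server presents when clients connect, so clients can verify the server's identity

- client: the certificate a client presents when it connects to a server, so the server can verify the client's identity

- peer: the certificate members use when connecting to each other, such as between etcd nodes, so it carries both server auth and client auth

"expiry": "175200h" sets the certificate lifetime to 175200 hours, about 20 years. With a lifetime of only one year, the cluster would go down the moment the certificates expired.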

vim /opt/certs/ca-config.json

{

"signing": {

"default": {

"expiry": "175200h"

},

"profiles": {

"server": {

"expiry": "175200h",

"usages": [

"signing",

"key encipherment",

"server auth"

]

},

"client": {

"expiry": "175200h",

"usages": [

"signing",

"key encipherment",

"client auth"

]

},

"peer": {

"expiry": "175200h",

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

]

}

}

}

}

8.1.3 Create the etcd certificate request /opt/certs/etcd-peer-csr.json

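The hosts list is what matters here. Add every host that may ever run etcd. IP ranges are not allowed, and adding an etcd server later means reissuing the certificate.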

vim /opt/certs/etcd-peer-csr.json

{

"CN": "k8s-etcd",

"hosts": [

"192.168.1.141",

"192.168.1.142",

"192.168.1.131",

"192.168.1.132"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "shanghai",

"L": "shanghai",

"O": "vt",

"OU": "vtc"

}

]

}

① Issue the certificate

cd /opt/certs/

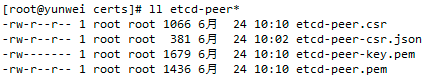

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=peer etcd-peer-csr.json |cfssl-json -bare etcd-peer

② Inspect the certificate files

ll etcd-peer*

8.1.4 Install etcd (on proxy-standby, k8s01, and k8s02)

① Create the etcd user, then download and unpack etcd

useradd -s /sbin/nologin -M etcd

wget https://github.com/etcd-io/etcd/releases/download/v3.1.20/etcd-v3.1.20-linux-amd64.tar.gz

tar -xvf etcd-v3.1.20-linux-amd64.tar.gz

mv etcd-v3.1.20-linux-amd64 /opt/etcd-v3.1.20

ln -s /opt/etcd-v3.1.20 /opt/etcd

mkdir -p /opt/etcd/certs /data/etcd /data/logs/etcd-server

② Copy the certificates to each etcd host

scp 192.168.1.200:/opt/certs/ca.pem /opt/etcd/certs/

scp 192.168.1.200:/opt/certs/etcd-peer.pem /opt/etcd/certs/

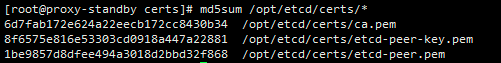

scp 192.168.1.200:/opt/certs/etcd-peer-key.pem /opt/etcd/certs/

③ Verify the certificates (the checksums should match the originals on yunwei)

md5sum /opt/etcd/certs/*

④ Create the startup script

vim /opt/etcd/etcd-server-startup.sh

⑤ Script for proxy-standby (192.168.1.142)

#!/bin/sh

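# listen-peer-urls: address and port for traffic between etcd members
# listen-client-urls: address and port for client traffic to etcd
# quota-backend-bytes: size quota for the backend database
# per-host parameters: name, listen-peer-urls, listen-client-urls, initial-advertise-peer-urls, advertise-client-urls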

WORK_DIR=$(dirname $(readlink -f $0))

[ $? -eq 0 ] && cd $WORK_DIR || exit

/opt/etcd/etcd --name etcd-server-7-142 \

--data-dir /data/etcd/etcd-server \

--listen-peer-urls https://192.168.1.142:2380 \

--listen-client-urls https://192.168.1.142:2379,http://127.0.0.1:2379 \

--quota-backend-bytes 8000000000 \

--initial-advertise-peer-urls https://192.168.1.142:2380 \

--advertise-client-urls https://192.168.1.142:2379,http://127.0.0.1:2379 \

--initial-cluster etcd-server-7-142=https://192.168.1.142:2380,etcd-server-7-131=https://192.168.1.131:2380,etcd-server-7-132=https://192.168.1.132:2380 \

--ca-file ./certs/ca.pem \

--cert-file ./certs/etcd-peer.pem \

--key-file ./certs/etcd-peer-key.pem \

--client-cert-auth \

--trusted-ca-file ./certs/ca.pem \

--peer-ca-file ./certs/ca.pem \

--peer-cert-file ./certs/etcd-peer.pem \

--peer-key-file ./certs/etcd-peer-key.pem \

--peer-client-cert-auth \

--peer-trusted-ca-file ./certs/ca.pem \

--log-output stdout

⑥ Script for k8s01 (192.168.1.131)

#!/bin/sh

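# listen-peer-urls: address and port for traffic between etcd members
# listen-client-urls: address and port for client traffic to etcd
# quota-backend-bytes: size quota for the backend database
# per-host parameters: name, listen-peer-urls, listen-client-urls, initial-advertise-peer-urls, advertise-client-urls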

WORK_DIR=$(dirname $(readlink -f $0))

[ $? -eq 0 ] && cd $WORK_DIR || exit

/opt/etcd/etcd --name etcd-server-7-131 \

--data-dir /data/etcd/etcd-server \

--listen-peer-urls https://192.168.1.131:2380 \

--listen-client-urls https://192.168.1.131:2379,http://127.0.0.1:2379 \

--quota-backend-bytes 8000000000 \

--initial-advertise-peer-urls https://192.168.1.131:2380 \

--advertise-client-urls https://192.168.1.131:2379,http://127.0.0.1:2379 \

--initial-cluster etcd-server-7-142=https://192.168.1.142:2380,etcd-server-7-131=https://192.168.1.131:2380,etcd-server-7-132=https://192.168.1.132:2380 \

--ca-file ./certs/ca.pem \

--cert-file ./certs/etcd-peer.pem \

--key-file ./certs/etcd-peer-key.pem \

--client-cert-auth \

--trusted-ca-file ./certs/ca.pem \

--peer-ca-file ./certs/ca.pem \

--peer-cert-file ./certs/etcd-peer.pem \

--peer-key-file ./certs/etcd-peer-key.pem \

--peer-client-cert-auth \

--peer-trusted-ca-file ./certs/ca.pem \

--log-output stdout

⑦ Script for k8s02 (192.168.1.132)

#!/bin/sh

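# listen-peer-urls: address and port for traffic between etcd members
# listen-client-urls: address and port for client traffic to etcd
# quota-backend-bytes: size quota for the backend database
# per-host parameters: name, listen-peer-urls, listen-client-urls, initial-advertise-peer-urls, advertise-client-urls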

WORK_DIR=$(dirname $(readlink -f $0))

[ $? -eq 0 ] && cd $WORK_DIR || exit

/opt/etcd/etcd --name etcd-server-7-132 \

--data-dir /data/etcd/etcd-server \

--listen-peer-urls https://192.168.1.132:2380 \

--listen-client-urls https://192.168.1.132:2379,http://127.0.0.1:2379 \

--quota-backend-bytes 8000000000 \

--initial-advertise-peer-urls https://192.168.1.132:2380 \

--advertise-client-urls https://192.168.1.132:2379,http://127.0.0.1:2379 \

--initial-cluster etcd-server-7-142=https://192.168.1.142:2380,etcd-server-7-131=https://192.168.1.131:2380,etcd-server-7-132=https://192.168.1.132:2380 \

--ca-file ./certs/ca.pem \

--cert-file ./certs/etcd-peer.pem \

--key-file ./certs/etcd-peer-key.pem \

--client-cert-auth \

--trusted-ca-file ./certs/ca.pem \

--peer-ca-file ./certs/ca.pem \

--peer-cert-file ./certs/etcd-peer.pem \

--peer-key-file ./certs/etcd-peer-key.pem \

--peer-client-cert-auth \

--peer-trusted-ca-file ./certs/ca.pem \

--log-output stdout

⑧ Set permissions

chmod 755 /opt/etcd/etcd-server-startup.sh

chown -R etcd.etcd /opt/etcd/ /data/etcd /data/logs/etcd-server

8.1.5 Start etcd

These processes have to run in the background. They could be started by hand, but this guide uses a process manager, supervisor.

① Install supervisor

yum install -y supervisor

② Start supervisor

systemctl start supervisord

systemctl enable supervisord

③ Create the etcd-server supervisor config

vim /etc/supervisord.d/etcd-server.ini

④ Config for proxy-standby (192.168.1.142)

[program:etcd-server-7-142]

command=/opt/etcd/etcd-server-startup.sh ; the program (relative uses PATH, can take args)

numprocs=1 ; number of processes copies to start (def 1)

directory=/opt/etcd ; directory to cwd to before exec (def no cwd)

autostart=true ; start at supervisord start (default: true)

autorestart=true ; restart at unexpected quit (default: true)

startsecs=30 ; number of secs prog must stay running (def. 1)

startretries=3 ; max # of serial start failures (default 3)

exitcodes=0,2 ; 'expected' exit codes for process (default 0,2)

stopsignal=QUIT ; signal used to kill process (default TERM)

stopwaitsecs=10 ; max num secs to wait b4 SIGKILL (default 10)

user=etcd ; setuid to this UNIX account to run the program

redirect_stderr=true ; redirect proc stderr to stdout (default false)

stdout_logfile=/data/logs/etcd-server/etcd.stdout.log ; stdout log path, NONE for none; default AUTO

stdout_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)

stdout_logfile_backups=5 ; # of stdout logfile backups (default 10)

stdout_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)

stdout_events_enabled=false ; emit events on stdout writes (default false)

⑤ Config for k8s01 (192.168.1.131)

[program:etcd-server-7-131]

command=/opt/etcd/etcd-server-startup.sh ; the program (relative uses PATH, can take args)

numprocs=1 ; number of processes copies to start (def 1)

directory=/opt/etcd ; directory to cwd to before exec (def no cwd)

autostart=true ; start at supervisord start (default: true)

autorestart=true ; restart at unexpected quit (default: true)

startsecs=30 ; number of secs prog must stay running (def. 1)

startretries=3 ; max # of serial start failures (default 3)

exitcodes=0,2 ; 'expected' exit codes for process (default 0,2)

stopsignal=QUIT ; signal used to kill process (default TERM)

stopwaitsecs=10 ; max num secs to wait b4 SIGKILL (default 10)

user=etcd ; setuid to this UNIX account to run the program

redirect_stderr=true ; redirect proc stderr to stdout (default false)

stdout_logfile=/data/logs/etcd-server/etcd.stdout.log ; stdout log path, NONE for none; default AUTO

stdout_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)

stdout_logfile_backups=5 ; # of stdout logfile backups (default 10)

stdout_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)

stdout_events_enabled=false ; emit events on stdout writes (default false)

⑥ Config for k8s02 (192.168.1.132)

[program:etcd-server-7-132]

command=/opt/etcd/etcd-server-startup.sh ; the program (relative uses PATH, can take args)

numprocs=1 ; number of processes copies to start (def 1)

directory=/opt/etcd ; directory to cwd to before exec (def no cwd)

autostart=true ; start at supervisord start (default: true)

autorestart=true ; restart at unexpected quit (default: true)

startsecs=30 ; number of secs prog must stay running (def. 1)

startretries=3 ; max # of serial start failures (default 3)

exitcodes=0,2 ; 'expected' exit codes for process (default 0,2)

stopsignal=QUIT ; signal used to kill process (default TERM)

stopwaitsecs=10 ; max num secs to wait b4 SIGKILL (default 10)

user=etcd ; setuid to this UNIX account to run the program

redirect_stderr=true ; redirect proc stderr to stdout (default false)

stdout_logfile=/data/logs/etcd-server/etcd.stdout.log ; stdout log path, NONE for none; default AUTO

stdout_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)

stdout_logfile_backups=5 ; # of stdout logfile backups (default 10)

stdout_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)

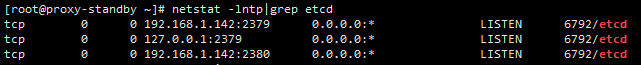

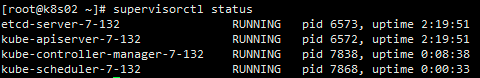

stdout_events_enabled=false ; emit events on stdout writes (default false)supervisorctl update⑦ etcd 进程状态查看

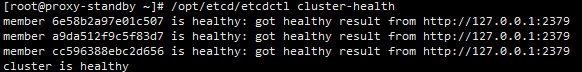

netstat -lntp|grep etcd

⑧ Check etcd cluster health

/opt/etcd/etcdctl cluster-health

⑨ Managing the etcd processes

# etcd-server-7-142 (on 192.168.1.142)

supervisorctl start etcd-server-7-142

supervisorctl stop etcd-server-7-142

supervisorctl restart etcd-server-7-142

supervisorctl status etcd-server-7-142

# etcd-server-7-131 (on 192.168.1.131)

supervisorctl start etcd-server-7-131

supervisorctl stop etcd-server-7-131

supervisorctl restart etcd-server-7-131

supervisorctl status etcd-server-7-131

# etcd-server-7-132 (on 192.168.1.132)

supervisorctl start etcd-server-7-132

supervisorctl stop etcd-server-7-132

supervisorctl restart etcd-server-7-132

supervisorctl status etcd-server-7-132

8.2 Install the apiserver

8.2.1 Cluster plan

| Hostname | Role | IP |
| --- | --- | --- |
| k8s01.host.com | apiserver | 192.168.1.131 |
| k8s02.host.com | apiserver | 192.168.1.132 |

8.2.2 Download the Kubernetes server binaries

- Open the Kubernetes GitHub page: https://github.com/kubernetes/kubernetes

- Open the Tags tab: https://github.com/kubernetes/kubernetes/tags

- Pick the version to download: https://github.com/kubernetes/kubernetes/releases/tag/v1.15.2

- Click CHANGELOG-${version}.md to open the release notes: https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG-1.15.md#downloads-for-v1152

- Download the Server Binaries: https://dl.k8s.io/v1.15.2/kubernetes-server-linux-amd64.tar.gz

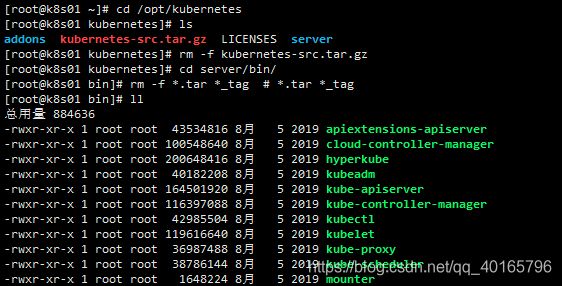

wget https://dl.k8s.io/v1.15.2/kubernetes-server-linux-amd64.tar.gz

tar -vxf kubernetes-server-linux-amd64.tar.gz

mv kubernetes /opt/kubernetes-v1.15.2

ln -s /opt/kubernetes-v1.15.2 /opt/kubernetes

cd /opt/kubernetes

rm -f kubernetes-src.tar.gz

cd server/bin/

rm -f *.tar *_tag # drop the bundled image tarballs and tag files; only the binaries are needed

ll

8.2.3 Issue the client certificate (used by the apiserver to talk to etcd), on yunwei 192.168.1.200

vim /opt/certs/client-csr.json

{

"CN": "k8s-node",

"hosts": [

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "shanghai",

"L": "shanghai",

"O": "vt",

"OU": "vtc"

}

]

}

cd /opt/certs/

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=client client-csr.json |cfssl-json -bare client

8.2.4 Issue the server certificate (used between the apiserver and the other K8s components), on yunwei 192.168.1.200

vim /opt/certs/apiserver-csr.json

{

"CN": "k8s-apiserver",

"hosts": [

"127.0.0.1",

"10.0.0.1",

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local",

"192.168.1.140",

"192.168.1.131",

"192.168.1.132",

"192.168.1.133"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "shanghai",

"L": "shanghai",

"O": "vt",

"OU": "vtc"

}

]

}
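10.0.0.1 is in the hosts list because Kubernetes assigns the built-in kubernetes Service (kubernetes.default) the first address of the service range. The apiserver is started with --service-cluster-ip-range 10.0.0.0/16 in 8.2.7, so if you choose a different range, the certificate must list that range's first address instead. The sketch below computes it and assumes a valid IPv4 CIDR. `first_ip` is a hypothetical helper:

```bash
#!/usr/bin/env bash
# Hypothetical helper: return the first host address of an IPv4 CIDR
# (the network address plus one), e.g. 10.0.0.0/16 -> 10.0.0.1.
first_ip() {
  local net=${1%/*} prefix=${1#*/}
  local a b c d
  IFS=. read -r a b c d <<< "$net"
  local n=$(( (a << 24) | (b << 16) | (c << 8) | d ))
  # Build the netmask; a /0 prefix means no network bits.
  local mask=$(( prefix == 0 ? 0 : (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  n=$(( (n & mask) + 1 ))
  echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"
}
```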

cd /opt/certs/

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=server apiserver-csr.json |cfssl-json -bare apiserver

8.2.5 Distribute the certificates (k8s01 192.168.1.131, k8s02 192.168.1.132)

cd /opt/kubernetes/server/bin/

mkdir certs

scp 192.168.1.200:/opt/certs/apiserver-key.pem /opt/kubernetes/server/bin/certs/

scp 192.168.1.200:/opt/certs/apiserver.pem /opt/kubernetes/server/bin/certs/

scp 192.168.1.200:/opt/certs/ca-key.pem /opt/kubernetes/server/bin/certs/

scp 192.168.1.200:/opt/certs/ca.pem /opt/kubernetes/server/bin/certs/

scp 192.168.1.200:/opt/certs/client-key.pem /opt/kubernetes/server/bin/certs/

scp 192.168.1.200:/opt/certs/client.pem /opt/kubernetes/server/bin/certs/
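etcd-peer-csr.json, client-csr.json, and apiserver-csr.json share the same key and names blocks and differ only in CN and hosts. If you prefer to generate them, here is a minimal sketch. `make_csr` is a hypothetical helper, not part of cfssl. It prints compact JSON, which cfssl parses the same way as the indented files above. ca-csr.json also carries a "ca" expiry block, so keep writing that one by hand:

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of cfssl): print a CSR JSON with the key and
# names blocks used throughout this guide. Usage: make_csr CN [host ...]
make_csr() {
  local cn=$1; shift
  local hosts="" h
  for h in "$@"; do
    hosts+="${hosts:+,}\"$h\""   # comma-separate all entries after the first
  done
  printf '{"CN":"%s","hosts":[%s],"key":{"algo":"rsa","size":2048},' "$cn" "$hosts"
  printf '"names":[{"C":"CN","ST":"shanghai","L":"shanghai","O":"vt","OU":"vtc"}]}\n'
}
```

Example: `make_csr k8s-node > /opt/certs/client-csr.json`, or `make_csr k8s-etcd 192.168.1.141 192.168.1.142 192.168.1.131 192.168.1.132 > /opt/certs/etcd-peer-csr.json`.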

8.2.6 Configure apiserver audit logging (k8s01 192.168.1.131, k8s02 192.168.1.132)

mkdir -p /opt/kubernetes/server/bin/conf

vim /opt/kubernetes/server/bin/conf/audit.yaml

apiVersion: audit.k8s.io/v1beta1 # This is required.
kind: Policy
# Don't generate audit events for all requests in RequestReceived stage.
omitStages:
  - "RequestReceived"
rules:
  # Log pod changes at RequestResponse level
  - level: RequestResponse
    resources:
    - group: ""
      # Resource "pods" doesn't match requests to any subresource of pods,
      # which is consistent with the RBAC policy.
      resources: ["pods"]
  # Log "pods/log", "pods/status" at Metadata level
  - level: Metadata
    resources:
    - group: ""
      resources: ["pods/log", "pods/status"]
  # Don't log requests to a configmap called "controller-leader"
  - level: None
    resources:
    - group: ""
      resources: ["configmaps"]
      resourceNames: ["controller-leader"]
  # Don't log watch requests by the "system:kube-proxy" on endpoints or services
  - level: None
    users: ["system:kube-proxy"]
    verbs: ["watch"]
    resources:
    - group: "" # core API group
      resources: ["endpoints", "services"]
  # Don't log authenticated requests to certain non-resource URL paths.
  - level: None
    userGroups: ["system:authenticated"]
    nonResourceURLs:
    - "/api*" # Wildcard matching.
    - "/version"
  # Log the request body of configmap changes in kube-system.
  - level: Request
    resources:
    - group: "" # core API group
      resources: ["configmaps"]
    # This rule only applies to resources in the "kube-system" namespace.
    # The empty string "" can be used to select non-namespaced resources.
    namespaces: ["kube-system"]
  # Log configmap and secret changes in all other namespaces at the Metadata level.
  - level: Metadata
    resources:
    - group: "" # core API group
      resources: ["secrets", "configmaps"]
  # Log all other resources in core and extensions at the Request level.
  - level: Request
    resources:
    - group: "" # core API group
    - group: "extensions" # Version of group should NOT be included.
  # A catch-all rule to log all other requests at the Metadata level.
  - level: Metadata
    # Long-running requests like watches that fall under this rule will not
    # generate an audit event in RequestReceived.
    omitStages:
      - "RequestReceived"

8.2.7 Configure the apiserver startup script (k8s01 192.168.1.131, k8s02 192.168.1.132)

vim /opt/kubernetes/server/bin/kube-apiserver-startup.sh

#!/bin/bash

WORK_DIR=$(dirname $(readlink -f $0))

[ $? -eq 0 ] && cd $WORK_DIR || exit

/opt/kubernetes/server/bin/kube-apiserver \

--apiserver-count 2 \

--audit-log-path /data/logs/kubernetes/kube-apiserver/audit-log \

--audit-policy-file /opt/kubernetes/server/bin/conf/audit.yaml \

--authorization-mode RBAC \

--client-ca-file ./certs/ca.pem \

--requestheader-client-ca-file ./certs/ca.pem \

--enable-admission-plugins NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,DefaultTolerationSeconds,MutatingAdmissionWebhook,ValidatingAdmissionWebhook,ResourceQuota \

--etcd-cafile ./certs/ca.pem \

--etcd-certfile ./certs/client.pem \

--etcd-keyfile ./certs/client-key.pem \

--etcd-servers https://192.168.1.142:2379,https://192.168.1.131:2379,https://192.168.1.132:2379 \

--service-account-key-file ./certs/ca-key.pem \

--service-cluster-ip-range 10.0.0.0/16 \

--service-node-port-range 3000-29999 \

--target-ram-mb=1024 \

--kubelet-client-certificate ./certs/client.pem \

--kubelet-client-key ./certs/client-key.pem \

--log-dir /data/logs/kubernetes/kube-apiserver \

--tls-cert-file ./certs/apiserver.pem \

--tls-private-key-file ./certs/apiserver-key.pem \

--v 2

chmod 755 /opt/kubernetes/server/bin/kube-apiserver-startup.sh

mkdir -p /data/logs/kubernetes/kube-apiserver/

8.2.8 Supervisor config on k8s01 (192.168.1.131)

vim /etc/supervisord.d/kube-apiserver.ini

[program:kube-apiserver-7-131]

command=/opt/kubernetes/server/bin/kube-apiserver-startup.sh

numprocs=1

directory=/opt/kubernetes/server/bin

autostart=true

autorestart=true

startsecs=30

startretries=3

exitcodes=0,2

stopsignal=QUIT

stopwaitsecs=10

user=root

redirect_stderr=true

stdout_logfile=/data/logs/kubernetes/kube-apiserver/apiserver.stdout.log

stdout_logfile_maxbytes=64MB

stdout_logfile_backups=5

stdout_capture_maxbytes=1MB

stdout_events_enabled=false

supervisorctl update

supervisorctl status

8.2.9 Supervisor config on k8s02 (192.168.1.132)

vim /etc/supervisord.d/kube-apiserver.ini

[program:kube-apiserver-7-132]

command=/opt/kubernetes/server/bin/kube-apiserver-startup.sh

numprocs=1

directory=/opt/kubernetes/server/bin

autostart=true

autorestart=true

startsecs=30

startretries=3

exitcodes=0,2

stopsignal=QUIT

stopwaitsecs=10

user=root

redirect_stderr=true

stdout_logfile=/data/logs/kubernetes/kube-apiserver/apiserver.stdout.log

stdout_logfile_maxbytes=64MB

stdout_logfile_backups=5

stdout_capture_maxbytes=1MB

stdout_events_enabled=false

supervisorctl update

supervisorctl status

8.2.10 Start and stop the apiserver

supervisorctl start kube-apiserver-7-131

supervisorctl stop kube-apiserver-7-131

supervisorctl restart kube-apiserver-7-131

supervisorctl status kube-apiserver-7-131

supervisorctl start kube-apiserver-7-132

supervisorctl stop kube-apiserver-7-132

supervisorctl restart kube-apiserver-7-132

supervisorctl status kube-apiserver-7-132

8.2.11 Configure the apiserver L4 proxy (proxy-master 192.168.1.141, proxy-standby 192.168.1.142)

① Install build dependencies

yum -y install gcc gcc-c++ autoconf automake zlib zlib-devel openssl openssl-devel pcre pcre-devel

② Download nginx

wget http://nginx.org/download/nginx-1.19.0.tar.gz

③ Extract

tar -xvf nginx-1.19.0.tar.gz

mv nginx-1.19.0 /opt/

ln -s /opt/nginx-1.19.0/ /opt/nginx

⑤ Build and install

cd /opt/nginx

./configure --prefix=/usr/local/nginx --with-http_dav_module --with-http_ssl_module --with-http_stub_status_module --with-http_addition_module --with-http_sub_module --with-http_flv_module --with-http_mp4_module --with-stream --with-stream_realip_module --with-stream_ssl_module --with-stream_ssl_preread_module

make && make install

⑥ Configure nginx.conf

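vim /usr/local/nginx/conf/nginx.conf

Append the following at the end of the file (the stream block belongs at the top level, outside the http block):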

stream {

log_format proxy '$time_local|$remote_addr|$upstream_addr|$protocol|$status|'

'$session_time|$upstream_connect_time|$bytes_sent|$bytes_received|'

'$upstream_bytes_sent|$upstream_bytes_received' ;

upstream kube-apiserver {

server 192.168.1.131:6443 max_fails=3 fail_timeout=30s;

server 192.168.1.132:6443 max_fails=3 fail_timeout=30s;

}

server {

listen 7443;

proxy_connect_timeout 2s;

proxy_timeout 900s;

proxy_pass kube-apiserver;

access_log /var/log/nginx/proxy.log proxy;

}

}
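The proxy log_format writes one line per TCP session, with fields separated by |. The third field is $upstream_addr, the apiserver that served the connection. The sketch below checks how connections are spread across the two apiservers. `count_by_upstream` is a hypothetical helper that defaults to the log path configured above. If nginx retried another upstream, $upstream_addr can hold several comma-separated addresses, and this sketch counts such a value as its own key:

```bash
#!/usr/bin/env bash
# Hypothetical helper: count connections per upstream apiserver in a log written
# with the "proxy" log_format ("|"-separated; field 3 is $upstream_addr).
# Output: "<count> <upstream>" lines, busiest upstream first.
count_by_upstream() {
  awk -F'|' 'NF >= 3 { n[$3]++ } END { for (u in n) print n[u], u }' \
    "${1:-/var/log/nginx/proxy.log}" | sort -rn
}
```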

⑦ Create the /var/log/nginx/ directory

mkdir -p /var/log/nginx/

⑧ Create a systemd unit for nginx

vim /usr/lib/systemd/system/nginx.service

with the following content:

[Unit]

Description=nginx service

After=network.target

[Service]

Type=forking

PIDFile=/usr/local/nginx/logs/nginx.pid

ExecStart=/usr/local/nginx/sbin/nginx

ExecReload=/usr/local/nginx/sbin/nginx -s reload

ExecStop=/usr/local/nginx/sbin/nginx -s stop

PrivateTmp=true

[Install]

WantedBy=multi-user.target

⑨ Start nginx

systemctl start nginx

systemctl enable nginx

8.2.12 Make nginx highly available

① Cluster plan

| Hostname | Role | IP |
| --- | --- | --- |
| proxy-master.host.com | keepalived master | 192.168.1.141 |
| proxy-standby.host.com | keepalived backup | 192.168.1.142 |

② Install keepalived

yum -y install keepalived

③ Create the nginx check script

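vim /etc/keepalived/nginx_check.sh

#!/bin/bash
# keepalived port check: exit 1 (check failed) when nothing listens on the given port
CHK_PORT=$1
if [ -n "$CHK_PORT" ]; then
    PORT_PROCESS=$(ss -lnt | grep "$CHK_PORT" | wc -l)
    if [ "$PORT_PROCESS" -eq 0 ]; then
        echo "Port $CHK_PORT Is Not Used,End."
        exit 1
    fi
else
    echo "Check Port Cant Be Empty!"
    # a missing argument must also count as a failed check
    exit 1
fi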

chmod 755 /etc/keepalived/nginx_check.sh
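nginx_check.sh counts lines with `grep $CHK_PORT`, which matches 7443 anywhere in the ss output, so a listener on 17443 would also pass the check. The sketch below is a stricter test that compares only the local port column. `port_listening` is a hypothetical helper that reads `ss -lnt` output on stdin, which also makes it easy to try against sample text:

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed (exit 0) only if some listening socket's local
# port equals $1. Reads "ss -lnt" output on stdin; column 4 is
# "Local Address:Port", and the port is whatever follows the last ":"
# (so "[::]:7443" works as well as "*:7443").
port_listening() {
  awk -v port="$1" '
    NR > 1 { n = split($4, parts, ":"); if (parts[n] == port) found = 1 }
    END    { exit !found }'
}
```

Inside nginx_check.sh, after defining the function, the grep/wc pair could become `ss -lnt | port_listening "$CHK_PORT" || exit 1`.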

④ Master keepalived config (proxy-master 192.168.1.141)

vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

router_id localhost

}

vrrp_script chk_nginx {

script "/etc/keepalived/nginx_check.sh 7443"

interval 2

weight -20

}

vrrp_instance VI_1 {

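state BACKUP # nopreempt below only takes effect when the initial state is BACKUP; priority 100 still makes this node the first VIP holder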

interface ens33

virtual_router_id 50

mcast_src_ip 192.168.1.141

nopreempt # non-preemptive: when the master's service goes down the VIP moves to the backup, and it stays there after the master recovers

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

track_script {

chk_nginx

}

virtual_ipaddress {

192.168.1.140

}

}
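Failover works through priority arithmetic. While chk_nginx fails, keepalived adds the negative weight to the node's priority. With the values used here (100 on proxy-master, 90 on proxy-standby, weight -20), a failed check drops the master to 80, below the backup's 90, so the backup claims 192.168.1.140. The sketch below models that comparison. The helper names are illustrative, and it ignores nopreempt and VRRP's tie-breaking by IP address:

```bash
#!/usr/bin/env bash
# Illustrative helpers: effective VRRP priority is the base priority, plus the
# (negative) track-script weight while the check is failing.
effective_priority() {
  local base=$1 weight=$2 check_ok=$3
  if [ "$check_ok" = "yes" ]; then echo "$base"; else echo $(( base + weight )); fi
}
# The node with the higher effective priority should hold the VIP
# (real VRRP breaks ties by IP address; not modeled here).
vip_owner() {
  local master=$1 backup=$2
  if [ "$master" -gt "$backup" ]; then echo master; else echo backup; fi
}
```

For example, `vip_owner "$(effective_priority 100 -20 no)" 90` prints `backup`.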

⑤ Backup keepalived config (proxy-standby 192.168.1.142)

vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

router_id localhost

}

vrrp_script chk_nginx {

script "/etc/keepalived/nginx_check.sh 7443"

interval 2

weight -20

}

vrrp_instance VI_1 {

state BACKUP

interface ens33

virtual_router_id 50

mcast_src_ip 192.168.1.142

priority 90

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

track_script {

chk_nginx

}

virtual_ipaddress {

192.168.1.140

}

}

⑥ Start keepalived

systemctl start keepalived

systemctl enable keepalived

⑦ Verify

Run ip addr. The NIC on the master keepalived node carries the VIP 192.168.1.140, and the backup's NIC does not. If nginx is stopped on the master, the VIP 192.168.1.140 moves to the backup.

8.3 Install the controller-manager

8.3.1 Cluster plan

| Hostname | Role | IP |
| --- | --- | --- |
| k8s01.host.com | controller-manager | 192.168.1.131 |
| k8s02.host.com | controller-manager | 192.168.1.132 |

8.3.2 Create the controller-manager startup script

vim /opt/kubernetes/server/bin/kube-controller-manager-startup.sh

#!/bin/sh

WORK_DIR=$(dirname $(readlink -f $0))

[ $? -eq 0 ] && cd $WORK_DIR || exit

/opt/kubernetes/server/bin/kube-controller-manager \

--cluster-cidr 172.7.0.0/16 \

--leader-elect true \

--log-dir /data/logs/kubernetes/kube-controller-manager \

--master http://127.0.0.1:8080 \

--service-account-private-key-file ./certs/ca-key.pem \

--service-cluster-ip-range 10.0.0.0/16 \

--root-ca-file ./certs/ca.pem \

--v 2

chmod 755 /opt/kubernetes/server/bin/kube-controller-manager-startup.sh

mkdir -p /data/logs/kubernetes/kube-controller-manager

8.3.3 Configure supervisor to start it

vim /etc/supervisord.d/kube-controller-manager.ini

① Config file on k8s01 192.168.1.131

[program:kube-controller-manager-7-131]

command=/opt/kubernetes/server/bin/kube-controller-manager-startup.sh ; the program (relative uses PATH, can take args)

numprocs=1 ; number of processes copies to start (def 1)

directory=/opt/kubernetes/server/bin ; directory to cwd to before exec (def no cwd)

autostart=true ; start at supervisord start (default: true)

autorestart=true ; restart at unexpected quit (default: true)

startsecs=30 ; number of secs prog must stay running (def. 1)

startretries=3 ; max # of serial start failures (default 3)

exitcodes=0,2 ; 'expected' exit codes for process (default 0,2)

stopsignal=QUIT ; signal used to kill process (default TERM)

stopwaitsecs=10 ; max num secs to wait b4 SIGKILL (default 10)

user=root ; setuid to this UNIX account to run the program

redirect_stderr=true ; redirect proc stderr to stdout (default false)

stdout_logfile=/data/logs/kubernetes/kube-controller-manager/controller.stdout.log ; stdout log path, NONE for none; default AUTO

stdout_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)

stdout_logfile_backups=4 ; # of stdout logfile backups (default 10)

stdout_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)

stdout_events_enabled=false ; emit events on stdout writes (default false)

② Config file on k8s02 192.168.1.132

[program:kube-controller-manager-7-132]

command=/opt/kubernetes/server/bin/kube-controller-manager-startup.sh ; the program (relative uses PATH, can take args)

numprocs=1 ; number of processes copies to start (def 1)

directory=/opt/kubernetes/server/bin ; directory to cwd to before exec (def no cwd)

autostart=true ; start at supervisord start (default: true)

autorestart=true ; restart at unexpected quit (default: true)

startsecs=30 ; number of secs prog must stay running (def. 1)

startretries=3 ; max # of serial start failures (default 3)

exitcodes=0,2 ; 'expected' exit codes for process (default 0,2)

stopsignal=QUIT ; signal used to kill process (default TERM)

stopwaitsecs=10 ; max num secs to wait b4 SIGKILL (default 10)

user=root ; setuid to this UNIX account to run the program

redirect_stderr=true ; redirect proc stderr to stdout (default false)

stdout_logfile=/data/logs/kubernetes/kube-controller-manager/controller.stdout.log ; stdout log path, NONE for none; default AUTO

stdout_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)

stdout_logfile_backups=4 ; # of stdout logfile backups (default 10)

stdout_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)

stdout_events_enabled=false ; emit events on stdout writes (default false)
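The two files above differ only in the `[program:...]` name, and the same template is used again later for kube-scheduler, kubelet, kube-proxy and flanneld. A small generator function (hypothetical, not part of the original setup) can print such a section from the program name, the startup script and the log path; `directory=` is derived from the script's location, and the optional fourth argument sets `stdout_logfile_backups` (4 here, 5 in the kubelet and kube-proxy files).

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a supervisord [program:...] section using the
# same settings as the hand-written files in this guide.
# Usage: supervisor_ini PROGRAM_NAME STARTUP_SCRIPT LOGFILE [LOG_BACKUPS]
supervisor_ini() {
  local program=$1 command=$2 logfile=$3 backups=${4:-4}
  cat <<EOF
[program:${program}]
command=${command}
numprocs=1
directory=$(dirname "$command")
autostart=true
autorestart=true
startsecs=30
startretries=3
exitcodes=0,2
stopsignal=QUIT
stopwaitsecs=10
user=root
redirect_stderr=true
stdout_logfile=${logfile}
stdout_logfile_maxbytes=64MB
stdout_logfile_backups=${backups}
stdout_capture_maxbytes=1MB
stdout_events_enabled=false
EOF
}

# Example for k8s01 (use the -7-132 suffix on k8s02):
# supervisor_ini kube-controller-manager-7-131 \
#   /opt/kubernetes/server/bin/kube-controller-manager-startup.sh \
#   /data/logs/kubernetes/kube-controller-manager/controller.stdout.log \
#   > /etc/supervisord.d/kube-controller-manager.ini
```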

8.3.4 Load it into supervisor

supervisorctl update

8.4 kube-scheduler installation

8.4.1 Cluster plan

| Hostname | Role | IP |

| k8s01.host.com | kube-scheduler | 192.168.1.131 |

| k8s02.host.com | kube-scheduler | 192.168.1.132 |

8.4.2 Create the kube-scheduler startup script

vim /opt/kubernetes/server/bin/kube-scheduler-startup.sh

#!/bin/sh

WORK_DIR=$(dirname $(readlink -f $0))

[ $? -eq 0 ] && cd $WORK_DIR || exit

/opt/kubernetes/server/bin/kube-scheduler \

--leader-elect \

--log-dir /data/logs/kubernetes/kube-scheduler \

--master http://127.0.0.1:8080 \

--v 2

chmod 755 /opt/kubernetes/server/bin/kube-scheduler-startup.sh

mkdir -p /data/logs/kubernetes/kube-scheduler

8.4.3 Configure supervisor to start it

vim /etc/supervisord.d/kube-scheduler.ini

① Config file on k8s01 192.168.1.131

[program:kube-scheduler-7-131]

command=/opt/kubernetes/server/bin/kube-scheduler-startup.sh

numprocs=1

directory=/opt/kubernetes/server/bin

autostart=true

autorestart=true

startsecs=30

startretries=3

exitcodes=0,2

stopsignal=QUIT

stopwaitsecs=10

user=root

redirect_stderr=true

stdout_logfile=/data/logs/kubernetes/kube-scheduler/scheduler.stdout.log

stdout_logfile_maxbytes=64MB

stdout_logfile_backups=4

stdout_capture_maxbytes=1MB

stdout_events_enabled=false

② Config file on k8s02 192.168.1.132

[program:kube-scheduler-7-132]

command=/opt/kubernetes/server/bin/kube-scheduler-startup.sh

numprocs=1

directory=/opt/kubernetes/server/bin

autostart=true

autorestart=true

startsecs=30

startretries=3

exitcodes=0,2

stopsignal=QUIT

stopwaitsecs=10

user=root

redirect_stderr=true

stdout_logfile=/data/logs/kubernetes/kube-scheduler/scheduler.stdout.log

stdout_logfile_maxbytes=64MB

stdout_logfile_backups=4

stdout_capture_maxbytes=1MB

stdout_events_enabled=false

8.4.4 Load it into supervisor

supervisorctl update

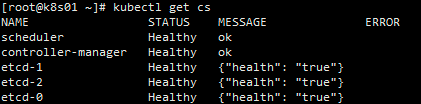

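supervisorctl status

8.5 Check the control-plane status

ln -s /opt/kubernetes/server/bin/kubectl /usr/local/bin/kubectl

kubectl get cs

9. Worker node deployment

9.1 kubelet deployment

9.1.1 Issue the certificate (yunwei 192.168.1.200)

List every IP that may ever host a worker node, so that adding a node later does not require re-issuing the certificate.

vim /opt/certs/kubelet-csr.json

{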

"CN": "k8s-kubelet",

"hosts": [

"127.0.0.1",

"192.168.1.140",

"192.168.1.131",

"192.168.1.132",

"192.168.1.133",

"192.168.1.134",

"192.168.1.135",

"192.168.1.136",

"192.168.1.137",

"192.168.1.138"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "shanghai",

"L": "shanghai",

"O": "vt",

"OU": "vtc"

}

]

}

cd /opt/certs/

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=server kubelet-csr.json | cfssl-json -bare kubelet

9.1.2 Copy the certificates (yunwei 192.168.1.200)

cd /opt/certs/

scp kubelet.pem kubelet-key.pem 192.168.1.131:/opt/kubernetes/server/bin/certs/

scp kubelet.pem kubelet-key.pem 192.168.1.132:/opt/kubernetes/server/bin/certs/

9.1.3 Create the kubelet kubeconfig (k8s01 192.168.1.131)

- Create the /opt/kubernetes/conf directory (the kubeconfig below is written there)

mkdir -p /opt/kubernetes/conf

- Enter the /opt/kubernetes/conf directory

cd /opt/kubernetes/conf

- set-cluster # define the cluster to connect to; several k8s clusters can be defined

kubectl config set-cluster myk8s \

--certificate-authority=/opt/kubernetes/server/bin/certs/ca.pem \

--embed-certs=true \

--server=https://192.168.1.140:7443 \

--kubeconfig=/opt/kubernetes/conf/kubelet.kubeconfig

- set-credentials # define the user, i.e. the client certificate and private key used to log in; several users can be defined

kubectl config set-credentials k8s-node \

--client-certificate=/opt/kubernetes/server/bin/certs/client.pem \

--client-key=/opt/kubernetes/server/bin/certs/client-key.pem \

--embed-certs=true \

--kubeconfig=/opt/kubernetes/conf/kubelet.kubeconfig

- set-context # define a context, i.e. bind a user to a cluster

kubectl config set-context myk8s-context \

--cluster=myk8s \

--user=k8s-node \

--kubeconfig=/opt/kubernetes/conf/kubelet.kubeconfig

- use-context # select the context to use

kubectl config use-context myk8s-context --kubeconfig=/opt/kubernetes/conf/kubelet.kubeconfig

- On k8s02 192.168.1.132, create the /opt/kubernetes/conf/ directory

mkdir -p /opt/kubernetes/conf

- Copy /opt/kubernetes/conf/kubelet.kubeconfig to k8s02 192.168.1.132, so the four steps above do not have to be repeated on k8s02

scp /opt/kubernetes/conf/kubelet.kubeconfig 192.168.1.132:/opt/kubernetes/conf/

9.1.4 Grant permissions to the k8s-node user (k8s01 192.168.1.131)

cd /opt/kubernetes/server/bin/conf/

vim k8s-node.yaml

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: k8s-node
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:node
subjects:
- apiGroup: rbac.authorization.k8s.io
  kind: User
  name: k8s-node

kubectl create -f k8s-node.yaml

kubectl get clusterrolebinding k8s-node

9.1.5 Prepare the pause base image (yunwei 192.168.1.200); this is the Pod infrastructure image, whose container starts first in every Pod and holds the namespaces the other containers join

docker image pull kubernetes/pause

docker image tag kubernetes/pause:latest harbor.od.com/public/pause:latest

docker login -u admin harbor.od.com

docker image push harbor.od.com/public/pause:latest

9.1.6 Create the startup script (k8s01 192.168.1.131, k8s02 192.168.1.132)

vim /opt/kubernetes/server/bin/kubelet-startup.sh

① Startup script on k8s01

#!/bin/sh

WORK_DIR=$(dirname $(readlink -f $0))

[ $? -eq 0 ] && cd $WORK_DIR || exit

/opt/kubernetes/server/bin/kubelet \

--anonymous-auth=false \

--cgroup-driver systemd \

--cluster-dns 10.0.0.2 \

--cluster-domain cluster.local \

--runtime-cgroups=/systemd/system.slice \

--kubelet-cgroups=/systemd/system.slice \

--fail-swap-on="false" \

--client-ca-file ./certs/ca.pem \

--tls-cert-file ./certs/kubelet.pem \

--tls-private-key-file ./certs/kubelet-key.pem \

--hostname-override k8s01.host.com \

--image-gc-high-threshold 20 \

--image-gc-low-threshold 10 \

--kubeconfig ../../conf/kubelet.kubeconfig \

--log-dir /data/logs/kubernetes/kube-kubelet \

--pod-infra-container-image harbor.od.com/public/pause:latest \

--root-dir /data/kubelet

② Startup script on k8s02

#!/bin/sh

WORK_DIR=$(dirname $(readlink -f $0))

[ $? -eq 0 ] && cd $WORK_DIR || exit

/opt/kubernetes/server/bin/kubelet \

--anonymous-auth=false \

--cgroup-driver systemd \

--cluster-dns 10.0.0.2 \

--cluster-domain cluster.local \

--runtime-cgroups=/systemd/system.slice \

--kubelet-cgroups=/systemd/system.slice \

--fail-swap-on="false" \

--client-ca-file ./certs/ca.pem \

--tls-cert-file ./certs/kubelet.pem \

--tls-private-key-file ./certs/kubelet-key.pem \

--hostname-override k8s02.host.com \

--image-gc-high-threshold 20 \

--image-gc-low-threshold 10 \

--kubeconfig ../../conf/kubelet.kubeconfig \

--log-dir /data/logs/kubernetes/kube-kubelet \

--pod-infra-container-image harbor.od.com/public/pause:latest \

--root-dir /data/kubelet

9.1.7 Make the script executable and create the /data/logs/kubernetes/kube-kubelet and /data/kubelet directories

chmod 755 /opt/kubernetes/server/bin/kubelet-startup.sh

mkdir -p /data/logs/kubernetes/kube-kubelet /data/kubelet

9.1.8 Create the supervisor config on k8s01

vim /etc/supervisord.d/kube-kubelet.ini

[program:kube-kubelet-7-131]

command=/opt/kubernetes/server/bin/kubelet-startup.sh

numprocs=1

directory=/opt/kubernetes/server/bin

autostart=true

autorestart=true

startsecs=30

startretries=3

exitcodes=0,2

stopsignal=QUIT

stopwaitsecs=10

user=root

redirect_stderr=true

stdout_logfile=/data/logs/kubernetes/kube-kubelet/kubelet.stdout.log

stdout_logfile_maxbytes=64MB

stdout_logfile_backups=5

stdout_capture_maxbytes=1MB

stdout_events_enabled=false

9.1.9 Create the supervisor config on k8s02 (vim /etc/supervisord.d/kube-kubelet.ini)

[program:kube-kubelet-7-132]

command=/opt/kubernetes/server/bin/kubelet-startup.sh

numprocs=1

directory=/opt/kubernetes/server/bin

autostart=true

autorestart=true

startsecs=30

startretries=3

exitcodes=0,2

stopsignal=QUIT

stopwaitsecs=10

user=root

redirect_stderr=true

stdout_logfile=/data/logs/kubernetes/kube-kubelet/kubelet.stdout.log

stdout_logfile_maxbytes=64MB

stdout_logfile_backups=5

stdout_capture_maxbytes=1MB

stdout_events_enabled=false

9.1.10 Load it into supervisor

supervisorctl update

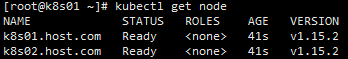

supervisorctl status

9.1.11 Set the node roles

The ROLES column shown by kubectl get nodes is empty; the roles can be set with labels as follows

kubectl get node

#label k8s01 with the node role

kubectl label node k8s01.host.com node-role.kubernetes.io/node=

#label k8s01 with the master role

kubectl label node k8s01.host.com node-role.kubernetes.io/master=

#label k8s02 with the node role

kubectl label node k8s02.host.com node-role.kubernetes.io/node=

#label k8s02 with the master role

kubectl label node k8s02.host.com node-role.kubernetes.io/master=
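Before moving on to kube-proxy it is worth confirming that both nodes report `Ready`. A minimal sketch, assuming the default `kubectl get nodes` columns (NAME, STATUS, ROLES, AGE, VERSION): the function reads that output on stdin, prints every node whose STATUS is not `Ready` (a cordoned node shows `Ready,SchedulingDisabled` and is treated as ready), and returns non-zero if it found any. The function name is illustrative.

```shell
#!/usr/bin/env bash
# Print nodes that are not Ready; exit status 1 if there are any.
# Reads `kubectl get nodes` output on stdin (the header line is skipped).
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" && $2 !~ /^Ready,/ { print $1; bad = 1 }
       END { exit bad }'
}

# Usage on k8s01 or k8s02:
# kubectl get nodes | not_ready_nodes && echo "all nodes Ready"
```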

10. kube-proxy deployment (connects the Pod network with the cluster network)

10.1 Issue the certificate (yunwei 192.168.1.200)

cd /opt/certs/

vim kube-proxy-csr.json

10.1.1 Certificate signing request file

{

"CN": "system:kube-proxy",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "shanghai",

"L": "shanghai",

"O": "vt",

"OU": "vtc"

}

]

}

10.1.2 Issue the certificate

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=client kube-proxy-csr.json | cfssl-json -bare kube-proxy-client

10.1.3 Copy the certificates to k8s01

scp kube-proxy-client-key.pem kube-proxy-client.pem 192.168.1.131:/opt/kubernetes/server/bin/certs/

10.1.4 Copy the certificates to k8s02

scp kube-proxy-client-key.pem kube-proxy-client.pem 192.168.1.132:/opt/kubernetes/server/bin/certs/

10.2 Create the kube-proxy kubeconfig (k8s01 192.168.1.131)

10.2.1 Enter /opt/kubernetes/server/bin/conf

cd /opt/kubernetes/server/bin/conf/

kubectl config set-cluster myk8s \

--certificate-authority=/opt/kubernetes/server/bin/certs/ca.pem \

--embed-certs=true \

--server=https://192.168.1.140:7443 \

--kubeconfig=/opt/kubernetes/conf/kube-proxy.kubeconfig

kubectl config set-credentials kube-proxy \

--client-certificate=/opt/kubernetes/server/bin/certs/kube-proxy-client.pem \

--client-key=/opt/kubernetes/server/bin/certs/kube-proxy-client-key.pem \

--embed-certs=true \

--kubeconfig=/opt/kubernetes/conf/kube-proxy.kubeconfig

kubectl config set-context myk8s-context \

--cluster=myk8s \

--user=kube-proxy \

--kubeconfig=/opt/kubernetes/conf/kube-proxy.kubeconfig

kubectl config use-context myk8s-context --kubeconfig=/opt/kubernetes/conf/kube-proxy.kubeconfig

10.2.2 Copy /opt/kubernetes/conf/kube-proxy.kubeconfig to the /opt/kubernetes/conf/ directory on k8s02

cd /opt/kubernetes/conf/

scp kube-proxy.kubeconfig 192.168.1.132:/opt/kubernetes/conf/

10.3 Load the ipvs kernel modules

10.3.1 Cluster plan

| Hostname | Role | IP |

| k8s01.host.com | kube-proxy | 192.168.1.131 |

| k8s02.host.com | kube-proxy | 192.168.1.132 |

kube-proxy supports three proxy modes: userspace, iptables and ipvs; ipvs generally performs best, especially with many Services.

10.3.2 Create the script that loads the ipvs modules

vim ipvs.sh

#!/bin/bash

ipvs_mods_dir="/usr/lib/modules/$(uname -r)/kernel/net/netfilter/ipvs"

for i in $(ls $ipvs_mods_dir|grep -o "^[^.]*")

do

/sbin/modinfo -F filename $i &>/dev/null

if [ $? -eq 0 ];then

/sbin/modprobe $i

fi

done

chmod 755 ipvs.sh

./ipvs.sh
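Modules loaded by `ipvs.sh` with `modprobe` are gone after a reboot. One way to load them again at boot, assuming the systemd `modules-load.d` mechanism available on CentOS 7, is to list their names (one per line) in a file such as `/etc/modules-load.d/ipvs.conf`. The helper below derives the names the same way `ipvs.sh` does, by stripping everything from the first dot of each file name (for example `ip_vs_rr.ko.xz` becomes `ip_vs_rr`); the function name and the target file are illustrative choices, not part of the original guide.

```shell
#!/usr/bin/env bash
# List kernel module names found in a module directory, one per line,
# by stripping everything from the first "." of each file name.
ipvs_module_names() {
  local dir=${1:-/usr/lib/modules/$(uname -r)/kernel/net/netfilter/ipvs}
  local f name
  for f in "$dir"/*.ko*; do
    [ -e "$f" ] || continue      # no match: the glob stays literal, skip it
    name=${f##*/}                # basename
    echo "${name%%.*}"           # ip_vs_rr.ko.xz -> ip_vs_rr
  done | LC_ALL=C sort -u
}

# Usage (as root, on k8s01 and k8s02):
# ipvs_module_names > /etc/modules-load.d/ipvs.conf
# systemctl restart systemd-modules-load
```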

10.3.3 Check the loaded modules

lsmod | grep ip_vs

10.4 Create the kube-proxy startup script

vim /opt/kubernetes/server/bin/kube-proxy-startup.sh

10.4.1 kube-proxy startup script on k8s01

#!/bin/sh

WORK_DIR=$(dirname $(readlink -f $0))

[ $? -eq 0 ] && cd $WORK_DIR || exit

/opt/kubernetes/server/bin/kube-proxy \

--cluster-cidr 172.7.0.0/16 \

--hostname-override k8s01.host.com \

--proxy-mode=ipvs \

--ipvs-scheduler=nq \

--kubeconfig /opt/kubernetes/conf/kube-proxy.kubeconfig

10.4.2 kube-proxy startup script on k8s02

#!/bin/sh

WORK_DIR=$(dirname $(readlink -f $0))

[ $? -eq 0 ] && cd $WORK_DIR || exit

/opt/kubernetes/server/bin/kube-proxy \

--cluster-cidr 172.7.0.0/16 \

--hostname-override k8s02.host.com \

--proxy-mode=ipvs \

--ipvs-scheduler=nq \

--kubeconfig /opt/kubernetes/conf/kube-proxy.kubeconfig

10.4.3 Make it executable and create the log directory

chmod 755 /opt/kubernetes/server/bin/kube-proxy-startup.sh

mkdir -p /data/logs/kubernetes/kube-proxy

10.4.4 Configure supervisor for kube-proxy

① Create the supervisor config on k8s01

vim /etc/supervisord.d/kube-proxy.ini

[program:kube-proxy-7-131]

command=/opt/kubernetes/server/bin/kube-proxy-startup.sh

numprocs=1

directory=/opt/kubernetes/server/bin

autostart=true

autorestart=true

startsecs=30

startretries=3

exitcodes=0,2

stopsignal=QUIT

stopwaitsecs=10

user=root

redirect_stderr=true

stdout_logfile=/data/logs/kubernetes/kube-proxy/proxy.stdout.log

stdout_logfile_maxbytes=64MB

stdout_logfile_backups=5

stdout_capture_maxbytes=1MB

stdout_events_enabled=false

② Create the supervisor config on k8s02

vim /etc/supervisord.d/kube-proxy.ini

[program:kube-proxy-7-132]

command=/opt/kubernetes/server/bin/kube-proxy-startup.sh

numprocs=1

directory=/opt/kubernetes/server/bin

autostart=true

autorestart=true

startsecs=30

startretries=3

exitcodes=0,2

stopsignal=QUIT

stopwaitsecs=10

user=root

redirect_stderr=true

stdout_logfile=/data/logs/kubernetes/kube-proxy/proxy.stdout.log

stdout_logfile_maxbytes=64MB

stdout_logfile_backups=5

stdout_capture_maxbytes=1MB

stdout_events_enabled=false

③ Load it into supervisor

supervisorctl update

10.5 Verify the service status

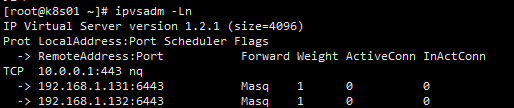

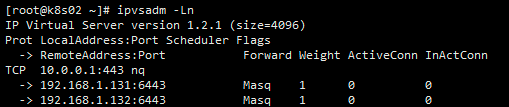

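10.5.1 Install ipvsadm (k8s01, k8s02)

yum install -y ipvsadm

ipvsadm -Ln

11. Verify the cluster

11.1 On any worker node, create a resource manifest (k8s01 192.168.1.131)

This test uses the nginx image uploaded earlier to the harbor registry.

vim nginx-ds.yaml

apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  name: nginx-ds
spec:
  template:
    metadata:
      labels:
        app: nginx-ds
    spec:
      containers:
      - name: my-nginx
        image: harbor.od.com/public/nginx:v1.7.9
        ports:
        - containerPort: 80

11.2 Create the resource (worker nodes must be logged in to harbor first)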


docker login -u admin harbor.od.com

kubectl create -f nginx-ds.yaml

11.3 Check the pod status

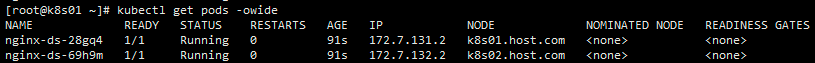

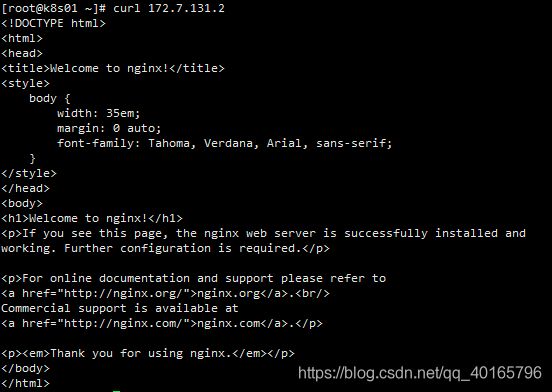

kubectl get pods -owide

11.4 Check that the service is reachable (cross-host access needs the flannel plugin, so from k8s01 only the pod 172.7.131.2 is reachable; to reach 172.7.132.2, run the check on k8s02)

curl 172.7.131.2

12. Core add-ons

12.1 K8s CNI network plugin: Flannel installation

12.1.1 Installation plan

| Hostname | Role | IP |

| k8s01.host.com | flanneld | 192.168.1.131 |

| k8s02.host.com | flanneld | 192.168.1.132 |

12.1.2 Download

wget "https://github.com/coreos/flannel/releases/download/v0.11.0/flannel-v0.11.0-linux-amd64.tar.gz"

12.1.3 Extract

mkdir -p /opt/flannel-v0.11.0

tar -xvf flannel-v0.11.0-linux-amd64.tar.gz -C /opt/flannel-v0.11.0/

ln -s /opt/flannel-v0.11.0 /opt/flannel

12.1.4 Copy the certificates

cd /opt/flannel

mkdir cert

cd cert/

scp 192.168.1.200:/opt/certs/ca.pem /opt/flannel/cert/

scp 192.168.1.200:/opt/certs/client.pem /opt/flannel/cert/

scp 192.168.1.200:/opt/certs/client-key.pem /opt/flannel/cert/

12.1.5 Create the subnet.env file

① On k8s01

vim /opt/flannel/subnet.env

FLANNEL_NETWORK=172.7.0.0/16

FLANNEL_SUBNET=172.7.131.1/24

FLANNEL_MTU=1500

FLANNEL_IPMASQ=false

② On k8s02

vim /opt/flannel/subnet.env

FLANNEL_NETWORK=172.7.0.0/16

FLANNEL_SUBNET=172.7.132.1/24

FLANNEL_MTU=1500

FLANNEL_IPMASQ=false

12.1.6 Create the startup script

vim /opt/flannel/flanneld.sh

① Startup script on k8s01

#!/bin/sh

./flanneld \

--public-ip=192.168.1.131 \

--etcd-endpoints=https://192.168.1.142:2379,https://192.168.1.131:2379,https://192.168.1.132:2379 \

--etcd-keyfile=./cert/client-key.pem \

--etcd-certfile=./cert/client.pem \

--etcd-cafile=./cert/ca.pem \

--iface=ens33 \

--subnet-file=./subnet.env \

--healthz-port=2401

② Startup script on k8s02

#!/bin/sh

./flanneld \

--public-ip=192.168.1.132 \

--etcd-endpoints=https://192.168.1.142:2379,https://192.168.1.131:2379,https://192.168.1.132:2379 \

--etcd-keyfile=./cert/client-key.pem \

--etcd-certfile=./cert/client.pem \

--etcd-cafile=./cert/ca.pem \

--iface=ens33 \

--subnet-file=./subnet.env \

--healthz-port=2401
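The two scripts differ only in `--public-ip`. A hypothetical generator like the one below avoids copy-paste typos in the long `--etcd-endpoints` list; it assumes the etcd endpoints and the `ens33` interface used in this guide (set `ETCD_ENDPOINTS` or pass a second argument if yours differ).

```shell
#!/usr/bin/env bash
# Hypothetical helper: print flanneld.sh for one node.
# Usage: flanneld_script PUBLIC_IP [IFACE]
flanneld_script() {
  local public_ip=$1 iface=${2:-ens33}
  local endpoints=${ETCD_ENDPOINTS:-https://192.168.1.142:2379,https://192.168.1.131:2379,https://192.168.1.132:2379}
  # Single-quoted lines keep their trailing backslash literally;
  # inside double quotes "\\" is needed to emit one backslash.
  printf '%s\n' \
    '#!/bin/sh' \
    './flanneld \' \
    "  --public-ip=${public_ip} \\" \
    "  --etcd-endpoints=${endpoints} \\" \
    '  --etcd-keyfile=./cert/client-key.pem \' \
    '  --etcd-certfile=./cert/client.pem \' \
    '  --etcd-cafile=./cert/ca.pem \' \
    "  --iface=${iface} \\" \
    '  --subnet-file=./subnet.env \' \
    '  --healthz-port=2401'
}

# Usage on k8s01 (use 192.168.1.132 on k8s02):
# flanneld_script 192.168.1.131 > /opt/flannel/flanneld.sh
# chmod 755 /opt/flannel/flanneld.sh
```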

③ Make it executable

chmod 755 /opt/flannel/flanneld.sh

12.1.7 Write the host-gw network config into etcd

① Run the following commands on any etcd node

cd /opt/etcd

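#host-gw is the best-performing backend but only works when all hosts share one layer-2 network; on layer-3 networks or Alibaba Cloud, VxLAN is the better choice (VxLAN also works on layer 2)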

./etcdctl set /coreos.com/network/config '{"Network": "172.7.0.0/16", "Backend": {"Type": "host-gw"}}'

./etcdctl set /coreos.com/network/subnets/172.7.131.0-24 '{"PublicIP":"192.168.1.131","BackendType":"host-gw"}'

./etcdctl set /coreos.com/network/subnets/172.7.132.0-24 '{"PublicIP":"192.168.1.132","BackendType":"host-gw"}'

12.1.8 Create the supervisord config

vim /etc/supervisord.d/flannel.ini

① supervisord config on k8s01

[program:flanneld-7-131]

command=/opt/flannel/flanneld.sh ; the program (relative uses PATH, can take args)

numprocs=1 ; number of processes copies to start (def 1)

directory=/opt/flannel ; directory to cwd to before exec (def no cwd)

autostart=true ; start at supervisord start (default: true)

autorestart=true ; restart at unexpected quit (default: true)

startsecs=30 ; number of secs prog must stay running (def. 1)

startretries=3 ; max # of serial start failures (default 3)

exitcodes=0,2 ; 'expected' exit codes for process (default 0,2)

stopsignal=QUIT ; signal used to kill process (default TERM)

stopwaitsecs=10 ; max num secs to wait b4 SIGKILL (default 10)

user=root ; setuid to this UNIX account to run the program

redirect_stderr=true ; redirect proc stderr to stdout (default false)

stdout_logfile=/data/logs/flanneld/flanneld.stdout.log ; stdout log path, NONE for none; default AUTO

stdout_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)

stdout_logfile_backups=4 ; # of stdout logfile backups (default 10)

stdout_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)

stdout_events_enabled=false ; emit events on stdout writes (default false)

② supervisord config on k8s02

[program:flanneld-7-132]

command=/opt/flannel/flanneld.sh ; the program (relative uses PATH, can take args)

numprocs=1 ; number of processes copies to start (def 1)

directory=/opt/flannel ; directory to cwd to before exec (def no cwd)

autostart=true ; start at supervisord start (default: true)

autorestart=true ; restart at unexpected quit (default: true)

startsecs=30 ; number of secs prog must stay running (def. 1)

startretries=3 ; max # of serial start failures (default 3)

exitcodes=0,2 ; 'expected' exit codes for process (default 0,2)

stopsignal=QUIT ; signal used to kill process (default TERM)

stopwaitsecs=10 ; max num secs to wait b4 SIGKILL (default 10)

user=root ; setuid to this UNIX account to run the program

redirect_stderr=true ; redirect proc stderr to stdout (default false)

stdout_logfile=/data/logs/flanneld/flanneld.stdout.log ; stdout log path, NONE for none; default AUTO

stdout_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)

stdout_logfile_backups=4 ; # of stdout logfile backups (default 10)

stdout_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)

stdout_events_enabled=false ; emit events on stdout writes (default false)

③ Create the log directory

mkdir /data/logs/flanneld/

④ Load it into supervisord

supervisorctl update

12.1.9 Restart docker and flannel, then verify

#restart docker on both k8s01 and k8s02

systemctl restart docker

#restart flannel on both nodes (flanneld-7-131 on k8s01, flanneld-7-132 on k8s02)

supervisorctl restart flanneld-7-131

supervisorctl restart flanneld-7-132

① Create the pod resources

#create nginx-ds.yaml on either k8s01 or k8s02

vim nginx-ds.yaml

apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  name: nginx-ds
spec:
  template:
    metadata:
      labels:
        app: nginx-ds
    spec:
      containers:
      - name: my-nginx
        image: harbor.od.com/public/nginx:v1.7.9
        ports:
        - containerPort: 80

#create the pods

kubectl create -f nginx-ds.yaml

#list the pods

kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-ds-v4cqd 1/1 Running 11 3d1h 172.7.131.2 k8s01.host.com

nginx-ds-z5657 1/1 Running 10 3d1h 172.7.132.2 k8s02.host.com
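Because nginx-ds is a DaemonSet, each node should run exactly one `Running` pod. A small sketch (the function name is illustrative) counts Running pods with a given name prefix per node from `kubectl get pods -o wide`; it assumes the column layout shown above, where STATUS is the third column and NODE the seventh (newer kubectl versions may append text such as `(5m ago)` to RESTARTS, which shifts the columns).

```shell
#!/usr/bin/env bash
# Count Running pods per node whose name starts with PREFIX.
# Reads `kubectl get pods -o wide` output on stdin; prints "NODE COUNT".
pods_per_node() {
  awk -v p="$1" 'NR > 1 && index($1, p) == 1 && $3 == "Running" { n[$7]++ }
                 END { for (node in n) print node, n[node] }' | LC_ALL=C sort
}

# Usage:
# kubectl get pods -o wide | pods_per_node nginx-ds-
```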

② 查看路由

route -n③ k8s01上路由

④ k8s02上路由

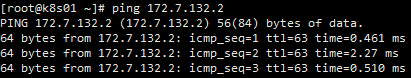

⑤ 验证通信

k8s01上ping 172.7.132.2

k8s02上ping 172.7.131.2
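With the host-gw backend, flannel reaches another node's pod subnet through a plain route whose gateway is that node's IP, for example on k8s01 `172.7.132.0/24 via 192.168.1.132 dev ens33`. If a ping fails, checking for that route is a quick first step. The sketch below (an illustrative helper, assuming iproute2's `ip route` output format rather than `route -n`) reads the routing table on stdin and reports which expected `SUBNET:GATEWAY` pairs are missing.

```shell
#!/usr/bin/env bash
# Report expected host-gw routes that are missing.
# Reads `ip route` output on stdin; arguments are SUBNET:GATEWAY pairs.
# Exit status is 1 if any expected route is missing.
missing_hostgw_routes() {
  local routes pair subnet gw missing=0
  routes=$(cat)
  for pair in "$@"; do
    subnet=${pair%%:*}
    gw=${pair#*:}
    if ! printf '%s\n' "$routes" |
         awk -v s="$subnet" -v g="$gw" '$1 == s && $2 == "via" && $3 == g { found = 1 }
                                        END { exit !found }'; then
      echo "$subnet via $gw"
      missing=1
    fi
  done
  return "$missing"
}

# Usage on k8s01 (on k8s02 check 172.7.131.0/24:192.168.1.131):
# ip route | missing_hostgw_routes 172.7.132.0/24:192.168.1.132
```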

12.1.10 Flannel SNAT optimization

① Install iptables

yum install iptables-services -y

systemctl start iptables

systemctl enable iptables

② View the iptables rules

iptables-save | grep -i postrouting

:POSTROUTING ACCEPT [1:60]

:KUBE-POSTROUTING - [0:0]

-A POSTROUTING -m comment --comment "kubernetes postrouting rules" -j KUBE-POSTROUTING

-A POSTROUTING -s 172.7.131.0/24 ! -o docker0 -j MASQUERADE

-A KUBE-POSTROUTING -m comment --comment "kubernetes service traffic requiring SNAT" -m mark --mark 0x4000/0x4000 -j MASQUERADE

-A KUBE-POSTROUTING -m comment --comment "Kubernetes endpoints dst ip:port, source ip for solving hairpin purpose" -m set --match-set KUBE-LOOP-BACK dst,dst,src -j MASQUERADE


③ Delete the iptables rule

#k8s01

iptables -t nat -D POSTROUTING -s 172.7.131.0/24 ! -o docker0 -j MASQUERADE

#k8s02

iptables -t nat -D POSTROUTING -s 172.7.132.0/24 ! -o docker0 -j MASQUERADE

④ Check the filter rules (unless these REJECT rules are deleted, the pods cannot communicate)

iptables-save | grep -i reject

-A INPUT -j REJECT --reject-with icmp-host-prohibited

-A FORWARD -j REJECT --reject-with icmp-host-prohibited

⑤ Delete the filter rules

iptables -t filter -D INPUT -j REJECT --reject-with icmp-host-prohibited

iptables -t filter -D FORWARD -j REJECT --reject-with icmp-host-prohibited

⑥ Add the new iptables rules

#k8s01 (SNAT only when the source is 172.7.131.0/24 and the destination is outside 172.7.0.0/16, so pods see each other's real IPs)

iptables -t nat -I POSTROUTING -s 172.7.131.0/24 ! -d 172.7.0.0/16 ! -o docker0 -j MASQUERADE

#k8s02 (SNAT only when the source is 172.7.132.0/24 and the destination is outside 172.7.0.0/16, so pods see each other's real IPs)

iptables -t nat -I POSTROUTING -s 172.7.132.0/24 ! -d 172.7.0.0/16 ! -o docker0 -j MASQUERADE
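The new rules differ from the deleted ones only by `! -d 172.7.0.0/16`: traffic from a local pod is still masqueraded when it leaves the cluster network, but pod-to-pod traffic across nodes keeps its real source IP. The following is a simplified model of just the k8s01 rule (an illustration of its match logic in plain bash, not a packet filter), with the node subnet and cluster CIDR as overridable assumptions.

```shell
#!/usr/bin/env bash
# Simplified model of:
#   -A POSTROUTING -s 172.7.131.0/24 ! -d 172.7.0.0/16 ! -o docker0 -j MASQUERADE

ip_to_int() {                      # 192.168.1.131 -> 32-bit integer
  local IFS=. a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

in_cidr() {                        # in_cidr IP NET/BITS
  local addr net bits mask
  addr=$(ip_to_int "$1")
  net=$(ip_to_int "${2%/*}")
  bits=${2#*/}
  mask=$(( bits == 0 ? 0 : (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  [ $(( addr & mask )) -eq $(( net & mask )) ]
}

# snat_applies SRC DST OUT_IFACE [NODE_SUBNET] [CLUSTER_CIDR] -> yes/no
snat_applies() {
  local src=$1 dst=$2 out_if=$3
  local node_subnet=${4:-172.7.131.0/24} cluster=${5:-172.7.0.0/16}
  if in_cidr "$src" "$node_subnet" && ! in_cidr "$dst" "$cluster" \
     && [ "$out_if" != docker0 ]; then
    echo yes
  else
    echo no
  fi
}

snat_applies 172.7.131.2 172.7.132.2 ens33   # pod -> pod on k8s02: prints no
snat_applies 172.7.131.2 8.8.8.8 ens33       # pod -> outside world: prints yes
```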

iptables-save > /etc/sysconfig/iptables

service iptables save

12.2 K8s service discovery add-on: coredns installation

Purpose: automatically map Service names to their CLUSTER-IPs.

12.2.1 Create the nginx config file k8s-yaml.od.com.conf (yunwei 192.168.1.200)

vim /etc/nginx/conf.d/k8s-yaml.od.com.conf

server {

listen 80;

server_name k8s-yaml.od.com;

location / {

autoindex on;

default_type text/plain;

root /data/k8s-yaml;

}

}

① Validate the config

nginx -t

nginx: the configuration file /etc/nginx/nginx.conf syntax is ok

nginx: configuration file /etc/nginx/nginx.conf test is successful

② Create the manifest directory

mkdir -p /data/k8s-yaml/

12.2.2 Edit the DNS zone file (proxy-master 192.168.1.141)

vim /var/named/od.com.zone

$ORIGIN od.com.

$TTL 600 ; 10 minutes

@ IN SOA dns.od.com. dnsadmin.od.com. (

2021062101 ; serial

10800 ; refresh (3 hours)

900 ; retry (15 minutes)

604800 ; expire (1 week)

86400 ; minimum (1 day)

)

NS dns.od.com.

$TTL 60 ; 1 minute

dns A 192.168.1.141

harbor A 192.168.1.200

k8s-yaml A 192.168.1.200
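Whenever the zone file is edited, the SOA serial (here `2021062101`, in the common YYYYMMDDNN convention) should be increased, because secondary servers rely on it to detect changes. A small helper for that convention, assuming the existing serial already follows it and there are fewer than 99 edits per day, might look like this; `named-checkzone` (shipped with bind) can validate the file before named is restarted. The awk/sed lines in the comments are an illustrative way to apply it to this zone file.

```shell
#!/usr/bin/env bash
# Compute the next SOA serial in YYYYMMDDNN form.
# Usage: next_serial CURRENT_SERIAL [TODAY as YYYYMMDD]
next_serial() {
  local current=$1 today=${2:-$(date +%Y%m%d)}
  local cur_date=${current:0:8} cur_seq=${current:8:2}
  if [ "$cur_date" = "$today" ]; then
    # 10# forces base 10 so that "08" and "09" are not read as octal
    printf '%s%02d\n' "$today" $(( 10#$cur_seq + 1 ))
  else
    printf '%s01\n' "$today"
  fi
}

# Usage on proxy-master (illustrative):
# old=$(awk '/; serial/ {print $1; exit}' /var/named/od.com.zone)
# new=$(next_serial "$old")
# sed -i "s/$old\( *; serial\)/$new\1/" /var/named/od.com.zone
# named-checkzone od.com /var/named/od.com.zone && systemctl restart named
```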

① Restart the DNS service

systemctl restart named

② Verify the resolution

dig -t A k8s-yaml.od.com @192.168.1.141 +short

12.2.3 Pull the coredns docker image, tag it and push it to harbor (yunwei 192.168.1.200)

docker pull coredns/coredns:1.6.1

docker tag coredns/coredns:1.6.1 harbor.od.com/public/coredns:v1.6.1

docker push harbor.od.com/public/coredns:v1.6.1

12.2.4 Create the coredns resource manifests (yunwei 192.168.1.200)

① Create the coredns directory

mkdir -p /data/k8s-yaml/coredns

② rbac.yaml

vim /data/k8s-yaml/coredns/rbac.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: coredns
  namespace: kube-system
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
    addonmanager.kubernetes.io/mode: Reconcile
  name: system:coredns
rules:
- apiGroups:
  - ""
  resources:
  - endpoints
  - services
  - pods
  - namespaces
  verbs:
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
    addonmanager.kubernetes.io/mode: EnsureExists
  name: system:coredns
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:coredns
subjects:
- kind: ServiceAccount
  name: coredns
  namespace: kube-system

③ cm.yaml (forward . 192.168.1.141 points at the bind9 DNS server)

vim /data/k8s-yaml/coredns/cm.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: coredns
  namespace: kube-system
data:
  Corefile: |
    .:53 {
        errors
        log
        health
        ready
        kubernetes cluster.local 172.7.0.0/16
        forward . 192.168.1.141
        cache 30
        loop
        reload
        loadbalance
    }

④ dp.yaml

vim /data/k8s-yaml/coredns/dp.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: coredns
  namespace: kube-system
  labels:
    k8s-app: coredns
    kubernetes.io/name: "CoreDNS"
spec:
  replicas: 1
  selector:
    matchLabels:
      k8s-app: coredns
  template:
    metadata:
      labels:
        k8s-app: coredns
    spec:
      priorityClassName: system-cluster-critical
      serviceAccountName: coredns
      containers:
      - name: coredns
        image: harbor.od.com/public/coredns:v1.6.1
        args:
        - -conf
        - /etc/coredns/Corefile
        volumeMounts:
        - name: config-volume
          mountPath: /etc/coredns
        ports:
        - containerPort: 53
          name: dns
          protocol: UDP
        - containerPort: 53
          name: dns-tcp
          protocol: TCP
        - containerPort: 9153
          name: metrics
          protocol: TCP
        livenessProbe:
          httpGet:
            path: /health
            port: 8080
            scheme: HTTP
          initialDelaySeconds: 60
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 5
      dnsPolicy: Default
      volumes:
      - name: config-volume
        configMap:
          name: coredns
          items:
          - key: Corefile
            path: Corefile

⑤ svc.yaml (clusterIP: 10.0.0.2 is the cluster DNS address configured by kubelet's --cluster-dns)

vim /data/k8s-yaml/coredns/svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: coredns
  namespace: kube-system
  labels:
    k8s-app: coredns
    kubernetes.io/cluster-service: "true"
    kubernetes.io/name: "CoreDNS"
spec:
  selector:
    k8s-app: coredns
  clusterIP: 10.0.0.2
  ports:
  - name: dns
    port: 53
    protocol: UDP
  - name: dns-tcp
    port: 53
  - name: metrics
    port: 9153
    protocol: TCP

12.2.5 Apply the .yaml files above (on k8s01 or k8s02)

kubectl apply -f http://k8s-yaml.od.com/coredns/rbac.yaml

kubectl apply -f http://k8s-yaml.od.com/coredns/cm.yaml

kubectl apply -f http://k8s-yaml.od.com/coredns/dp.yaml

kubectl apply -f http://k8s-yaml.od.com/coredns/svc.yaml

12.2.6 Test resolving www.baidu.com

dig -t A www.baidu.com @10.0.0.2 +short

12.2.7 Test Service name resolution

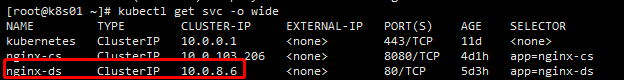

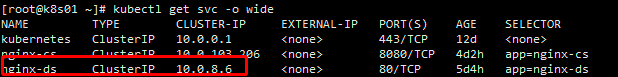

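① Create the pods (nginx-ds.yaml was prepared in step 11; skip the create command if the DaemonSet still exists) and a Service for them. kubectl expose cannot take a DaemonSet, so the ClusterIP Service is created directly; kubectl create service sets the selector app=nginx-ds, which matches the DaemonSet's pod label

kubectl create -f nginx-ds.yaml

kubectl create service clusterip nginx-ds --tcp=80:80

② Check the coredns Service

kubectl get svc -n kube-system

③ dig -t A <service-name>.<namespace>.svc.cluster.local @10.0.0.2 +short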

dig -t A nginx-ds.default.svc.cluster.local. @10.0.0.2 +short
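Inside a pod the short name `nginx-ds` also resolves: for pods with the default `ClusterFirst` DNS policy, kubelet writes `nameserver 10.0.0.2`, a search list of `<namespace>.svc.cluster.local svc.cluster.local cluster.local` (followed by the node's own search domains) and `options ndots:5` into the pod's /etc/resolv.conf. `dig` ignores the search list unless `+search` is given, which is why the test above uses the full name. The sketch below models the order in which a resolver tries candidate names under that configuration; it leaves out the node's own search domains and is an illustration, not a resolver.

```shell
#!/usr/bin/env bash
# Print, in order, the names a resolver would try for NAME in namespace NS,
# assuming the kubelet-generated search list and options ndots:5.
# Usage: candidate_names NAME NS [CLUSTER_DOMAIN] [NDOTS]
candidate_names() {
  local name=$1 ns=$2 domain=${3:-cluster.local} ndots=${4:-5}
  local dots=${name//[!.]/}          # keep only the dots
  local s
  case $name in
    *.) echo "$name"; return 0 ;;    # trailing dot: absolute name, no search
  esac
  # With at least ndots dots the name is tried as-is before the search list.
  [ "${#dots}" -ge "$ndots" ] && echo "$name"
  for s in "$ns.svc.$domain" "svc.$domain" "$domain"; do
    echo "$name.$s"
  done
  [ "${#dots}" -lt "$ndots" ] && echo "$name"
  return 0
}

candidate_names nginx-ds default
```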

12.3 K8s service exposure add-on: Traefik installation (ingress controller)

12.3.1 Download the traefik docker image (yunwei 192.168.1.200)

① Create the traefik directory

mkdir -p /data/k8s-yaml/traefik

② Enter the traefik directory

cd /data/k8s-yaml/traefik/

③ Pull the image

docker pull traefik:v1.7.2-alpine

12.3.2 Tag the traefik image and push it to harbor

① Tag it

docker tag traefik:v1.7.2-alpine harbor.od.com/public/traefik:v1.7.2

② Push it to harbor

docker push harbor.od.com/public/traefik:v1.7.2

12.3.3 Create the traefik resource manifests (yunwei 192.168.1.200)

① rbac.yaml

vim /data/k8s-yaml/traefik/rbac.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: traefik-ingress-controller
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRole
metadata:
  name: traefik-ingress-controller
rules:
- apiGroups:
  - ""
  resources:
  - services
  - endpoints
  - secrets
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - extensions
  resources:
  - ingresses
  verbs:
  - get
  - list
  - watch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: traefik-ingress-controller
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: traefik-ingress-controller
subjects:
- kind: ServiceAccount
  name: traefik-ingress-controller
  namespace: kube-system

② ds.yaml

vim /data/k8s-yaml/traefik/ds.yaml

apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  name: traefik-ingress
  namespace: kube-system
  labels:
    k8s-app: traefik-ingress
spec:
  template:
    metadata:
      labels:
        k8s-app: traefik-ingress
        name: traefik-ingress
    spec:
      serviceAccountName: traefik-ingress-controller
      terminationGracePeriodSeconds: 60
      containers:
      - image: harbor.od.com/public/traefik:v1.7.2
        name: traefik-ingress
        ports:
        - name: controller
          containerPort: 80
          hostPort: 81
        - name: admin-web
          containerPort: 8080
        securityContext:
          capabilities:
            drop:
            - ALL
            add:
            - NET_BIND_SERVICE
        args:
        - --api
        - --kubernetes
        - --logLevel=INFO
        - --insecureskipverify=true
        - --kubernetes.endpoint=https://192.168.1.140:7443
        - --accesslog
        - --accesslog.filepath=/var/log/traefik_access.log
        - --traefiklog
        - --traefiklog.filepath=/var/log/traefik.log
        - --metrics.prometheus

③ ingress.yaml

vim /data/k8s-yaml/traefik/ingress.yaml

apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: traefik-web-ui
  namespace: kube-system
  annotations:
    kubernetes.io/ingress.class: traefik
spec:
  rules:
  - host: traefik.od.com
    http:
      paths:
      - path: /
        backend:
          serviceName: traefik-ingress-service
          servicePort: 8080

④ svc.yaml

vim /data/k8s-yaml/traefik/svc.yaml

kind: Service
apiVersion: v1
metadata:
  name: traefik-ingress-service
  namespace: kube-system
spec:
  selector:
    k8s-app: traefik-ingress
  ports:
  - protocol: TCP
    port: 80
    name: controller
  - protocol: TCP
    port: 8080
    name: admin-web

12.3.4 Apply the resource manifests (on any worker node, k8s01 or k8s02)

① Apply the resource manifests

kubectl apply -f http://k8s-yaml.od.com/traefik/rbac.yaml

kubectl apply -f http://k8s-yaml.od.com/traefik/ds.yaml

kubectl apply -f http://k8s-yaml.od.com/traefik/ingress.yaml

kubectl apply -f http://k8s-yaml.od.com/traefik/svc.yaml

② Check that the containers are running

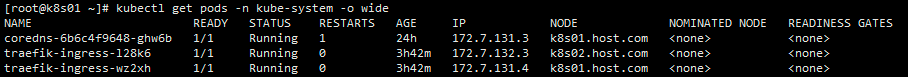

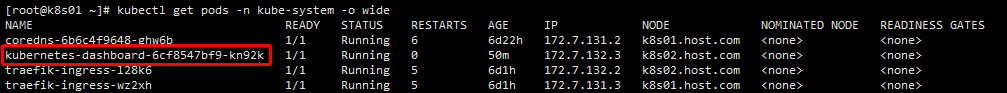

kubectl get pods -n kube-system -o wide

12.3.5 Configure load balancing for traefik (on both proxy-master and proxy-standby)

① Add the include /usr/local/nginx/conf.d/*.conf; directive

vim /usr/local/nginx/conf/nginx.conf

#user nobody;

worker_processes 1;

#error_log logs/error.log;

#error_log logs/error.log notice;

#error_log logs/error.log info;

#pid logs/nginx.pid;

events {

worker_connections 1024;

}

http {

include mime.types;

default_type application/octet-stream;

include /usr/local/nginx/conf.d/*.conf; #added

#log_format main '$remote_addr - $remote_user [$time_local] "$request" '

# '$status $body_bytes_sent "$http_referer" '

# '"$http_user_agent" "$http_x_forwarded_for"';

#access_log logs/access.log main;

sendfile on;

#tcp_nopush on;

#keepalive_timeout 0;

keepalive_timeout 65;

#gzip on;

server {

listen 80;

server_name localhost;

#charset koi8-r;

#access_log logs/host.access.log main;

location / {

root html;

index index.html index.htm;

}

#error_page 404 /404.html;

# redirect server error pages to the static page /50x.html

#

error_page 500 502 503 504 /50x.html;

location = /50x.html {

root html;

}

# proxy the PHP scripts to Apache listening on 127.0.0.1:80

#

#location ~ \.php$ {

# proxy_pass http://127.0.0.1;

#}

# pass the PHP scripts to FastCGI server listening on 127.0.0.1:9000

#

#location ~ \.php$ {

# root html;

# fastcgi_pass 127.0.0.1:9000;

# fastcgi_index index.php;

# fastcgi_param SCRIPT_FILENAME /scripts$fastcgi_script_name;

# include fastcgi_params;

#}

# deny access to .htaccess files, if Apache's document root

# concurs with nginx's one

#

#location ~ /\.ht {

# deny all;

#}

}

# another virtual host using mix of IP-, name-, and port-based configuration

#

#server {

# listen 8000;

# listen somename:8080;

# server_name somename alias another.alias;

# location / {

# root html;

# index index.html index.htm;

# }

#}

# HTTPS server

#

#server {

# listen 443 ssl;

# server_name localhost;

# ssl_certificate cert.pem;

# ssl_certificate_key cert.key;

# ssl_session_cache shared:SSL:1m;

# ssl_session_timeout 5m;

# ssl_ciphers HIGH:!aNULL:!MD5;

# ssl_prefer_server_ciphers on;

# location / {

# root html;

# index index.html index.htm;

# }

#}

}

stream {

log_format proxy '$time_local|$remote_addr|$upstream_addr|$protocol|$status|'

'$session_time|$upstream_connect_time|$bytes_sent|$bytes_received|'

'$upstream_bytes_sent|$upstream_bytes_received' ;

upstream kube-apiserver {

server 192.168.1.131:6443 max_fails=3 fail_timeout=30s;

server 192.168.1.132:6443 max_fails=3 fail_timeout=30s;

}

server {

listen 7443;

proxy_connect_timeout 2s;

proxy_timeout 900s;

proxy_pass kube-apiserver;

access_log /var/log/nginx/proxy.log proxy;

}

}

② Create the conf.d directory

mkdir -p /usr/local/nginx/conf.d/

③ Create the od.com.conf configuration file

vim /usr/local/nginx/conf.d/od.com.conf

upstream default_backend_traefik {
    server 192.168.1.131:81    max_fails=3 fail_timeout=10s;
    server 192.168.1.132:81    max_fails=3 fail_timeout=10s;
}
server {
    server_name *.od.com;

    location / {
        proxy_pass http://default_backend_traefik;
        proxy_set_header Host       $http_host;
        proxy_set_header x-forwarded-for $proxy_add_x_forwarded_for;
    }
}

④ Restart the nginx service

systemctl restart nginx

12.3.6 Configure the DNS server (proxy-master)

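① Add an A record

vim /var/named/od.com.zone

$ORIGIN od.com.
$TTL 600        ; 10 minutes
@       IN SOA  dns.od.com. dnsadmin.od.com. (
                2021062101 ; serial
                10800      ; refresh (3 hours)
                900        ; retry (15 minutes)
                604800     ; expire (1 week)
                86400      ; minimum (1 day)
                )
                NS   dns.od.com.
$TTL 60 ; 1 minute
dns                A    192.168.1.141
harbor             A    192.168.1.200
k8s-yaml           A    192.168.1.200
traefik            A    192.168.1.140

The traefik record is the new entry; it points to the nginx VIP 192.168.1.140. Do not copy this note into the zone file as a "#" comment: BIND zone files use ";" for comments, so the extra text breaks the record and named will fail to start.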

② Restart the DNS service

systemctl restart named

12.3.7 Verify the traefik service

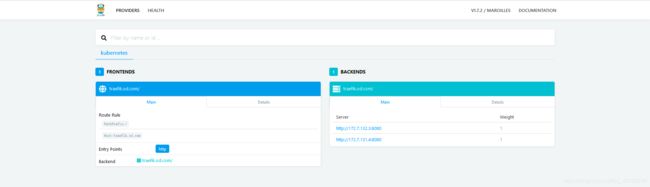

① On any of the machines, verify by accessing http://traefik.od.com
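If the machine you test from does not resolve traefik.od.com through 192.168.1.141 yet, the same request can be sent to the nginx VIP directly by setting the Host header yourself. A minimal sketch using curl; the function name vhost_status is hypothetical, and the addresses are the ones used in this guide.

```shell
# Hypothetical helper: print the HTTP status code returned by ADDR for virtual host HOST.
# ADDR may include a port, e.g. 192.168.1.131:81 to bypass nginx and reach traefik's hostPort.
vhost_status() {
  local addr="$1" host="$2"
  # -s: silent, -o /dev/null: discard the body, -w: print only the status code
  curl -s -o /dev/null -w '%{http_code}' --connect-timeout 5 \
       -H "Host: ${host}" "http://${addr}/"
}
```

vhost_status 192.168.1.140 traefik.od.com goes through nginx, while vhost_status 192.168.1.131:81 traefik.od.com goes straight to traefik on k8s01. As a rough guide: 000 means the connection itself failed, a 502 from the first call but not the second points at the nginx upstream configuration, and a 404 from traefik usually means no Ingress rule matched the host name.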

12.3.8 Access the traefik web UI

① On your local machine, either add the hosts entry "192.168.1.140 traefik.od.com", or change the local machine's DNS server to 192.168.1.141

② Open http://traefik.od.com in a local browser

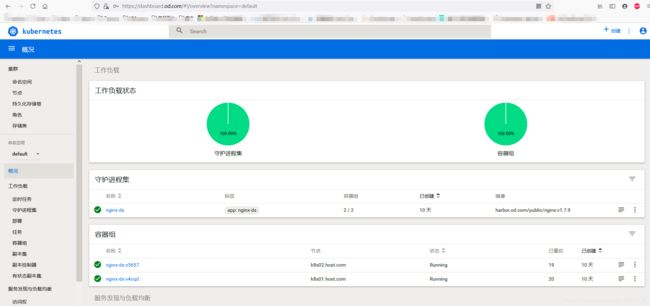
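For the hosts-file option in step ① on a Linux or macOS workstation, the entry can be added idempotently so that repeating the step does not create duplicates. This is a sketch: add_hosts_entry is a hypothetical helper, it writes to /etc/hosts by default (which normally requires root), and it leaves an existing mapping for the same name untouched even if that mapping points to a different IP. On Windows the equivalent file is C:\Windows\System32\drivers\etc\hosts.

```shell
# Hypothetical helper: append "IP NAME" to a hosts file unless NAME is already mapped there.
# FILE defaults to /etc/hosts, which normally requires root to modify.
add_hosts_entry() {
  local ip="$1" name="$2" file="${3:-/etc/hosts}"
  # awk exits 0 if NAME appears as a hostname field on any non-comment line.
  if awk -v n="$name" '
       $1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
       END { if (found) exit 0; exit 1 }' "$file"; then
    echo "${name} is already mapped in ${file}; leaving it unchanged" >&2
    return 0
  fi
  printf '%s %s\n' "$ip" "$name" >> "$file"
}
```

From a root shell: add_hosts_entry 192.168.1.140 traefik.od.com. Running it a second time only prints the "already mapped" message.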

12.4 Installing the K8s dashboard add-on

12.4.1 Pull the dashboard Docker image (yunwei 192.168.1.200)

docker pull k8scn/kubernetes-dashboard-amd64:v1.8.3

12.4.2 Push the dashboard image to the harbor registry

① Tag the image

docker tag k8scn/kubernetes-dashboard-amd64:v1.8.3 harbor.od.com/public/dashboard:v1.8.3

② Push it to the harbor registry

docker push harbor.od.com/public/dashboard:v1.8.3

12.4.3 Create the dashboard resource manifests (yunwei 192.168.1.200)

① Create the dashboard directory

mkdir -p /data/k8s-yaml/dashboard

② dashboard-rbac.yaml

vim /data/k8s-yaml/dashboard/dashboard-rbac.yaml

kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
    addonmanager.kubernetes.io/mode: Reconcile
  name: kubernetes-dashboard-minimal
  namespace: kube-system
rules:
  # Allow Dashboard to get, update and delete Dashboard exclusive secrets.
- apiGroups: [""]
  resources: ["secrets"]
  resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs"]
  verbs: ["get", "update", "delete"]
  # Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map.
- apiGroups: [""]
  resources: ["configmaps"]
  resourceNames: ["kubernetes-dashboard-settings"]
  verbs: ["get", "update"]
  # Allow Dashboard to get metrics from heapster.
- apiGroups: [""]
  resources: ["services"]
  resourceNames: ["heapster"]
  verbs: ["proxy"]
- apiGroups: [""]
  resources: ["services/proxy"]
  resourceNames: ["heapster", "http:heapster:", "https:heapster:"]
  verbs: ["get"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: kubernetes-dashboard-minimal
  namespace: kube-system
  labels:
    k8s-app: kubernetes-dashboard
    addonmanager.kubernetes.io/mode: Reconcile
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: kubernetes-dashboard-minimal
subjects:
- kind: ServiceAccount
  name: kubernetes-dashboard
  namespace: kube-system

③ dashboard-deployment.yaml

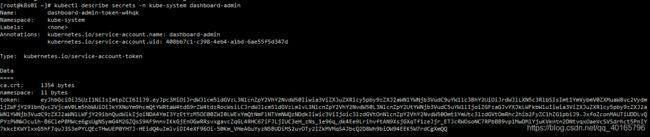

vim /data/k8s-yaml/dashboard/dashboard-deployment.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
    addonmanager.kubernetes.io/mode: Reconcile
  name: kubernetes-dashboard
  namespace: kube-system
---

apiVersion: apps/v1