(Shell batch edition) Highly available binary installation of a k8s v1.23.5 cluster with the containerd container runtime

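Contents

Chapter 1 Pre-installation preparation

1.1 Node planning

1.2 Configure NTP

1.3 Install a DNS service with bind

1.4 Change the host DNS

1.5 Install the runtime environment and dependencies

1.5.1 Install the Docker runtime

1.5.2 Install the containerd runtime

1.6 Install the Harbor registry

1.7 Configure high availability

Chapter 2 Install the k8s cluster masters

2.1 Download the binary installation files

2.2 Generate certificates

2.2.1 Generate the etcd certificates

2.2.2 Generate the k8s certificates

2.3 Install etcd

2.4 Install apiserver

2.5 Install controller

2.6 Install scheduler

Chapter 3 Install the k8s cluster nodes

3.1 TLS Bootstrapping configuration

3.2 Node certificate configuration

3.3 Install kubelet

3.4 Install kube-proxy

Chapter 4 Install the k8s cluster add-ons

4.1 Install calico

4.2 Install coredns

4.3 Install metrics-server

4.4 Install ingress-nginx

4.5 Configure StorageClass dynamic storage

4.6 Install KubeSphere cluster management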

Chapter 1 Pre-installation preparation

1.1 Node planning

Operating system: AlmaLinux (RHEL 8)

m1,node1 : 192.168.44.201 hostname: k8s-

m2,node2 : 192.168.44.202 hostname: k8s-

m3,node3 : 192.168.44.203 hostname: k8s-

| IP / CIDR | Purpose |
| --- | --- |
| 192.168.44.201 | apiserver,controller,scheduler,kubelet,kube-proxy,flannel |
| 192.168.44.202 | apiserver,controller,scheduler,kubelet,kube-proxy,flannel |
| 192.168.44.203 | apiserver,controller,scheduler,kubelet,kube-proxy,flannel |
| 192.168.44.200 | vip |
| 10.0.0.0/16 | service network |
| 10.244.0.0/16 | pod network |
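The batch loops in this guide read node IPs from the first column of ~/host_list.txt. The original content of that file is not preserved here, so the following is only a minimal sketch: it assumes one node per line with the IP first, and the second column (m1, m2, m3) is a hypothetical label that the loops never read.

```shell
# Hypothetical host list written to a temp directory so nothing real is overwritten.
workdir=$(mktemp -d)
cat >"${workdir}/host_list.txt" <<'EOF'
192.168.44.201 m1
192.168.44.202 m2
192.168.44.203 m3
EOF

# Same extraction the guide uses in every "for i in $(cat host_list.txt |awk ...)" loop.
hosts=$(awk '{print $1}' "${workdir}/host_list.txt")
echo "${hosts}"
```

Filtering out the local host, as several loops do, is then just a matter of appending grep -v "192.168.44.201" to the pipeline.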

#Configure passwordless SSH login (on 201)

cd ~

cat >host_list.txt < /etc/sysctl.d/k8s.conf <

1.2 Configure NTP

#Server side (201)

yum install chrony -y

vim /etc/chrony.conf

allow 192.168.44.0/24

systemctl enable chronyd.service

systemctl restart chronyd.service

chronyc sources

#Client side: all master and node hosts (202, 203)

#Batch, run from 201

cd ~

for i in $(cat host_list.txt |awk '{print $1}');\

do \

ssh root@${i} yum install chrony -y;\

done

#Edit on a single host (201)

cp /etc/chrony.conf /tmp

vim /tmp/chrony.conf

server 192.168.44.201 iburst

#Distribute from 201

cd ~

for i in $(cat host_list.txt |awk '{print $1}'|grep -v "192.168.44.201");\

do \

rsync -avzP /tmp/chrony.conf root@${i}:/etc/;\

ssh root@${i} cat /etc/chrony.conf;\

ssh root@${i} echo "===================================================";\

ssh root@${i} echo "${i}";\

ssh root@${i} echo "===================================================";\

ssh root@${i} echo;\

done

#Batch restart the service, run from 201

cd ~

for i in $(cat host_list.txt |awk '{print $1}'|grep -v "192.168.44.201");\

do \

ssh root@${i} systemctl enable chronyd.service;\

ssh root@${i} systemctl restart chronyd.service;\

ssh root@${i} chronyc sources;\

ssh root@${i} echo "===================================================";\

ssh root@${i} echo "${i}";\

ssh root@${i} echo "===================================================";\

ssh root@${i} echo;\

done
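Each ssh root@${i} ... line in these loops opens a separate SSH session, so state such as the working directory does not carry over from one line to the next. A minimal sketch of a helper that sends several commands over a single session follows; run_on and DRY_RUN are hypothetical names introduced only for this illustration.

```shell
# Hypothetical helper: run several commands on one host over a single SSH session.
# With DRY_RUN=1 it prints the ssh command line instead of connecting.
run_on() {
  local host=$1
  shift
  local script
  # Join all remaining arguments into one remote command string: "cmd1; cmd2; ".
  script=$(printf '%s; ' "$@")
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "ssh root@${host} ${script}"
  else
    ssh "root@${host}" "${script}"
  fi
}

# Preview what would be sent to 202 for the chrony restart step.
DRY_RUN=1 run_on 192.168.44.202 \
  "systemctl enable chronyd.service" \
  "systemctl restart chronyd.service" \
  "chronyc sources"
```

Dropping DRY_RUN=1 would execute the same three commands remotely in one connection instead of three.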

1.3 Install a DNS service with bind

#201

~]# yum install -y bind

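Main configuration file

Mind the syntax (semicolons and spaces). The IP here is the internal network IP.

~]# vim /etc/named.conf # make sure the following settings are correct

listen-on port 53 { 192.168.44.201; }; # listen on port 53; the IPv6 listen-on line that follows in the default file must be deleted

directory "/var/named";

allow-query { any; }; # allow any host to query

forwarders { 192.168.44.2; }; # on a VM use the gateway address; on Alibaba Cloud hosts 223.5.5.5 can be used

recursion yes; # resolve queries recursively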

dnssec-enable no;

dnssec-validation no;

~]# named-checkconf # check the configuration; no output means it is correct

Configure the zone declarations on 201

Add two zone entries: od.com is the business domain and host.com is the host domain.

~]# vim /etc/named.rfc1912.zones

zone "host.com" IN {

type master;

file "host.com.zone";

allow-update { 192.168.44.201; };

};

zone "od.com" IN {

type master;

file "od.com.zone";

allow-update { 192.168.44.201; };

};

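Configure the host domain zone file on 201

The serial on the fourth line must be updated (format YYYYMMDD01). Every time the zone file is changed, the serial must be rolled forward by one.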

~]# vim /var/named/host.com.zone

$ORIGIN host.com.

$TTL 600 ; 10 minutes

@ IN SOA dns.host.com. dnsadmin.host.com. (

2021071301 ; serial

10800 ; refresh (3 hours)

900 ; retry (15 minutes)

604800 ; expire (1 week)

86400 ; minimum (1 day)

)

NS dns.host.com.

$TTL 60 ; 1 minute

dns A 192.168.44.201

k8s-192-168-44-201 A 192.168.44.201

k8s-192-168-44-202 A 192.168.44.202

k8s-192-168-44-203 A 192.168.44.203
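The serial rule above (YYYYMMDD followed by a two-digit counter, rolled forward on every change) can be sketched as a small function. next_serial is a hypothetical helper, not part of bind; it only computes the number that would then be placed into the zone file by hand.

```shell
# Hypothetical helper: compute the next zone serial in YYYYMMDDNN form.
# $1 = current serial from the zone file, $2 = today's date as YYYYMMDD.
next_serial() {
  local current=$1 today=$2
  if [ "${current%??}" = "${today}" ]; then
    # Already changed today: bump the two-digit counter.
    echo $((current + 1))
  else
    # First change on a new day: restart the counter at 01.
    echo "${today}01"
  fi
}

next_serial 2021071301 20210713            # second change on the same day
next_serial 2021071301 "$(date +%Y%m%d)"   # first change on the current day
```

After updating the serial, named has to reload the zone before the change takes effect.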

Configure the business domain zone file on 201

~]# vim /var/named/od.com.zone

$ORIGIN od.com.

$TTL 600 ; 10 minutes

@ IN SOA dns.od.com. dnsadmin.od.com. (

2021071301 ; serial

10800 ; refresh (3 hours)

900 ; retry (15 minutes)

604800 ; expire (1 week)

86400 ; minimum (1 day)

)

NS dns.od.com.

$TTL 60 ; 1 minute

dns A 192.168.44.201

Start the bind service on 201 and test it

~]# named-checkconf # check the configuration file

~]# systemctl start named ; systemctl enable named

~]# dig -t A k8s-192-168-44-203.host.com @192.168.44.201 +short #check that the name resolves

192.168.44.203

1.4 Change the host DNS

#All master and node hosts (201, 202, 203)

#Batch, run from 201

cd ~

for i in $(cat host_list.txt |awk '{print $1}');\

do \

ssh root@${i} sed -ri '"s#(DNS1=)(.*)#\1192.168.44.201#g"' /etc/sysconfig/network-scripts/ifcfg-eth0;\

ssh root@${i} systemctl restart NetworkManager.service;\

ssh root@${i} ping www.baidu.com -c 1;\

ssh root@${i} ping k8s-192-168-44-203.host.com -c 1;\

ssh root@${i} cat /etc/resolv.conf;\

ssh root@${i} echo "===================================================";\

ssh root@${i} echo "${i}";\

ssh root@${i} echo "===================================================";\

ssh root@${i} echo;\

done
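The sed expression in this loop rewrites whatever follows DNS1= with the address of the new DNS server. A minimal sketch of its effect on a throwaway file follows; the file content is made up for the demonstration, and the real target remains /etc/sysconfig/network-scripts/ifcfg-eth0.

```shell
# Demonstrate the DNS1 substitution on a temporary file instead of the real ifcfg file.
tmp=$(mktemp)
printf 'BOOTPROTO=none\nDNS1=192.168.44.2\n' >"${tmp}"

# \1 re-emits the captured "DNS1=" prefix; the text after it is the new address.
sed -r 's#(DNS1=)(.*)#\1192.168.44.201#g' "${tmp}"
```

Note that the expression only changes an existing DNS1= line; if the interface file has no such line, nothing is modified.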

==============================================================

# Generated by NetworkManager

search host.com #once this is added, host A records resolve without the domain suffix, for example

dig -t A k8s-192-168-44-201 @192.168.44.201 +short

nameserver 192.168.44.201

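1.5 Install the runtime environment and dependencies

1.5.1 Install the Docker runtime

Although Kubernetes has deprecated Docker as a container runtime, Docker still needs to be installed, because the later CI/CD features use the host's Docker engine to build images. Install it on all master and node hosts (201, 202, 203).

#All master and node hosts (201, 202, 203)

#Dependencies: https://git.k8s.io/kubernetes/build/dependencies.yaml

#Official site: https://docs.docker.com/engine/install/centos/

#Clean up the environment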

yum remove docker \

docker-client \

docker-client-latest \

docker-common \

docker-latest \

docker-latest-logrotate \

docker-logrotate \

docker-engine

#Official repository (recommended)

#Batch, run from 201

cd ~

for i in $(cat host_list.txt |awk '{print $1}');\

do \

ssh root@${i} curl https://download.docker.com/linux/centos/docker-ce.repo -o /etc/yum.repos.d/docker-ce.repo;\

ssh root@${i} ls -l /etc/yum.repos.d;\

ssh root@${i} echo "===================================================";\

ssh root@${i} echo "${i}";\

ssh root@${i} echo "===================================================";\

ssh root@${i} echo;\

done

=====================================================================

curl -o /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo

curl -o /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo

#Install a specific Docker version, here 20.10.14

#Batch, run from 201

cd ~

for i in $(cat host_list.txt |awk '{print $1}');\

do \

ssh root@${i} yum install -y yum-utils;\

ssh root@${i} yum list docker-ce --showduplicates | sort -r;\

ssh root@${i} yum list docker-ce-cli --showduplicates | sort -r;\

ssh root@${i} yum list containerd.io --showduplicates | sort -r;\

ssh root@${i} yum install docker-ce-20.10.14 docker-ce-cli-20.10.14 containerd.io -y;\

ssh root@${i} echo "===================================================";\

ssh root@${i} echo "${i}";\

ssh root@${i} echo "===================================================";\

ssh root@${i} echo;\

done

#Alibaba Cloud mirror (fallback)

#Batch, run from 201

cd ~

for i in $(cat host_list.txt |awk '{print $1}');\

do \

ssh root@${i} curl http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -o /etc/yum.repos.d/docker-ce.repo;\

ssh root@${i} ls -l /etc/yum.repos.d;\

ssh root@${i} echo "===================================================";\

ssh root@${i} echo "${i}";\

ssh root@${i} echo "===================================================";\

ssh root@${i} echo;\

done

==================================================================

curl -o /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo

curl -o /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo

#Create directories on all master and node hosts (201, 202, 203)

#Batch, run from 201

cd ~

for i in $(cat host_list.txt |awk '{print $1}');\

do \

ssh root@${i} mkdir /etc/docker -p;\

ssh root@${i} mkdir /data/docker -p;\

ssh root@${i} tree /data;\

ssh root@${i} echo "===================================================";\

ssh root@${i} echo "${i}";\

ssh root@${i} echo "===================================================";\

ssh root@${i} echo;\

done

#Edit the Docker configuration file; in bip 172.xx.xx.1, replace xx.xx with the last two octets of the host IP

#Edit on a single host (201)

vim /etc/docker/daemon.json

{

"data-root": "/data/docker",

"storage-driver": "overlay2",

"insecure-registries": ["registry.access.redhat.com","quay.io","192.168.44.201:8088"],

"registry-mirrors": ["https://uoggbpok.mirror.aliyuncs.com"],

"bip": "172.16.0.1/16",

"exec-opts": ["native.cgroupdriver=systemd"],

"live-restore": true,

"log-driver": "json-file",

"log-opts": {"max-size":"100m", "max-file":"3"}

}

#Distribute from 201

cd ~

for i in $(cat host_list.txt |awk '{print $1}');\

do \

rsync -avzP /etc/docker/daemon.json root@${i}:/etc/docker/;\

ssh root@${i} cat /etc/docker/daemon.json;\

ssh root@${i} echo "===================================================";\

ssh root@${i} echo "${i}";\

ssh root@${i} echo "===================================================";\

ssh root@${i} echo;\

done

#All master and node hosts (201, 202, 203)

#Start Docker and verify the version information

#Batch, run from 201

cd ~

for i in $(cat host_list.txt |awk '{print $1}');\

do \

ssh root@${i} systemctl daemon-reload;\

ssh root@${i} systemctl enable docker;\

ssh root@${i} systemctl start docker;\

ssh root@${i} docker version;\

ssh root@${i} echo "===================================================";\

ssh root@${i} echo "${i}";\

ssh root@${i} echo "===================================================";\

ssh root@${i} echo;\

done

1.5.2 Install the containerd runtime

Note: kubelet uses the Docker runtime by default. To use a different runtime, change the kubelet startup parameters:

KUBELET_RUNTIME_ARGS="--container-runtime=remote --runtime-request-timeout=15m --container-runtime-endpoint=unix:///run/containerd/containerd.sock"

#All master and node hosts (201, 202, 203)

#Official docs: https://kubernetes.io/zh/docs/setup/production-environment/container-runtimes/#containerd

#Configure kernel modules on all master and node hosts (201, 202, 203)

#Edit on a single host (201)

cat <> ~/.bashrc

#Distribute from 201

cd ~

for i in $(cat host_list.txt |awk '{print $1}');\

do \

rsync -avzP ~/.bashrc root@${i}:~/;\

ssh root@${i} cat ~/.bashrc;\

ssh root@${i} systemctl restart containerd.service;\

ssh root@${i} crictl --version;\

ssh root@${i} crictl images;\

ssh root@${i} echo "===================================================";\

ssh root@${i} echo "${i}";\

ssh root@${i} echo "===================================================";\

ssh root@${i} echo;\

done

=======================================================================

crictl version v1.22.0

#For basic usage see the documentation:

https://kubernetes.io/zh/docs/tasks/debug-application-cluster/crictl/

1.6 Install the Harbor registry

#Prerequisites (on 201)

yum install -y docker-compose

sudo curl -L "https://github.com/docker/compose/releases/download/1.29.2/docker-compose-$(uname -s)-$(uname -m)" -o /usr/local/bin/docker-compose

sudo chmod +x /usr/local/bin/docker-compose

mkdir /opt/tool

cd /opt/tool

#Official docs: https://goharbor.io/docs/2.0.0/install-config/configure-https/

wget https://github.com/goharbor/harbor/releases/download/v2.0.1/harbor-offline-installer-v2.0.1.tgz

mkdir -p /opt/harbor_data

tar xf harbor-offline-installer-v2.0.1.tgz

cd harbor

mkdir cert -p

cd cert

#Generate the CA private key

openssl genrsa -out ca.key 4096

#Generate the CA certificate (set CN to registry.myharbor.com)

openssl req -x509 -new -nodes -sha512 -days 36500 \

-subj "/C=CN/ST=Beijing/L=Beijing/O=example/OU=Personal/CN=registry.myharbor.com" \

-key ca.key \

-out ca.crt

#Generate the domain private key

openssl genrsa -out registry.myharbor.com.key 4096

#Generate the domain certificate signing request (set CN to registry.myharbor.com)

openssl req -sha512 -new \

-subj "/C=CN/ST=Beijing/L=Beijing/O=example/OU=Personal/CN=registry.myharbor.com" \

-key registry.myharbor.com.key \

-out registry.myharbor.com.csr

#Generate the v3 extension file

cat > v3.ext <<-EOF

authorityKeyIdentifier=keyid,issuer

basicConstraints=CA:FALSE

keyUsage = digitalSignature, nonRepudiation, keyEncipherment, dataEncipherment

extendedKeyUsage = serverAuth

subjectAltName = @alt_names

[alt_names]

DNS.1=registry.myharbor.com

DNS.2=registry.myharbor

DNS.3=hostname

IP.1=192.168.44.201

EOF

#Generate the domain certificate

openssl x509 -req -sha512 -days 36500 \

-extfile v3.ext \

-CA ca.crt -CAkey ca.key -CAcreateserial \

-in registry.myharbor.com.csr \

-out registry.myharbor.com.crt
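Before handing the certificate to Docker and Harbor, it can be worth checking that it chains to the CA and carries the expected subjectAltName entries. The sketch below repeats the flow above in a temporary directory with shortened parameters (2048-bit keys, 1-day validity, a demo CA name, all of which are assumptions for the demonstration) and then runs the two checks; on the real files only the last two openssl commands are needed.

```shell
# Work in a temp directory so the real Harbor certificates are not touched.
dir=$(mktemp -d)

# Throwaway CA (demo values, not the guide's 4096-bit / 36500-day settings).
openssl genrsa -out "${dir}/ca.key" 2048 2>/dev/null
openssl req -x509 -new -sha512 -days 1 -subj "/CN=demo-ca" \
  -key "${dir}/ca.key" -out "${dir}/ca.crt"

# Server key, CSR and a reduced v3 extension file with the SAN entries.
openssl genrsa -out "${dir}/server.key" 2048 2>/dev/null
openssl req -new -sha512 -subj "/CN=registry.myharbor.com" \
  -key "${dir}/server.key" -out "${dir}/server.csr"
printf 'subjectAltName = DNS:registry.myharbor.com, IP:192.168.44.201\n' >"${dir}/v3.ext"
openssl x509 -req -sha512 -days 1 -extfile "${dir}/v3.ext" \
  -CA "${dir}/ca.crt" -CAkey "${dir}/ca.key" -CAcreateserial \
  -in "${dir}/server.csr" -out "${dir}/server.crt" 2>/dev/null

# Check 1: the certificate verifies against the CA.
verify_out=$(openssl verify -CAfile "${dir}/ca.crt" "${dir}/server.crt")
# Check 2: the SAN extension lists the registry name and IP.
san_out=$(openssl x509 -in "${dir}/server.crt" -noout -text | grep -A1 'Subject Alternative Name')
echo "${verify_out}"
echo "${san_out}"
```

A missing or wrong SAN entry is a common cause of x509 errors when Docker later pulls from the registry by domain name or IP.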

#Check the result

tree ./

./

├── ca.crt

├── ca.key

├── ca.srl

├── registry.myharbor.com.crt

├── registry.myharbor.com.csr

├── registry.myharbor.com.key

└── v3.ext

0 directories, 7 files

#Convert xxx.crt to xxx.cert for use by Docker

openssl x509 -inform PEM \

-in registry.myharbor.com.crt \

-out registry.myharbor.com.cert

#Check

tree ./

./

├── ca.crt

├── ca.key

├── ca.srl

├── registry.myharbor.com.cert

├── registry.myharbor.com.crt

├── registry.myharbor.com.csr

├── registry.myharbor.com.key

└── v3.ext

0 directories, 8 files

#Copy the certificates into the Docker directory on the Harbor host

mkdir /etc/docker/certs.d/registry.myharbor.com/ -p

\cp registry.myharbor.com.cert /etc/docker/certs.d/registry.myharbor.com/

\cp registry.myharbor.com.key /etc/docker/certs.d/registry.myharbor.com/

\cp ca.crt /etc/docker/certs.d/registry.myharbor.com/

If you mapped the default nginx port 443 to a different port, create the directory /etc/docker/certs.d/yourdomain.com:port or /etc/docker/certs.d/harbor_IP:port instead.

#Check

tree /etc/docker/certs.d/

/etc/docker/certs.d/

└── registry.myharbor.com

    ├── ca.crt

    ├── registry.myharbor.com.cert

    └── registry.myharbor.com.key

1 directory, 3 files

#Restart the Docker engine

systemctl restart docker

#Deploy or reconfigure Harbor

cd /opt/tool/harbor/

cp harbor.yml.tmpl harbor.yml

Edit the configuration file. The main parameters to change are:

vim harbor.yml

hostname: registry.myharbor.com # change the hostname

HTTP settings:

http:

  # port for http, default is 80. If https enabled, this port will redirect to https port

  port: 8088 # change the port

HTTPS settings:

https:

  # https port for harbor, default is 443

  port: 443

  # The path of cert and key files for nginx

  certificate: /opt/tool/harbor/cert/registry.myharbor.com.crt

  private_key: /opt/tool/harbor/cert/registry.myharbor.com.key

Other settings:

harbor_admin_password: 888888 # change the admin password

data_volume: /opt/harbor_data # change the persistent data directory

Step 5: run ./install.sh

#If Harbor was already installed with HTTP and you only need to enable HTTPS, do the following

./prepare

docker-compose down -v

docker-compose up -d

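Step 6: open https://192.168.44.201 or https://registry.myharbor.com/ in a browser

Username admin, password 888888

Note: every machine that uses this registry domain needs a hosts entry for it; here that means all k8s nodes.

#All master and node hosts (201, 202, 203)

#Edit on a single host (201)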

vim /etc/hosts

192.168.44.201 registry.myharbor.com

#Distribute from 201

cd ~

for i in $(cat host_list.txt |awk '{print $1}');\

do \

rsync -avzP /etc/hosts root@${i}:/etc/;\

ssh root@${i} cat /etc/hosts;\

ssh root@${i} echo "===================================================";\

ssh root@${i} echo "${i}";\

ssh root@${i} echo "===================================================";\

ssh root@${i} echo;\

done

1.7 Configure high availability

#201 && 202 && 203

Configure Nginx as a reverse proxy for 192.168.44.201:6443, 192.168.44.202:6443 and 192.168.44.203:6443

cat >/etc/yum.repos.d/nginx.repo </server/scripts/check_web.sh </etc/keepalived/keepalived.conf </server/scripts/check_web.sh </server/scripts/check_ip.sh </dev/null

if [ \$? -eq 0 ]

then

echo "keepalived on host \$(hostnamectl |awk 'NR==1''{print \$3}') is abnormal, please check"|mail -s keepalived-alert [email protected]

fi

EOF

chmod +x /server/scripts/check_ip.sh

Configure outgoing mail

vim /etc/mail.rc

set [email protected] smtp=smtp.163.com

set [email protected] smtp-auth-password=xxxx smtp-auth=login

systemctl restart postfix.service

echo "mail delivery test"|mail -s "mail test" [email protected]

cat >/etc/keepalived/keepalived.conf <>/etc/sysctl.conf

sysctl -p

vim /etc/nginx/nginx.conf

stream {

log_format proxy '$time_local|$remote_addr|$upstream_addr|$protocol|$status|'

'$session_time|$upstream_connect_time|$bytes_sent|$bytes_received|'

'$upstream_bytes_sent|$upstream_bytes_received' ;

upstream kube-apiserver {

server 192.168.44.201:6443 max_fails=3 fail_timeout=30s;

server 192.168.44.202:6443 max_fails=3 fail_timeout=30s;

server 192.168.44.203:6443 max_fails=3 fail_timeout=30s;

}

server {

listen 192.168.44.200:7443;

proxy_connect_timeout 1s;

proxy_timeout 900s;

proxy_pass kube-apiserver;

access_log /var/log/nginx/proxy.log proxy;

}

}

systemctl restart nginx.service

netstat -tunpl|grep nginx

Chapter 2 Install the k8s cluster masters

2.1 Download the binary installation files

- Download k8s

https://github.com/kubernetes/kubernetes/tree/master/CHANGELOG

https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG/CHANGELOG-1.23.md

#(All master and node hosts) 201, 202, 203

#Batch, run from 201

cd ~

for i in $(cat host_list.txt |awk '{print $1}');\

do \

ssh root@${i} mkdir /opt/tool -p;\

ssh root@${i} echo "===================================================";\

ssh root@${i} echo "${i}";\

ssh root@${i} echo "===================================================";\

ssh root@${i} echo;\

done

#(All master and node hosts) 201, 202, 203

#Download on a single host (201)

cd /opt/tool

wget https://storage.googleapis.com/kubernetes-release/release/v1.23.5/kubernetes-server-linux-amd64.tar.gz -O kubernetes-v1.23.5-server-linux-amd64.tar.gz

tar xf kubernetes-v1.23.5-server-linux-amd64.tar.gz

mv kubernetes /opt/kubernetes-1.23.5

#Distribute from 201

cd ~

for i in $(cat host_list.txt |awk '{print $1}');\

do \

rsync -avzP /opt/kubernetes-1.23.5 root@${i}:/opt/;\

ssh root@${i} ln -s /opt/kubernetes-1.23.5 /opt/kubernetes;\

ssh root@${i} tree -L 1 /opt/;\

ssh root@${i} echo "===================================================";\

ssh root@${i} echo "${i}";\

ssh root@${i} echo "===================================================";\

ssh root@${i} echo;\

done

#(All master and node hosts) 201, 202, 203

tree -L 1 /opt/

/opt/

├── containerd

├── kubernetes -> /opt/kubernetes-1.23.5

├── kubernetes-1.23.5

└── tool

4 directories, 0 files

#Command completion (all master and node hosts) 201, 202, 203

#Run on a single host (201)

\cp /opt/kubernetes/server/bin/kubectl /usr/local/bin/

source <(kubectl completion bash) && echo 'source <(kubectl completion bash)' >> ~/.bashrc

#Distribute from 201

cd ~

for i in $(cat host_list.txt |awk '{print $1}');\

do \

rsync -avzP /usr/local/bin/kubectl root@${i}:/usr/local/bin/;\

rsync -avzP ~/.bashrc root@${i}:~/;\

ssh root@${i} tree -L 1 /usr/local/bin/;\

ssh root@${i} cat ~/.bashrc;\

ssh root@${i} kubectl version;\

ssh root@${i} echo "===================================================";\

ssh root@${i} echo "${i}";\

ssh root@${i} echo "===================================================";\

ssh root@${i} echo;\

done

===========================================================================

kubectl version

Client Version: version.Info{Major:"1", Minor:"23", GitVersion:"v1.23.5", GitCommit:"c285e781331a3785a7f436042c65c5641ce8a9e9", GitTreeState:"clean", BuildDate:"2022-03-16T15:58:47Z", GoVersion:"go1.17.8", Compiler:"gc", Platform:"linux/amd64"}

The connection to the server localhost:8080 was refused - did you specify the right host or port?

-

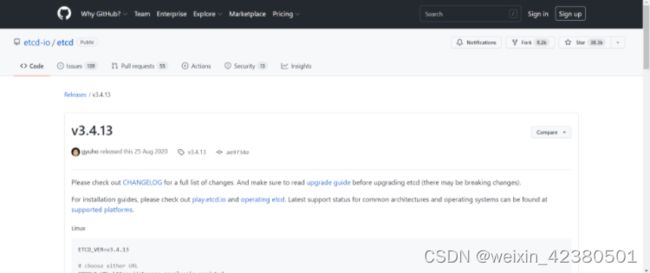

下载etcd

https://github.com/etcd-io/etcd/releases/tag/v3.5.1

依赖关系:https://git.k8s.io/kubernetes/build/dependencies.yaml

#所有etcd节点201.202.203

#批量201

cd ~

for i in 192.168.44.{201..203};\

do \

ssh root@${i} mkdir /opt/tool -p;\

ssh root@${i} echo "===================================================";\

ssh root@${i} echo "${i}";\

ssh root@${i} echo "===================================================";\

ssh root@${i} echo;\

done

#Download the package for all etcd hosts (201, 202, 203)

#Download on a single host (201)

wget https://github.com/etcd-io/etcd/releases/download/v3.5.1/etcd-v3.5.1-linux-amd64.tar.gz

tar xf etcd-v3.5.1-linux-amd64.tar.gz

mv etcd-v3.5.1-linux-amd64 /opt/etcd-v3.5.1

#Distribute from 201

cd ~

for i in 192.168.44.{201..203};\

do \

rsync -avzP /opt/etcd-v3.5.1 root@${i}:/opt/;\

ssh root@${i} ln -s /opt/etcd-v3.5.1 /opt/etcd;\

ssh root@${i} tree -L 1 /opt/;\

ssh root@${i} echo "===================================================";\

ssh root@${i} echo "${i}";\

ssh root@${i} echo "===================================================";\

ssh root@${i} echo;\

done

#All etcd hosts (201, 202, 203)

tree -L 1 /opt/

/opt/

├── containerd

├── etcd -> /opt/etcd-v3.5.1

├── etcd-v3.5.1

├── kubernetes -> /opt/kubernetes-1.23.5

├── kubernetes-1.23.5

└── tool

6 directories, 0 files

#Copy the command into a directory on the PATH (all etcd hosts: 201, 202, 203)

#Run on a single host (201)

\cp /opt/etcd/etcdctl /usr/local/bin/

#Distribute from 201

cd ~

for i in 192.168.44.{201..203};\

do \

rsync -avzP /usr/local/bin/etcdctl root@${i}:/usr/local/bin/;\

ssh root@${i} tree -L 1 /usr/local/bin/;\

ssh root@${i} etcdctl version;\

ssh root@${i} echo "===================================================";\

ssh root@${i} echo "${i}";\

ssh root@${i} echo "===================================================";\

ssh root@${i} echo;\

done

====================================================================

etcdctl version

etcdctl version: 3.5.1

API version: 3.5

2.2 Generate certificates

#201

cd

rz

curl -L https://github.com/cloudflare/cfssl/releases/download/v1.5.0/cfssl_1.5.0_linux_amd64 -o cfssl

curl -L https://github.com/cloudflare/cfssl/releases/download/v1.5.0/cfssljson_1.5.0_linux_amd64 -o cfssl-json

curl -L https://github.com/cloudflare/cfssl/releases/download/v1.5.0/cfssl-certinfo_1.5.0_linux_amd64 -o cfssl-certinfo

chmod +x cfssl*

\mv cfssl* /usr/local/bin/

ll /usr/local/bin/

total 140556

-rwxr-xr-x 1 root root 12049979 Apr 29 20:53 cfssl

-rwxr-xr-x 1 root root 12021008 Apr 29 21:16 cfssl-certinfo

-rwxr-xr-x 1 root root 9663504 Apr 29 21:08 cfssl-json

-rwxr-xr-x 1 1000 users 45611187 Aug 5 2021 crictl

-rwxr-xr-x 1 root root 17981440 Apr 29 19:11 etcdctl

-rwxr-xr-x 1 root root 46596096 Apr 29 18:24 kubectl

2.2.1 Generate the etcd certificates

#Create the certificate directory (all etcd hosts) 201, 202, 203

#Batch, run from 201

cd ~

for i in 192.168.44.{201..203};\

do \

ssh root@${i} mkdir -p /etc/kubernetes/pki/etcd;\

ssh root@${i} echo "===================================================";\

ssh root@${i} echo "${i}";\

ssh root@${i} echo "===================================================";\

ssh root@${i} echo;\

done

#Enter the installation repository directory (on 201)

cd /root/k8s-ha-install/

git checkout manual-installation-v1.23.x

git branch

cd pki/

#Generate the etcd CA certificate (on 201)

cfssl gencert -initca etcd-ca-csr.json | cfssl-json -bare /etc/kubernetes/pki/etcd/etcd-ca

#Generate the etcd certificate (adjust the IPs in -hostname and reserve a few addresses for etcd hosts that may be added later)

cfssl gencert \

-ca=/etc/kubernetes/pki/etcd/etcd-ca.pem \

-ca-key=/etc/kubernetes/pki/etcd/etcd-ca-key.pem \

-config=ca-config.json \

-hostname=127.0.0.1,k8s-192-168-44-201.host.com,k8s-192-168-44-202.host.com,k8s-192-168-44-203.host.com,192.168.44.201,192.168.44.202,192.168.44.203,192.168.44.204,192.168.44.205,192.168.44.206,192.168.44.207 \

-profile=kubernetes \

etcd-csr.json | cfssl-json -bare /etc/kubernetes/pki/etcd/etcd

#Check the result (on 201)

ll /etc/kubernetes/pki/etcd

total 24

-rw-r--r-- 1 root root 1005 Dec 24 01:15 etcd-ca.csr

-rw------- 1 root root 1675 Dec 24 01:15 etcd-ca-key.pem

-rw-r--r-- 1 root root 1367 Dec 24 01:15 etcd-ca.pem

-rw-r--r-- 1 root root 1005 Dec 24 01:40 etcd.csr

-rw------- 1 root root 1675 Dec 24 01:40 etcd-key.pem

-rw-r--r-- 1 root root 1562 Dec 24 01:40 etcd.pem

#Distribute the certificates to the other etcd hosts (from 201)

cd ~

for i in 192.168.44.{201..203};\

do \

rsync -avzP /etc/kubernetes/pki/etcd/etcd* root@${i}:/etc/kubernetes/pki/etcd;\

ssh root@${i} tree /etc/kubernetes/pki/etcd;\

ssh root@${i} echo "===================================================";\

ssh root@${i} echo "${i}";\

ssh root@${i} echo "===================================================";\

ssh root@${i} echo;\

done

#Verify the distribution result

#202

ll /etc/kubernetes/pki/etcd

total 24

-rw-r--r-- 1 root root 1005 Dec 24 01:15 etcd-ca.csr

-rw------- 1 root root 1675 Dec 24 01:15 etcd-ca-key.pem

-rw-r--r-- 1 root root 1367 Dec 24 01:15 etcd-ca.pem

-rw-r--r-- 1 root root 1005 Dec 24 01:40 etcd.csr

-rw------- 1 root root 1675 Dec 24 01:40 etcd-key.pem

-rw-r--r-- 1 root root 1562 Dec 24 01:40 etcd.pem

#203

ll /etc/kubernetes/pki/etcd

total 24

-rw-r--r-- 1 root root 1005 Dec 24 01:15 etcd-ca.csr

-rw------- 1 root root 1675 Dec 24 01:15 etcd-ca-key.pem

-rw-r--r-- 1 root root 1367 Dec 24 01:15 etcd-ca.pem

-rw-r--r-- 1 root root 1005 Dec 24 01:40 etcd.csr

-rw------- 1 root root 1675 Dec 24 01:40 etcd-key.pem

-rw-r--r-- 1 root root 1562 Dec 24 01:40 etcd.pem

2.2.2 Generate the k8s certificates

#Create the certificate directory (all master hosts) 201, 202, 203

#Batch, run from 201

cd ~

for i in 192.168.44.{201..203};\

do \

ssh root@${i} mkdir -p /etc/kubernetes/pki/etcd;\

ssh root@${i} echo "===================================================";\

ssh root@${i} echo "${i}";\

ssh root@${i} echo "===================================================";\

ssh root@${i} echo;\

done

#Enter the installation repository directory (on 201)

cd /root/k8s-ha-install/

git checkout manual-installation-v1.23.x

git branch

cd pki/

#Generate the CA certificate (on 201)

cfssl gencert -initca ca-csr.json | cfssl-json -bare /etc/kubernetes/pki/ca

#Generate the apiserver certificate (on 201; include the VIP address and reserve a few addresses for apiservers that may be added later)

cfssl gencert \

-ca=/etc/kubernetes/pki/ca.pem \

-ca-key=/etc/kubernetes/pki/ca-key.pem \

-config=ca-config.json \

-hostname=10.0.0.1,127.0.0.1,kubernetes,kubernetes.default,kubernetes.default.svc,kubernetes.default.svc.cluster,kubernetes.default.svc.cluster.local,192.168.44.200,192.168.44.201,192.168.44.202,192.168.44.203,192.168.44.204,192.168.44.205,192.168.44.206,192.168.44.207 \

-profile=kubernetes \

apiserver-csr.json | cfssl-json -bare /etc/kubernetes/pki/apiserver

#Generate the front-proxy (aggregation layer) CA certificate (on 201)

cfssl gencert -initca front-proxy-ca-csr.json | cfssl-json -bare /etc/kubernetes/pki/front-proxy-ca

#Generate the front-proxy client certificate (on 201)

cfssl gencert \

-ca=/etc/kubernetes/pki/front-proxy-ca.pem \

-ca-key=/etc/kubernetes/pki/front-proxy-ca-key.pem \

-config=ca-config.json \

-profile=kubernetes \

front-proxy-client-csr.json | cfssl-json -bare /etc/kubernetes/pki/front-proxy-client

#Generate the controller-manager certificate (on 201)

cfssl gencert \

-ca=/etc/kubernetes/pki/ca.pem \

-ca-key=/etc/kubernetes/pki/ca-key.pem \

-config=ca-config.json \

-profile=kubernetes \

manager-csr.json | cfssl-json -bare /etc/kubernetes/pki/controller-manager

#Build the controller-manager kubeconfig (on 201)

Set the cluster (mind the VIP address)

kubectl config set-cluster kubernetes \

--certificate-authority=/etc/kubernetes/pki/ca.pem \

--embed-certs=true \

--server=https://192.168.44.200:7443 \

--kubeconfig=/etc/kubernetes/controller-manager.kubeconfig

Set the user

kubectl config set-credentials system:kube-controller-manager \

--client-certificate=/etc/kubernetes/pki/controller-manager.pem \

--client-key=/etc/kubernetes/pki/controller-manager-key.pem \

--embed-certs=true \

--kubeconfig=/etc/kubernetes/controller-manager.kubeconfig

Set the context

kubectl config set-context system:kube-controller-manager@kubernetes \

--cluster=kubernetes \

--user=system:kube-controller-manager \

--kubeconfig=/etc/kubernetes/controller-manager.kubeconfig

Set the default context for this user

kubectl config use-context system:kube-controller-manager@kubernetes \

--kubeconfig=/etc/kubernetes/controller-manager.kubeconfig

#Generate the scheduler certificate (on 201)

cfssl gencert \

-ca=/etc/kubernetes/pki/ca.pem \

-ca-key=/etc/kubernetes/pki/ca-key.pem \

-config=ca-config.json \

-profile=kubernetes \

scheduler-csr.json | cfssl-json -bare /etc/kubernetes/pki/scheduler

#Build the scheduler kubeconfig (on 201)

Set the cluster (mind the VIP address)

kubectl config set-cluster kubernetes \

--certificate-authority=/etc/kubernetes/pki/ca.pem \

--embed-certs=true \

--server=https://192.168.44.200:7443 \

--kubeconfig=/etc/kubernetes/scheduler.kubeconfig

Set the user

kubectl config set-credentials system:kube-scheduler \

--client-certificate=/etc/kubernetes/pki/scheduler.pem \

--client-key=/etc/kubernetes/pki/scheduler-key.pem \

--embed-certs=true \

--kubeconfig=/etc/kubernetes/scheduler.kubeconfig

Set the context

kubectl config set-context system:kube-scheduler@kubernetes \

--cluster=kubernetes \

--user=system:kube-scheduler \

--kubeconfig=/etc/kubernetes/scheduler.kubeconfig

Set the default context for this user

kubectl config use-context system:kube-scheduler@kubernetes \

--kubeconfig=/etc/kubernetes/scheduler.kubeconfig

#Generate the admin certificate (on 201)

cfssl gencert \

-ca=/etc/kubernetes/pki/ca.pem \

-ca-key=/etc/kubernetes/pki/ca-key.pem \

-config=ca-config.json \

-profile=kubernetes \

admin-csr.json | cfssl-json -bare /etc/kubernetes/pki/admin

#Build the admin kubeconfig (on 201)

Set the cluster (mind the VIP address)

kubectl config set-cluster kubernetes \

--certificate-authority=/etc/kubernetes/pki/ca.pem \

--embed-certs=true \

--server=https://192.168.44.200:7443 \

--kubeconfig=/etc/kubernetes/admin.kubeconfig

Set the user

kubectl config set-credentials kubernetes-admin \

--client-certificate=/etc/kubernetes/pki/admin.pem \

--client-key=/etc/kubernetes/pki/admin-key.pem \

--embed-certs=true \

--kubeconfig=/etc/kubernetes/admin.kubeconfig

Set the context

kubectl config set-context kubernetes-admin@kubernetes \

--cluster=kubernetes \

--user=kubernetes-admin \

--kubeconfig=/etc/kubernetes/admin.kubeconfig

Set the default context

kubectl config use-context kubernetes-admin@kubernetes \

--kubeconfig=/etc/kubernetes/admin.kubeconfig
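The controller-manager, scheduler and admin kubeconfig files are all built with the same four kubectl config steps and differ only in user name, certificate name and output path. A minimal sketch of a wrapper follows; make_kubeconfig, KUBECTL and APISERVER are hypothetical names introduced for this illustration, while the kubectl flags are the ones used in the steps above.

```shell
# Hypothetical wrapper around the four "kubectl config" steps.
# $1 = user name, $2 = certificate base name under /etc/kubernetes/pki, $3 = output kubeconfig.
# KUBECTL can be overridden (for example KUBECTL=echo) to preview the commands.
make_kubeconfig() {
  local user=$1 cert_base=$2 out=$3
  local server=${APISERVER:-https://192.168.44.200:7443}
  local pki=/etc/kubernetes/pki
  local kubectl=${KUBECTL:-kubectl}

  # Cluster entry: CA certificate plus the VIP endpoint.
  "${kubectl}" config set-cluster kubernetes \
    --certificate-authority="${pki}/ca.pem" --embed-certs=true \
    --server="${server}" --kubeconfig="${out}"
  # User entry: client certificate and key.
  "${kubectl}" config set-credentials "${user}" \
    --client-certificate="${pki}/${cert_base}.pem" \
    --client-key="${pki}/${cert_base}-key.pem" \
    --embed-certs=true --kubeconfig="${out}"
  # Context tying the user to the cluster, then make it the default.
  "${kubectl}" config set-context "${user}@kubernetes" \
    --cluster=kubernetes --user="${user}" --kubeconfig="${out}"
  "${kubectl}" config use-context "${user}@kubernetes" --kubeconfig="${out}"
}

# Preview the scheduler kubeconfig commands without running kubectl.
KUBECTL=echo make_kubeconfig system:kube-scheduler scheduler /etc/kubernetes/scheduler.kubeconfig
```

With KUBECTL left unset, the same call would write the file for real, so the three blocks above reduce to three calls with different arguments.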

#Create the ServiceAccount key pair (on 201)

openssl genrsa -out /etc/kubernetes/pki/sa.key 2048

openssl rsa -in /etc/kubernetes/pki/sa.key -pubout -out /etc/kubernetes/pki/sa.pub
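Because sa.key and sa.pub are later copied to every master, it can help to confirm that the two files still belong together. A minimal sketch, run here against a throwaway pair in a temporary directory (an assumption for the demonstration; on a real host the paths would be the ones under /etc/kubernetes/pki):

```shell
# Generate a throwaway key pair the same way as above, but in a temp directory.
dir=$(mktemp -d)
openssl genrsa -out "${dir}/sa.key" 2048 2>/dev/null
openssl rsa -in "${dir}/sa.key" -pubout -out "${dir}/sa.pub" 2>/dev/null

# Re-derive the public key from the private key and compare it with the stored sa.pub.
derived=$(openssl rsa -in "${dir}/sa.key" -pubout 2>/dev/null)
if [ "${derived}" = "$(cat "${dir}/sa.pub")" ]; then
  echo "sa.pub matches sa.key"
else
  echo "sa.pub does NOT match sa.key"
fi
```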

#Check the result (on 201)

tree /etc/kubernetes/

/etc/kubernetes/

├── admin.kubeconfig

├── controller-manager.kubeconfig

├── pki

│ ├── admin.csr

│ ├── admin-key.pem

│ ├── admin.pem

│ ├── apiserver.csr

│ ├── apiserver-key.pem

│ ├── apiserver.pem

│ ├── ca.csr

│ ├── ca-key.pem

│ ├── ca.pem

│ ├── controller-manager.csr

│ ├── controller-manager-key.pem

│ ├── controller-manager.pem

│ ├── etcd

│ │ ├── etcd-ca.csr

│ │ ├── etcd-ca-key.pem

│ │ ├── etcd-ca.pem

│ │ ├── etcd.csr

│ │ ├── etcd-key.pem

│ │ └── etcd.pem

│ ├── front-proxy-ca.csr

│ ├── front-proxy-ca-key.pem

│ ├── front-proxy-ca.pem

│ ├── front-proxy-client.csr

│ ├── front-proxy-client-key.pem

│ ├── front-proxy-client.pem

│ ├── sa.key

│ ├── sa.pub

│ ├── scheduler.csr

│ ├── scheduler-key.pem

│ └── scheduler.pem

└── scheduler.kubeconfig

2 directories, 32 files

#Distribute the certificates to the other master hosts (from 201)

cd ~

for i in 192.168.44.{201..203};\

do \

rsync -avzP /etc/kubernetes/ root@${i}:/etc/kubernetes;\

ssh root@${i} tree /etc/kubernetes/;\

ssh root@${i} echo "===================================================";\

ssh root@${i} echo "${i}";\

ssh root@${i} echo "===================================================";\

ssh root@${i} echo;\

done

#Check the result

#202

tree /etc/kubernetes/

/etc/kubernetes/

├── admin.kubeconfig

├── controller-manager.kubeconfig

├── pki

│ ├── admin.csr

│ ├── admin-key.pem

│ ├── admin.pem

│ ├── apiserver.csr

│ ├── apiserver-key.pem

│ ├── apiserver.pem

│ ├── ca.csr

│ ├── ca-key.pem

│ ├── ca.pem

│ ├── controller-manager.csr

│ ├── controller-manager-key.pem

│ ├── controller-manager.pem

│ ├── etcd

│ │ ├── etcd-ca.csr

│ │ ├── etcd-ca-key.pem

│ │ ├── etcd-ca.pem

│ │ ├── etcd.csr

│ │ ├── etcd-key.pem

│ │ └── etcd.pem

│ ├── front-proxy-ca.csr

│ ├── front-proxy-ca-key.pem

│ ├── front-proxy-ca.pem

│ ├── front-proxy-client.csr

│ ├── front-proxy-client-key.pem

│ ├── front-proxy-client.pem

│ ├── sa.key

│ ├── sa.pub

│ ├── scheduler.csr

│ ├── scheduler-key.pem

│ └── scheduler.pem

└── scheduler.kubeconfig

2 directories, 32 files

#203

tree /etc/kubernetes/

/etc/kubernetes/

├── admin.kubeconfig

├── controller-manager.kubeconfig

├── pki

│ ├── admin.csr

│ ├── admin-key.pem

│ ├── admin.pem

│ ├── apiserver.csr

│ ├── apiserver-key.pem

│ ├── apiserver.pem

│ ├── ca.csr

│ ├── ca-key.pem

│ ├── ca.pem

│ ├── controller-manager.csr

│ ├── controller-manager-key.pem

│ ├── controller-manager.pem

│ ├── etcd

│ │ ├── etcd-ca.csr

│ │ ├── etcd-ca-key.pem

│ │ ├── etcd-ca.pem

│ │ ├── etcd.csr

│ │ ├── etcd-key.pem

│ │ └── etcd.pem

│ ├── front-proxy-ca.csr

│ ├── front-proxy-ca-key.pem

│ ├── front-proxy-ca.pem

│ ├── front-proxy-client.csr

│ ├── front-proxy-client-key.pem

│ ├── front-proxy-client.pem

│ ├── sa.key

│ ├── sa.pub

│ ├── scheduler.csr

│ ├── scheduler-key.pem

│ └── scheduler.pem

└── scheduler.kubeconfig

2 directories, 32 files

2.3 Install etcd

Prepare the configuration files

#Remember to change the IP addresses

#201

mkdir /etc/etcd -p

vim /etc/etcd/etcd.conf

#[Member]

ETCD_NAME="etcd1"

ETCD_DATA_DIR="/var/lib/etcd"

ETCD_LISTEN_PEER_URLS="https://192.168.44.201:2380"

ETCD_LISTEN_CLIENT_URLS="https://192.168.44.201:2379,http://127.0.0.1:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.44.201:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://192.168.44.201:2379"

ETCD_INITIAL_CLUSTER="etcd1=https://192.168.44.201:2380,etcd2=https://192.168.44.202:2380,etcd3=https://192.168.44.203:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

#202

mkdir /etc/etcd -p

vim /etc/etcd/etcd.conf

#[Member]

ETCD_NAME="etcd2"

ETCD_DATA_DIR="/var/lib/etcd"

ETCD_LISTEN_PEER_URLS="https://192.168.44.202:2380"

ETCD_LISTEN_CLIENT_URLS="https://192.168.44.202:2379,http://127.0.0.1:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.44.202:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://192.168.44.202:2379"

ETCD_INITIAL_CLUSTER="etcd1=https://192.168.44.201:2380,etcd2=https://192.168.44.202:2380,etcd3=https://192.168.44.203:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

#203

mkdir /etc/etcd -p

vim /etc/etcd/etcd.conf

#[Member]

ETCD_NAME="etcd3"

ETCD_DATA_DIR="/var/lib/etcd"

ETCD_LISTEN_PEER_URLS="https://192.168.44.203:2380"

ETCD_LISTEN_CLIENT_URLS="https://192.168.44.203:2379,http://127.0.0.1:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.44.203:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://192.168.44.203:2379"

ETCD_INITIAL_CLUSTER="etcd1=https://192.168.44.201:2380,etcd2=https://192.168.44.202:2380,etcd3=https://192.168.44.203:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"
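The three files above differ only in the member name and the node IP, so they can be rendered from one template. The following is a minimal sketch, not part of the original procedure: `render_etcd_conf` and `ETCD_CLUSTER` are hypothetical names introduced here, and the values are copied from the files above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: render the content of /etc/etcd/etcd.conf for one member.
# ETCD_CLUSTER mirrors the three members used in this guide.
ETCD_CLUSTER="etcd1=https://192.168.44.201:2380,etcd2=https://192.168.44.202:2380,etcd3=https://192.168.44.203:2380"

render_etcd_conf() {
  local name="$1" ip="$2"
  cat <<EOF
#[Member]
ETCD_NAME="${name}"
ETCD_DATA_DIR="/var/lib/etcd"
ETCD_LISTEN_PEER_URLS="https://${ip}:2380"
ETCD_LISTEN_CLIENT_URLS="https://${ip}:2379,http://127.0.0.1:2379"
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://${ip}:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://${ip}:2379"
ETCD_INITIAL_CLUSTER="${ETCD_CLUSTER}"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
EOF
}

# Example: print the file for the second member (redirect to a file to use it).
render_etcd_conf etcd2 192.168.44.202
```

Redirecting the output to /etc/etcd/etcd.conf on each node would give the same result as editing the file by hand with vim.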

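Prepare the systemd unit file

#All etcd nodes: 201, 202, 203

#Edit on a single host: 201

cat >/usr/lib/systemd/system/etcd.service <

2.4 Install the apiserver

#Create the required directories (all master nodes): 201, 202, 203

#Batch run from 201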

cd ~

for i in 192.168.44.{201..203};\

do \

ssh root@${i} mkdir -p /opt/cni/bin /etc/cni/net.d /etc/kubernetes/manifests /etc/systemd/system/kubelet.service.d /var/lib/kubelet /var/log/kubernetes;\

ssh root@${i} echo "===================================================";\

ssh root@${i} echo "${i}";\

ssh root@${i} echo "===================================================";\

ssh root@${i} echo;\

done
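The batch loops in this guide all follow the same shape: run one command per host, then print a banner with the host address. A possible way to factor that out is sketched below. `run_on_hosts` and `REMOTE_RUNNER` are hypothetical names that do not appear in the original; the banner is printed locally instead of through extra SSH sessions, which is a design change relative to the loops above.

```shell
#!/usr/bin/env bash
# REMOTE_RUNNER is a hypothetical override (not in the original loops);
# set it to "echo" to preview the commands without opening SSH sessions.
REMOTE_RUNNER="${REMOTE_RUNNER:-ssh}"

run_on_hosts() {
  local cmd="$1"; shift
  local host
  for host in "$@"; do
    echo "==================================================="
    echo "${host}"
    echo "==================================================="
    "${REMOTE_RUNNER}" "root@${host}" "${cmd}"
    echo
  done
}

# Dry run: print what would be executed on each master.
REMOTE_RUNNER=echo run_on_hosts "mkdir -p /var/log/kubernetes" 192.168.44.{201..203}
```

Dropping the `REMOTE_RUNNER=echo` prefix makes the same call go through ssh, one connection per host.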

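#Create the token file (all master nodes): 201, 202, 203

#Edit on a single host: 201

cat >/etc/kubernetes/token.csv <

cat >/usr/lib/systemd/system/kube-apiserver.service <

cat >/usr/lib/systemd/system/kube-apiserver.service <

cat >/usr/lib/systemd/system/kube-apiserver.service <

2.5 Install the controller-manager

#Prepare the systemd unit file (adjust the pod CIDR; 10.244.0.0/16 is used here)

#Tip: on a VM running the same version, bring up a kubeadm cluster, check the corresponding flags, and adjust if necessary

#All master nodes: 201, 202, 203

cat >/usr/lib/systemd/system/kube-controller-manager.service <

2.6 Install the scheduler

#Prepare the systemd unit file

#Tip: on a VM running the same version, bring up a kubeadm cluster, check the corresponding flags, and adjust if necessary

#All master nodes: 201, 202, 203

cat >/usr/lib/systemd/system/kube-scheduler.service <

Chapter 3 Installing the k8s cluster nodes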

3.1 TLS Bootstrapping configuration

Official documentation: https://kubernetes.io/zh/docs/reference/command-line-tools-reference/kubelet-tls-bootstrapping/#bootstrap-tokens

#Create the bootstrap kubeconfig on 201

cd /root/k8s-ha-install/

git checkout manual-installation-v1.23.x

git branch

cd bootstrap/

Set the cluster (note the VIP address)

kubectl config set-cluster kubernetes \

--certificate-authority=/etc/kubernetes/pki/ca.pem \

--embed-certs=true \

--server=https://192.168.44.200:7443 \

--kubeconfig=/etc/kubernetes/bootstrap-kubelet.kubeconfig

Set the user credentials (the token must match the one in bootstrap.secret.yaml or token.csv)

kubectl config set-credentials tls-bootstrap-token-user \

--token=c8ad9c.2e4d610cf3e7426e \

--kubeconfig=/etc/kubernetes/bootstrap-kubelet.kubeconfig

Set the context

kubectl config set-context tls-bootstrap-token-user@kubernetes \

--cluster=kubernetes \

--user=tls-bootstrap-token-user \

--kubeconfig=/etc/kubernetes/bootstrap-kubelet.kubeconfig

Use it as the default context

kubectl config use-context tls-bootstrap-token-user@kubernetes \

--kubeconfig=/etc/kubernetes/bootstrap-kubelet.kubeconfig
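A mistyped token is an easy way to break the bootstrap step, so it can help to check its shape before writing it into the kubeconfig. Bootstrap tokens consist of a 6-character ID and a 16-character secret, separated by a dot, using lowercase letters and digits. The sketch below checks that format and splits the token into the two fields; the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Bootstrap tokens have the form "<token-id>.<token-secret>":
# 6 and 16 characters respectively, lowercase letters and digits only.
is_bootstrap_token() {
  [[ "$1" =~ ^[a-z0-9]{6}\.[a-z0-9]{16}$ ]]
}

# Split a token into the two fields stored in the bootstrap Secret.
token_id()     { printf '%s\n' "${1%%.*}"; }
token_secret() { printf '%s\n' "${1#*.}"; }

TOKEN="c8ad9c.2e4d610cf3e7426e"
if is_bootstrap_token "${TOKEN}"; then
  echo "token-id: $(token_id "${TOKEN}")"
  echo "token-secret: $(token_secret "${TOKEN}")"
fi
```

The two printed values are the ones that have to appear as token-id and token-secret in the bootstrap Secret.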

#All master nodes: 201, 202, 203

mkdir /root/.kube -p

cp /etc/kubernetes/admin.kubeconfig /root/.kube/config

#Configure the token and RBAC rules on 201

=============================================

Method 1: static token file (recommended if you want a token that never expires)

cat /etc/kubernetes/token.csv

02b50b05283e98dd0fd71db496ef01e8,kubelet-bootstrap,10001,"system:bootstrappers"

#Requires this flag on the apiserver

--token-auth-file=/etc/kubernetes/token.csv
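Each line of a static token file has the columns token, user name, user UID, and an optional quoted group list. The sketch below shows one way to generate a random token and format such a line; `gen_token` and `token_csv_line` are hypothetical helper names, and the user, UID and group are taken from the token.csv shown above.

```shell
#!/usr/bin/env bash
# Generate a random 32-character hex token (16 bytes from /dev/urandom).
gen_token() {
  head -c 16 /dev/urandom | od -An -t x1 | tr -d ' \n'
}

# Columns: token,user,uid,"group1,group2,..." (the group column is optional).
token_csv_line() {
  local token="$1" user="$2" uid="$3" groups="$4"
  printf '%s,%s,%s,"%s"\n' "${token}" "${user}" "${uid}" "${groups}"
}

token_csv_line "$(gen_token)" kubelet-bootstrap 10001 system:bootstrappers
```

The apiserver reads this file only at startup, so a changed token.csv takes effect after kube-apiserver is restarted.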

=============================================

Method 2: bootstrap token (recommended if you want expired tokens to be cleaned up automatically)

#Requires this flag on the apiserver

--enable-bootstrap-token-auth=true

==============================================

cat ~/k8s-ha-install/bootstrap/bootstrap.secret.yaml

#Bootstrap token authentication

apiVersion: v1
kind: Secret
metadata:
  # name must be of the form "bootstrap-token-<token id>"
  name: bootstrap-token-c8ad9c
  namespace: kube-system
# type must be 'bootstrap.kubernetes.io/token'
type: bootstrap.kubernetes.io/token
stringData:
  # Human-readable description. Optional.
  description: "The default bootstrap token generated by 'kubelet '."
  # Token ID and secret. Required.
  token-id: c8ad9c
  token-secret: 2e4d610cf3e7426e
  # Expiration time. Optional.
  expiration: "2999-03-10T03:22:11Z"
  # Allowed usages.
  usage-bootstrap-authentication: "true"
  usage-bootstrap-signing: "true"
  # Extra groups to authenticate the token as; each must start with "system:bootstrappers:"
  auth-extra-groups: system:bootstrappers:default-node-token,system:bootstrappers:worker,system:bootstrappers:ingress
---
# Allow bootstrapping nodes to create CSRs
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: create-csrs-for-bootstrapping
subjects:
- kind: Group
  name: system:bootstrappers
  apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: ClusterRole
  name: system:node-bootstrapper
  apiGroup: rbac.authorization.k8s.io
---
# Approve all client CSRs from the "system:bootstrappers" group
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: auto-approve-csrs-for-group
subjects:
- kind: Group
  name: system:bootstrappers
  apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: ClusterRole
  name: system:certificates.k8s.io:certificatesigningrequests:nodeclient
  apiGroup: rbac.authorization.k8s.io
---
# Approve client CSR renewal requests from the "system:nodes" group
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: auto-approve-renewals-for-nodes
subjects:
- kind: Group
  name: system:nodes
  apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: ClusterRole
  name: system:certificates.k8s.io:certificatesigningrequests:selfnodeclient
  apiGroup: rbac.authorization.k8s.io
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: system:kube-apiserver-to-kubelet
rules:
  - apiGroups:
      - ""
    resources:
      - nodes/proxy
      - nodes/stats
      - nodes/log
      - nodes/spec
      - nodes/metrics
    verbs:
      - "*"
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: system:kube-apiserver
  namespace: ""
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:kube-apiserver-to-kubelet
subjects:
  - apiGroup: rbac.authorization.k8s.io
    kind: User
    name: kube-apiserver

===========================================================

#Apply the resources on 201

kubectl create -f bootstrap.secret.yaml

3.2 Node certificate configuration

#Create the certificate directories (all node hosts): 201, 202, 203

#Batch run from 201

cd ~

for i in $(cat host_list.txt |awk '{print $1}');\

do \

ssh root@${i} mkdir -p /etc/kubernetes/pki/etcd;\

ssh root@${i} echo "===================================================";\

ssh root@${i} echo "${i}";\

ssh root@${i} echo "===================================================";\

ssh root@${i} echo;\

done

#Create the certificate directory (all node hosts): 201, 202, 203

#Batch run from 201

cd ~

for i in $(cat host_list.txt |awk '{print $1}');\

do \

ssh root@${i} mkdir -p /etc/kubernetes/pki;\

ssh root@${i} echo "===================================================";\

ssh root@${i} echo "${i}";\

ssh root@${i} echo "===================================================";\

ssh root@${i} echo;\

done

#Distribute the certificates from 201

cd ~

for i in $(cat host_list.txt |awk '{print $1}');\

do \

rsync -avzP /etc/kubernetes/ --exclude=kubelet.kubeconfig root@${i}:/etc/kubernetes;\

ssh root@${i} tree /etc/kubernetes/;\

ssh root@${i} echo "===================================================";\

ssh root@${i} echo "${i}";\

ssh root@${i} echo "===================================================";\

ssh root@${i} echo;\

done

#Verify

#202

tree /etc/kubernetes/

/etc/kubernetes/

├── admin.kubeconfig

├── bootstrap-kubelet.kubeconfig

├── controller-manager.kubeconfig

├── manifests

├── pki

│ ├── admin.csr

│ ├── admin-key.pem

│ ├── admin.pem

│ ├── apiserver.csr

│ ├── apiserver-key.pem

│ ├── apiserver.pem

│ ├── ca.csr

│ ├── ca-key.pem

│ ├── ca.pem

│ ├── controller-manager.csr

│ ├── controller-manager-key.pem

│ ├── controller-manager.pem

│ ├── etcd

│ │ ├── etcd-ca.csr

│ │ ├── etcd-ca-key.pem

│ │ ├── etcd-ca.pem

│ │ ├── etcd.csr

│ │ ├── etcd-key.pem

│ │ └── etcd.pem

│ ├── front-proxy-ca.csr

│ ├── front-proxy-ca-key.pem

│ ├── front-proxy-ca.pem

│ ├── front-proxy-client.csr

│ ├── front-proxy-client-key.pem

│ ├── front-proxy-client.pem

│ ├── sa.key

│ ├── sa.pub

│ ├── scheduler.csr

│ ├── scheduler-key.pem

│ └── scheduler.pem

├── scheduler.kubeconfig

└── token.csv

3 directories, 34 files

3.3 Install kubelet

#Create the required directories on all node hosts: 201, 202, 203

#Batch run from 201

cd ~

for i in $(cat host_list.txt |awk '{print $1}');\

do \

ssh root@${i} mkdir -p /opt/cni/bin /etc/cni/net.d /var/lib/kubelet /var/log/kubernetes /etc/systemd/system/kubelet.service.d /etc/kubernetes/manifests/;\

ssh root@${i} echo "===================================================";\

ssh root@${i} echo "${i}";\

ssh root@${i} echo "===================================================";\

ssh root@${i} echo;\

done

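#Configure the unit files on all node hosts: 201, 202, 203

#Tip: on a VM running the same version, bring up a kubeadm cluster, check the corresponding flags, and adjust if necessary

#Edit on a single host: 201

cat >/usr/lib/systemd/system/kubelet.service <

cat >/etc/systemd/system/kubelet.service.d/10-kubelet.conf <

cat >/etc/kubernetes/kubelet-conf.yml <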

#Check the certificate validity period on 201, 202, 203

#Batch run from 201

cd ~

for i in $(cat host_list.txt |awk '{print $1}');\

do \

ssh root@${i} openssl x509 -in /var/lib/kubelet/pki/kubelet-client-current.pem -noout -text |grep -A 1 "Not Before:";\

ssh root@${i} echo "===================================================";\

ssh root@${i} echo "${i}";\

ssh root@${i} echo "===================================================";\

ssh root@${i} echo;\

done
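The loop above prints the raw validity dates. To turn the expiry date into a number of days remaining, something like the following sketch could be used. It assumes GNU date (the default on AlmaLinux); `days_left` is a hypothetical helper, and its second argument exists only so the calculation can be tried with a fixed "now".

```shell
#!/usr/bin/env bash
# Convert an openssl "notAfter" date into whole days remaining.
# Requires GNU date; now_epoch is injectable so the result is reproducible.
days_left() {
  local not_after="$1" now_epoch="${2:-$(date -u +%s)}"
  local end_epoch
  end_epoch=$(date -u -d "${not_after}" +%s) || return 1
  echo $(( (end_epoch - now_epoch) / 86400 ))
}

# Usage on a node (certificate path as used above):
#   end=$(openssl x509 -in /var/lib/kubelet/pki/kubelet-client-current.pem -noout -enddate | cut -d= -f2)
#   days_left "${end}"

# Self-contained example with fixed dates.
days_left "Jan 31 00:00:00 2030 GMT" "$(date -u -d '2030-01-01 00:00:00' +%s)"
```

A small threshold check on the result (for example, warn below 30 days) is enough to catch kubelet client certificates that failed to rotate.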

3.4 Install kube-proxy

#Create the kubeconfig on 201 (note the VIP address)

cd /root/k8s-ha-install/

git branch

#1

kubectl -n kube-system create serviceaccount kube-proxy

#2

kubectl create clusterrolebinding system:kube-proxy \

--clusterrole system:node-proxier \

--serviceaccount kube-system:kube-proxy

#3

SECRET=$(kubectl -n kube-system get sa/kube-proxy \

--output=jsonpath='{.secrets[0].name}')

#4

JWT_TOKEN=$(kubectl -n kube-system get secret/$SECRET \

--output=jsonpath='{.data.token}' | base64 -d)

#5

PKI_DIR=/etc/kubernetes/pki

#6

K8S_DIR=/etc/kubernetes

#7

kubectl config set-cluster kubernetes \

--certificate-authority=/etc/kubernetes/pki/ca.pem \

--embed-certs=true \

--server=https://192.168.44.200:7443 \

--kubeconfig=${K8S_DIR}/kube-proxy.kubeconfig

#8

kubectl config set-credentials kubernetes \

--token=${JWT_TOKEN} \

--kubeconfig=/etc/kubernetes/kube-proxy.kubeconfig

#9

kubectl config set-context kubernetes \

--cluster=kubernetes \

--user=kubernetes \

--kubeconfig=/etc/kubernetes/kube-proxy.kubeconfig

#10

kubectl config use-context kubernetes \

--kubeconfig=/etc/kubernetes/kube-proxy.kubeconfig

#Distribute the configuration to the other node hosts from 201

cd /root/k8s-ha-install/

git branch

ll kube-proxy/

total 12

-rw-r--r-- 1 root root 813 Dec 24 01:06 kube-proxy.conf

-rw-r--r-- 1 root root 288 Dec 24 01:06 kube-proxy.service

-rw-r--r-- 1 root root 3677 Dec 24 01:06 kube-proxy.yml

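#Edit kube-proxy.conf and set the pod network to 10.244.0.0/16

#Tip: on a VM running the same version, bring up a kubeadm cluster, check the corresponding flags, and adjust if necessary

#Edit on a single host: 201

cat >/etc/kubernetes/kube-proxy.yml <

cat >/usr/lib/systemd/system/kube-proxy.service <

Chapter 4 Installing the k8s cluster add-ons

4.1 Install Calico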

https://kubernetes.io/docs/concepts/cluster-administration/addons/

#201

Calico is used as the network plugin here

cd /root/k8s-ha-install

git checkout manual-installation-v1.23.x

git branch

cd calico/

#Edit the manifest on 201; note the IP addresses

cp calico-etcd.yaml calico-etcd.yaml.bak$(date +"%F-%H-%M-%S")

sed -i 's#etcd_endpoints: "http://:"#etcd_endpoints: "https://192.168.44.201:2379,https://192.168.44.202:2379,https://192.168.44.203:2379"#g' calico-etcd.yaml

ETCD_CA=`cat /etc/kubernetes/pki/etcd/etcd-ca.pem | base64 | tr -d '\n'`

ETCD_CERT=`cat /etc/kubernetes/pki/etcd/etcd.pem | base64 | tr -d '\n'`

ETCD_KEY=`cat /etc/kubernetes/pki/etcd/etcd-key.pem | base64 | tr -d '\n'`

sed -i "s@# etcd-key: null@etcd-key: ${ETCD_KEY}@g; s@# etcd-cert: null@etcd-cert: ${ETCD_CERT}@g; s@# etcd-ca: null@etcd-ca: ${ETCD_CA}@g" calico-etcd.yaml

sed -i 's#etcd_ca: ""#etcd_ca: "/calico-secrets/etcd-ca"#g; s#etcd_cert: ""#etcd_cert: "/calico-secrets/etcd-cert"#g; s#etcd_key: "" #etcd_key: "/calico-secrets/etcd-key" #g' calico-etcd.yaml

POD_SUBNET="10.244.0.0/16"

sed -i 's@- name: CALICO_IPV4POOL_CIDR@- name: CALICO_IPV4POOL_CIDR@g; s@value: "POD_CIDR"@value: '"${POD_SUBNET}"'@g' calico-etcd.yaml
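The sed commands above inject base64-encoded certificates into placeholders in the manifest. The sketch below replays the same two steps on a throwaway file so the substitution can be checked before touching calico-etcd.yaml. `b64_oneline` and `fill_secret` are hypothetical helper names, and GNU sed is assumed for `-i`.

```shell
#!/usr/bin/env bash
# Encode a file as single-line base64 (same result as `base64 | tr -d '\n'`).
b64_oneline() {
  base64 < "$1" | tr -d '\n'
}

# Replace a commented-out "# <key>: null" placeholder with a real value.
# "@" is the sed delimiter because base64 output can contain "/" and "+".
fill_secret() {
  local file="$1" key="$2" value="$3"
  sed -i "s@# ${key}: null@${key}: ${value}@g" "${file}"
}

# Demo on temporary files, not on the real calico-etcd.yaml.
demo=$(mktemp)
printf 'hello' > "${demo}.src"
printf '  # etcd-key: null\n' > "${demo}"
fill_secret "${demo}" etcd-key "$(b64_oneline "${demo}.src")"
cat "${demo}"
rm -f "${demo}" "${demo}.src"
```

After running the real substitutions, a quick `grep -n 'etcd-' calico-etcd.yaml` shows whether every placeholder was filled.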

#Install from 201

kubectl apply -f calico-etcd.yaml

kubectl get --all-namespaces pod

kubectl get service

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.0.0.1 <none> 443/TCP 77m

kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-192-168-44-201.host.com Ready <none> 33m v1.23.5

k8s-192-168-44-202.host.com Ready <none> 33m v1.23.5

k8s-192-168-44-203.host.com Ready <none> 33m v1.23.5

#Set the node roles (all master nodes) from 201

cd ~

for i in k8s-192-168-44-{201..203}.host.com;\

do \

kubectl label node ${i} node-role.kubernetes.io/master=;\

kubectl label node ${i} node-role.kubernetes.io/node=;\

done

#Set the node roles (all node hosts) from 201

cd ~

for i in $(kubectl get nodes |awk "NR>1"'{print $1}');\

do \

kubectl label node ${i} node-role.kubernetes.io/node=;\

done

#Check the result on 201

kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-192-168-44-201.host.com Ready master,node 37m v1.23.5

k8s-192-168-44-202.host.com Ready master,node 37m v1.23.5

k8s-192-168-44-203.host.com Ready master,node 37m v1.23.5

4.2 Install CoreDNS

#201

cd /root/k8s-ha-install/

git checkout manual-installation-v1.23.x

git branch

cd CoreDNS/

#Change the CoreDNS service IP to 10.0.0.10

vim coredns.yaml

spec:

selector:

k8s-app: kube-dns

clusterIP: 10.0.0.10

#Apply the manifest on 201

kubectl apply -f coredns.yaml

#Check the result

kubectl get --all-namespaces all

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system pod/calico-kube-controllers-5f6d4b864b-rfgxl 1/1 Running 0 55m

kube-system pod/calico-node-4f7h9 1/1 Running 0 55m

kube-system pod/calico-node-4j57l 1/1 Running 0 55m

kube-system pod/calico-node-r8dxd 1/1 Running 0 55m

kube-system pod/coredns-867d46bfc6-9zt6d 1/1 Running 0 23s

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

default service/kubernetes ClusterIP 10.0.0.1 <none> 443/TCP 10h

kube-system service/kube-dns ClusterIP 10.0.0.10 <none> 53/UDP,53/TCP,9153/TCP 24s

NAMESPACE NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

kube-system daemonset.apps/calico-node 3 3 3 3 3 kubernetes.io/os=linux 55m

NAMESPACE NAME READY UP-TO-DATE AVAILABLE AGE

kube-system deployment.apps/calico-kube-controllers 1/1 1 1 55m

kube-system deployment.apps/coredns 1/1 1 1 25s

NAMESPACE NAME DESIRED CURRENT READY AGE

kube-system replicaset.apps/calico-kube-controllers-5f6d4b864b 1 1 1 55m

kube-system replicaset.apps/coredns-867d46bfc6 1 1 1 24s

4.3 Install metrics-server

#201

cd /root/k8s-ha-install/metrics-server/

kubectl apply -f comp.yaml

kubectl get --all-namespaces pod

kubectl top node

kubectl top pod --all-namespaces

#Script to save images in bulk

cat image_save.sh

#!/bin/bash
#fun: save docker image list to local disk
source /etc/init.d/functions

docker image ls|awk 'NR>1 {print $1":"$2}' >/tmp/image_list.txt

for image_name in $(cat /tmp/image_list.txt)
do
  #Build a flat file name: replace "/", "." and ":" so the archive lands directly in /tmp
  tmp_name=${image_name//\//_}
  tmp1_name=${tmp_name//./-}
  file_name=${tmp1_name//:/-}.tar
  echo "saving image ${image_name}...."
  docker image save ${image_name} >/tmp/${file_name}
  action "image ${image_name} has been saved!" /bin/true
done
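The file name derivation is the part of the save script most likely to go wrong, because image names usually contain a repository path with "/". The sketch below isolates that step in a function so it can be tried without Docker. `image_to_filename` is a hypothetical name, and replacing "/" with "_" is an assumption made here to keep every archive directly under /tmp.

```shell
#!/usr/bin/env bash
# Turn "repo/name:tag" into a flat archive name.
image_to_filename() {
  local name="$1"
  name="${name//\//_}"   # registry and repository separators
  name="${name//./-}"    # dots in registry hosts and version tags
  name="${name//:/-}"    # the tag separator
  printf '%s.tar\n' "${name}"
}

image_to_filename "calico/node:v3.22.0"
image_to_filename "nginx:1.21"
```

Keeping "_" for the path separator and "-" for everything else means an archive name can still be read back to the image it came from.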

#Script to load images in bulk

cat image_load.sh

#!/bin/bash
#fun: load docker image list from local disk
source /etc/init.d/functions

for image_name in $(find /tmp/ -name "*.tar")
do
  echo "loading image ${image_name//\/tmp\//}...."
  docker image load -i ${image_name}
  action "image ${image_name//\/tmp\//} has been loaded!" /bin/true
done

docker image ls

echo "Import finished: loaded $(find /tmp/ -name "*.tar"|wc -l) images in this run; the system now has $(docker image ls |awk 'NR>1'|wc -l) images in total."

4.4 Install ingress-nginx

This section can be skipped; see Chapter 9 (Ingress resources in detail) to install the latest version.

#201

Project page: https://github.com/kubernetes/ingress-nginx

mkdir /data/k8s-yaml/ingress-controller -p

cd /data/k8s-yaml/ingress-controller

=====================================================

cat ingress-controller.yaml

apiVersion: v1
kind: Namespace
metadata:
  name: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: nginx-configuration
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: tcp-services
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: udp-services
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: nginx-ingress-serviceaccount
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
---
# rbac.authorization.k8s.io/v1beta1 was removed in Kubernetes v1.22, so v1 is used on this v1.23.5 cluster
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: nginx-ingress-clusterrole
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
rules:
  - apiGroups:
      - ""
    resources:
      - configmaps
      - endpoints
      - nodes
      - pods
      - secrets
    verbs:
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - nodes
    verbs:
      - get
  - apiGroups:
      - ""
    resources:
      - services
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - events
    verbs:
      - create
      - patch
  - apiGroups:
      - "extensions"
      - "networking.k8s.io"
    resources:
      - ingresses
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - "extensions"
      - "networking.k8s.io"
    resources:
      - ingresses/status
    verbs:
      - update
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: nginx-ingress-role
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
rules:
  - apiGroups:
      - ""
    resources:
      - configmaps
      - pods
      - secrets
      - namespaces
    verbs:
      - get
  - apiGroups:
      - ""
    resources:
      - configmaps
    resourceNames:
      # Defaults to "<election-id>-<ingress-class>"
      # Here: "<ingress-controller-leader>-<nginx>"
      # This has to be adapted if you change either parameter
      # when launching the nginx-ingress-controller.
      - "ingress-controller-leader-nginx"
    verbs:
      - get
      - update
  - apiGroups:
      - ""
    resources:
      - configmaps
    verbs:
      - create
  - apiGroups:
      - ""
    resources:
      - endpoints
    verbs:
      - get
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: nginx-ingress-role-nisa-binding
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: nginx-ingress-role
subjects:
  - kind: ServiceAccount
    name: nginx-ingress-serviceaccount
    namespace: ingress-nginx
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: nginx-ingress-clusterrole-nisa-binding
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: nginx-ingress-clusterrole
subjects:
  - kind: ServiceAccount
    name: nginx-ingress-serviceaccount
    namespace: ingress-nginx
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-ingress-controller
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
spec:
  replicas: 1
  selector:
    matchLabels:
      app.kubernetes.io/name: ingress-nginx
      app.kubernetes.io/part-of: ingress-nginx
  template:
    metadata:
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
      annotations:
        prometheus.io/port: "10254"
        prometheus.io/scrape: "true"
    spec:
      #hostNetwork: true
      # wait up to five minutes for the drain of connections
      terminationGracePeriodSeconds: 300
      serviceAccountName: nginx-ingress-serviceaccount
      nodeSelector:
        kubernetes.io/os: linux
      containers:
        - name: nginx-ingress-controller
          image: lizhenliang/nginx-ingress-controller:0.30.0
          args:
            - /nginx-ingress-controller
            - --configmap=$(POD_NAMESPACE)/nginx-configuration
            - --tcp-services-configmap=$(POD_NAMESPACE)/tcp-services
            - --udp-services-configmap=$(POD_NAMESPACE)/udp-services
            - --publish-service=$(POD_NAMESPACE)/ingress-nginx
            - --annotations-prefix=nginx.ingress.kubernetes.io
          securityContext:
            allowPrivilegeEscalation: true
            capabilities:
              drop:
                - ALL
              add:
                - NET_BIND_SERVICE
            # www-data -> 101
            runAsUser: 101
          env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
          ports:
            - name: http
              containerPort: 80
              protocol: TCP
            - name: https
              containerPort: 443
              protocol: TCP
          livenessProbe:
            failureThreshold: 3
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            initialDelaySeconds: 10
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 10
          readinessProbe:
            failureThreshold: 3
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 10
          lifecycle:
            preStop:
              exec:
                command:
                  - /wait-shutdown
---
apiVersion: v1
kind: LimitRange
metadata:
  name: ingress-nginx
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
spec:
  limits:
  - min:
      memory: 90Mi
      cpu: 100m
    type: Container

=====================================================

cat svc.yaml

apiVersion: v1
kind: Service
metadata:
  creationTimestamp: null
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
  name: nginx-ingress-controller
  namespace: ingress-nginx
spec:
  ports:
  - name: port-1
    port: 80
    protocol: TCP
    targetPort: 80
    nodePort: 30030
  - name: port-2
    port: 443
    protocol: TCP
    targetPort: 443
    nodePort: 30033
  selector:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
  type: NodePort
status:
  loadBalancer: {}
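The Service pins its nodePort values, and the apiserver rejects a fixed nodePort that falls outside the configured range. The sketch below checks a port against the Kubernetes default range of 30000-32767. `is_valid_nodeport` is a hypothetical helper, and the default range is an assumption here: if kube-apiserver was started with a different --service-node-port-range, pass that range as the second and third arguments.

```shell
#!/usr/bin/env bash
# Return success when the port is numeric and inside [min, max].
# The defaults are the upstream NodePort range, not a value taken from this cluster.
is_valid_nodeport() {
  local port="$1" min="${2:-30000}" max="${3:-32767}"
  [[ "${port}" =~ ^[0-9]+$ ]] && (( port >= min && port <= max ))
}

for p in 30030 30033; do
  if is_valid_nodeport "${p}"; then
    echo "nodePort ${p} is inside the default range"
  fi
done
```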

====================================================

#Apply the resources on 201

kubectl apply -f ingress-controller.yaml

kubectl apply -f svc.yaml

kubectl get -n ingress-nginx all

NAME READY STATUS RESTARTS AGE

pod/nginx-ingress-controller-5759f86b89-bmx9q 1/1 Running 0 4m2s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/nginx-ingress-controller NodePort 10.0.158.23 <none> 80:30030/TCP,443:30033/TCP 4m

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/nginx-ingress-controller 1/1 1 1 4m2s

NAME DESIRED CURRENT READY AGE

replicaset.apps/nginx-ingress-controller-5759f86b89 1 1 1 4m2s

#Configure the proxy on 201, 202, 203

cat >/etc/nginx/conf.d/od.com.conf <

4.5 Configure StorageClass dynamic provisioning

#Server: 201

mkdir /volume -p

yum install -y nfs-utils rpcbind

echo '/volume 192.168.44.0/24(insecure,rw,sync,no_root_squash,no_all_squash)' >/etc/exports

systemctl start rpcbind.service

systemctl enable rpcbind.service

systemctl start nfs-server

systemctl enable nfs-server

#Clients: all node hosts (202 and 203)

#Batch run from 201

cd ~

for i in $(cat host_list.txt |awk '{print $1}');\

do \

ssh root@${i} yum install -y nfs-utils;\

ssh root@${i} showmount -e 192.168.44.201;\

ssh root@${i} echo "===================================================";\

ssh root@${i} echo "${i}";\

ssh root@${i} echo "===================================================";\

ssh root@${i} echo;\

done

#Create the directory on 201 (the first storage class gets the directory numbered 1)

mkdir /data/k8s-yaml/storageClass/1 -p

cd /data/k8s-yaml/storageClass/1

==================================================

cat >rbac.yaml <

cat >dp.yaml <

cat >sc.yaml <

4.6 Install KubeSphere for cluster management

https://kubesphere.com.cn/

#201

mkdir /data/k8s-yaml/kubesphere -p

cd /data/k8s-yaml/kubesphere/

wget https://github.com/kubesphere/ks-installer/releases/download/v3.2.1/kubesphere-installer.yaml

wget https://github.com/kubesphere/ks-installer/releases/download/v3.2.1/cluster-configuration.yaml

#Edit the configuration

vim cluster-configuration.yaml

etcd:

monitoring: true # Enable or disable etcd monitoring dashboard installation. You have to create a Secret for etcd before you enable it.

endpointIps: 192.168.44.201 # etcd cluster EndpointIps. It can be a bunch of IPs here.

port: 2379 # etcd port.

tlsEnable: true

redis:

enabled: true

openldap:

enabled: true

basicAuth:

enabled: false

console:

enableMultiLogin: true

alerting: # (CPU: 0.1 Core, Memory: 100 MiB) It enables users to customize alerting policies to send messages to receivers in time with different time intervals and alerting levels to choose from.

enabled: true

auditing: # Provide a security-relevant chronological set of records,recording the sequence of activities happening on the platform, initiated by different tenants.

enabled: true

devops: # (CPU: 0.47 Core, Memory: 8.6 G) Provide an out-of-the-box CI/CD system based on Jenkins, and automated workflow tools including Source-to-Image & Binary-to-Image.

enabled: true

events: # Provide a graphical web console for Kubernetes Events exporting, filtering and alerting in multi-tenant Kubernetes clusters.

enabled: true

ruler:

enabled: true

logging: # (CPU: 57 m, Memory: 2.76 G) Flexible logging functions are provided for log query, collection and management in a unified console. Additional log collectors can be added, such as Elasticsearch, Kafka and Fluentd.

enabled: true

logsidecar:

enabled: true

metrics_server: # (CPU: 56 m, Memory: 44.35 MiB) It enables HPA (Horizontal Pod Autoscaler).

enabled: false

networkpolicy: # Network policies allow network isolation within the same cluster, which means firewalls can be set up between certain instances (Pods).

# Make sure that the CNI network plugin used by the cluster supports NetworkPolicy. There are a number of CNI network plugins that support NetworkPolicy, including Calico, Cilium, Kube-router, Romana and Weave Net.

enabled: true

store:

enabled: true

servicemesh: # (0.3 Core, 300 MiB) Provide fine-grained traffic management, observability and tracing, and visualized traffic topology.

enabled: true

kubeedge: # Add edge nodes to your cluster and deploy workloads on edge nodes.

enabled: true

#Make the master schedulable, because this platform is resource-hungry

kubectl taint node k8s-192-168-44-201.host.com node-role.kubernetes.io/master-

kubectl describe node k8s-192-168-44-201.host.com

#Label the node

kubectl label nodes k8s-192-168-44-201.host.com node-role.kubernetes.io/worker=

#Apply the resources

kubectl apply -f kubesphere-installer.yaml

kubectl apply -f cluster-configuration.yaml

#Check the result

kubectl get --all-namespaces pod

kubesphere-system ks-installer-54c6bcf76b-whxgj 0/1 ContainerCreating 0 74s

#Follow the installer log; no task may fail

kubectl logs -n kubesphere-system $(kubectl get pod -n kubesphere-system -l app=ks-install -o jsonpath='{.items[0].metadata.name}') -f

localhost : ok=31 changed=25 unreachable=0 failed=0 skipped=15 rescued=0 ignored=0
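Instead of reading the recap line by eye, the counters can be extracted and checked. The sketch below parses a recap line of the shape shown above and succeeds only when both failed and unreachable are zero. `recap_value` and `recap_ok` are hypothetical helper names, and the sample line is copied from the log output above.

```shell
#!/usr/bin/env bash
# Extract one counter (for example "failed") from a recap line.
recap_value() {
  local line="$1" key="$2"
  sed -n "s/.*[[:space:]]${key}=\([0-9][0-9]*\).*/\1/p" <<<"${line}"
}

# Succeed only when nothing failed and no host was unreachable.
recap_ok() {
  [ "$(recap_value "$1" failed)" = "0" ] && [ "$(recap_value "$1" unreachable)" = "0" ]
}

line='localhost : ok=31 changed=25 unreachable=0 failed=0 skipped=15 rescued=0 ignored=0'
if recap_ok "${line}"; then
  echo "installer recap is clean"
fi
```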

#Installation finished

localhost : ok=31 changed=25 unreachable=0 failed=0 skipped=15 rescued=0 ignored=0

Start installing monitoring

Start installing multicluster

Start installing openpitrix

Start installing network

**************************************************

Waiting for all tasks to be completed ...

task network status is successful (1/4)

task openpitrix status is successful (2/4)

task multicluster status is successful (3/4)

task monitoring status is successful (4/4)

**************************************************

Collecting installation results ...

#####################################################

### Welcome to KubeSphere! ###

#####################################################

Console: http://192.168.44.201:30880

Account: admin

Password: P@88w0rd

NOTES:

1. After you log into the console, please check the

monitoring status of service components in

"Cluster Management". If any service is not

ready, please wait patiently until all components

are up and running.

2. Please change the default password after login.

#####################################################

https://kubesphere.io 2021-10-03 19:21:41

#####################################################

Console view after installation

Fixing the monitoring module error

#201

kubectl -n kubesphere-monitoring-system create secret generic kube-etcd-client-certs \

--from-file=etcd-client-ca.crt=/etc/kubernetes/pki/etcd/etcd-ca.pem \

--from-file=etcd-client.crt=/etc/kubernetes/pki/etcd/etcd.pem \

--from-file=etcd-client.key=/etc/kubernetes/pki/etcd/etcd-key.pem