Keras-4 - Deep Learning for Computer Vision - Training a Convnet on the Cats vs. Dogs Dataset

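0. Notes:

These study notes cover Chapter 5 (Deep learning for computer vision), Section 2 (Training a convnet from scratch on a small dataset) of "Deep Learning with Python".

Topics covered:

- training a convnet from scratch;

- data augmentation;

- dropout.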

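1. Training a convnet on the cats vs. dogs dataset:

1.1 Downloading and splitting the dataset:

Download link: https://www.kaggle.com/c/dogs-vs-cats/data

The training set contains 25,000 cat and dog images (12,500 per class).

Only a small part of the data is used here: 1,000 samples per class for training, 500 per class for validation, and 500 per class for testing.

Extracting the original archive yields sampleSubmission.csv, test1.zip, and train.zip. Extracting test1.zip yields test1/, which contains 12,500 unlabeled cat and dog images; extracting train.zip yields the 25,000 labeled cat and dog images.

The resulting split is:

train: cat(1000), dog(1000)

val: cat(500), dog(500)

test: cat(500), dog(500)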

import os

## Create the target directories first (cp fails if they are missing)
for split in ("train", "validation", "test"):
    for animal in ("cats", "dogs"):
        os.makedirs("./dogs-vs-cats/small_dt/{0}/{0}_{1}".format(split, animal), exist_ok=True)

## Copy the first 1000 cat and dog images (indices 0-999) into the training directories
for i in range(1000):
    os.system("cp ./dogs-vs-cats/train/cat.{}.jpg ./dogs-vs-cats/small_dt/train/train_cats/".format(i))
    os.system("cp ./dogs-vs-cats/train/dog.{}.jpg ./dogs-vs-cats/small_dt/train/train_dogs/".format(i))

## Copy images 1000-1499 into the validation directories
for j in range(1000, 1500):
    os.system("cp ./dogs-vs-cats/train/cat.{}.jpg ./dogs-vs-cats/small_dt/validation/validation_cats/".format(j))
    os.system("cp ./dogs-vs-cats/train/dog.{}.jpg ./dogs-vs-cats/small_dt/validation/validation_dogs/".format(j))

## Copy images 1500-1999 into the test directories
for k in range(1500, 2000):
    os.system("cp ./dogs-vs-cats/train/cat.{}.jpg ./dogs-vs-cats/small_dt/test/test_cats/".format(k))
    os.system("cp ./dogs-vs-cats/train/dog.{}.jpg ./dogs-vs-cats/small_dt/test/test_dogs/".format(k))
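The `cp` calls above depend on a Unix shell. A portable variant can be sketched with `shutil`. The helper names `copy_range` and `build_small_dataset` and the `SPLITS` table are made up for this illustration; the file naming (`cat.0.jpg`, `dog.0.jpg`, ...) and the index ranges are the ones used above.

```python
import os
import shutil


def copy_range(src_dir, dst_dir, label, indices):
    """Copy <label>.<i>.jpg for each i in indices from src_dir to dst_dir.

    Hypothetical helper; returns the number of files copied.
    """
    os.makedirs(dst_dir, exist_ok=True)  # create the target directory if needed
    copied = 0
    for i in indices:
        name = "{}.{}.jpg".format(label, i)
        shutil.copyfile(os.path.join(src_dir, name), os.path.join(dst_dir, name))
        copied += 1
    return copied


# Same index ranges as the loops above.
SPLITS = {
    "train": range(0, 1000),
    "validation": range(1000, 1500),
    "test": range(1500, 2000),
}


def build_small_dataset(src_dir, base_dir):
    """Recreate the train/validation/test layout under base_dir."""
    counts = {}
    for split, indices in SPLITS.items():
        for label in ("cat", "dog"):
            dst = os.path.join(base_dir, split, "{}_{}s".format(split, label))
            counts[(split, label)] = copy_range(src_dir, dst, label, indices)
    return counts
```

With the paths used in this post, the call would be `build_small_dataset("./dogs-vs-cats/train", "./dogs-vs-cats/small_dt")`.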

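1.2 Data preprocessing:

Processing steps:

- Read the image files;

- Decode the JPEG content into RGB grids of pixels;

- Convert these pixel grids into floating-point tensors;

- Rescale the pixel values (0-255) to the [0, 1] interval (neural networks handle small input values better).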
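The last two steps above amount to a dtype conversion and a division. A minimal sketch with NumPy, assuming the image has already been decoded into a `uint8` array of shape (height, width, 3). The function name `to_float_tensor` is made up for this example, and the random array merely stands in for a decoded JPEG.

```python
import numpy as np


def to_float_tensor(pixels):
    """Convert 0-255 integer pixels to float32 values in [0, 1]."""
    return np.asarray(pixels, dtype="float32") / 255.0


# Stand-in for a decoded 150x150 RGB image (random values, not a real photo).
rng = np.random.default_rng(0)
fake_image = rng.integers(0, 256, size=(150, 150, 3), dtype=np.uint8)

tensor = to_float_tensor(fake_image)
print(tensor.dtype, tensor.shape)
```

`ImageDataGenerator(rescale=1./255)` applies the same multiplication to the image data it yields.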

Note that each split directory must contain one subdirectory per class:

train/
    train_cats/
    train_dogs/
validation/
    validation_cats/
    validation_dogs/
test/
    test_cats/
    test_dogs/

from keras.preprocessing.image import ImageDataGenerator  ## turns image files on disk into batches of preprocessed tensors

## Rescale all images by 1./255
train_datagen = ImageDataGenerator(rescale=1./255)
test_datagen = ImageDataGenerator(rescale=1./255)

## Create the batch generators
train_generator = train_datagen.flow_from_directory(
    directory="./dogs-vs-cats/small_dt/train/",  ## directory containing the training images
    target_size=(150, 150),  ## resize all images to 150x150
    batch_size=20,  ## batch size
    class_mode="binary")  ## binary classification, so use binary labels (0, 1)

validation_generator = test_datagen.flow_from_directory(
    directory="./dogs-vs-cats/small_dt/validation/",  ## directory containing the validation images
    target_size=(150, 150),
    batch_size=20,
    class_mode="binary")

Found 2000 images belonging to 2 classes.

Found 1000 images belonging to 2 classes.

## The generator yields batches indefinitely, so break after the first one
for data_batch, labels_batch in train_generator:
    print(data_batch.shape)
    print(labels_batch.shape)
    break

(20, 150, 150, 3)

(20,)
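`flow_from_directory` treats each subdirectory as one class and assigns integer labels in alphanumeric order of the subdirectory names (the mapping is exposed as `train_generator.class_indices`). The following sketch reproduces that ordering in plain Python. `class_indices_for` is a made-up helper name, and it only mimics the mapping rather than calling Keras.

```python
def class_indices_for(subdirs):
    """Map class subdirectory names to integer labels in sorted order."""
    return {name: index for index, name in enumerate(sorted(subdirs))}


print(class_indices_for(["train_dogs", "train_cats"]))
# {'train_cats': 0, 'train_dogs': 1}
```

With the directory names used here, cats are therefore label 0 and dogs are label 1.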

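1.3 Building the network:

Compared with the earlier MNIST example, the images here are larger and the problem is more complex, so the convnet is correspondingly larger.

As in the MNIST example, the network is a stack of alternating Conv2D layers (with relu activation) and MaxPooling2D layers.

Adding more layers both increases the capacity of the network and further reduces the size of the feature maps, so that they are not overly large when they reach the Flatten layer.

The depth of the feature maps progressively increases through the network (from 32 to 128), while their spatial size decreases (from 150x150 at the input to 7x7). This pattern appears in almost all convnets.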

from keras import layers
from keras import models

model = models.Sequential()
model.add(layers.Conv2D(32, (3,3), activation="relu", input_shape=(150, 150, 3)))  ## conv 1 (32 filters, 3x3 kernel)
model.add(layers.MaxPool2D((2,2)))  ## max pooling 1
model.add(layers.Conv2D(64, (3,3), activation="relu"))  ## conv 2
model.add(layers.MaxPool2D((2,2)))  ## max pooling 2
model.add(layers.Conv2D(128, (3,3), activation="relu"))  ## conv 3
model.add(layers.MaxPool2D((2,2)))  ## max pooling 3
model.add(layers.Conv2D(128, (3,3), activation="relu"))  ## conv 4
model.add(layers.MaxPool2D((2,2)))  ## max pooling 4
model.add(layers.Flatten())  ## Flatten
model.add(layers.Dense(512, activation="relu"))  ## FC1 (512 hidden units)
model.add(layers.Dense(1, activation="sigmoid"))  ## output layer (1 unit producing a single probability; sigmoid activation for binary classification)

Metal device set to: Apple M1

systemMemory: 8.00 GB

maxCacheSize: 2.67 GB

Inspect how the shape of the feature maps changes from layer to layer:

model.summary()

Model: "sequential"
_________________________________________________________________
 Layer (type)                    Output Shape              Param #
=================================================================
 conv2d (Conv2D)                 (None, 148, 148, 32)      896
 max_pooling2d (MaxPooling2D)    (None, 74, 74, 32)        0
 conv2d_1 (Conv2D)               (None, 72, 72, 64)        18496
 max_pooling2d_1 (MaxPooling2D)  (None, 36, 36, 64)        0
 conv2d_2 (Conv2D)               (None, 34, 34, 128)       73856
 max_pooling2d_2 (MaxPooling2D)  (None, 17, 17, 128)       0
 conv2d_3 (Conv2D)               (None, 15, 15, 128)       147584
 max_pooling2d_3 (MaxPooling2D)  (None, 7, 7, 128)         0
 flatten (Flatten)               (None, 6272)              0
 dense (Dense)                   (None, 512)               3211776
 dense_1 (Dense)                 (None, 1)                 513
=================================================================
Total params: 3,453,121
Trainable params: 3,453,121
Non-trainable params: 0
_________________________________________________________________
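The output shapes in the summary follow from two rules: a 3x3 convolution without padding (the Keras default, `padding="valid"`) removes 2 pixels from each spatial dimension, and 2x2 max pooling halves it, rounding down. A short sketch that retraces the numbers; the helper names are made up for this example.

```python
def conv_output(size, kernel=3):
    """Spatial size after a 'valid' convolution with stride 1."""
    return size - kernel + 1


def pool_output(size, pool=2):
    """Spatial size after non-overlapping max pooling (remainder is dropped)."""
    return size // pool


size = 150
trace = []
for filters in (32, 64, 128, 128):  # filter counts of the four Conv2D layers
    size = conv_output(size)
    trace.append(("conv", size, filters))
    size = pool_output(size)
    trace.append(("pool", size, filters))

flattened = size * size * 128  # what Flatten produces from the last 7x7x128 map
print(trace)
print(flattened)
```

The sizes come out as 148, 74, 72, 36, 34, 17, 15, 7, and the flattened vector has 7 x 7 x 128 = 6272 entries, matching the summary.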

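How the Param # column is computed (taking the first value, 896, as an example): number of parameters (896) = number of filters (32) x weights per filter (3x3x3) + one bias per filter (32).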
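The same rule can be checked against every row of the summary. In this sketch the function names are made up; the layer sizes are the ones from the model defined above.

```python
def conv2d_params(filters, kernel_h, kernel_w, in_channels):
    """Weights (filters * kernel volume) plus one bias per filter."""
    return filters * kernel_h * kernel_w * in_channels + filters


def dense_params(in_features, units):
    """One weight per input-unit pair plus one bias per unit."""
    return in_features * units + units


counts = [
    conv2d_params(32, 3, 3, 3),      # conv2d
    conv2d_params(64, 3, 3, 32),     # conv2d_1
    conv2d_params(128, 3, 3, 64),    # conv2d_2
    conv2d_params(128, 3, 3, 128),   # conv2d_3
    dense_params(7 * 7 * 128, 512),  # dense
    dense_params(512, 1),            # dense_1
]
print(counts)
print(sum(counts))
```

Almost all of the 3,453,121 parameters sit in the first Dense layer, which is why keeping the flattened feature map small matters.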

## Configure and compile the model
from keras import optimizers

model.compile(optimizer=optimizers.RMSprop(learning_rate=1e-4),
              loss="binary_crossentropy",  ## binary cross-entropy loss for a binary classification problem
              metrics=["acc"])
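For reference, binary cross-entropy for a label y in {0, 1} and a predicted probability p is -(y log p + (1 - y) log(1 - p)), averaged over the batch. A plain-Python sketch; the function name and the clipping constant `eps` are choices made for this example, not Keras internals.

```python
import math


def binary_crossentropy(y_true, y_pred, eps=1e-7):
    """Mean binary cross-entropy over paired labels and probabilities."""
    total = 0.0
    for y, p in zip(y_true, y_pred):
        p = min(max(p, eps), 1.0 - eps)  # keep log() away from 0
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(y_true)


# A model that always outputs 0.5 scores ln(2), about 0.693.
print(binary_crossentropy([0, 1, 1, 0], [0.5, 0.5, 0.5, 0.5]))
```

This is why a loss close to 0.69 indicates a network that is still near chance level on a balanced two-class problem.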

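1.4 Fitting the model with fit_generator():

## Note: recent Keras versions deprecate fit_generator; Model.fit also supports generators
history = model.fit_generator(
    train_generator,
    steps_per_epoch=100,  ## 2000 training samples with a batch size of 20: 100 batches cover all of them once
    epochs=30,  ## train for 30 epochs
    validation_data=validation_generator,
    validation_steps=50  ## 1000 validation samples with a batch size of 20: 50 batches cover all of them
)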
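The two step counts above are simply the number of samples divided by the batch size, rounded up when the division is not exact. A small sketch; `steps_for` is a made-up helper name.

```python
import math


def steps_for(num_samples, batch_size):
    """Number of batches needed to see every sample once."""
    return math.ceil(num_samples / batch_size)


print(steps_for(2000, 20))  # 100
print(steps_for(1000, 20))  # 50
```

Because the generator loops over the data endlessly, these counts are what tells Keras where one epoch (or one validation pass) ends.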

Epoch 1/30

/var/folders/0w/m6x2g_g94sqfmg3k8dldpwgm0000gn/T/ipykernel_32812/2996549298.py:1: UserWarning: `Model.fit_generator` is deprecated and will be removed in a future version. Please use `Model.fit`, which supports generators.

history = model.fit_generator(

2023-06-25 13:34:09.683332: W tensorflow/tsl/platform/profile_utils/cpu_utils.cc:128] Failed to get CPU frequency: 0 Hz

100/100 [==============================] - 8s 69ms/step - loss: 0.6898 - acc: 0.5255 - val_loss: 0.6737 - val_acc: 0.5600

Epoch 2/30

100/100 [==============================] - 7s 67ms/step - loss: 0.6615 - acc: 0.6030 - val_loss: 0.6755 - val_acc: 0.5670

Epoch 3/30

100/100 [==============================] - 7s 66ms/step - loss: 0.6243 - acc: 0.6525 - val_loss: 0.6122 - val_acc: 0.6680

Epoch 29/30

100/100 [==============================] - 7s 67ms/step - loss: 0.0574 - acc: 0.9845 - val_loss: 0.9850 - val_acc: 0.7270

Epoch 30/30

100/100 [==============================] - 7s 67ms/step - loss: 0.0482 - acc: 0.9875 - val_loss: 0.9249 - val_acc: 0.7320

1.5 Saving the model:

model.save("cats_and_dogs_small_1.h5")

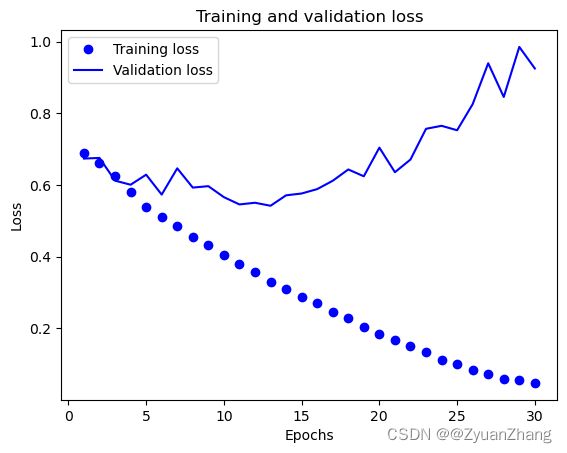

1.6 Plotting the loss and accuracy curves during training:

## Training and validation loss
import matplotlib.pyplot as plt

history_dict = history.history
loss_values = history_dict["loss"]
val_loss_values = history_dict["val_loss"]
epochs = range(1, len(loss_values)+1)

plt.plot(epochs, loss_values, "bo", label="Training loss")  ## "bo" means blue dots
plt.plot(epochs, val_loss_values, "b", label="Validation loss")  ## "b" means a solid blue line
plt.title("Training and validation loss")
plt.xlabel("Epochs")
plt.ylabel("Loss")
plt.legend()
plt.show()

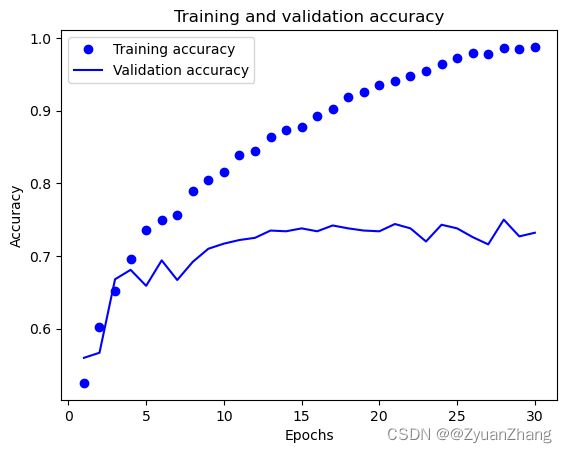

## Training and validation accuracy
acc_values = history_dict["acc"]
val_acc_values = history_dict["val_acc"]

plt.plot(epochs, acc_values, "bo", label="Training accuracy")  ## "bo" means blue dots
plt.plot(epochs, val_acc_values, "b", label="Validation accuracy")  ## "b" means a solid blue line
plt.title("Training and validation accuracy")
plt.xlabel("Epochs")
plt.ylabel("Accuracy")
plt.legend()
plt.show()

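1.7 Using data augmentation to reduce overfitting:

Because there are so few training samples, the model is very likely to overfit. Besides the previously mentioned techniques for mitigating overfitting, such as dropout and L2 regularization, there is another one that is specific to computer vision: data augmentation.

Data augmentation generates more training data from the existing training samples by applying a number of random transformations that yield believable-looking images. Because the images are randomly transformed (flipped, for example), the model never sees the exact same picture twice during training.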
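To make the idea concrete, here is a toy sketch of two such transformations on a NumPy array: a random left-right flip and a random horizontal shift. The function name `random_flip_and_shift` is made up for this example, and the shift wraps pixels around the edge with `np.roll`, which is a simplification and not how Keras fills the uncovered area.

```python
import numpy as np


def random_flip_and_shift(image, rng, max_shift=0.2):
    """Return a randomly flipped and horizontally shifted copy of image.

    image: array of shape (height, width, channels).
    max_shift: maximum shift as a fraction of the width.
    """
    out = image.copy()
    if rng.random() < 0.5:
        out = out[:, ::-1, :]  # mirror left-right
    width = out.shape[1]
    limit = int(max_shift * width)
    shift = int(rng.integers(-limit, limit + 1))
    return np.roll(out, shift, axis=1)  # wrap-around shift (simplification)


rng = np.random.default_rng(42)
image = np.arange(4 * 5 * 3, dtype="float32").reshape(4, 5, 3)
variants = [random_flip_and_shift(image, rng) for _ in range(3)]
print([v.shape for v in variants])
```

Each call returns an image of the same shape containing the same pixel values in a different arrangement, so one stored sample can yield many distinct training inputs.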

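train_datagen = ImageDataGenerator(
    rescale=1./255,
    rotation_range=40,  ## range in degrees (0-180) within which images are randomly rotated
    width_shift_range=0.2,  ## range for random horizontal shifts (as a fraction of total width)
    height_shift_range=0.2,  ## range for random vertical shifts (as a fraction of total height)
    shear_range=0.2,  ## shear angle for random shearing transformations
    zoom_range=0.2,  ## range for random zooming
    horizontal_flip=True  ## randomly flip half of the images horizontally
)

test_datagen = ImageDataGenerator(rescale=1./255)  ## validation data must not be augmented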

## Create the batch generators
train_generator = train_datagen.flow_from_directory(
    directory="./dogs-vs-cats/small_dt/train/",  ## directory containing the training images
    target_size=(150, 150),  ## resize all images to 150x150
    batch_size=20,  ## batch size
    class_mode="binary")  ## binary classification, so use binary labels (0, 1)

validation_generator = test_datagen.flow_from_directory(
    directory="./dogs-vs-cats/small_dt/validation/",  ## directory containing the validation images
    target_size=(150, 150),
    batch_size=20,
    class_mode="binary")

Found 2000 images belonging to 2 classes.

Found 1000 images belonging to 2 classes.

1.8 Defining a convnet that includes dropout:

## Define the model architecture
model = models.Sequential()
model.add(layers.Conv2D(32, (3,3), activation="relu", input_shape=(150, 150, 3)))
model.add(layers.MaxPool2D((2,2)))
model.add(layers.Conv2D(64, (3,3), activation="relu"))
model.add(layers.MaxPool2D((2,2)))
model.add(layers.Conv2D(128, (3,3), activation="relu"))
model.add(layers.MaxPool2D((2,2)))
model.add(layers.Conv2D(128, (3,3), activation="relu"))
model.add(layers.MaxPool2D((2,2)))
model.add(layers.Flatten())
model.add(layers.Dropout(0.5))  ## randomly drop 50% of the flattened features during training
model.add(layers.Dense(512, activation="relu"))
model.add(layers.Dense(1, activation="sigmoid"))

## Configure and compile the model
model.compile(optimizer=optimizers.RMSprop(learning_rate=1e-4),
              loss="binary_crossentropy",
              metrics=["acc"])
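`Dropout(0.5)` placed after `Flatten` randomly zeroes about half of the flattened features on each training step and is inactive at inference time. A plain-Python sketch of the idea, assuming the usual "inverted dropout" scaling of surviving values by 1 / (1 - rate). The function below is illustrative and is not the Keras implementation.

```python
import random


def dropout(values, rate, rng, training=True):
    """Inverted dropout on a list of activations.

    Each value is zeroed with probability `rate`; survivors are scaled by
    1 / (1 - rate) so the expected sum is unchanged. At inference time the
    input is returned unchanged.
    """
    if not training:
        return list(values)
    keep_scale = 1.0 / (1.0 - rate)
    return [0.0 if rng.random() < rate else v * keep_scale for v in values]


rng = random.Random(0)
activations = [1.0] * 10
print(dropout(activations, 0.5, rng))                  # mix of 0.0 and 2.0
print(dropout(activations, 0.5, rng, training=False))  # unchanged
```

Since a different random subset is dropped on every step, the Dense layer cannot rely on any single feature, which is what makes dropout act as a regularizer.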

1.9 Training and saving the model:

history = model.fit_generator(
    train_generator,
    steps_per_epoch=100,
    epochs=50,
    validation_data=validation_generator,
    validation_steps=50
)

model.save("cats_and_dogs_small_2.h5")

Epoch 1/50

/var/folders/0w/m6x2g_g94sqfmg3k8dldpwgm0000gn/T/ipykernel_32812/2595555689.py:1: UserWarning: `Model.fit_generator` is deprecated and will be removed in a future version. Please use `Model.fit`, which supports generators.

history = model.fit_generator(

100/100 [==============================] - 8s 73ms/step - loss: 0.6944 - acc: 0.5055 - val_loss: 0.6940 - val_acc: 0.5000

Epoch 2/50

100/100 [==============================] - 8s 77ms/step - loss: 0.6901 - acc: 0.5235 - val_loss: 0.6918 - val_acc: 0.5000

Epoch 3/50

100/100 [==============================] - 8s 79ms/step - loss: 0.6780 - acc: 0.5765 - val_loss: 0.6721 - val_acc: 0.5440

Epoch 48/50

100/100 [==============================] - 11s 112ms/step - loss: 0.4791 - acc: 0.7715 - val_loss: 0.5140 - val_acc: 0.7340

Epoch 49/50

100/100 [==============================] - 10s 102ms/step - loss: 0.4683 - acc: 0.7725 - val_loss: 0.4514 - val_acc: 0.7720

Epoch 50/50

100/100 [==============================] - 11s 106ms/step - loss: 0.4825 - acc: 0.7790 - val_loss: 0.4511 - val_acc: 0.7810
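One simple way to quantify overfitting is the difference between training and validation accuracy at the end of training. The sketch below applies it to the final-epoch values logged above for both runs. The dictionary layout and the helper name `generalization_gap` are made up for this example.

```python
# Final-epoch metrics copied from the two training logs in this post.
runs = {
    "baseline (30 epochs)": {"acc": 0.9875, "val_acc": 0.7320},
    "augmentation + dropout (50 epochs)": {"acc": 0.7790, "val_acc": 0.7810},
}


def generalization_gap(metrics):
    """Training accuracy minus validation accuracy."""
    return metrics["acc"] - metrics["val_acc"]


for name, metrics in runs.items():
    print("{}: gap = {:.4f}".format(name, generalization_gap(metrics)))
```

The first model ends with a gap of roughly 25 percentage points, while the training and validation accuracy of the regularized model are nearly equal.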

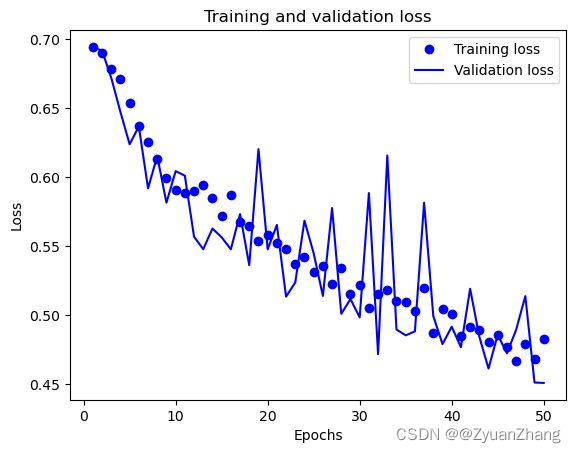

1.10 Visualizing loss and accuracy:

## Training and validation loss
import matplotlib.pyplot as plt

history_dict = history.history
loss_values = history_dict["loss"]
val_loss_values = history_dict["val_loss"]
epochs = range(1, len(loss_values)+1)

plt.plot(epochs, loss_values, "bo", label="Training loss")  ## "bo" means blue dots
plt.plot(epochs, val_loss_values, "b", label="Validation loss")  ## "b" means a solid blue line
plt.title("Training and validation loss")
plt.xlabel("Epochs")
plt.ylabel("Loss")
plt.legend()
plt.show()

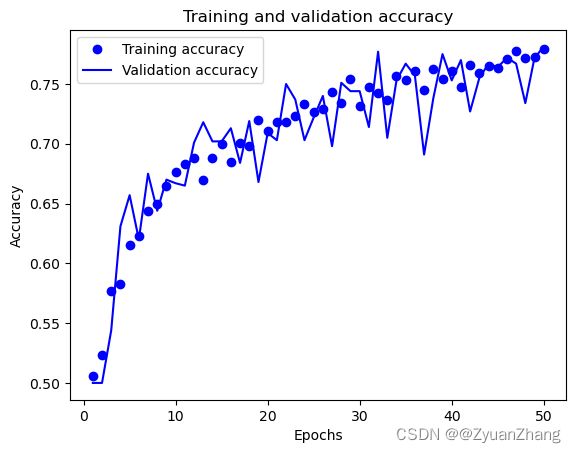

## Training and validation accuracy
import matplotlib.pyplot as plt

history_dict = history.history
acc_values = history_dict["acc"]
val_acc_values = history_dict["val_acc"]
epochs = range(1, len(acc_values)+1)

plt.plot(epochs, acc_values, "bo", label="Training accuracy")  ## "bo" means blue dots
plt.plot(epochs, val_acc_values, "b", label="Validation accuracy")  ## "b" means a solid blue line
plt.title("Training and validation accuracy")
plt.xlabel("Epochs")
plt.ylabel("Accuracy")
plt.legend()
plt.show()