Pulsar

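Apache Pulsar is an all-in-one messaging and streaming platform. Messages can be consumed and acknowledged individually, or consumed as streams with less than 10 ms of latency. Its layered architecture allows rapid scaling across hundreds of nodes without data reshuffling.

Its features include multi-tenancy with resource separation and access control, geo-replication across regions, tiered storage, and support for six official client languages. It supports up to one million unique topics and is designed to simplify your application architecture.

Pulsar is a Top 10 Apache Software Foundation project, with a vibrant and passionate community and a user base spanning small companies and large enterprises.

Official site: https://pulsar.apache.org/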

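Theory

Apache Pulsar is a top-level project of the Apache Software Foundation. It is a next-generation, cloud-native, distributed messaging and streaming platform that combines messaging, storage, and lightweight function computing. Its architecture separates compute from storage. It supports multi-tenancy, persistent storage, and cross-region replication, and it offers the properties expected of a streaming data store: strong consistency, high throughput, low latency, and high scalability.

Pulsar was created at Yahoo in 2012. The goal was to consolidate Yahoo's other messaging systems into one unified platform that could support large clusters and span regions. None of the messaging systems available at the time, Kafka included, met Yahoo's requirements. Those requirements included multi-tenancy on large clusters, stable and reliable I/O quality of service, millions of topics, and cross-region replication. Pulsar was built to meet them.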

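Pulsar's key features are as follows:

● A single Pulsar instance natively supports multiple clusters and seamlessly replicates messages between clusters across data centers.

● Very low publish latency and end-to-end latency

● Seamless scaling to more than one million topics

● Simple client APIs for Java, Go, Python, and C++

● Multiple topic subscription modes (exclusive, shared, and failover)

● Guaranteed message delivery through persistent message storage provided by Apache BookKeeper

● Stream-native data processing with Pulsar Functions, a lightweight serverless computing framework

● Pulsar IO, a serverless connector framework built on Pulsar Functions, which makes it easier to move data into and out of Apache Pulsar

● Tiered storage, which offloads data from hot storage to cold/long-term storage (such as S3 and GCS) as it ages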
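The subscription modes differ in how a single subscription hands messages to the consumers attached to it. Below is a toy in-memory model for illustration; it is not Pulsar's dispatcher. Exclusive admits only one consumer. Failover sends everything to one active consumer while the others stand by. Shared spreads messages round-robin. Two things are assumptions: picking the failover consumer by name, and the function name `dispatch`.

```python
from itertools import cycle


def dispatch(sub_type, consumers, messages):
    """Toy model: map each consumer name to the messages it would receive.

    Illustration only; this is not how Pulsar's broker is implemented.
    """
    if not consumers:
        raise ValueError("a subscription needs at least one consumer")
    received = {name: [] for name in consumers}

    if sub_type == "exclusive":
        # Only one consumer may attach to an exclusive subscription.
        if len(consumers) > 1:
            raise RuntimeError("exclusive subscription already has a consumer")
        received[consumers[0]].extend(messages)
    elif sub_type == "failover":
        # One active consumer receives everything; the others are standbys.
        # Simplifying assumption: the active consumer is the first by name.
        active = sorted(consumers)[0]
        received[active].extend(messages)
    elif sub_type == "shared":
        # Messages are handed out round-robin across all attached consumers.
        for name, msg in zip(cycle(consumers), messages):
            received[name].append(msg)
    else:
        raise ValueError(f"unknown subscription type: {sub_type}")
    return received


if __name__ == "__main__":
    msgs = [f"m{i}" for i in range(5)]
    print(dispatch("shared", ["c1", "c2"], msgs))    # c1 gets m0, m2, m4
    print(dispatch("failover", ["c2", "c1"], msgs))  # c1 gets everything
```

With the real Python client, you choose the mode through the `consumer_type` argument of `client.subscribe`, for example `pulsar.ConsumerType.Shared`.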

Concepts

From the official documentation

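Producer: the source and publisher of messages. It sends messages to a topic.

Consumer: the receiver of messages. It subscribes to a topic and consumes its messages.

Topic: the carrier of message data. In Pulsar, a topic can be split into multiple partitions. If no partitioning is configured, the topic has a single partition by default.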

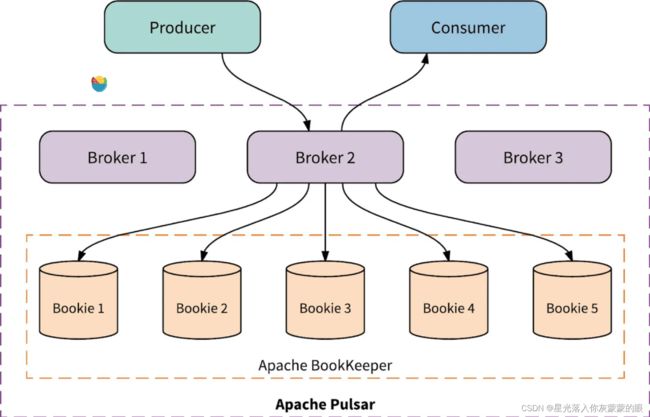

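Broker: a stateless component. It mainly receives messages sent by producers and delivers them to consumers.

BookKeeper: a distributed write-ahead log system. It provides storage for messaging systems such as Pulsar and replicates data across machines, including across multiple data centers.

Bookie: an Apache BookKeeper server. Bookies provide persistence for messages.

Cluster: a set of Pulsar brokers and BookKeeper bookies. Clusters in different regions can replicate messages to one another, and one or more clusters together form a Pulsar instance.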
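For a partitioned topic, the producer decides which partition each message goes to. The sketch below models a common scheme under stated assumptions. A message with a key is routed by hash(key) mod the number of partitions, so the same key always lands on the same partition. Keyless messages go round-robin. The hash used here (`zlib.crc32`) is a stand-in, because Pulsar clients use their own configurable hashing schemes. Real partition numbers will therefore differ. The class name is hypothetical.

```python
import zlib
from itertools import count


class ToyPartitionRouter:
    """Simplified key-hash / round-robin router (hypothetical, for illustration)."""

    def __init__(self, topic, num_partitions):
        if num_partitions < 1:
            raise ValueError("num_partitions must be >= 1")
        self.topic = topic
        self.num_partitions = num_partitions
        self._rr = count()  # round-robin counter for keyless messages

    def choose(self, key=None):
        if key is not None:
            # Same key -> same hash -> same partition, preserving per-key order.
            return zlib.crc32(key.encode("utf-8")) % self.num_partitions
        return next(self._rr) % self.num_partitions

    def partition_topic(self, index):
        # Each partition is an internal topic named <topic>-partition-<index>.
        return f"{self.topic}-partition-{index}"


if __name__ == "__main__":
    router = ToyPartitionRouter("persistent://public/default/my-topic", 3)
    p = router.choose("user-42")
    print(p, router.partition_topic(p))
    print([router.choose() for _ in range(4)])  # round-robin: [0, 1, 2, 0]
```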

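Cloud-native architecture

Apache Pulsar uses an architecture that separates compute from storage. Because storage is not coupled to the compute logic, data can be scaled independently and recovered quickly. As cloud-native computing has spread, this compute-storage separation has appeared in more and more systems. In Pulsar, the broker layer is a stateless compute layer that receives and dispatches messages. The storage layer consists of bookie nodes that store and serve messages.

This separation lets Pulsar scale out each layer independently. If the system has many producers and consumers, you can add brokers to the compute layer directly, without being constrained by data consistency. Without this separation, compute and storage would change together during scaling, which easily runs into data-consistency constraints. In addition, compute logic is complex and error-prone, while storage logic is relatively simple and less likely to fail. With this architecture, a failure in the compute layer can be recovered on its own without affecting the storage layer.

Pulsar also supports tiered storage. Older messages can be moved to cheaper storage while the latest messages stay on SSDs, which saves cost and makes the best use of resources.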
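Tiered storage works on ledgers, the segments that make up a topic's log. A closed ledger can be copied to cheaper storage, and its local copy can then be removed. The toy policy below is in the spirit of Pulsar's size-based offload threshold, but it is a simplified assumption, not Pulsar's offloader. It moves the oldest closed ledgers off the local tier until the local size is at or below a threshold. The open ledger, which is still being written, always stays local. The names `Ledger` and `offload_oldest` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Ledger:
    ledger_id: int
    size_bytes: int
    closed: bool          # only closed (sealed) ledgers can be offloaded
    tier: str = "local"


def offload_oldest(ledgers, threshold_bytes):
    """Toy policy: move the oldest closed ledgers to 'cold' storage until
    the bytes kept locally are <= threshold_bytes.

    `ledgers` must be ordered oldest first. Returns the offloaded ledger ids.
    """
    local = sum(lg.size_bytes for lg in ledgers if lg.tier == "local")
    offloaded = []
    for lg in ledgers:
        if local <= threshold_bytes:
            break
        if lg.tier == "local" and lg.closed:
            lg.tier = "cold"  # e.g. S3 or GCS in a real deployment
            local -= lg.size_bytes
            offloaded.append(lg.ledger_id)
    return offloaded


if __name__ == "__main__":
    topic = [Ledger(1, 400, True), Ledger(2, 400, True), Ledger(3, 400, True),
             Ledger(4, 100, False)]  # ledger 4 is still being written
    print(offload_oldest(topic, threshold_bytes=600))  # -> [1, 2]
```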

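A Pulsar instance is made up of one or more Pulsar clusters. Each cluster includes one or more brokers, a set of bookies for persistent storage, and a ZooKeeper-based metadata store used for coordination.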

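Design principles

Pulsar uses the publish-subscribe (pub-sub) pattern. Producers publish messages to a topic. Consumers subscribe to the topic, process its messages, and send an ack (acknowledgement) once processing is complete.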
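The following is a minimal in-memory sketch of the pub-sub flow. It makes several simplifying assumptions: one topic, no persistence, and no redelivery. All names are hypothetical. The topic keeps an append-only log, and each subscription has its own cursor, so every subscription sees every message. Acknowledgements are tracked per subscription. A message acknowledged by every subscription can be deleted, which roughly mirrors how Pulsar decides what storage to reclaim when no retention policy applies.

```python
class ToyTopic:
    """In-memory append-only log with independent subscriptions (illustration only)."""

    def __init__(self):
        self.log = []      # message i lives at position i
        self.cursors = {}  # subscription name -> next position to read
        self.acked = {}    # subscription name -> set of acknowledged positions

    def publish(self, payload):
        self.log.append(payload)
        return len(self.log) - 1  # the position doubles as a message id

    def subscribe(self, name):
        # Assumption: new subscriptions start at the beginning of the log.
        # Real Pulsar subscriptions start at the latest message by default.
        self.cursors.setdefault(name, 0)
        self.acked.setdefault(name, set())

    def receive(self, name):
        pos = self.cursors[name]
        if pos >= len(self.log):
            return None  # nothing new for this subscription
        self.cursors[name] = pos + 1
        return pos, self.log[pos]

    def acknowledge(self, name, pos):
        self.acked[name].add(pos)

    def deletable(self):
        # A message can be removed once every subscription has acknowledged it.
        if not self.acked:
            return set()
        return set.intersection(*self.acked.values())


if __name__ == "__main__":
    t = ToyTopic()
    t.subscribe("billing")
    t.subscribe("audit")
    t.publish("a")
    pos, payload = t.receive("billing")
    t.acknowledge("billing", pos)
    print(t.deletable())  # set(): "audit" has not acknowledged anything yet
```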

Features

Deployment

Docker

Start Pulsar in Docker

```shell
docker run -it -p 6650:6650 -p 8080:8080 --mount source=pulsardata,target=/pulsar/data --mount source=pulsarconf,target=/pulsar/conf apachepulsar/pulsar:3.0.0 bin/pulsar standalone
```

To change the Pulsar configuration and then start Pulsar, run the following command and pass environment variables with the PULSAR_PREFIX_ prefix. See the default configuration file for more details.

```shell
docker run -it -e PULSAR_PREFIX_xxx=yyy -p 6650:6650 -p 8080:8080 --mount source=pulsardata,target=/pulsar/data --mount source=pulsarconf,target=/pulsar/conf apachepulsar/pulsar:3.0.0 sh -c "bin/apply-config-from-env.py conf/standalone.conf && bin/pulsar standalone"
```
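Conceptually, `apply-config-from-env.py` rewrites a `.conf` file from environment variables. The function below is a simplified approximation written for illustration, not the actual script, and its rules are assumptions. A variable whose name matches a key already in the file overrides that key. A variable with the `PULSAR_PREFIX_` prefix overrides the key if it exists and also appends it when the file does not contain it.

```python
PREFIX = "PULSAR_PREFIX_"


def apply_env(conf_text, env):
    """Approximate env-to-config rewriting (hypothetical helper, not the real script)."""
    lines = conf_text.splitlines()
    index = {}  # key -> line number of its "key=value" line
    for i, line in enumerate(lines):
        stripped = line.strip()
        if stripped and not stripped.startswith("#") and "=" in stripped:
            index[stripped.split("=", 1)[0]] = i

    for name in sorted(env):
        value = env[name].strip()
        prefixed = name.startswith(PREFIX)
        key = name[len(PREFIX):] if prefixed else name
        if key in index:
            # Existing key: override it in place.
            lines[index[key]] = f"{key}={value}"
        elif prefixed:
            # Missing key: only prefixed variables may add it.
            lines.append(f"{key}={value}")
            index[key] = len(lines) - 1
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    conf = "clusterName=standalone\n# comment\nbrokerServicePort=6650\n"
    env = {"clusterName": "cluster-a", "PULSAR_PREFIX_foo": "bar", "HOME": "/root"}
    print(apply_env(conf, env), end="")
```

This also explains why the docker-compose files later on can set keys such as `clusterName` without the prefix: those keys already exist in the stock config files.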

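Notes:

● By default, the Docker container runs as UID 10000 and GID 0. Make sure the mounted volumes give write permission to UID 10000 or GID 0. UID 10000 is arbitrary, so it is recommended to make these mounts writable by the root group (GID 0).

● Data, metadata, and configuration are persisted on Docker volumes, so the container does not start "fresh" every time it restarts. To see the details of a volume, use `docker volume inspect`, for example `docker volume inspect pulsardata`.

● For Docker on Windows, make sure Docker is configured to use Linux containers.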
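The UID/GID advice in the notes comes down to ordinary POSIX permission bits. The helper below is hypothetical and not part of Pulsar or Docker. It checks whether a host directory would be writable for the container's UID 10000 / GID 0. It looks only at the classic mode bits and ignores ACLs, SELinux labels, and supplementary groups. Creating files inside a directory needs both the write and execute bits, so it checks both.

```python
import os
import stat


def container_can_write(mode, owner_uid, owner_gid, uid=10000, gid=0):
    """Return True if a process running as uid/gid could create files in a
    directory with the given mode and ownership (owner -> group -> other)."""
    if owner_uid == uid:
        bits = stat.S_IWUSR | stat.S_IXUSR
    elif owner_gid == gid:
        bits = stat.S_IWGRP | stat.S_IXGRP
    else:
        bits = stat.S_IWOTH | stat.S_IXOTH
    return (mode & bits) == bits


def check_host_dir(path):
    # Inspect a real directory on the Docker host before mounting it.
    st = os.stat(path)
    return container_can_write(st.st_mode, st.st_uid, st.st_gid)
```

For example, run `check_host_dir("./data")` before starting the container. If the check fails, something like `sudo chgrp -R 0 ./data && sudo chmod -R g+rwX ./data` makes the directory writable by the root group.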

After Pulsar starts successfully, you can see INFO-level log messages like the following:

```text
08:18:30.970 [main] INFO org.apache.pulsar.broker.web.WebService - HTTP Service started at http://0.0.0.0:8080
...
07:53:37.322 [main] INFO org.apache.pulsar.broker.PulsarService - messaging service is ready, bootstrap service port = 8080, broker url= pulsar://localhost:6650, cluster=standalone, configs=org.apache.pulsar.broker.ServiceConfiguration@98b63c1
...
```

To perform a health check, run `bin/pulsar-admin brokers healthcheck`. (pulsar-admin is the tool for managing Pulsar entities.)
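Scripts that start Pulsar in Docker often need to wait until the broker is ready. The sketch below polls over HTTP instead of calling `pulsar-admin`. It assumes the admin REST path `/admin/v2/brokers/health`, which is the endpoint behind `pulsar-admin brokers healthcheck`. It does no authentication or TLS handling, and both function names are hypothetical.

```python
import time
import urllib.error
import urllib.request


def broker_healthy(admin_url="http://localhost:8080", timeout_s=2.0):
    """Return True if the (assumed) broker health endpoint answers HTTP 200."""
    url = admin_url.rstrip("/") + "/admin/v2/brokers/health"
    try:
        with urllib.request.urlopen(url, timeout=timeout_s) as resp:
            return resp.status == 200
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, or an HTTP error status.
        return False


def wait_until_healthy(admin_url="http://localhost:8080", timeout_s=60.0, interval_s=2.0):
    """Poll broker_healthy until it succeeds or timeout_s elapses."""
    deadline = time.monotonic() + timeout_s
    while time.monotonic() < deadline:
        if broker_healthy(admin_url):
            return True
        time.sleep(interval_s)
    return False
```

After `docker run ... bin/pulsar standalone`, a call to `wait_until_healthy()` blocks until the standalone broker on port 8080 responds, or returns False after a minute.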

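When you start a local standalone cluster, the public/default namespace is created automatically. This namespace is intended for development. All Pulsar topics are managed within namespaces.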
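Because of the public/default namespace, a short topic name such as `my-topic` refers to `persistent://public/default/my-topic`. The same tenant/namespace/topic path appears in the admin REST URL used with curl in "Get the topic statistics". The helpers below are hypothetical and show that expansion. They accept only a bare name or a `tenant/namespace/topic` form.

```python
def full_topic_name(name, tenant="public", namespace="default"):
    """Expand a short topic name, e.g. 'my-topic' ->
    'persistent://public/default/my-topic'. Names with a scheme pass through."""
    if "://" in name:
        return name
    parts = name.split("/")
    if len(parts) == 1:
        return f"persistent://{tenant}/{namespace}/{name}"
    if len(parts) == 3:  # tenant/namespace/topic
        return f"persistent://{name}"
    raise ValueError(f"unsupported topic name: {name!r}")


def stats_url(name, admin_url="http://localhost:8080"):
    """Build the admin REST URL for a topic's stats."""
    scheme, rest = full_topic_name(name).split("://", 1)
    return f"{admin_url}/admin/v2/{scheme}/{rest}/stats"


if __name__ == "__main__":
    print(full_topic_name("my-topic"))
    print(stats_url("my-topic"))
```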

Use Pulsar in Docker

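If you are running a local standalone cluster, you can interact with it through one of these root URLs:

pulsar://localhost:6650 (the Pulsar binary protocol, used by client libraries)

http://localhost:8080 (HTTP, used by the admin REST API)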

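The following example walks you through getting started with Pulsar using the Python client API.

Install the Pulsar Python client library directly from PyPI:

```shell
pip install pulsar-client
```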

Consume messages

Create a consumer and subscribe to the topic:

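```python
import pulsar

client = pulsar.Client('pulsar://localhost:6650')
consumer = client.subscribe('my-topic', subscription_name='my-sub')

try:
    while True:
        msg = consumer.receive()  # blocks until a message arrives
        try:
            print("Received message: '%s'" % msg.data().decode('utf-8'))
            consumer.acknowledge(msg)  # processing succeeded
        except Exception:
            consumer.negative_acknowledge(msg)  # ask for redelivery later
except KeyboardInterrupt:
    pass  # stop the loop with Ctrl+C
finally:
    client.close()  # now reachable: runs when the loop is interrupted
```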

Produce messages

Start a producer to send some test messages:

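```python
import pulsar

client = pulsar.Client('pulsar://localhost:6650')
producer = client.create_producer('my-topic')

# Send ten UTF-8 encoded test messages; send() is synchronous
for i in range(10):
    producer.send(('hello-pulsar-%d' % i).encode('utf-8'))

client.close()  # also closes the producer
```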

Get the topic statistics

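In Pulsar, you can use the REST API, Java, or command-line tools to control every aspect of the system. For details about the APIs, see Admin API Overview.

In the simplest case, you can use curl to probe the stats of a particular topic:

```shell
curl http://localhost:8080/admin/v2/persistent/public/default/my-topic/stats | python -m json.tool
```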

The output looks like this:

```json
{
  ...
  "consumers": [
    {
      "msgRateOut": 1.8332950480217471,
      "msgThroughputOut": 91.33142602871978,
      "bytesOutCounter": 6607,
      "msgOutCounter": 133,
      "msgRateRedeliver": 0.0,
      "chunkedMessageRate": 0.0,
      "consumerName": "3c544f1daa",
      "availablePermits": 867,
      "unackedMessages": 0,
      "avgMessagesPerEntry": 6,
      "blockedConsumerOnUnackedMsgs": false,
      "lastAckedTimestamp": 1625389546162,
      "lastConsumedTimestamp": 1625389546070,
      "metadata": {},
      "address": "/127.0.0.1:35472",
      "connectedSince": "2021-07-04T08:58:21.287682Z",
      "clientVersion": "2.8.0"
    }
  ],
  ...
}
```
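To use these stats in a script rather than by eye, the sketch below flattens the per-consumer fields. It assumes that the elided parts of the sample follow the usual layout, in which the `consumers` list sits under `subscriptions` and then the subscription name. The field names come from the sample output. The `max_unacked` threshold in `lagging` is an arbitrary, hypothetical cutoff.

```python
import json


def summarize_consumers(stats):
    """Flatten per-consumer figures from a topic-stats dict.

    Assumes the layout subscriptions -> <subscription name> -> consumers.
    """
    rows = []
    for sub_name, sub in stats.get("subscriptions", {}).items():
        for c in sub.get("consumers", []):
            rows.append({
                "subscription": sub_name,
                "consumer": c.get("consumerName"),
                "msg_rate_out": c.get("msgRateOut", 0.0),
                "unacked": c.get("unackedMessages", 0),
                "blocked": c.get("blockedConsumerOnUnackedMsgs", False),
            })
    return rows


def summarize_json(text):
    # Convenience wrapper for the raw output of the curl command above.
    return summarize_consumers(json.loads(text))


def lagging(rows, max_unacked=1000):
    # Consumers that are blocked or hold many unacknowledged messages.
    return [r["consumer"] for r in rows if r["blocked"] or r["unacked"] > max_unacked]
```

For example, save the curl output to `stats.json` and call `summarize_json(open("stats.json").read())`.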

Docker-compose

Standalone

https://jpinjpblog.wordpress.com/2020/12/10/pulsar-with-manager-and-dashboard-on-docker-compose/

```yaml
version: "3.5"

services:
  pulsar:
    image: "apachepulsar/pulsar:2.6.2"
    command: bin/pulsar standalone
    environment:
      PULSAR_MEM: " -Xms512m -Xmx512m -XX:MaxDirectMemorySize=1g"
    volumes:
      - ./pulsar/data:/pulsar/data
    ports:
      - "6650:6650"
      - "8080:8080"
    restart: unless-stopped
    networks:
      - network_test_bed

  pulsar-manager:
    image: "apachepulsar/pulsar-manager:v0.2.0"
    ports:
      - "9527:9527"
      - "7750:7750"
    depends_on:
      - pulsar
    environment:
      SPRING_CONFIGURATION_FILE: /pulsar-manager/pulsar-manager/application.properties
    networks:
      - network_test_bed

  redis:
    image: "redislabs/redistimeseries:1.4.7"
    ports:
      - "6379:6379"
    volumes:
      - ./redis/redis-data:/var/lib/redis
    environment:
      - REDIS_REPLICATION_MODE=master
      - PYTHONUNBUFFERED=1
    networks:
      - network_test_bed

  alertmanager:
    image: prom/alertmanager:v0.21.0
    ports:
      - "9093:9093"
    volumes:
      - ./alertmanager/:/etc/alertmanager/
    networks:
      - network_test_bed
    restart: always
    command:
      - '--config.file=/etc/alertmanager/config.yml'
      - '--storage.path=/alertmanager'

  prometheus:
    image: prom/prometheus:v2.23.0
    volumes:
      - ./prometheus/standalone.prometheus.yml:/etc/prometheus/prometheus.yml
    ports:
      - "9090:9090"
    networks:
      - network_test_bed

  grafana:
    image: streamnative/apache-pulsar-grafana-dashboard:0.0.14
    environment:
      PULSAR_CLUSTER: "standalone"
      # Host-specific address from the referenced blog post; point it at your own
      # Prometheus, e.g. http://prometheus:9090 on the shared network
      PULSAR_PROMETHEUS_URL: "http://163.221.68.230:9090"
    restart: unless-stopped
    ports:
      - "3000:3000"
    networks:
      - network_test_bed
    depends_on:
      - prometheus

networks:
  network_test_bed:
    name: network_test_bed
    driver: bridge
```

Cluster

Official example

Method 1

```shell
# Install docker-compose
curl -SL https://github.com/docker/compose/releases/download/v2.19.1/docker-compose-linux-x86_64 -o /usr/bin/docker-compose && chmod +x /usr/bin/docker-compose
```

```yaml
# Deploy (docker-compose.yml)
version: '3'
services:
  # Start zookeeper
  zookeeper:
    image: apachepulsar/pulsar:latest
    container_name: zookeeper
    restart: on-failure # restart after a failure
    # user: root # required when the image is apachepulsar/pulsar:3.0.0
    networks:
      - pulsar
    volumes:
      - ./data/zookeeper:/pulsar/data/zookeeper
    environment:
      - metadataStoreUrl=zk:zookeeper:2181
      - PULSAR_MEM=-Xms256m -Xmx256m -XX:MaxDirectMemorySize=256m
    command: >
      bash -c "bin/apply-config-from-env.py conf/zookeeper.conf && \
             bin/generate-zookeeper-config.sh conf/zookeeper.conf && \
             exec bin/pulsar zookeeper"
    healthcheck:
      test: ["CMD", "bin/pulsar-zookeeper-ruok.sh"]
      interval: 10s
      timeout: 5s
      retries: 30

  # Init cluster metadata
  pulsar-init:
    container_name: pulsar-init
    hostname: pulsar-init
    image: apachepulsar/pulsar:latest
    restart: on-failure # restart after a failure
    # user: root # required when the image is apachepulsar/pulsar:3.0.0
    networks:
      - pulsar
    command: >
      bin/pulsar initialize-cluster-metadata \
               --cluster cluster-a \
               --zookeeper zookeeper:2181 \
               --configuration-store zookeeper:2181 \
               --web-service-url http://broker:8080 \
               --broker-service-url pulsar://broker:6650
    depends_on:
      zookeeper:
        condition: service_healthy

  # Start bookie
  bookie:
    image: apachepulsar/pulsar:latest
    container_name: bookie
    restart: on-failure
    # user: root # required when the image is apachepulsar/pulsar:3.0.0
    networks:
      - pulsar
    environment:
      - clusterName=cluster-a
      - zkServers=zookeeper:2181
      - metadataServiceUri=metadata-store:zk:zookeeper:2181
      # Without this, the bookie fails to start on every later docker run because of its Cookie
      # See: https://github.com/apache/bookkeeper/blob/405e72acf42bb1104296447ea8840d805094c787/bookkeeper-server/src/main/java/org/apache/bookkeeper/bookie/Cookie.java#L57-68
      - advertisedAddress=bookie
      - BOOKIE_MEM=-Xms512m -Xmx512m -XX:MaxDirectMemorySize=256m
    depends_on:
      zookeeper:
        condition: service_healthy
      pulsar-init:
        condition: service_completed_successfully
    # Map a local directory into the container so the bookie does not fail
    # to start because the container runs out of disk space
    volumes:
      - ./data/bookkeeper:/pulsar/data/bookkeeper
    command: bash -c "bin/apply-config-from-env.py conf/bookkeeper.conf && exec bin/pulsar bookie"

  # Start broker
  broker:
    image: apachepulsar/pulsar:latest
    container_name: broker
    hostname: broker
    restart: on-failure
    # user: root # required when the image is apachepulsar/pulsar:3.0.0
    networks:
      - pulsar
    environment:
      - metadataStoreUrl=zk:zookeeper:2181
      - zookeeperServers=zookeeper:2181
      - clusterName=cluster-a
      - managedLedgerDefaultEnsembleSize=1
      - managedLedgerDefaultWriteQuorum=1
      - managedLedgerDefaultAckQuorum=1
      - advertisedAddress=broker
      # Publish the broker's listeners to ZooKeeper for clients (producers/consumers).
      # external1 is for clients on the Docker host and uses the published port 6650
      # (a TCP port must be <= 65535)
      - advertisedListeners=external:pulsar://broker:6650,external1:pulsar://127.0.0.1:6650
      - PULSAR_MEM=-Xms512m -Xmx512m -XX:MaxDirectMemorySize=256m
    depends_on:
      zookeeper:
        condition: service_healthy
      bookie:
        condition: service_started
    expose:
      - 8080
      - 6650
    ports:
      - "6650:6650"
      - "8080:8080"
    volumes:
      - ./data/broker/data:/pulsar/data/
      # A bind mount hides the image's own /pulsar/conf: copy the default conf
      # files into ./data/broker/conf first, or conf/broker.conf will be missing
      - ./data/broker/conf:/pulsar/conf
      - ./data/broker/logs:/pulsar/logs
      - ./data/ssl/:/pulsar/ssl
    command: bash -c "bin/apply-config-from-env.py conf/broker.conf && exec bin/pulsar broker"

  pulsar-manager:
    image: apachepulsar/pulsar-manager:v0.3.0 # v0.4.0 is also available
    container_name: pulsar-manager
    hostname: pulsar-manager
    restart: always
    networks:
      - pulsar
    ports:
      - "9527:9527" # frontend port
      - "7750:7750" # backend port
    depends_on:
      - broker
    links:
      - broker
    environment:
      SPRING_CONFIGURATION_FILE: /pulsar-manager/pulsar-manager/application.properties
    volumes:
      - ./pulsar-manager/dbdata:/pulsar-manager/pulsar-manager/dbdata
      - ./pulsar-manager/application.properties:/pulsar-manager/pulsar-manager/application.properties
      - ./data/ssl:/pulsar-manager/ssl

networks:
  pulsar:
    driver: bridge
```
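Three broker settings above map to BookKeeper's ledger parameters: `managedLedgerDefaultEnsembleSize` is the ensemble size (E), `managedLedgerDefaultWriteQuorum` the write quorum (Qw), and `managedLedgerDefaultAckQuorum` the ack quorum (Qa). A ledger is spread over E bookies. Each entry is written to Qw of them, and a write counts as done once Qa of them confirm it. The sketch below is a simplified model of BookKeeper's round-robin entry placement, not its actual code, and it shows which bookies hold each entry. With all three set to 1, as in this single-bookie setup, every entry lives on exactly one bookie, so there is no redundancy.

```python
def validate_quorum(ensemble, write_quorum, ack_quorum):
    # BookKeeper requires ensemble >= write quorum >= ack quorum >= 1.
    if not (ensemble >= write_quorum >= ack_quorum >= 1):
        raise ValueError("need ensemble >= write_quorum >= ack_quorum >= 1")


def write_set(entry_id, ensemble, write_quorum):
    """Indexes (0..ensemble-1) of the bookies storing an entry under
    round-robin striping: entry e goes to bookies e, e+1, ... modulo E."""
    return [(entry_id + i) % ensemble for i in range(write_quorum)]


if __name__ == "__main__":
    validate_quorum(3, 2, 2)
    for entry in range(4):
        print(entry, write_set(entry, ensemble=3, write_quorum=2))
    # 0 [0, 1] / 1 [1, 2] / 2 [2, 0] / 3 [0, 1]
```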

Method 2

```yaml
version: '2.1'

services:
  zoo1:
    image: apachepulsar/pulsar:2.4.1
    hostname: zoo1
    ports:
      - "2181:2181"
    environment:
      ZK_ID: 1
      PULSAR_ZK_CONF: /conf/zookeeper.conf
    volumes:
      - ./zoo1/data:/pulsar/data/zookeeper/
      - ./zoo1/log/:/pulsar/logs
      - ./conf:/conf
      - ./scripts:/scripts
    command: /bin/bash "/scripts/start_zk.sh"

  zoo2:
    image: apachepulsar/pulsar:2.4.1
    hostname: zoo2
    ports:
      - "2182:2181"
    environment:
      ZK_ID: 2
      PULSAR_ZK_CONF: /conf/zookeeper.conf
    volumes:
      - ./zoo2/data:/pulsar/data/zookeeper/
      - ./zoo2/log/:/pulsar/logs
      - ./conf:/conf
      - ./scripts:/scripts
    command: /bin/bash "/scripts/start_zk.sh"

  zoo3:
    image: apachepulsar/pulsar:2.4.1
    hostname: zoo3
    ports:
      - "2183:2181"
    environment:
      ZK_ID: 3
      PULSAR_ZK_CONF: /conf/zookeeper.conf
    volumes:
      - ./zoo3/data:/pulsar/data/zookeeper/
      - ./zoo3/log/:/pulsar/logs
      - ./conf:/conf
      - ./scripts:/scripts
    command: /bin/bash "/scripts/start_zk.sh"

  bookie1:
    image: apachepulsar/pulsar:2.4.1
    hostname: bookie1
    ports:
      - "3181:3181"
    environment:
      BOOKIE_CONF: /conf/bookkeeper.conf
    volumes:
      - ./bookie1/data:/pulsar/data/bookkeeper/
      - ./bookie1/log/:/pulsar/logs
      - ./conf:/conf
      - ./scripts:/scripts
    command: /bin/bash "/scripts/start_mainbk.sh"
    depends_on:
      - zoo1
      - zoo2
      - zoo3

  bookie2:
    image: apachepulsar/pulsar:2.4.1
    hostname: bookie2
    ports:
      - "3182:3181"
    environment:
      BOOKIE_CONF: /conf/bookkeeper.conf
    volumes:
      - ./bookie2/data:/pulsar/data/bookkeeper/
      - ./bookie2/log/:/pulsar/logs
      - ./conf:/conf
      - ./scripts:/scripts
    command: /bin/bash "/scripts/start_otherbk.sh"
    depends_on:
      - bookie1

  bookie3:
    image: apachepulsar/pulsar:2.4.1
    hostname: bookie3
    ports:
      - "3183:3181"
    environment:
      BOOKIE_CONF: /conf/bookkeeper.conf
    volumes:
      - ./bookie3/data:/pulsar/data/bookkeeper/
      - ./bookie3/log/:/pulsar/logs
      - ./conf:/conf
      - ./scripts:/scripts
    command: /bin/bash "/scripts/start_otherbk.sh"
    depends_on:
      - bookie1

  broker1:
    image: apachepulsar/pulsar:2.4.1
    hostname: broker1
    environment:
      PULSAR_BROKER_CONF: /conf/broker.conf
    ports:
      - "6660:6650"
      - "8090:8080"
    volumes:
      - ./broker1/data:/pulsar/data/broker/
      - ./broker1/log/:/pulsar/logs
      - ./conf:/conf
      - ./scripts:/scripts
    command: /bin/bash "/scripts/start_broker.sh"
    depends_on:
      - bookie1
      - bookie2
      - bookie3

  broker2:
    image: apachepulsar/pulsar:2.4.1
    hostname: broker2
    environment:
      PULSAR_BROKER_CONF: /conf/broker.conf
    ports:
      - "6661:6650"
      - "8091:8080"
    volumes:
      - ./broker2/data:/pulsar/data/broker/
      - ./broker2/log/:/pulsar/logs
      - ./conf:/conf
      - ./scripts:/scripts
    command: /bin/bash "/scripts/start_broker.sh"
    depends_on:
      - bookie1
      - bookie2
      - bookie3

  pulsar-proxy:
    image: apachepulsar/pulsar:2.4.1
    hostname: pulsar-proxy
    ports:
      - "6650:6650"
      - "8080:8080"
    environment:
      PULSAR_PROXY_CONF: "/conf/proxy.conf"
    volumes:
      - ./proxy/log/:/pulsar/logs
      - ./conf:/conf
      - ./scripts:/scripts
    command: /bin/bash "/scripts/start_proxy.sh"
    depends_on:
      - broker1
      - broker2
```
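Method 2 runs a three-node ZooKeeper ensemble (zoo1, zoo2, zoo3). ZooKeeper keeps working only while a strict majority of its nodes is up. The small helper below is hypothetical and for illustration only. It computes that majority and the number of node failures it tolerates, which shows why ensembles usually have an odd number of nodes: a fourth node does not raise the tolerance above that of three.

```python
def zk_quorum(ensemble_size):
    """Return (votes_needed, failures_tolerated) for a majority quorum."""
    if ensemble_size < 1:
        raise ValueError("ensemble_size must be >= 1")
    votes_needed = ensemble_size // 2 + 1  # strict majority
    return votes_needed, ensemble_size - votes_needed


if __name__ == "__main__":
    for n in (1, 3, 4, 5):
        print(n, zk_quorum(n))  # 3 nodes -> (2, 1); 4 nodes -> (3, 1)
```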
