Deploying a k8s Cluster with Kubeadm: Part 2

This post continues from Part 1.

Contents

7. Installing flannel

8. Node management commands

III. Installing the Dashboard UI

1. Deploying the Dashboard

2. Exposing the port

3. Configuring permissions

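7. Installing flannel

The master node is NotReady because no network plugin has been installed yet, so the connection between the nodes and the master is not working properly. Popular Kubernetes network plugins include Flannel, Calico, Canal, and Weave; this guide uses flannel.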

On all hosts:

Upload kube-flannel.yml to the master, and upload flannel_v0.12.0-amd64.tar to every host.

[root@k8s-master ~]# ll

total 52496

-rw-------. 1 root root 1257 Jul 18 16:47 anaconda-ks.cfg

-rw-r--r--. 1 root root 53746688 Dec 16 2020 flannel_v0.12.0-amd64.tar

-rw-r--r--. 1 root root 895 Aug 10 14:18 init-config.yaml

[root@k8s-master ~]# docker load < flannel_v0.12.0-amd64.tar

256a7af3acb1: Loading layer [==================================================>] 5.844MB/5.844MB

d572e5d9d39b: Loading layer [==================================================>] 10.37MB/10.37MB

57c10be5852f: Loading layer [==================================================>] 2.249MB/2.249MB

7412f8eefb77: Loading layer [==================================================>] 35.26MB/35.26MB

05116c9ff7bf: Loading layer [==================================================>] 5.12kB/5.12kB

Loaded image: quay.io/coreos/flannel:v0.12.0-amd64

Install the CNI plugins on all three hosts. The files used here can all be downloaded from the official sites.
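As a rough sketch of this step, the extract-and-copy sequence can be wrapped in a small function that also creates the target directory if it is missing. The function name `install_cni_plugin` and its arguments are illustrative and not part of any tool; the default destination `/opt/cni/bin` is the path used in this guide.

```shell
#!/usr/bin/env bash
# Hypothetical helper: unpack a CNI plugins tarball into a scratch directory
# and copy a single plugin binary into DEST (default: /opt/cni/bin).
install_cni_plugin() {
  local tarball="$1" plugin="$2" dest="${3:-/opt/cni/bin}"
  local tmp rc

  # Create the destination first; a plain `cp` fails if it does not exist.
  mkdir -p "$dest" || return 1

  tmp="$(mktemp -d)" || return 1
  if ! tar -xf "$tarball" -C "$tmp"; then
    rm -rf "$tmp"
    return 1
  fi

  # Copy the binary and make sure it is executable.
  install -m 0755 "$tmp/$plugin" "$dest/$plugin"
  rc=$?

  rm -rf "$tmp"
  return "$rc"
}
```

On each host this could be called as `install_cni_plugin cni-plugins-linux-amd64-v0.8.6.tgz flannel`, which is equivalent in effect to the manual `tar` and `cp` commands shown in this section.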

[root@k8s-master ~]# ll

total 88512

-rw-------. 1 root root 1257 Jul 18 16:47 anaconda-ks.cfg

-rw-r--r--. 1 root root 36878412 Nov 10 2021 cni-plugins-linux-amd64-v0.8.6.tgz

[root@k8s-master ~]# tar xf cni-plugins-linux-amd64-v0.8.6.tgz

[root@k8s-master ~]# cp flannel /opt/cni/bin/

[root@k8s-master ~]# kubectl apply -f kube-flannel.yml

podsecuritypolicy.policy/psp.flannel.unprivileged created

Warning: rbac.authorization.k8s.io/v1beta1 ClusterRole is deprecated in v1.17+, unavailable in v1.22+; use rbac.authorization.k8s.io/v1 ClusterRole

clusterrole.rbac.authorization.k8s.io/flannel created

Warning: rbac.authorization.k8s.io/v1beta1 ClusterRoleBinding is deprecated in v1.17+, unavailable in v1.22+; use rbac.authorization.k8s.io/v1 ClusterRoleBinding

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.apps/kube-flannel-ds-amd64 created

daemonset.apps/kube-flannel-ds-arm64 created

daemonset.apps/kube-flannel-ds-arm created

daemonset.apps/kube-flannel-ds-ppc64le created

daemonset.apps/kube-flannel-ds-s390x created

[root@k8s-master ~]# kubectl get nodes

    NAME         STATUS   ROLES    AGE     VERSION
    k8s-master   Ready    master   7m53s   v1.19.0
    k8s-node01   Ready    <none>   6m12s   v1.19.0
    k8s-node02   Ready    <none>   6m8s    v1.19.0

All nodes are now in the Ready state.

The first image pull can take a while; you can carry on with the later steps in the meantime.
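Instead of re-running `kubectl get nodes` by hand, the Ready check can be scripted. The following is a minimal sketch, assuming the default column layout of `kubectl get nodes --no-headers` (name in column 1, status in column 2); the function names `not_ready_nodes` and `wait_for_nodes` are made up for this example.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get nodes --no-headers` output on stdin
# and print the names of nodes whose STATUS is not exactly "Ready".
# Note: a cordoned node ("Ready,SchedulingDisabled") is also reported.
not_ready_nodes() {
  awk '$2 != "Ready" { print $1 }'
}

# Hypothetical wrapper: poll until every node is Ready.
# $1 = maximum number of attempts (default 30), $2 = seconds between attempts (default 10).
wait_for_nodes() {
  local attempts="${1:-30}" delay="${2:-10}" i out pending
  for i in $(seq "$attempts"); do
    # Treat a kubectl failure as an error rather than as "all nodes ready".
    out="$(kubectl get nodes --no-headers)" || return 1
    pending="$(printf '%s\n' "$out" | not_ready_nodes)"
    if [ -z "$pending" ]; then
      return 0
    fi
    echo "attempt $i: still waiting for: $pending"
    sleep "$delay"
  done
  return 1
}
```

Running `wait_for_nodes` on the master after applying kube-flannel.yml would return once all three nodes report Ready, or fail after the attempts are used up.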

[root@k8s-master ~]# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-6d56c8448f-4rvnr 1/1 Running 0 10m

coredns-6d56c8448f-9mn9h 1/1 Running 0 10m

etcd-k8s-master 1/1 Running 0 10m

kube-apiserver-k8s-master 1/1 Running 0 10m

kube-controller-manager-k8s-master 1/1 Running 0 10m

kube-flannel-ds-amd64-2h859 1/1 Running 0 2m23s

kube-flannel-ds-amd64-6gqct 1/1 Running 0 2m23s

kube-flannel-ds-amd64-rvljf 1/1 Running 0 2m23s

kube-proxy-bxh2m 1/1 Running 0 8m28s

kube-proxy-s7jxv 1/1 Running 0 10m

kube-proxy-xdr5v 1/1 Running 0 8m30s

kube-scheduler-k8s-master 1/1 Running 0 10m

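8. Node management commands

The commands below do not need to be run here; they are for reference only.

Reset the master and node configuration:

[root@k8s-master ~]# kubeadm reset

Remove a node's configuration: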

[root@k8s-master ~]# kubectl delete node k8s-node01

[root@k8s-node01 ~]# docker rm -f $(docker ps -aq)

[root@k8s-node01 ~]# systemctl stop kubelet

[root@k8s-node01 ~]# rm -rf /etc/kubernetes/*

[root@k8s-node01 ~]# rm -rf /var/lib/kubelet/*
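The master-side part of the node removal can be sketched as a small function. This is only an illustration: the name `remove_node` and the `RUN` variable are hypothetical, and the `kubectl drain` step is an addition that the commands above do not include. It is there so that workloads are evicted before the node object is deleted.

```shell
#!/usr/bin/env bash
# Hypothetical helper: remove one worker node from the cluster (run on the master).
# Set RUN=echo to print the commands instead of executing them.
remove_node() {
  local node="$1"
  if [ -z "$node" ]; then
    echo "usage: remove_node NODE_NAME" >&2
    return 1
  fi

  # Assumption (not in the original steps): evict workloads before deleting.
  ${RUN:-} kubectl drain "$node" --ignore-daemonsets || return 1

  # Same command as in the steps above.
  ${RUN:-} kubectl delete node "$node"
}
```

Calling `RUN=echo remove_node k8s-node01` prints the two kubectl commands without touching the cluster. The cleanup on the node itself (removing containers, stopping kubelet, clearing /etc/kubernetes and /var/lib/kubelet) still has to be done on that node as listed above.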

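III. Installing the Dashboard UI

1. Deploying the Dashboard

The Dashboard GitHub repository is at https://github.com/kubernetes/dashboard

The repository provides sample deployment manifests, which we can download and apply directly.

[root@k8s-master ~]# wget https://raw.githubusercontent.com/kubernetes/dashboard/master/aio/deploy/recommended.yaml

By default, this manifest creates a dedicated namespace named kubernetes-dashboard and deploys kubernetes-dashboard into it. The Dashboard images come from the official Docker Hub, so the image addresses can be left unchanged and pulled directly.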

2. Exposing the port

By default the Dashboard does not expose a port for external access. To keep things simple, we expose it with a NodePort by editing the Service definition:

[root@k8s-master ~]# vim recommended.yaml

    ---

    kind: Service
    apiVersion: v1
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
      name: kubernetes-dashboard
      namespace: kubernetes-dashboard
    spec:
      type: NodePort
      ports:
        - port: 443
          targetPort: 8443
          nodePort: 32443
      selector:
        k8s-app: kubernetes-dashboard

    ---

The two added fields are type: NodePort and nodePort: 32443. The image references further down the file are left unchanged:

    image: kubernetesui/dashboard:v2.0.0
    image: kubernetesui/metrics-scraper:v1.0.4
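A nodePort value must fall inside the cluster's NodePort range, which is 30000-32767 unless the API server was started with a different `--service-node-port-range`. As a small sanity check before applying the manifest, the values in the file can be validated. The function names below are invented for this sketch, and it assumes the default range.

```shell
#!/usr/bin/env bash
# Hypothetical check: succeed if PORT lies in the default NodePort range (30000-32767).
valid_node_port() {
  local port="$1"
  case "$port" in
    ''|*[!0-9]*) return 1 ;;   # reject empty or non-numeric input
  esac
  [ "$port" -ge 30000 ] && [ "$port" -le 32767 ]
}

# Hypothetical helper: print every nodePort value found in a manifest file.
node_ports_in() {
  awk '$1 == "nodePort:" { print $2 }' "$1"
}

# Hypothetical helper: validate all nodePort values in a manifest file.
check_manifest_ports() {
  local port status=0
  for port in $(node_ports_in "$1"); do
    if ! valid_node_port "$port"; then
      echo "nodePort $port is outside 30000-32767" >&2
      status=1
    fi
  done
  return "$status"
}
```

With the Service edited as above, `check_manifest_ports recommended.yaml` should succeed, since 32443 is inside the default range.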

3. Configuring permissions

Configure super-administrator permissions:

[root@k8s-master ~]# kubectl apply -f recommended.yaml

namespace/kubernetes-dashboard created

serviceaccount/kubernetes-dashboard created

service/kubernetes-dashboard created

secret/kubernetes-dashboard-certs created

secret/kubernetes-dashboard-csrf created

secret/kubernetes-dashboard-key-holder created

configmap/kubernetes-dashboard-settings created

role.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard created

rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

deployment.apps/kubernetes-dashboard created

service/dashboard-metrics-scraper created

deployment.apps/dashboard-metrics-scraper created

[root@k8s-master ~]# kubectl get pods -n kubernetes-dashboard

NAME READY STATUS RESTARTS AGE

dashboard-metrics-scraper-7b59f7d4df-rhl9r 1/1 Running 0 37s

kubernetes-dashboard-74d688b6bc-whtfr 1/1 Running 0 37s

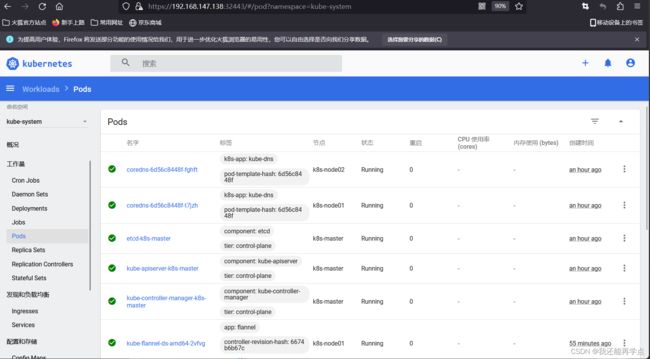

[root@k8s-master ~]# kubectl get pods -A -o wide

    NAMESPACE              NAME                                         READY   STATUS    RESTARTS   AGE   IP                NODE         NOMINATED NODE   READINESS GATES
    kube-system            coredns-6d56c8448f-fghft                     1/1     Running   0          37m   10.244.2.2        k8s-node02   <none>           <none>
    kube-system            coredns-6d56c8448f-t7jzh                     1/1     Running   0          37m   10.244.1.2        k8s-node01   <none>           <none>
    kube-system            etcd-k8s-master                              1/1     Running   0          38m   192.168.147.138   k8s-master   <none>           <none>
    kube-system            kube-apiserver-k8s-master                    1/1     Running   0          38m   192.168.147.138   k8s-master   <none>           <none>
    kube-system            kube-controller-manager-k8s-master           1/1     Running   0          38m   192.168.147.138   k8s-master   <none>           <none>
    kube-system            kube-flannel-ds-amd64-2vfvg                  1/1     Running   0          30m   192.168.147.139   k8s-node01   <none>           <none>
    kube-system            kube-flannel-ds-amd64-88g4t                  1/1     Running   0          30m   192.168.147.140   k8s-node02   <none>           <none>
    kube-system            kube-flannel-ds-amd64-fbn4v                  1/1     Running   0          30m   192.168.147.138   k8s-master   <none>           <none>
    kube-system            kube-proxy-b26zq                             1/1     Running   0          36m   192.168.147.139   k8s-node01   <none>           <none>
    kube-system            kube-proxy-jzjkq                             1/1     Running   0          36m   192.168.147.140   k8s-node02   <none>           <none>
    kube-system            kube-proxy-r9ckd                             1/1     Running   0          37m   192.168.147.138   k8s-master   <none>           <none>
    kube-system            kube-scheduler-k8s-master                    1/1     Running   0          38m   192.168.147.138   k8s-master   <none>           <none>
    kubernetes-dashboard   dashboard-metrics-scraper-7b59f7d4df-rhl9r   1/1     Running   0          75s   10.244.2.3        k8s-node02   <none>           <none>
    kubernetes-dashboard   kubernetes-dashboard-74d688b6bc-whtfr        1/1     Running   0          75s   10.244.1.3        k8s-node01   <none>           <none>

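Open a node's IP address in Firefox over HTTPS on NodePort 32443 (the port set in the Service above), click Advanced, and accept the risk.

The login page shown in the screenshot above asks for either a kubeconfig file or a token. Installing the Dashboard also created a default ServiceAccount named kubernetes-dashboard and generated a token for it. We can retrieve that ServiceAccount's token with the following command: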

[root@k8s-master ~]# kubectl describe secret -n kubernetes-dashboard $(kubectl get secret -n kubernetes-dashboard |grep kubernetes-dashboard-token | awk '{print $1}') |grep token | awk '{print $2}'

kubernetes-dashboard-token-4fjtj

kubernetes.io/service-account-token

eyJhbGciOiJSUzI1NiIsImtpZCI6IklVQ1J5cklyajJrRkd5MWV0Z0ZrRDNLQVVoWXZXM3I5RGIxMFl1d1I1NzQifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZC10b2tlbi00Zmp0aiIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6IjQ0ZTMxYjNhLWM5MGUtNGFiOS05Y2VlLTNkY2I1MWJiMjdkNiIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlcm5ldGVzLWRhc2hib2FyZDprdWJlcm5ldGVzLWRhc2hib2FyZCJ9.ZyrPFXYexeoLbz48ZZ7WHglk00WhOpU-Dabb1ZR088uWA8Gx2rmi-SbuFQ7qyliXpCIDG6jkKMDZXYR0h7bWG8S0Fx2OhFZmqcwZ4GbenmQc7MYLD1w1HQHi8SRlDUzQ8pJ_hMHQOKQsUa8JMyXvzRXD998H2WFQHJe95ke8BeDJ5oCFwS-GIVwwA1h4DHUuhjSnvPBhi0wuiZhK1VDXqfx4cq6ko83tyRfjAAsCsF7bcnOX1n0y8tUrK0WDmJMnhBB5EGK8O8xNjqYqzWt_GyHY-AQG8tnnDtCBw-e5oMZvdfFyk5XSSqaUBYTm_ebMjKvvTMWmxPcqMuGrYUAglw
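The pipeline above prints three lines because `grep token` also matches the Name and Type lines of the secret; only the last line is the token itself. A narrower filter that keeps just the `token:` field could look like the sketch below. The function name `extract_token` is made up for this example, and it assumes the usual `kubectl describe secret` layout where the field name is the first word on the line.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl describe secret` output on stdin
# and print only the value of the "token:" field.
extract_token() {
  awk '$1 == "token:" { print $2 }'
}
```

It would be used by piping the describe output into it, for example `kubectl describe secret -n kubernetes-dashboard kubernetes-dashboard-token-4fjtj | extract_token`, which leaves a single line that can be pasted into the login page.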