障碍物检测算法开发 -(2)激光雷达和相机的联合标定

接上一篇: 障碍物识别算法开发 -(1)算法实现和主要技术路线

本节主要实现激光雷达和相机的联合标定

1.项目背景

(1)进行远距离的障碍物识别,由于成本限制,目前我使用的激光雷达探测距离在80米左右就无法通过聚类进行提取了,简直是人畜不分。

(2)相机可体现障碍物的具体形状,并进行定位识别,但无法确定的物体大小和距离。

2.算法设计方案

(1)方案1:拟通过激光雷达和相机进行联合标定,通过对相机图像进行处理,深度学习目标检测算法实现目标检测,通过雷达点云数据投影到图像中,再进行物体距离的测量。

(2)方案2:通过激光雷达和相机的联合标定,将图像像素转化为世界坐标,形成点云图,再对点云数据进行操作。

3.算法实现

(1)本次实验采用方案1进行处理

(2)开发环境及设备:

VS2019做算法开发,需要opencv454,pcl库支持,使用matlab2021a做相机标定,相机和雷达各1个。

4.激光雷达和相机的联合标定详细设计方案

(1)使用YOLOv5对图像中的障碍物进行识别

int ObstacleRecongnition::ObstacleRecognizeNear(Mat SrcImg,vector<Rect> &defect_result, vector<int> &class_id)

{

Mat blob;

int col = SrcImg.cols;

int row = SrcImg.rows;

int maxLen = MAX(col, row);

Mat netInputImg = SrcImg.clone();

if (maxLen > 1.2*col || maxLen > 1.2*row) {

Mat resizeImg = Mat::zeros(maxLen, maxLen, CV_8UC3);

SrcImg.copyTo(resizeImg(Rect(0, 0, col, row)));

netInputImg = resizeImg;

}

dnn::blobFromImage(netInputImg, blob, 1 / 255.0, Size(netWidth, netHeight), Scalar(), true, false);

t_model_near.setInput(blob);

std::vector<Mat> netOutputImg;

t_model_near.forward(netOutputImg, t_model_near.getUnconnectedOutLayersNames());

std::vector<int> classIds;//结果id数组

std::vector<float> confidences;//结果每个id对应置信度数组

std::vector<Rect> boxes;//每个id矩形框

float ratio_h = (float)netInputImg.rows / netHeight;

float ratio_w = (float)netInputImg.cols / netWidth;

int net_width = classnum + 5; //输出的网络宽度是类别数+5

float* pdata = (float*)netOutputImg[0].data;

for (int stride = 0; stride < 3; stride++) { //stride

int grid_x = (int)(netWidth / netStride[stride]);

int grid_y = (int)(netHeight / netStride[stride]);

for (int anchor = 0; anchor < 3; anchor++) { //anchors

for (int i = 0; i < grid_y; i++) {

for (int j = 0; j < grid_x; j++) {

float box_score = pdata[4]; //Sigmoid(pdata[4]);//获取每一行的box框中含有某个物体的概率

if (box_score > boxThreshold) {

Mat scores(1, classnum, CV_32FC1, pdata + 5);

Point classIdPoint;

double max_class_socre;

minMaxLoc(scores, 0, &max_class_socre, 0, &classIdPoint);

max_class_socre = (float)max_class_socre; //Sigmoid((float)max_class_socre);

if (max_class_socre > classThreshold) {

//rect [x,y,w,h]

float x = pdata[0];

float y = pdata[1];

float w = pdata[2];

float h = pdata[3];

int left = (x - 0.5*w)*ratio_w;

int top = (y - 0.5*h)*ratio_h;

classIds.push_back(classIdPoint.x);

confidences.push_back(max_class_socre*box_score);

boxes.push_back(Rect(left, top, int(w*ratio_w), int(h*ratio_h)));

}

}

pdata += net_width;//下一行

}

}

}

}

//执行非最大抑制以消除具有较低置信度的冗余重叠框(NMS)

vector<int> nms_result;

dnn::NMSBoxes(boxes, confidences, classThreshold, nmsThreshold, nms_result);

for (int i = 0; i < nms_result.size(); i++) {

int idx = nms_result[i];

if (boxes[idx].x >= 0 &&

boxes[idx].y >= 0 &&

boxes[idx].width > 3 &&

boxes[idx].height > 3

) {

float left_x = boxes[idx].x;

float left_y = boxes[idx].y;

float width = boxes[idx].width;

float height = boxes[idx].height;

class_id.push_back(classIds[idx]);

defect_result.push_back(Rect(left_x, left_y, width, height));

}

}

return 0;

}

(2)点云数据处理

为了保证点云投影过程中出现一些异常,需要先对点云数据进行处理,包括设置点云边界和体素过滤

1)设置点云边界

设置ROI区域,将该区域的点云暴露,去除图像中没有拍到的点和自身需要的点的范围,也就是对x,y,z三个值的大小进行限定,通过遍历点云原始数据,通过if条件判断语句设置边界区域

pcl::PointCloud<pcl::PointXYZRGB>::Ptr ObstacleRecongnition::cut3DPoint(pcl::PointCloud<pcl::PointXYZRGB>::Ptr cloud) {

pcl::PointCloud<pcl::PointXYZRGB>::Ptr cloud_filtered_1(new pcl::PointCloud<pcl::PointXYZRGB>);

pcl::PointCloud<pcl::PointXYZRGB>::Ptr cloud_filtereds(new pcl::PointCloud<pcl::PointXYZRGB>);

//1.裁剪区域

for (size_t i = 0; i < cloud->size(); ++i) {

//大方向的筛选轨道,包含了所有的轨道

if (cloud->points[i].y >= guidao_min_height && cloud->points[i].y <= guidao_max_height) {

cloud_filtered_1->push_back(cloud->points[i]);

}

}

return cloud_filtered_1;

}

2)体素过滤

理解为降采样,降低点云密度,加快处理流程,毕竟原始点云的点以万为单位,这一步也是必须的。

pcl::PointCloud<pcl::PointXYZRGB>::Ptr ObstacleRecongnition::voxelsampling(pcl::PointCloud<pcl::PointXYZRGB>::Ptr cloud_filter_cloud) {

//3.下采样后点云-体素过滤,降采样、稀疏化

pcl::PointCloud<pcl::PointXYZRGB>::Ptr cloud_filtered(new pcl::PointCloud<pcl::PointXYZRGB>);

pcl::VoxelGrid<pcl::PointXYZRGB> voxelFilter;

voxelFilter.setInputCloud(cloud_filter_cloud);

voxelFilter.setLeafSize(upsample_x, upsample_y, upsample_z);//可根据数据进行参数调整,我的取值是0.01,0.01,0.01。

voxelFilter.filter(*cloud_filtered);

return cloud_filtered;

}

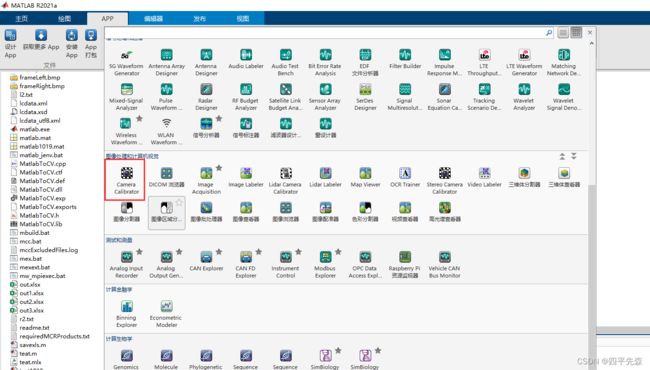

(3)相机标定

使用matlab进行标定,获取相机内参,matlab里面也有激光雷达和相机的联合标定,后面研究一下

注意的是:matlab获取的相机参数需要转置一下才可以在c++ opencv里面使用

标定完成后,得到相机的3*3内参矩阵,畸变系数,R、T矩阵。

(4)激光雷达和相机的联合标定

首先标定单目相机,将相机内参和畸变系数保存下来,用于联合标定使用。

将(3)中相机标定的参数带入,进行投影计算

//点云投影-----用于计算目标距离(激光雷达和相机的联合标定)

int ObstacleRecongnition::cloudtoimage(Mat im, vector<Point3d> nose_end_point3D,Rect defect_result,pcl::PointCloud<pcl::PointXYZRGB>::Ptr &obj3d,float &dis_all){

double focal_length = im.cols;

Point2d center = cv::Point2d(im.cols * 0.5, im.rows * 0.5);

cv::Mat camera_matrix = (cv::Mat_<double>(3, 3) << focal_length, 0, center.x, 0, focal_length, center.y, 0, 0, 1);

//内参

float nc[3][3] = { n_fx,0.0,n_cx,

0.0,n_fy,n_cy,

0.0,0.0,1.0 };

cv::Mat dist_coeffs = cv::Mat::zeros(n_jibian_num, 1, cv::DataType<double>::type); // Assuming no lens distortion

//输出旋转向量和平移向量

double xz[3][1] = { n_xz_1,n_xz_2,n_xz_3 };

double py[3][1] = { n_py_1,n_py_2,n_py_3 };

camera_matrix = Mat(3, 3, CV_32FC1, nc); //内参数K Mat类型变量

if (n_jibian_num == 3) {

float jibian[3][1] = { };

dist_coeffs = Mat(3, 1, CV_32FC1, jibian); //内参数K Mat类型变量

}

if (n_jibian_num == 5) {

//填写自己的畸变系数

float jibian[5][1] = { 3.16036850e-01,4.44217459e-02,0,0,0 };

dist_coeffs = Mat(5, 1, CV_32FC1, jibian); //内参数K Mat类型变量

}

cv::Mat rotation_vector;

cv::Mat translation_vector;

rotation_vector = Mat(3,1, CV_64FC1, xz);

cv::Rodrigues(rotation_vector,rotation_vector);

translation_vector = Mat(3, 1, CV_64FC1, py);

vector<Point2d> nose_end_point2D;

//3D转2D

cv::projectPoints(nose_end_point3D, rotation_vector, translation_vector, camera_matrix,

dist_coeffs, nose_end_point2D);

if (save_cloud) {

//将点云数据画在图像上

for (int i = 0; i < nose_end_point2D.size(); i++)

{

//限界处理

if (nose_end_point2D[i].x >= 0 && nose_end_point2D[i].y >= 0 && nose_end_point2D[i].x < 3840 && nose_end_point2D[i].y < 2160)

circle(im, nose_end_point2D[i], 3, Scalar(0, 0, 255), -1);

}

cv::imwrite("log/touying.jpg", im);

cv::waitKey(0);

}

vector<int> pos_2d;

//寻找落在图像识别中的框内的点云,存储点云下标

for (int i = 0; i < nose_end_point2D.size(); i++) {

if (nose_end_point2D[i].x > defect_result.x &&

nose_end_point2D[i].y > defect_result.y &&

nose_end_point2D[i].x < defect_result.x+ defect_result.width &&

nose_end_point2D[i].y < defect_result.y+defect_result.height

) {

pos_2d.push_back(i);

}

else {

pos_2d.push_back(0);

}

}

//当前点在图像范围内,对该点进行保存,并输出

pcl::PointCloud<pcl::PointXYZRGB> obj_cloud;

obj_cloud.width = nose_end_point3D.size();

obj_cloud.height = 1;

obj_cloud.is_dense = false;

obj_cloud.points.resize(obj_cloud.width * obj_cloud.height);

//对目标进行处理和转换,获得距离--图像坐标转世界坐标

float dis = 0;

int count_dis = 0;

for (int i = 1; i < nose_end_point3D.size(); i++) {

if (pos_2d.size()!=0 && pos_2d[i] == i) {

obj_cloud.points[i].x = nose_end_point3D[i].x;

obj_cloud.points[i].y = nose_end_point3D[i].y;

obj_cloud.points[i].z = nose_end_point3D[i].z;

obj_cloud.points[i].r = 255;

obj_cloud.points[i].g = 0;

obj_cloud.points[i].b = 255;

dis += nose_end_point3D[i].z;

count_dis++;

}

}

if (pos_2d.size() != 0)

dis_all = dis / pos_2d.size()*1.0;

else {

//如果雷达点云未能输出距离,使用图像输出距离

dis_all = 100;

}

return 0;

}

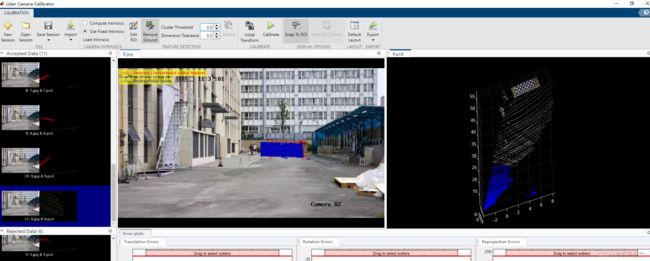

投影结果如下:

误差较大,原因是因为标定时,棋盘格位置摆放不全,只标了前面的,近距离的没有采集,整个的投影误差较大,其中棋盘格上的投影效果一般。

5.其他可调用接口

(1)保存自己处理后的点云数据

//8.保存点云

int ObstacleRecongnition::savepoints(pcl::PointCloud<pcl::PointXYZRGB>::Ptr cloud_filtered_2, std::string path) {

//对每一个聚类进行框选

SYSTEMTIME sys;

GetLocalTime(&sys);

static int name = 0;

pcl::PointCloud<pcl::PointXYZRGB> cloud_save;

cloud_save.width = cloud_filtered_2->size();

cloud_save.height = 1;

cloud_save.is_dense = false;

cloud_save.points.resize(cloud_save.width * cloud_save.height);

for (int i = 0; i < cloud_filtered_2->points.size(); i++) {

cloud_save.points[i].x = cloud_filtered_2->points[i].x;

cloud_save.points[i].y = cloud_filtered_2->points[i].y;

cloud_save.points[i].z = cloud_filtered_2->points[i].z;

cloud_save.points[i].r = cloud_filtered_2->points[i].r;

cloud_save.points[i].g = cloud_filtered_2->points[i].g;

cloud_save.points[i].b = cloud_filtered_2->points[i].b;

}

name++;

cout << path + "\\" + to_string(sys.wYear) + "-" + to_string(sys.wMonth) + "-"

+ to_string(sys.wDay) + "-" + to_string(sys.wHour) + "-" +

to_string(sys.wMinute) + "-" + to_string(sys.wMilliseconds)

+ "-" + to_string(name) + ".pcd" << endl;

pcl::io::savePCDFileASCII(path+"/" + to_string(sys.wYear)+"-"+ to_string(sys.wMonth)+"-"

+ to_string(sys.wDay)+"-" + to_string(sys.wHour) + "-" +

to_string(sys.wMinute) + "-" + to_string(sys.wMilliseconds)

+"-"+to_string(name) + ".pcd", cloud_save);

//pcl::io::saveRgbPGGFile(path+ "\\test_pcd.pcd", cloud_save,"rgb");

return 0;

}

(2)绘制点云的最小包围盒,立体矩形框

//9.最小包围盒

void ObstacleRecongnition::draw_boundingbox(pcl::PointCloud<pcl::PointXYZRGB>::Ptr original_cloud,

vector<Eigen::Vector3f> &whd,

vector<Eigen::Vector3f> &tfinals,

vector<Eigen::Matrix3f> &eigen_vectors1) {

//1.PCA、质心、协方差、特征值和特征向量

// (1)PCA:计算三个主方向

Eigen::Vector4f centroid;// (2)获取质心

pcl::compute3DCentroid(*original_cloud, centroid);// 齐次坐标,(c0,c1,c2,1)

Eigen::Matrix3f covariance;

//(3)协方差矩阵

computeCovarianceMatrixNormalized(*original_cloud, centroid, covariance); // 计算归一化协方差矩阵

// 计算主方向:计算协方差矩阵的特征向量和特征值 特征向量即为主方向

Eigen::SelfAdjointEigenSolver<Eigen::Matrix3f> eigen_solver(covariance, Eigen::ComputeEigenvectors);

Eigen::Matrix3f eigen_vectors = eigen_solver.eigenvectors();

Eigen::Vector3f eigenValuesPCA = eigen_solver.eigenvalues();

// 校正主方向间垂直(特征向量方向: (e0, e1, e0 × e1) --- note: e0 × e1 = +/- e2)

eigen_vectors.col(2) = eigen_vectors.col(0).cross(eigen_vectors.col(1));

eigen_vectors.col(0) = eigen_vectors.col(1).cross(eigen_vectors.col(2));

eigen_vectors.col(1) = eigen_vectors.col(2).cross(eigen_vectors.col(0));

/*std::cout << "特征值va(3x1):\n" << eigenValuesPCA << std::endl;

std::cout << "特征向量ve(3x3):\n" << eigen_vectors << std::endl;

std::cout << "质心点(4x1):\n" << centroid << std::endl;*/

//2.将输入点云转换至原点,且主方向与坐标系方向重回,建立变换到原点的点云包围盒

// 转到参考坐标系,将点云主方向与参考坐标系的坐标轴进行对齐

Eigen::Matrix4f transformation(Eigen::Matrix4f::Identity());

transformation.block<3, 3>(0, 0) = eigen_vectors.transpose(); // R^(-1) = R^T

transformation.block<3, 1>(0, 3) = -1.f * (transformation.block<3, 3>(0, 0) * centroid.head<3>()); // t^(-1) = -R^T * t

/*std::cout << "变换矩阵tm(4x4):\n" << Eigen::Matrix4f::Identity() << std::endl;

std::cout << "逆变矩阵tm'(4x4):\n" << transformation << std::endl;*/

pcl::PointCloud<PointT> transformed_cloud; // 变换后的点云

pcl::transformPointCloud(*original_cloud, transformed_cloud, transformation);

PointT min_pt, max_pt;// 沿参考坐标系坐标轴的边界值

pcl::getMinMax3D(transformed_cloud, min_pt, max_pt);

const Eigen::Vector3f mean_diag = 0.5f*(max_pt.getVector3fMap() + min_pt.getVector3fMap()); // 形心

//std::cout << "型心c1(3x1):\n" << mean_diag << std::endl;

Eigen::Vector3f tfinal = eigen_vectors * mean_diag + centroid.head<3>();

//3.给输入点云设置主方向和包围盒,通过输入点云到原点点云变换的逆变换实现

tfinals.push_back(tfinal);

// (1)3个方向尺寸:宽高深

Eigen::Vector3f whd_ = max_pt.getVector3fMap() - min_pt.getVector3fMap();// getVector3fMap:返回Eigen::Map(3)显示最小包围盒

//7.显示最小包围盒

void ObstacleRecongnition::showbox(pcl::PointCloud<pcl::PointXYZRGB>::Ptr &transformedCloud,

RECT_DEFECT *out_near, int result_len){

//visualization

pcl::visualization::PCLVisualizer viewer;

viewer.addPointCloud(transformedCloud);

viewer.addCoordinateSystem();

for (int i = 0; i < result_len; i++) {

const Eigen::Quaternionf qfinal(out_near[i].eigen_vectors);

viewer.addCube(out_near[i].tfinals, qfinal, out_near[i].lidar_width, out_near[i].lidar_height,

out_near[i].depth, "bbox1"+to_string(i));

viewer.setShapeRenderingProperties(pcl::visualization::PCL_VISUALIZER_REPRESENTATION, pcl::visualization::PCL_VISUALIZER_REPRESENTATION_WIREFRAME, "bbox1" + to_string(i));

viewer.setShapeRenderingProperties(pcl::visualization::PCL_VISUALIZER_COLOR, 1.0, 0.0, 0.0, "bbox1" + to_string(i));

}

while (!viewer.wasStopped())

{

viewer.spinOnce(100);

boost::this_thread::sleep(boost::posix_time::microseconds(100000));

}

savepoints(transformedCloud, save_path);

}

6.参考文献

[1].https://wenku.baidu.com/view/e618b129497302768e9951e79b89680202d86b42.html

[2].https://zhuanlan.zhihu.com/p/348122380

[3].https://www.zhihu.com/question/450173958/answer/1789451231

[4].https://www.jianshu.com/p/a0c408115ce6