ELK: Learning to Build a Microservice Log Center, Learning Never Ends

- 什么是日志中心

-

- 要实现ELK:日志中心用到那些技术

-

-

- 1.ElasticSearch

- 2.logstash

- 3.kibana

- 先说一个例子

- ELK 使用从同步到异步处理并发请求

-

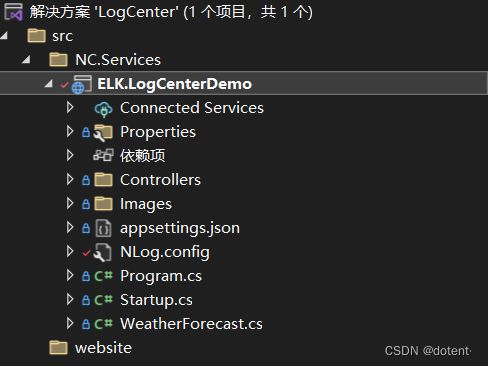

- 1.搭建一个.NET 6的API项目

-

- 2.项目搭建好了该如何去实现呢,这时还需要一个NLog写日志给到我们的RabbitMQ

- 3.直接引用对应的Nuget包

- 3.1本项目对应版本

- 3.2开始注册NLOG的使用

- 3.3Program里加载配置文件NLog

- 4.开始准备安装环境

-

- 4.3 Erlang安装没啥大问题,RabbitMQ可能会有

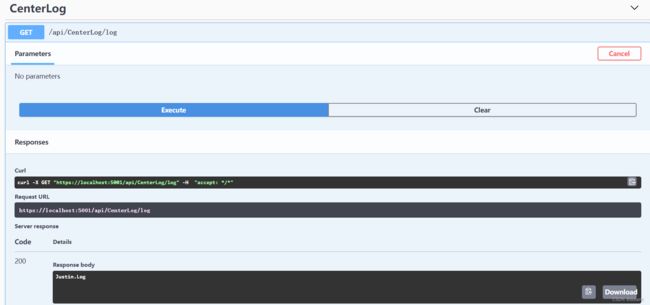

- 5.启动项目

-

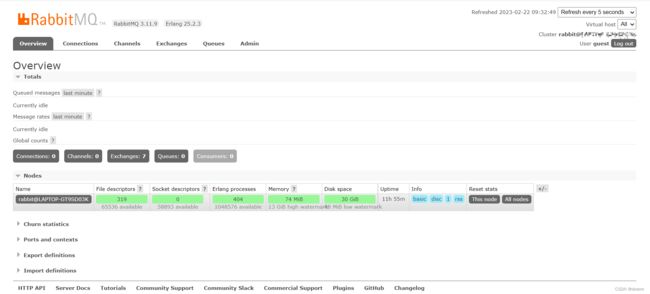

- 5.1 这是看我们的RabbitMQ

- 6.logstash 安装

-

- 6.1 安装异常记录

- 7. ElasticSearch安装

-

- 7.1 修改elasticsearch.yml配置

- 7.2 启动ElasticSearch

- 7.3 ES启动异常问题

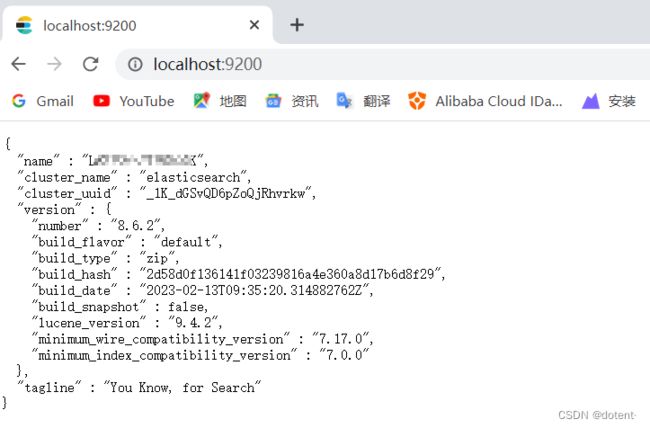

- 7.4 再启动,地址栏输入 localhost:9200

- 8.0 Kibana的安装

-

- 8.1 进入config文件里修改kibana.yml配置

- 8.2 启动kibana

- 8.3 地址栏输入http://localhost:5601/

- 8.4 启动异常记录

-

- 结语记录

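What is a log center

Put simply, a log center collects the logs of all your systems in one place. Haha, sounds simple, doesn't it? It really isn't hard.

Which technologies does an ELK log center use

1. Elasticsearch

2. Logstash

3. Kibana

Our goal today is to build such a centrally managed log center for a .NET 6 API project. But why manage logs centrally in the first place?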

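An example first

- Take the move from a monolithic e-commerce platform to a microservice one. At the start the monolith handles its load well enough, but as the business grows it hits a bottleneck, and so microservice e-commerce systems (and other microservice platforms) gradually appear. Microservices are essentially modularization, which should make the idea clear.

- Once a project is split into modules, each module records its own logs, so every module has its own log store to maintain. The more modules there are, the harder the logs become to maintain, and the maintenance workload of the system goes up.

- This raises a question: how do we reduce that log maintenance workload?

- This is where a distributed approach comes in: the logs of all modules of a system are managed in one place, which lowers the maintenance workload and also makes it easier for us developers to track down bugs. This is the ELK stack mentioned at the start.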

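ELK: from synchronous to asynchronous handling of concurrent requests

- ES (Elasticsearch): stores the logs

- Logstash: collects the logs (mainly over HTTP/TCP, which are synchronous requests with status codes such as 500/200/400/403, while log entries arrive from many concurrent requests)

- Kibana: displays the logs

To show how to put this into practice, I drew a diagram.

Tip: RabbitMQ is what solves the log concurrency problem.

Let's get started. One note first: all of the ELK components above depend on a Java environment; my local environment is Java 8.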

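1. Create a .NET 6 API project

2. With the project in place, how do we implement this? We also need NLog to write the logs to RabbitMQ

3. Reference the required NuGet packages

NLog

NLog.Web.AspNetCore

Nlog.RabbitMQ.Target

RabbitMQ.Client

3.1 Package versions used in this project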

<ItemGroup>
  <PackageReference Include="NLog" Version="4.7.14" />
  <PackageReference Include="Nlog.RabbitMQ.Target" Version="2.7.7" />
  <PackageReference Include="NLog.Web.AspNetCore" Version="4.14.0" />
  <PackageReference Include="RabbitMQ.Client" Version="6.2.1" />
  <PackageReference Include="Swashbuckle.AspNetCore" Version="5.6.3" />
</ItemGroup>

3.2 Register NLog

Now add an NLog.config file to the project:

<nlog xmlns="http://www.nlog-project.org/schemas/NLog.xsd"
      xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
      autoReload="true"
      throwExceptions="false"
      internalLogLevel="Warn"
      internalLogFile="logs/internal-nlog.txt">

  <!-- Load the RabbitMQ target; extensions belongs directly under nlog, not inside targets -->
  <extensions>
    <add assembly="Nlog.RabbitMQ.Target" />
  </extensions>

  <targets>
    <!-- Wrap every target in an AsyncWrapper so that writing logs does not block requests -->
    <default-wrapper xsi:type="AsyncWrapper"></default-wrapper>

    <target xsi:type="File"
            name="info"
            fileName="${basedir}/info/${date:format=yyyy-MM-dd-HH}.txt"
            layout="${longdate}|${logger}|${uppercase:${level}}|${message} ${exception}"/>

    <target xsi:type="File"
            name="error"
            fileName="${basedir}/error/${date:format=yyyy-MM-dd-HH}.txt"
            layout="${longdate}|${logger}|${uppercase:${level}}|${message} ${exception}"/>

    <!-- Publishes each log entry to the RabbitMQ exchange that Logstash reads from -->
    <target name="RabbitMQTarget"
            xsi:type="RabbitMQ"
            username="guest"
            password="guest"
            hostname="localhost"
            exchange="LogCenterDemo-log"
            exchangeType="topic"
            topic="EKL.Logging-key"
            port="5672"
            vhost="/"
            useJSON="true"
            layout="${longdate}|${logger}|${uppercase:${level}}|${message} ${exception}"
            UseLayoutAsMessage="true"/>
  </targets>

  <rules>
    <!-- "levels" takes a comma-separated list; "level" accepts a single level only -->
    <logger name="*" levels="Info,Error" writeTo="RabbitMQTarget" />
  </rules>
</nlog>

3.3 Load the NLog config file in Program

// Requires: using NLog.Web;
public static IHostBuilder CreateHostBuilder(string[] args) =>
    Host.CreateDefaultBuilder(args)
        .ConfigureWebHostDefaults(webBuilder =>
        {
            webBuilder.UseStartup<Startup>();
            webBuilder.ConfigureAppConfiguration((context, config) =>
            {
                // Load the NLog settings from NLog.config
                NLogBuilder.ConfigureNLog("NLog.config");
            });
        })
        // Route Microsoft.Extensions.Logging output through NLog
        .UseNLog();

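4. Prepare the environment

Java environment

RabbitMQ

Erlang (RabbitMQ needs it)

4.1 Erlang download

Downloads - Erlang/OTP

4.2 RabbitMQ download

Installing on Windows - RabbitMQ (rabbitmq.com)

Other: Erlang RabbitMQ Client library - RabbitMQ

If the download is slow, you can download version 25 here or version 24 here.

4.3 Erlang installs without much trouble; RabbitMQ may not

- Error log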

Microsoft Windows [Version 10.0.19045.2486]

(c) Microsoft Corporation. All rights reserved.

C:\Windows\system32>d:

D:\>cd D:\xxxxx\rabbitmq_server-3.11.9\sbin

D:\xxxxx\rabbitmq_server-3.11.9\sbin>rabbitmq-service install

D:\persioninto_exe\soft\ErlangOTP\Erlang OTP\erts-13.1.5\bin\erlsrv: Service RabbitMQ added to system.

D:\xxxxx\rabbitmq_server-3.11.9\sbin>rabbitmq-service enable

D:\persioninto_exe\soft\ErlangOTP\Erlang OTP\erts-13.1.5\bin\erlsrv: Service RabbitMQ enabled.

D:\xxxxx\rabbitmq_server-3.11.9\sbin>rabbitmq-service start

The RabbitMQ service is starting .

The RabbitMQ service was started successfully.

D:\xxxxx\rabbitmq_server-3.11.9\sbin>rabbitmqctl status

Error: unable to perform an operation on node 'rabbit@LAPTOP-GT9SD03K'. Please see diagnostics information and suggestions below.

Most common reasons for this are:

* Target node is unreachable (e.g. due to hostname resolution, TCP connection or firewall issues)

* CLI tool fails to authenticate with the server (e.g. due to CLI tool's Erlang cookie not matching that of the server)

* Target node is not running

In addition to the diagnostics info below:

* See the CLI, clustering and networking guides on https://rabbitmq.com/documentation.html to learn more

* Consult server logs on node rabbit@LAPTOP-GT9SD03K

* If target node is configured to use long node names, don't forget to use --longnames with CLI tools

DIAGNOSTICS

===========

attempted to contact: ['rabbit@LAPTOP-GT9SD03K']

rabbit@LAPTOP-GT9SD03K:

* connected to epmd (port 4369) on LAPTOP-GT9SD03K

* epmd reports node 'rabbit' uses port 25672 for inter-node and CLI tool traffic

* TCP connection succeeded but Erlang distribution failed

* suggestion: check if the Erlang cookie is identical for all server nodes and CLI tools

* suggestion: check if all server nodes and CLI tools use consistent hostnames when addressing each other

* suggestion: check if inter-node connections may be configured to use TLS. If so, all nodes and CLI tools must do that

* suggestion: see the CLI, clustering and networking guides on https://rabbitmq.com/documentation.html to learn more

Current node details:

* node name: 'rabbitmqcli-632-rabbit@LAPTOP-GT9SD03M'

* effective user's home directory: c:/Users/Tim

* Erlang cookie hash: luzMZ9FtsJWk0wEUi+1YAA==

D:\xxxxx\rabbitmq_server-3.11.9\sbin>

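- Solution

1. The likely cause is that the .erlang.cookie file in the user's home directory on drive C (C:\Users\111) does not match the one in sbin.

2. Copy the .erlang.cookie file from the user directory over the one in sbin. Before doing this, stop and remove the RabbitMQ service; then reboot the machine and install the RabbitMQ service again.

3. Reinstall and start the service, enable the management plugin, and check the status:

rabbitmq-service install
rabbitmq-service start
rabbitmq-plugins enable rabbitmq_management
rabbitmqctl status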

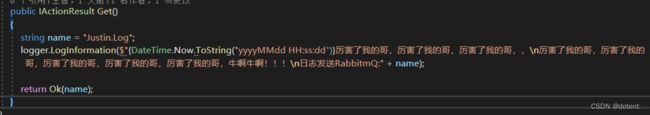

5. Start the project

Send a request. To make the logs easy to recognize later, I write a whole batch of log entries in the program.
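The author's own code for this step is not reproduced here, so the following is only a sketch of what such an endpoint could look like. The controller name, namespace, route, and message texts are all hypothetical; the only things taken from the article are that NLog is wired in through UseNLog() and that the rule in NLog.config targets the Info and Error levels.

```csharp
using System;
using Microsoft.AspNetCore.Mvc;
using Microsoft.Extensions.Logging;

namespace LogCenterDemo.Controllers   // hypothetical namespace
{
    [ApiController]
    [Route("[controller]")]
    public class LogDemoController : ControllerBase   // hypothetical controller name
    {
        private readonly ILogger<LogDemoController> _logger;

        public LogDemoController(ILogger<LogDemoController> logger)
        {
            _logger = logger;
        }

        // GET /LogDemo
        [HttpGet]
        public IActionResult Get()
        {
            // Several Info entries, so they are easy to spot in RabbitMQ and later in Kibana.
            for (var i = 1; i <= 5; i++)
            {
                _logger.LogInformation("LogCenterDemo info message {Index}", i);
            }

            // One Error entry carrying an exception, so ${exception} in the layout has content.
            try
            {
                throw new InvalidOperationException("Simulated failure for the log center demo");
            }
            catch (InvalidOperationException ex)
            {
                _logger.LogError(ex, "LogCenterDemo error message");
            }

            return Ok("log entries written");
        }
    }
}
```

Calling the endpoint once should publish six messages to the LogCenterDemo-log exchange, assuming the RabbitMQ target is configured as in section 3.2.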

5.1 Check RabbitMQ

6. Logstash installation

Download it directly from the official site: downloads

6.1 Installation error log

Error 1:

D:\xxxx\logstash-7.10.1\bin>logstash.bat -f ../config/logstash.conf -t

Using JAVA_HOME defined java: D:\persioninto_exe\soft\jdk8

WARNING, using JAVA_HOME while Logstash distribution comes with a bundled JDK

Sending Logstash logs to D:/xxxxx/dotnet/elk/logstash-7.10.1-windows-x86_64/logstash-7.10.1/logs which is now configured via log4j2.properties

[2023-02-23T11:33:00,672][INFO ][logstash.runner ] Starting Logstash {“logstash.version”=>“7.10.1”, “jruby.version”=>“jruby 9.2.13.0 (2.5.7) 2020-08-03 9a89c94bcc Java HotSpot™ 64-Bit Server VM 25.361-b09 on 1.8.0_361-b09 +indy +jit [mswin32-x86_64]”}

[2023-02-23T11:33:00,850][WARN ][logstash.config.source.multilocal] Ignoring the ‘pipelines.yml’ file because modules or command line options are specified

[2023-02-23T11:33:01,313][FATAL][logstash.runner ] The given configuration is invalid. Reason: Expected one of [A-Za-z0-9_-], [ \t\r\n], “#”, “{”, [A-Za-z0-9_], “}” at line 37, column 23 (byte 1054) after input {

The relevant part of the error:

[2023-02-23T11:33:01,313][FATAL][logstash.runner ] The given configuration is invalid. Reason: Expected one of [A-Za-z0-9_-], [ \t\r\n], “#”, “{”, [A-Za-z0-9_], “}” at line 37, column 23 (byte 1054) after input {

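Solution: the message points to line 37, column 23. Looking at that position in the config file, the value after key => was missing its double quotes. After fixing it, validate the config file again.

Validate the config file: logstash.bat -f ../config/logstash.conf -t

The following output means the config is valid: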

[2023-02-23T11:36:25,154][INFO ][org.reflections.Reflections] Reflections took 43 ms to scan 1 urls, producing 23 keys and 47 values

Configuration OK

Error: could not declare the exchange

[2023-02-23T11:42:10,409][ERROR][logstash.inputs.rabbitmq ][main][input1] Could not declare exchange! {:exchange=>“LogCenterDemo-log”, :type=>“topic”, :durable=>false, :error_class=>“MarchHare::PreconditionFailed”, :error_message=>“PRECONDITION_FAILED - inequivalent arg ‘durable’ for exchange ‘LogCenterDemo-log’ in vhost ‘/’: received ‘false’ but current is ‘true’”, :backtrace=>[“D:/xxxxx/elk/logstash-7.10.1-windows-x86_64/logstash-7.10.1/vendor/bundle/jruby/2.5.0/gems/march_hare-4.3.0-java/lib/march_hare/exceptions.rb:127:in `convert_and_reraise’”, “D:/xxxxx/elk/logstash-7.10.1-windows-x86_64/logstash-7.10.1/vendor/bundle/jruby/2.5.0/gems/march_hare-4.3.0-java/lib/march_hare/channel.rb:996:in `converting_rjc_exceptions_to_ruby’”, “D:/xxxxx/elk/logstash-7.10.1-windows-x86_64/logstash-7.10.1/vendor/bundle/jruby/2.5.0/gems/march_hare-4.3.0-java/lib/march_hare/channel.rb:428:in `exchange_declare’”, “D:/xxxxx/elk/logstash-7.10.1-windows-x86_64/logstash-7.10.1/vendor/bundle/jruby/2.5.0/gems/march_hare-4.3.0-java/lib/march_hare/exchange.rb:178:in `declare!'”, “D:/xxxxx/elk/logstash-7.10.1-windows-x86_64/logstash-7.10.1/vendor/bundle/jruby/2.5.0/gems/march_hare-4.3.0-java/lib/march_hare/channel.rb:320:in `block in exchange’”, “org/jruby/RubyKernel.java:1897:in `tap’”, “D:/xxxxx/elk/logstash-7.10.1-windows-x86_64/logstash-7.10.1/vendor/bundle/jruby/2.5.0/gems/march_hare-4.3.0-java/lib/march_hare/channel.rb:319:in `exchange’”, “D:/xxxxx/elk/logstash-7.10.1-windows-x86_64/logstash-7.10.1/vendor/bundle/jruby/2.5.0/gems/logstash-integration-rabbitmq-7.1.1-java/lib/logstash/plugin_mixins/rabbitmq_connection.rb:191:in `declare_exchange!'”, “D:/xxxxx/elk/logstash-7.10.1-windows-x86_64/logstash-7.10.1/vendor/bundle/jruby/2.5.0/gems/logstash-integration-rabbitmq-7.1.1-java/lib/logstash/inputs/rabbitmq.rb:227:in `bind_exchange!'”, 
“D:/xxxxx/elk/logstash-7.10.1-windows-x86_64/logstash-7.10.1/vendor/bundle/jruby/2.5.0/gems/logstash-integration-rabbitmq-7.1.1-java/lib/logstash/inputs/rabbitmq.rb:194:in `setup!'”, “D:/xxxxx/elk/logstash-7.10.1-windows-x86_64/logstash-7.10.1/vendor/bundle/jruby/2.5.0/gems/logstash-integration-rabbitmq-7.1.1-java/lib/logstash/inputs/rabbitmq.rb:179:in `run’”, “D:/xxxxx/elk/logstash-7.10.1-windows-x86_64/logstash-7.10.1/logstash-core/lib/logstash/java_pipeline.rb:405:in `inputworker’”, “D:/xxxxx/elk/logstash-7.10.1-windows-x86_64/logstash-7.10.1/logstash-core/lib/logstash/java_pipeline.rb:396:in `block in start_input’”]}

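At first, seeing [2023-02-23T11:42:10,409][ERROR][logstash.inputs.rabbitmq ][main][input1] Could not declare exchange! {:exchange=>"LogCenterDemo-log", ... I assumed it was a version problem.

The config file had already passed validation, so its syntax was not the issue. The error message itself gives the cause: the exchange LogCenterDemo-log already exists in RabbitMQ as durable ("current is 'true'"), while Logstash tried to declare it with durable set to false.

Change this setting in the rabbitmq input: durable => true

Successful run: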

D:\xxxx\dotnet\elk\logstash-7.10.1-windows-x86_64\logstash-7.10.1\bin>logstash.bat -f ../config/logstash.conf

Using JAVA_HOME defined java: D:\persioninto_exe\soft\jdk8

WARNING, using JAVA_HOME while Logstash distribution comes with a bundled JDK

Sending Logstash logs to D:/xxxxx/elk/logstash-7.10.1-windows-x86_64/logstash-7.10.1/logs which is now configured via log4j2.properties

[2023-02-23T16:48:31,134][INFO ][logstash.runner ] Starting Logstash {"logstash.version"=>"7.10.1", "jruby.version"=>"jruby 9.2.13.0 (2.5.7) 2020-08-03 9a89c94bcc Java HotSpot(TM) 64-Bit Server VM 25.361-b09 on 1.8.0_361-b09 +indy +jit [mswin32-x86_64]"}

[2023-02-23T16:48:31,317][WARN ][logstash.config.source.multilocal] Ignoring the 'pipelines.yml' file because modules or command line options are specified

[2023-02-23T16:48:32,893][INFO ][org.reflections.Reflections] Reflections took 44 ms to scan 1 urls, producing 23 keys and 47 values

[2023-02-23T16:48:33,583][INFO ][logstash.outputs.elasticsearch][main] Elasticsearch pool URLs updated {:changes=>{:removed=>[], :added=>[http://localhost:9200/]}}

[2023-02-23T16:48:33,748][WARN ][logstash.outputs.elasticsearch][main] Restored connection to ES instance {:url=>"http://localhost:9200/"}

[2023-02-23T16:48:33,793][INFO ][logstash.outputs.elasticsearch][main] ES Output version determined {:es_version=>7}

[2023-02-23T16:48:33,797][WARN ][logstash.outputs.elasticsearch][main] Detected a 6.x and above cluster: the `type` event field won't be used to determine the document _type {:es_version=>7}

[2023-02-23T16:48:33,844][INFO ][logstash.outputs.elasticsearch][main] New Elasticsearch output {:class=>"LogStash::Outputs::ElasticSearch", :hosts=>["http://localhost:9200"]}

[2023-02-23T16:48:33,904][INFO ][logstash.outputs.elasticsearch][main] Using a default mapping template {:es_version=>7, :ecs_compatibility=>:disabled}

[2023-02-23T16:48:33,959][INFO ][logstash.javapipeline ][main] Starting pipeline {:pipeline_id=>"main", "pipeline.workers"=>12, "pipeline.batch.size"=>125, "pipeline.batch.delay"=>50, "pipeline.max_inflight"=>1500, "pipeline.sources"=>["D:/xxxxx/elk/logstash-7.10.1-windows-x86_64/logstash-7.10.1/config/logstash.conf"], :thread=>"#"}

[2023-02-23T16:48:33,984][INFO ][logstash.outputs.elasticsearch][main] Attempting to install template {:manage_template=>{"index_patterns"=>"logstash-*", "version"=>60001, "settings"=>{"index.refresh_interval"=>"5s", "number_of_shards"=>1}, "mappings"=>{"dynamic_templates"=>[{"message_field"=>{"path_match"=>"message", "match_mapping_type"=>"string", "mapping"=>{"type"=>"text", "norms"=>false}}}, {"string_fields"=>{"match"=>"*", "match_mapping_type"=>"string", "mapping"=>{"type"=>"text", "norms"=>false, "fields"=>{"keyword"=>{"type"=>"keyword", "ignore_above"=>256}}}}}], "properties"=>{"@timestamp"=>{"type"=>"date"}, "@version"=>{"type"=>"keyword"}, "geoip"=>{"dynamic"=>true, "properties"=>{"ip"=>{"type"=>"ip"}, "location"=>{"type"=>"geo_point"}, "latitude"=>{"type"=>"half_float"}, "longitude"=>{"type"=>"half_float"}}}}}}}

[2023-02-23T16:48:34,004][INFO ][logstash.outputs.elasticsearch][main] Installing elasticsearch template to _template/logstash

[2023-02-23T16:48:34,799][INFO ][logstash.javapipeline ][main] Pipeline Java execution initialization time {"seconds"=>0.83}

[2023-02-23T16:48:34,824][INFO ][logstash.javapipeline ][main] Pipeline started {"pipeline.id"=>"main"}

[2023-02-23T16:48:34,876][INFO ][logstash.agent ] Pipelines running {:count=>1, :running_pipelines=>[:main], :non_running_pipelines=>[]}

[2023-02-23T16:48:35,039][INFO ][logstash.inputs.rabbitmq ][main][input1] Connected to RabbitMQ at

[2023-02-23T16:48:35,094][INFO ][logstash.inputs.rabbitmq ][main][input1] Declaring exchange ' LogCenterDemo-log' with type topic

[2023-02-23T16:48:35,259][INFO ][logstash.agent ] Successfully started Logstash API endpoint {:port=>9600}
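The logstash.conf used in this run is not shown in the article. As a rough sketch, a pipeline that matches the NLog target from section 3.2 and the log output above could look like the following. The host, credentials, exchange, exchange type, and routing key are copied from NLog.config; the queue name is a hypothetical placeholder, and the stdout output is an optional addition for watching events on the console.

```conf
input {
  rabbitmq {
    host => "localhost"
    port => 5672
    user => "guest"
    password => "guest"
    vhost => "/"
    exchange => "LogCenterDemo-log"
    exchange_type => "topic"
    key => "EKL.Logging-key"          # must be quoted, see the first error in 6.1
    queue => "logcenter-demo-queue"   # hypothetical queue name
    durable => true                   # must match how the exchange already exists in RabbitMQ
  }
}

output {
  elasticsearch {
    hosts => ["http://localhost:9200"]
  }
  # Optional: also print each event to the console while testing
  stdout { codec => rubydebug }
}
```

Running logstash.bat with the -t flag, as in 6.1, is a quick way to check a file like this before starting the pipeline.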

7. Elasticsearch installation

Version: 7.10.1, Windows

7.1 Edit elasticsearch.yml

# Only these two settings

# ---------------------------------- Network -----------------------------------

#

# Set the bind address to a specific IP (IPv4 or IPv6):

#

network.host: localhost

#

# Set a custom port for HTTP:

#

http.port: 9200

7.2 Start Elasticsearch

D:\xxxxx\elasticsearch-7.10.1\bin>elasticsearch.bat

7.3 Elasticsearch startup errors

Error 1:

[2023-02-22T15:16:53,489][ERROR][o.e.i.g.GeoIpDownloader ] [LAPTOP-xxxxx] exception during geoip databases update

org.elasticsearch.ElasticsearchException: not all primary shards of [.geoip_databases] index are active

at [email protected]/org.elasticsearch.ingest.geoip.GeoIpDownloader.updateDatabases(GeoIpDownloader.java:134)

at [email protected]/org.elasticsearch.ingest.geoip.GeoIpDownloader.runDownloader(GeoIpDownloader.java:274)

at [email protected]/org.elasticsearch.ingest.geoip.GeoIpDownloaderTaskExecutor.nodeOperation(GeoIpDownloaderTaskExecutor.java:102)

at [email protected]/org.elasticsearch.ingest.geoip.GeoIpDownloaderTaskExecutor.nodeOperation(GeoIpDownloaderTaskExecutor.java:48)

at [email protected]/org.elasticsearch.persistent.NodePersistentTasksExecutor$1.doRun(NodePersistentTasksExecutor.java:42)

Solution

# In the config file

ingest.geoip.downloader.enabled: false

Error 2:

[2023-02-22T15:19:36,351][WARN ][o.e.x.s.t.n.SecurityNetty4HttpServerTransport] [LAPTOP-xxxxx] received plaintext http traffic on an https channel, closing connection Netty4HttpChannel{localAddress=/[0:0:0:0:0:0:0:1]:9200, remoteAddress=/[0:0:0:0:0:0:0:1]:52285}

[2023-02-22T15:19:36,351][WARN ][o.e.x.s.t.n.SecurityNetty4HttpServerTransport] [LAPTOP-xxxxx] received plaintext http traffic on an https channel, closing connection Netty4HttpChannel{localAddress=/[0:0:0:0:0:0:0:1]:9200, remoteAddress=/[0:0:0:0:0:0:0:1]:52286}

[2023-02-22T15:19:36,353][WARN ][o.e.x.s.t.n.SecurityNetty4HttpServerTransport] [LAPTOP-xxxxx] received plaintext http traffic on an https channel, closing connection Netty4HttpChannel{localAddress=/[0:0:0:0:0:0:0:1]:9200, remoteAddress=/[0:0:0:0:0:0:0:1]:52287}

Solution

# Change in the config file

xpack.security.enabled: false

7.4 Restart, then open localhost:9200 in the browser address bar

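8.0 Kibana installation

Download: kibana-7.10.1, Windows build

8.1 Edit kibana.yml in the config folder

# URL of the Elasticsearch instance to use for all queries.

elasticsearch.hosts: ["http://localhost:9200"]

# Supported languages: English - en (default), Chinese - zh-CN.

i18n.locale: "zh-CN"

8.2 Start Kibana

# Start from the bin directory

D:\xxxx\kibana-7.10.1\bin>kibana.bat

8.3 Open http://localhost:5601/ in the browser address bar

8.4 Startup error log

No errors encountered so far.

Closing notes

When it came to the final log output, however, nothing showed up. I suspected this was because durable had been set to true in the Logstash config above, so I changed it back (there are some subtleties here):

durable => true changed back to false

To confirm whether persistence was the cause, I changed the config again and restarted. Logs now flow from Logstash to ES, and Logstash also reports a reply code:

reply-code=200. With durable set to true earlier, no reply code was reported at all.

And with that, a simple microservice log center is up and running. Go and try it!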