ubuntu20.04+ROS noetic在线运行单USB双目ORB_SLAM

双目摄像头主要有以下几种,各有优缺点。

- 1.单USB插口,左右图像单独输出

- 2.双USB插口,左右图像单独输出(可能存在同步性问题)

- 3.双USB插口,左右图像合成输出

- 4.单USB插口,左右图像合成输出

官方版本的ORB SLAM2编译运行参考之前记录的博客

虽然在ubuntu22.04上编译和运行的,但我后来在ubuntu20.04上编译和运行,报错也都差不多,主要是OpenCV的版本问题,由于需要使用ROS在线运行,不建议使用OpenCV3,直接先安装ROS noetic,其自带OpenCV4.2.0版本,可不用自己再编译安装。

一、相机话题拆分

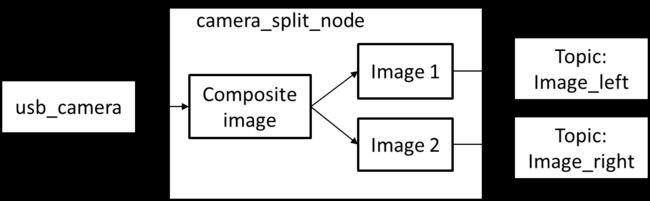

我的双目相机是单USB合成图像,然而ORM SLAM2双目ROS在订阅相机话题时,订阅的是左右图像两个节点,因此需要对USB相机话题进行拆封。

参考:

1. ROS调用USB双目摄像头模组

2. ROS&OpenCV下单目和双目摄像头的标定与使用

1. ROS调用自己的双目USB相机

安装usb_cam包

sudo apt install ros-noetic-usb-cam*

查看摄像头占用usb串口号(插上USB查看一次,拔掉USB再查看一次,可确定串口号)

ls /dev/video*

启动launch文件

cd /opt/ros/noetic/share/usb_cam/launch/

sudo gedit usb_cam-test.launch

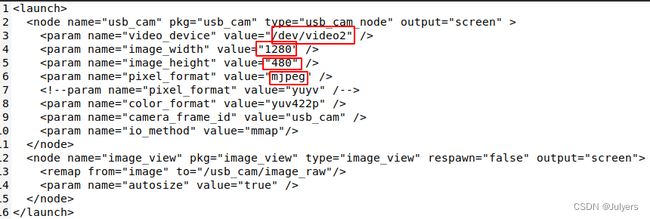

修改如上红框几个地方,主要有usb串口号、摄像头分辨率,以及摄像头的像素格式。默认分辨率是640x480,默认像素格式是yuyv,如果不修改的的话可能显示是花的,根据自己的相机修改即可。另外,同一个串口在关机重启后可能会发生变化,如果不显示,查询以后更改即可。

打开双目摄像头

roslaunch usb_cam usb_cam-test.launch

rostopic list

2. 分割双目相机图像,拆分rostopic

主要思路就是首先启动usb相机,然后新建camera_split节点,该节点订阅usb_cam/image_raw,然后分割双目相机图像,发布左图像和右图像两个节点。

创建工作空间并初始化(个人习惯放在Documents文件夹下)

mkdir -p catkin_ws/src

cd catkin_ws

catkin_make

进入src创建ROS包并添加依赖

cd src

catkin_create_pkg camera_split cv_bridge image_transport roscpp sensor_msgs std_msgs camera_info_manager

修改camera_split包的CMakeLists.txt文件,修改include_directories

find_package(OpenCV 4.2.0 REQUIRED)

#修改include_directories:

include_directories (

${catkin_INCLUDE_DIRS}

${OpenCV_INCLUDE_DIRS}

)

#添加可执行文件

add_executable(camera_split_node src/camera_split.cpp )

#指定链接库

target_link_libraries(camera_split_node

${catkin_LIBRARIES}

${OpenCV_LIBRARIES}

)

创建源代码文件camera_split.cpp

#include

#include

#include

#include

#include

#include

#include

//#include

//#include

using namespace std;

class CameraSplitter

{

public:

CameraSplitter():nh_("~"),it_(nh_)

{

image_sub_ = it_.subscribe("/usb_cam/image_raw", 1, &CameraSplitter::imageCallback, this);

image_pub_left_ = it_.advertiseCamera("/left_cam/image_raw", 1);

image_pub_right_ = it_.advertiseCamera("/right_cam/image_raw", 1);

cinfo_ =boost::shared_ptr(new camera_info_manager::CameraInfoManager(nh_));

//读取参数服务器参数,得到左右相机参数文件的位置

string left_cal_file = nh_.param("left_cam_file", "");

string right_cal_file = nh_.param("right_cam_file", "");

if(!left_cal_file.empty())

{

if(cinfo_->validateURL(left_cal_file)) {

cout<<"Load left camera info file: "<loadCameraInfo(left_cal_file);

ci_left_ = sensor_msgs::CameraInfoPtr(new sensor_msgs::CameraInfo(cinfo_->getCameraInfo()));

}

else {

cout<<"Can't load left camera info file: "<validateURL(right_cal_file)) {

cout<<"Load right camera info file: "<loadCameraInfo(right_cal_file);

ci_right_ = sensor_msgs::CameraInfoPtr(new sensor_msgs::CameraInfo(cinfo_->getCameraInfo()));

}

else {

cout<<"Can't load right camera info file: "<image(cv::Rect(0,0,cv_ptr->image.cols/2, cv_ptr->image.rows));

rightImgROI_=cv_ptr->image(cv::Rect(cv_ptr->image.cols/2,0, cv_ptr->image.cols/2, cv_ptr->image.rows ));

//创建两个CvImage, 用于存放原始图像的左右部分。CvImage创建时是对Mat进行引用的,不会进行数据拷贝

leftImgPtr_=cv_bridge::CvImagePtr(new cv_bridge::CvImage(cv_ptr->header, cv_ptr->encoding,leftImgROI_) );

rightImgPtr_=cv_bridge::CvImagePtr(new cv_bridge::CvImage(cv_ptr->header, cv_ptr->encoding,rightImgROI_) );

//发布到/left_cam/image_raw和/right_cam/image_raw

ci_left_->header = cv_ptr->header; //很重要,不然会提示不同步导致无法去畸变

ci_right_->header = cv_ptr->header;

sensor_msgs::ImagePtr leftPtr =leftImgPtr_->toImageMsg();

sensor_msgs::ImagePtr rightPtr =rightImgPtr_->toImageMsg();

leftPtr->header=msg->header; //很重要,不然输出的图象没有时间戳

rightPtr->header=msg->header;

image_pub_left_.publish(leftPtr,ci_left_);

image_pub_right_.publish(rightPtr,ci_right_);

}

private:

ros::NodeHandle nh_;

image_transport::ImageTransport it_;

image_transport::Subscriber image_sub_;

image_transport::CameraPublisher image_pub_left_;

image_transport::CameraPublisher image_pub_right_;

boost::shared_ptr cinfo_;

sensor_msgs::CameraInfoPtr ci_left_;

sensor_msgs::CameraInfoPtr ci_right_;

cv::Mat leftImgROI_;

cv::Mat rightImgROI_;

cv_bridge::CvImagePtr leftImgPtr_=NULL;

cv_bridge::CvImagePtr rightImgPtr_=NULL;

};

int main(int argc,char** argv)

{

ros::init(argc,argv, "camera_split");

CameraSplitter cameraSplitter;

ros::spin();

return 0;

}

创建launch文件

<launch>

<node pkg="camera_split" type="camera_split_node" name="camera_split_node" output="screen" />

<node pkg="image_view" type="image_view" name="image_view_left" respawn="false" output="screen">

<remap from="image" to="/left_cam/image_raw"/>

<param name="autosize" value="true" />

</node>

<node pkg="image_view" type="image_view" name="image_view_right" respawn="false" output="screen">

<remap from="image" to="/right_cam/image_raw"/>

<param name="autosize" value="true" />

</node>

</launch>

运行(运行之前先启动usb_cam)

cd catkin_ws

catkin_make

source ./devel/setup.bash

roslaunch camera_split camera_split_no_calibration.launch

二、创建双目相机参数文件

1. 棋盘格图像获取

拆分左右相机图像,按空格键捕获

- main.cpp

#include

#include

#include

#include

#include

#include

#include

#include

using namespace std;

using namespace cv;

//双目摄像头支持2560x720, 1280x480,640x240

#define FRAME_WIDTH 2560

#define FRAME_HEIGHT 960

const char* keys =

{

"{help h usage ? | | print this message}"

"{@video | | Video file, if not defined try to use webcamera}"

};

int main(int argc, char** argv)

{

CommandLineParser parser(argc, argv, keys);

parser.about("Video Capture");

if (parser.has("help"))

{

parser.printMessage();

return 0;

}

String videoFile = parser.get(0);

if (!parser.check())

{

parser.printErrors();

return 0;

}

VideoCapture cap;

if (videoFile != "")

{

cap.open(videoFile);

}

else

{

cap.open(0); //0-笔记本自带摄像头,1-外接usb双目摄像头

cap.set(CV_CAP_PROP_FRAME_WIDTH, FRAME_WIDTH); //设置捕获视频的宽度

cap.set(CV_CAP_PROP_FRAME_HEIGHT, FRAME_HEIGHT); //设置捕获视频的高度

}

if (!cap.isOpened())

{

cout << "摄像头打开失败!" << endl;

return -1;

}

Mat frame, frame_L, frame_R;

cap >> frame; //从相机捕获一帧

Mat grayImage;

double fScale = 1.;

Size dsize = Size(frame.cols*fScale, frame.rows*fScale);

Mat imagedst = Mat(dsize, CV_32S);

resize(frame, imagedst, dsize);

char key;

char image_left[200];

char image_right[200];

int cap_count = 0;

int count = 0;

int count1 = 0;

int count2 = 0;

namedWindow("图片1", 1);

namedWindow("图片2", 1);

while(1)

{

key = waitKey(50);

cap >> frame;

count++;

resize(frame, imagedst, dsize);

frame_L = imagedst(Rect(0, 0, FRAME_WIDTH/2, FRAME_HEIGHT));

namedWindow("Video_L", 1);

imshow("Video_L", frame_L);

frame_R = imagedst(Rect(FRAME_WIDTH/2, 0, FRAME_WIDTH/2, FRAME_HEIGHT));

namedWindow("Video_R", 1);

imshow("Video_R", frame_R);

if (key == 27)

break;

if (key == 32) //使用空格键拍照

//if (0 == (count % 100)) //每5秒定时拍照

{

snprintf(image_left, sizeof(image_left), "/home/juling/Documents/CLionProjects/binocular_calibration/images3/left/left%02d.jpg", ++count1);

imwrite(image_left, frame_L);

imshow("图片1", frame_L);

snprintf(image_right, sizeof(image_right), "/home/juling/Documents/CLionProjects/binocular_calibration/images3/right/right%02d.jpg", ++count2);

imwrite(image_right, frame_R);

imshow("图片2", frame_R);

}

}

cap.release();

return 0;

}

- CmakeLists.txt

cmake_minimum_required(VERSION 3.21)

project(binocular_calibration)

set(CMAKE_CXX_STANDARD 11)

find_package( OpenCV 3.4.12 REQUIRED )

include_directories( ${OpenCV_INCLUDE_DIRS} )

aux_source_directory(. DIR_SRCS)

#add_executable(demo ${DIR_SRCS})

add_executable(binocular_calibration main.cpp)

target_link_libraries( binocular_calibration ${OpenCV_LIBS} )

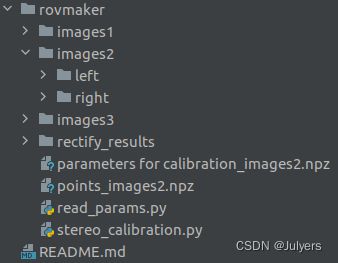

2. 双目标定

OpenCV标定

# -*- coding: utf-8 -*-

import os.path

import numpy as np

import cv2

import glob

def draw_parallel_lines(limg, rimg):

HEIGHT = limg.shape[0]

WIDTH = limg.shape[1]

img = np.zeros((HEIGHT, WIDTH * 2 + 20, 3))

img[:, :WIDTH, :] = limg

img[:, -WIDTH:, :] = rimg

for i in range(int(HEIGHT / 32)):

img[i * 32, :, :] = 255

return img

# monocular camera calibration

criteria = (cv2.TERM_CRITERIA_EPS + cv2.TERM_CRITERIA_MAX_ITER, 30, 0.001)

objp = np.zeros((5 * 5, 3), np.float32)

objp[:, :2] = np.mgrid[0:5, 0:5].T.reshape(-1, 2)

objp = objp * 100 # 棋盘格方格100mm

objpoints = []

imgpoints1 = []

imgpoints2 = []

root_path ='./images2'

subfix = 'images2'

image_id = 12

# 20230828 Julyer

# 左相机imgpoints1与右相机imgpoints2的维度不一样导致报错

left_imgs = glob.glob(root_path + '/left/*.jpg')

right_imgs = glob.glob(root_path + '/right/*.jpg')

for name in left_imgs:

img_id = name.split('left')[-1]

left_img = cv2.imread(name)

right_img = cv2.imread(root_path + '/right/right' + img_id)

gray1 = cv2.cvtColor(left_img, cv2.COLOR_BGR2GRAY)

gray2 = cv2.cvtColor(right_img, cv2.COLOR_BGR2GRAY)

ret1, corners1 = cv2.findChessboardCorners(gray1, (5, 5), cv2.CALIB_CB_ADAPTIVE_THRESH | cv2.CALIB_CB_FILTER_QUADS)

ret2, corners2 = cv2.findChessboardCorners(gray2, (5, 5), cv2.CALIB_CB_ADAPTIVE_THRESH | cv2.CALIB_CB_FILTER_QUADS)

if ret1 and ret2:

objpoints.append(objp)

corners11 = cv2.cornerSubPix(gray1, corners1, (11, 11), (-1, -1), criteria)

corners22 = cv2.cornerSubPix(gray2, corners2, (11, 11), (-1, -1), criteria)

imgpoints1.append(corners11)

imgpoints2.append(corners22)

# img1 = cv2.drawChessboardCorners(left_img, (5, 5), corners11, ret1)

# img2 = cv2.drawChessboardCorners(right_img, (5, 5), corners22, ret2)

# cv2.imshow('left corners', img1)

# cv2.imshow('right corners', img2)

# cv2.waitKey(1)

elif not ret1:

print('left' + img_id + ' couldn\'t be found')

elif not ret2:

print('right' + img_id + ' couldn\'t be found')

ret_l, mtx_l, dist_l, rvecs_l, tvecs_l = cv2.calibrateCamera(objpoints, imgpoints1, gray1.shape[::-1], None, None)

ret_r, mtx_r, dist_r, rvecs_r, tvecs_r = cv2.calibrateCamera(objpoints, imgpoints2, gray2.shape[::-1], None, None)

print('left ret: ', ret_l)

print('right ret: ', ret_r)

# binocular camera calibration

ret, mtx_l, dist_l, mtx_r, dist_r, R, T, E, F = cv2.stereoCalibrate(objpoints, imgpoints1, imgpoints2, mtx_l, dist_l,

mtx_r, dist_r, gray1.shape[::-1])

np.savez("./parameters for calibration_" + subfix + ".npz", ret=ret, mtx_l=mtx_l, mtx_r=mtx_r, dist_l=dist_l, dist_r=dist_r, R=R, T=T, E=E, F=F)

np.savez("./points_" + subfix + ".npz", objpoints=objpoints, imgpoints1=imgpoints1, imgpoints2=imgpoints2)

print('\nintrinsic matrix of left camera=', mtx_l)

print('\nintrinsic matrix of right camera=', mtx_r)

print('\ndistortion coefficients of left camera=', dist_l)

print('\ndistortion coefficients of right camera=', dist_r)

print('\nTransformation from left camera to right:')

print('\nR=', R)

print('\nT=', T)

print('\nReprojection Error=', ret)

# stereo rectification

R1, R2, P1, P2, Q, ROI1, ROI2 = cv2.stereoRectify(mtx_l, dist_l, mtx_r, dist_r, gray1.shape[::-1], R, T, flags=0, alpha=-1)

# undistort rectifying mapping

map1_l, map2_l = cv2.initUndistortRectifyMap(mtx_l, dist_l, R1, P1, gray1.shape[::-1], cv2.CV_16SC2) # cv2.CV_32FC1

map1_r, map2_r = cv2.initUndistortRectifyMap(mtx_r, dist_r, R2, P2, gray2.shape[::-1], cv2.CV_16SC2)

print('\nmap1_r size', np.shape(map1_r))

print('\nmap2_r size', np.shape(map2_r))

# undistort the original image, take img#12 as an example

left_id = cv2.imread(root_path + '/left/left' + str(image_id) + '.jpg')

right_id = cv2.imread(root_path + '/right/right' + str(image_id) + '.jpg')

dst_l = cv2.remap(left_id, map1_l, map2_l, cv2.INTER_LINEAR) # cv2.INTER_CUBIC

dst_r = cv2.remap(right_id, map1_r, map2_r, cv2.INTER_LINEAR)

cv2.imshow('map dst_r', dst_r)

cv2.waitKey(0)

print('\ndst_r size', np.shape(dst_r))

img_merge = draw_parallel_lines(dst_l, dst_r)

# cv2.imwrite('./rectify_results/left03(rectified).jpg', dst_l)

# cv2.imwrite('./rectify_results/right03(rectified).jpg', dst_r)

cv2.imwrite('rectify_results/rectify' + str(image_id) + '_' + subfix + '.jpg', img_merge)

print('\nrectification has been done successfully.')

np.savez("./rectify_results/parameters for rectification_" + subfix +".npz", R1=R1, R2=R2, P1=P1, P2=P2, Q=Q, ROI1=ROI1, ROI2=ROI2)

print('\nR1=', R1)

print('\nR2=', R2)

print('\nP1=', P1)

print('\nP2=', P2)

print('\nQ=', Q)

print('\nROI1=', ROI1)

print('\nROI2=', ROI2)

标定结果:

/usr/bin/python3.8 /home/juling/Documents/PycharmProjects/Stereo-master/rovmaker/stereo_calibration.py

left ret: 0.3898234269642049

right ret: 0.4078028378647591

intrinsic matrix of left camera= [[840.80247861 0. 667.37621909]

[ 0. 840.1220566 519.95457746]

[ 0. 0. 1. ]]

intrinsic matrix of right camera= [[838.1562009 0. 677.06068936]

[ 0. 836.94290586 500.83733639]

[ 0. 0. 1. ]]

distortion coefficients of left camera= [[-0.00459317 0.03249505 0.00071983 0.00213802 0.02668156]]

distortion coefficients of right camera= [[ 0.00872802 -0.01583376 -0.00164319 0.00104224 0.08360213]]

Transformation from left camera to right:

R= [[ 9.99981316e-01 4.00224781e-04 -6.09985120e-03]

[-3.85052048e-04 9.99996830e-01 2.48836542e-03]

[ 6.10082777e-03 -2.48597017e-03 9.99978300e-01]]

T= [[-57.64570079]

[ -0.7422294 ]

[ 0.41023682]]

Reprojection Error= 27.596230140236862

rectification has been done successfully.

R1= [[ 0.99982475 0.01329215 -0.01318275]

[-0.01327549 0.99991097 0.00135007]

[ 0.01319952 -0.00117483 0.99991219]]

R2= [[ 0.9998918 0.01287432 -0.00711575]

[-0.01288329 0.99991627 -0.0012167 ]

[ 0.00709949 0.00130824 0.99997394]]

P1= [[838.53248123 0. 684.23641968 0. ]

[ 0. 838.53248123 506.49901199 0. ]

[ 0. 0. 1. 0. ]]

P2= [[ 8.38532481e+02 0.00000000e+00 6.81501434e+02 -4.83430231e+04]

[ 0.00000000e+00 8.38532481e+02 5.06499012e+02 0.00000000e+00]

[ 0.00000000e+00 0.00000000e+00 1.00000000e+00 0.00000000e+00]]

Q= [[ 1.00000000e+00 0.00000000e+00 0.00000000e+00 -6.84236420e+02]

[ 0.00000000e+00 1.00000000e+00 0.00000000e+00 -5.06499012e+02]

[ 0.00000000e+00 0.00000000e+00 0.00000000e+00 8.38532481e+02]

[ 0.00000000e+00 0.00000000e+00 1.73454705e-02 -4.74396078e-02]]

ROI1= (27, 13, 1221, 909)

ROI2= (31, 38, 1213, 903)

Process finished with exit code 0

3. 创建yaml参数文件

参考:https://blog.csdn.net/weixin_37918890/article/details/95626004

%YAML:1.0

#--------------------------------------------------------------------------------------------

# Camera Parameters. Adjust them!

#--------------------------------------------------------------------------------------------

# Camera calibration and distortion parameters (OpenCV)

# Pr矩阵中的值(参考:https://blog.csdn.net/weixin_37918890/article/details/95626004)

Camera.fx: 8.38532481e+02

Camera.fy: 8.38532481e+02

Camera.cx: 6.81501434e+02

Camera.cy: 5.06499012e+02

Camera.k1: 0.0

Camera.k2: 0.0

Camera.p1: 0.0

Camera.p2: 0.0

Camera.width: 1280

Camera.height: 960

# Camera frames per second

Camera.fps: 20.0

# stereo baseline times fx

# Pr中的值,单位转为m,取绝对值

Camera.bf: 48.3430231

# Color order of the images (0: BGR, 1: RGB. It is ignored if images are grayscale)

Camera.RGB: 1

# Close/Far threshold. Baseline times.

ThDepth: 18

#--------------------------------------------------------------------------------------------

# Stereo Rectification. Only if you need to pre-rectify the images.

# Camera.fx, .fy, etc must be the same as in LEFT.P

#--------------------------------------------------------------------------------------------

LEFT.height: 960

LEFT.width: 1280

LEFT.D: !!opencv-matrix

rows: 1

cols: 5

dt: d

data:[-0.00459317, 0.03249505, 0.00071983, 0.00213802, 0.02668156]

LEFT.K: !!opencv-matrix

rows: 3

cols: 3

dt: d

data: [840.80247861, 0., 667.37621909, 0.0, 840.1220566, 519.95457746, 0.0, 0.0, 1.0]

LEFT.R: !!opencv-matrix

rows: 3

cols: 3

dt: d

data: [ 0.99982475, 0.01329215, -0.01318275, -0.01327549, 0.99991097, 0.00135007, 0.01319952, -0.00117483, 0.99991219]

LEFT.P: !!opencv-matrix

rows: 3

cols: 4

dt: d

data: [838.53248123, 0. , 684.23641968, 0. , 0. , 838.53248123, 506.49901199, 0. , 0. , 0. , 1. , 0.]

RIGHT.height: 960

RIGHT.width: 1280

RIGHT.D: !!opencv-matrix

rows: 1

cols: 5

dt: d

data:[0.00872802, -0.01583376, -0.00164319, 0.00104224, 0.08360213]

RIGHT.K: !!opencv-matrix

rows: 3

cols: 3

dt: d

data: [838.1562009, 0., 677.06068936, 0.0, 836.94290586, 500.83733639, 0.0, 0.0, 1]

RIGHT.R: !!opencv-matrix

rows: 3

cols: 3

dt: d

data: [0.9998918, 0.01287432, -0.00711575, -0.01288329, 0.99991627, -0.0012167, 0.00709949, 0.00130824, 0.99997394]

# -4.83430231e+04转为m单位,即-4.83430231e+01

RIGHT.P: !!opencv-matrix

rows: 3

cols: 4

dt: d

data: [8.38532481e+02, 0.00000000e+00, 6.81501434e+02, -4.83430231e+01, 0, 8.38532481e+02, 5.06499012e+02, 0, 0, 0, 1, 0]

#--------------------------------------------------------------------------------------------

# ORB Parameters

#--------------------------------------------------------------------------------------------

# ORB Extractor: Number of features per image

ORBextractor.nFeatures: 1200

# ORB Extractor: Scale factor between levels in the scale pyramid

ORBextractor.scaleFactor: 1.2

# ORB Extractor: Number of levels in the scale pyramid

ORBextractor.nLevels: 8

# ORB Extractor: Fast threshold

# Image is divided in a grid. At each cell FAST are extracted imposing a minimum response.

# Firstly we impose iniThFAST. If no corners are detected we impose a lower value minThFAST

# You can lower these values if your images have low contrast

ORBextractor.iniThFAST: 20

ORBextractor.minThFAST: 7

#--------------------------------------------------------------------------------------------

# Viewer Parameters

#--------------------------------------------------------------------------------------------

Viewer.KeyFrameSize: 0.05

Viewer.KeyFrameLineWidth: 1

Viewer.GraphLineWidth: 0.9

Viewer.PointSize:2

Viewer.CameraSize: 0.08

Viewer.CameraLineWidth: 3

Viewer.ViewpointX: 0

Viewer.ViewpointY: -0.7

Viewer.ViewpointZ: -1.8

Viewer.ViewpointF: 500

三、ROS在线运行ORB SLAM2建立稀疏地图

1. 修改订阅的相机话题为拆分后的话题

复制ros_stereo.cc为ros_stereo_rovmaker.cc,修改如下部分

ros::NodeHandle nh;

//message_filters::Subscriber<sensor_msgs::Image> left_sub(nh, "/camera/left/image_raw", 1);

//message_filters::Subscriber<sensor_msgs::Image> right_sub(nh, "camera/right/image_raw", 1);

message_filters::Subscriber<sensor_msgs::Image> left_sub(nh, "/left_cam/image_raw", 1);

message_filters::Subscriber<sensor_msgs::Image> right_sub(nh, "right_cam/image_raw", 1);

typedef message_filters::sync_policies::ApproximateTime<sensor_msgs::Image, sensor_msgs::Image> sync_pol;

message_filters::Synchronizer<sync_pol> sync(sync_pol(10), left_sub,right_sub);

sync.registerCallback(boost::bind(&ImageGrabber::GrabStereo,&igb,_1,_2));

2. 重新编译

export ROS_PACKAGE_PATH=${ROS_PACKAGE_PATH}:/home/juling/Documents/projects/ORB_SLAM2_binocular

chmod +x build_ros.sh

./build_ros.sh

3. 运行

rosrun ORB_SLAM2 StereoRovmaker Vocabulary/ORBvoc.txt Examples/ROS/ORB_SLAM2/rovmaker.yaml true

四、OpenCV在线运行ORB SLAM2建立稀疏地图

参考:十里桃园的博客

由于是单usb合成图像输出,这里修改了一下代码,输出左右帧。复制Example/Stereo/stereo_euroc.cc,修改为如下代码。

- stereo_euroc_slty.cc

/**

* This file is part of ORB-SLAM2.

*

* Copyright (C) 2014-2016 Raúl Mur-Artal (University of Zaragoza)

* For more information see

*

* ORB-SLAM2 is free software: you can redistribute it and/or modify

* it under the terms of the GNU General Public License as published by

* the Free Software Foundation, either version 3 of the License, or

* (at your option) any later version.

*

* ORB-SLAM2 is distributed in the hope that it will be useful,

* but WITHOUT ANY WARRANTY; without even the implied warranty of

* MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the

* GNU General Public License for more details.

*

* You should have received a copy of the GNU General Public License

* along with ORB-SLAM2. If not, see

#include

#include

#include

#include

#include

#include

#include

#include

#include

using namespace std::chrono;

using namespace std;

using namespace cv;

#define FRAME_WIDTH 2560

#define FRAME_HEIGHT 960

int main(int argc, char **argv)

{

// Retrieve paths to images

vector vstrImageLeft;

vector vstrImageRight;

vector vTimeStamp;

//LoadImages(string(argv[3]), string(argv[4]), string(argv[5]), vstrImageLeft, vstrImageRight, vTimeStamp);

//if(vstrImageLeft.empty() || vstrImageRight.empty())

// {

// cerr << "ERROR: No images in provided path." << endl;

// return 1;

//}

// if(vstrImageLeft.size()!=vstrImageRight.size())

// {

// cerr << "ERROR: Different number of left and right images." << endl;

// return 1;

// }

// Read rectification parameters

cv::FileStorage fsSettings(argv[2], cv::FileStorage::READ);

if(!fsSettings.isOpened())

{

cerr << "ERROR: Wrong path to settings" << endl;

return -1;

}

cv::Mat K_l, K_r, P_l, P_r, R_l, R_r, D_l, D_r;

fsSettings["LEFT.K"] >> K_l;

fsSettings["RIGHT.K"] >> K_r;

fsSettings["LEFT.P"] >> P_l;

fsSettings["RIGHT.P"] >> P_r;

fsSettings["LEFT.R"] >> R_l;

fsSettings["RIGHT.R"] >> R_r;

fsSettings["LEFT.D"] >> D_l;

fsSettings["RIGHT.D"] >> D_r;

int rows_l = fsSettings["LEFT.height"];

int cols_l = fsSettings["LEFT.width"];

int rows_r = fsSettings["RIGHT.height"];

int cols_r = fsSettings["RIGHT.width"];

if(K_l.empty() || K_r.empty() || P_l.empty() || P_r.empty() || R_l.empty() || R_r.empty() || D_l.empty() || D_r.empty() ||

rows_l==0 || rows_r==0 || cols_l==0 || cols_r==0)

{

cerr << "ERROR: Calibration parameters to rectify stereo are missing!" << endl;

return -1;

}

cv::Mat M1l,M2l,M1r,M2r;

cv::initUndistortRectifyMap(K_l,D_l,R_l,P_l.rowRange(0,3).colRange(0,3),cv::Size(cols_l,rows_l),CV_32F,M1l,M2l);

cv::initUndistortRectifyMap(K_r,D_r,R_r,P_r.rowRange(0,3).colRange(0,3),cv::Size(cols_r,rows_r),CV_32F,M1r,M2r);

// const int nImages = vstrImageLeft.size();

// Create SLAM system. It initializes all system threads and gets ready to process frames.

ORB_SLAM2::System SLAM(argv[1],argv[2],ORB_SLAM2::System::STEREO,true);

// Vector for tracking time statistics

vector vTimesTrack;

cout << endl << "-------" << endl;

cout << "Start processing camera ..." << endl;

cv::Mat imLeft, imRight, imLeftRect, imRightRect;

//***********************************************************************8

cv::VideoCapture cap(0, cv::CAP_V4L2);

if (!cap.isOpened())

{

cout << "摄像头打开失败!" << endl;

return -1;

}

else

{

cap.open(0, cv::CAP_V4L2); //0-笔记本自带摄像头,1-外接usb双目摄像头

cap.set(cv::CAP_PROP_FRAME_WIDTH, FRAME_WIDTH); //设置捕获视频的宽度

cap.set(cv::CAP_PROP_FRAME_HEIGHT, FRAME_HEIGHT); //设置捕获视频的高度

cap.set(cv::CAP_PROP_FPS, 30);

}

cv::Mat frame;

cap >> frame; //从相机捕获一帧

cv::Mat grayImage;

double fScale = 1.;

cv::Size dsize = cv::Size(frame.cols*fScale, frame.rows*fScale);

cv::Mat imagedst = cv::Mat(dsize, CV_32S);

//***********************************************************************8

long int nImages = 0;

int ni=0;

// Main loop

while(ni>-1)

{

cap >> frame;

cv::resize(frame, imagedst, dsize);

imLeft = imagedst(cv::Rect(0, 0, FRAME_WIDTH/2, FRAME_HEIGHT));

imRight = imagedst(cv::Rect(FRAME_WIDTH/2, 0, FRAME_WIDTH/2, FRAME_HEIGHT));

//***********************************************************************8

if(imLeft.empty())

{

cerr << endl << "Check Left Camera!! "<< endl;

return 1;

}

if(imRight.empty())

{

cerr << endl << "Check Right Camera!! "<< endl;

return 1;

}

cv::remap(imLeft,imLeftRect,M1l,M2l,cv::INTER_LINEAR);

cv::remap(imRight,imRightRect,M1r,M2r,cv::INTER_LINEAR);

time_point now = system_clock::now();

double tframe = now.time_since_epoch().count();

vTimeStamp.push_back(tframe);

#ifdef COMPILEDWITHC11

std::chrono::steady_clock::time_point t1 = std::chrono::steady_clock::now();

#else

std::chrono::monotonic_clock::time_point t1 = std::chrono::monotonic_clock::now();

#endif

// Pass the images to the SLAM system

SLAM.TrackStereo(imLeftRect,imRightRect,tframe);

#ifdef COMPILEDWITHC11

std::chrono::steady_clock::time_point t2 = std::chrono::steady_clock::now();

#else

std::chrono::monotonic_clock::time_point t2 = std::chrono::monotonic_clock::now();

#endif

double ttrack= std::chrono::duration_cast >(t2 - t1).count();

vTimesTrack.push_back(ttrack);

// Wait to load the next frame

/*

double T=0;

if(ni0)

T = tframe-vTimeStamp[ni-1];

if(ttrack - /ORB_SLAM2_binocular/CmakeLists.txt

set(CMAKE_RUNTIME_OUTPUT_DIRECTORY ${PROJECT_SOURCE_DIR}/Examples/Stereo)

add_executable(stereo_kitti

Examples/Stereo/stereo_kitti.cc)

target_link_libraries(stereo_kitti ${PROJECT_NAME})

add_executable(stereo_euroc

Examples/Stereo/stereo_euroc.cc)

target_link_libraries(stereo_euroc ${PROJECT_NAME})

# 增加下面几行

add_executable(stereo_euroc_slty

Examples/Stereo/stereo_euroc_slty.cc)

target_link_libraries(stereo_euroc_slty ${PROJECT_NAME})

重新编译

export ROS_PACKAGE_PATH=${ROS_PACKAGE_PATH}:/home/juling/Documents/projects/ORB_SLAM2_binocular

chmod +x build.sh

./build.sh

运行

./Examples/Stereo/stereo_euroc_slty Vocabulary/ORBvoc.txt Examples/ROS/ORB_SLAM2/rovmaker.yaml

yaml文件中的特征点数量ORBextractor.nFeatures从1200改成了2500,初始化的时候要慢一些,相机移动速度要平稳。

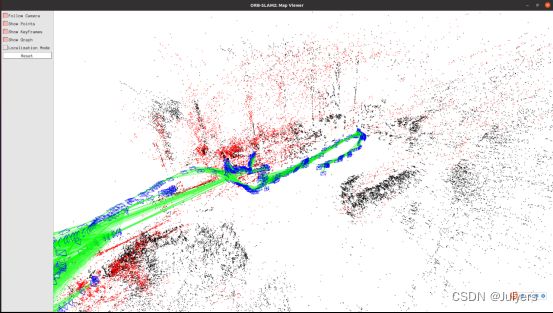

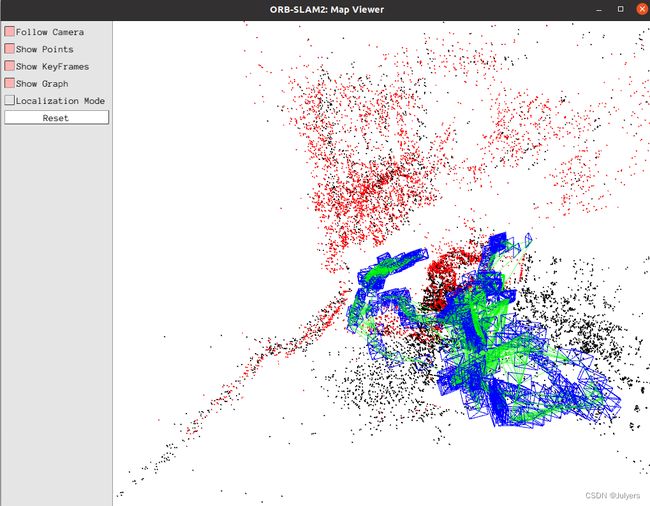

办公室稀疏建图结果: