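kube-proxy Modes: iptables

Note: this article is based on Kubernetes v1.21.2.

1 Default Mode

When kube-proxy is deployed with its default configuration, it uses iptables as the proxier. The startup log confirms this: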

I0814 13:42:49.260381 1 node.go:172] Successfully retrieved node IP: 192.168.0.111

I0814 13:42:49.260462 1 server_others.go:140] Detected node IP 192.168.0.111

W0814 13:42:49.260622 1 server_others.go:598] Unknown proxy mode "", assuming iptables proxy

I0814 13:42:49.478905 1 server_others.go:206] kube-proxy running in dual-stack mode, IPv4-primary

I0814 13:42:49.479023 1 server_others.go:212] Using iptables Proxier.

I0814 13:42:49.479032 1 server_others.go:219] creating dualStackProxier for iptables.

W0814 13:42:49.479258 1 server_others.go:512] detect-local-mode set to ClusterCIDR, but no IPv6 cluster CIDR defined, , defaulting to no-op detect-local for IPv6

I0814 13:42:49.480464 1 server.go:643] Version: v1.21.2

2 About iptables

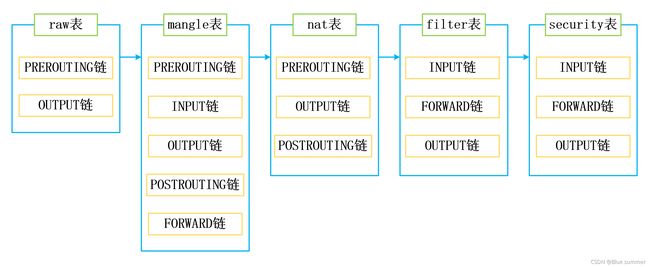

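iptables is a packet-filtering firewall system integrated with the Linux kernel. It is commonly described as having five tables and five chains. The tables are consulted in the order raw -> mangle -> nat -> filter -> security:

- raw table, two chains: PREROUTING, OUTPUT

Purpose: decides whether a packet is handled by connection tracking (conntrack).

- mangle table, five chains: PREROUTING, INPUT, OUTPUT, POSTROUTING, FORWARD

Purpose: modifies packet fields such as type of service (TOS), TTL, and QoS-related marks.

- nat table, four chains on current kernels: PREROUTING, INPUT, OUTPUT, POSTROUTING (older references list three, without INPUT)

Purpose: network address translation.

- filter table, three chains: INPUT, FORWARD, OUTPUT

Purpose: packet filtering.

- security table, three chains: INPUT, FORWARD, OUTPUT

Purpose: applies security marks to connections, for example SECMARK and CONNSECMARK.

Of these, mangle and security are rarely used, and raw is mainly used for debugging iptables rules, so the tables that matter for kube-proxy are nat and filter. Listing the iptables rules confirms this: the other three tables contain no rules.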

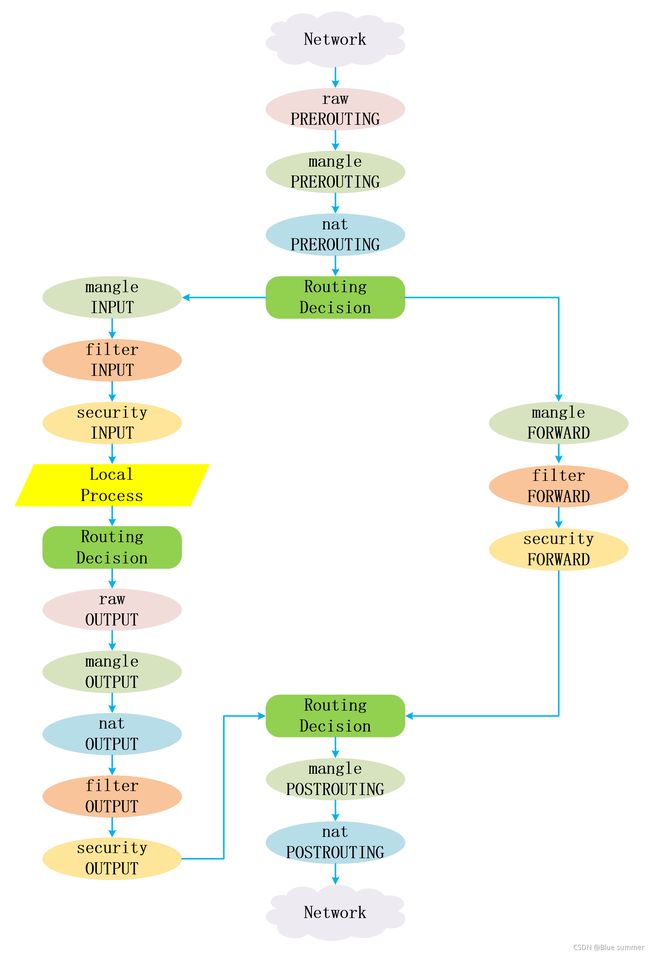

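As for the chains, they are traversed in this order:

- Packets for the local host: PREROUTING -> INPUT on the way in, then OUTPUT -> POSTROUTING for packets the host sends out

- Forwarded packets: PREROUTING -> FORWARD -> POSTROUTING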
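
The table order and the chain order above can be combined to predict which (table, chain) pairs a forwarded packet meets. The following is a minimal sketch, not kernel code: `TABLE_CHAINS` and `forwarded_traversal` are hypothetical names, and the model only covers the forwarded path (on the local INPUT path the real netfilter hook priorities place nat differently, so this simple ordering would not apply there).

```python
# Table order as listed in this article.
TABLE_ORDER = ["raw", "mangle", "nat", "filter", "security"]

# Which built-in chains each table provides.
# nat also has INPUT on current kernels; it plays no role on the forwarded path.
TABLE_CHAINS = {
    "raw": {"PREROUTING", "OUTPUT"},
    "mangle": {"PREROUTING", "INPUT", "FORWARD", "OUTPUT", "POSTROUTING"},
    "nat": {"PREROUTING", "INPUT", "OUTPUT", "POSTROUTING"},
    "filter": {"INPUT", "FORWARD", "OUTPUT"},
    "security": {"INPUT", "FORWARD", "OUTPUT"},
}

# Chain order for a forwarded packet, as given in the list above.
FORWARD_PATH = ["PREROUTING", "FORWARD", "POSTROUTING"]


def forwarded_traversal():
    """Return (table, chain) pairs in the order a forwarded packet meets them."""
    return [
        (table, chain)
        for chain in FORWARD_PATH
        for table in TABLE_ORDER
        if chain in TABLE_CHAINS[table]
    ]


if __name__ == "__main__":
    for table, chain in forwarded_traversal():
        print(f"{table}:{chain}")
```

A table only shows up in a real trace if it is loaded on the node, so a pair such as security:FORWARD may be absent in practice.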

The two figures below give a more intuitive view of the five tables and five chains.

For the packet flow itself, the following figure describes the process more clearly.

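3 CoreDNS Data Flow

Taking the coredns service as an example, let us see how a DNS request travels through the iptables rules from another pod to the coredns pod.

Because a cluster contains a large number of iptables rules, we use the raw table to trace rules, which makes the packet flow easy to follow. For details, see the article "CentOS通过raw表实现iptables日志输出和调试" (using the raw table on CentOS for iptables log output and debugging).

We therefore create the following two rules on the node that hosts the test container: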

[root@node1 ~]# iptables -t raw -A PREROUTING -p udp -s 10.244.1.62 --dport 53 -j TRACE

[root@node1 ~]# iptables -t raw -A OUTPUT -p udp -s 10.244.1.62 --dport 53 -j TRACE

[root@node1 ~]# iptables -t raw -vnL

Chain PREROUTING (policy ACCEPT 174 packets, 18916 bytes)

pkts bytes target prot opt in out source destination

0 0 TRACE udp -- * * 10.244.1.62 0.0.0.0/0 udp dpt:53

Chain OUTPUT (policy ACCEPT 78 packets, 7085 bytes)

pkts bytes target prot opt in out source destination

0 0 TRACE udp -- * * 10.244.1.62 0.0.0.0/0 udp dpt:53

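Here 10.244.1.62 is the IP of the test container. The more specific the rule, the easier it is to pick out our packets; otherwise the log fills up with unrelated entries.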
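
TRACE rules keep logging until they are removed, so it can help to generate the add and delete commands together. The sketch below only builds command strings and does not run them; `trace_rule_commands` is a hypothetical helper, and the delete form simply swaps `-A` for `-D` with the same match arguments.

```python
def trace_rule_commands(pod_ip, proto="udp", dport=53, action="-A"):
    """Build the raw-table TRACE commands; action is "-A" to add or "-D" to delete."""
    commands = []
    for chain in ("PREROUTING", "OUTPUT"):
        argv = [
            "iptables", "-t", "raw", action, chain,
            "-p", proto, "-s", pod_ip, "--dport", str(dport),
            "-j", "TRACE",
        ]
        commands.append(" ".join(argv))
    return commands


if __name__ == "__main__":
    # Print the commands to add the rules, then the ones to clean them up.
    for cmd in trace_rule_commands("10.244.1.62"):
        print(cmd)
    for cmd in trace_rule_commands("10.244.1.62", action="-D"):
        print(cmd)
```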

Next, enter the test container with kubectl exec and run ping:

[root@master ~]# kubectl exec -it centos-79456f6db-9wzc5 -n kubernetes-dashboard -- /bin/bash

[root@centos-79456f6db-9wzc5 /]# ping -c 1 www.baidu.com.

PING www.a.shifen.com (14.215.177.39) 56(84) bytes of data.

64 bytes from 14.215.177.39 (14.215.177.39): icmp_seq=1 ttl=53 time=19.2 ms

--- www.a.shifen.com ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 19.240/19.240/19.240/0.000 ms

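Two points to note:

- Send only one ping packet; otherwise there are too many log entries

- End the domain name with a dot; otherwise the resolver first looks the name up under the cluster's search domains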
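
The effect of the trailing dot can be illustrated with a small model of how a resolver expands names using `search` and `ndots` from /etc/resolv.conf. This is a simplified sketch of the usual glibc-style behavior, not the resolver's actual code. The search list below is an assumption: it uses the pod's namespace from this article and the default cluster domain `cluster.local`, and `ndots=5` is the value Kubernetes normally writes for pods.

```python
# Assumed search list for a pod in the kubernetes-dashboard namespace.
SEARCH = [
    "kubernetes-dashboard.svc.cluster.local",
    "svc.cluster.local",
    "cluster.local",
]


def query_candidates(name, search, ndots=5):
    """Return the names a resolver would try, in order, for the given input."""
    if name.endswith("."):
        # Fully qualified: queried as-is, search list skipped.
        return [name]
    absolute = name + "."
    expanded = [f"{name}.{domain}." for domain in search]
    if name.count(".") >= ndots:
        # Enough dots: try the name itself first, then the search list.
        return [absolute] + expanded
    # Too few dots: walk the search list first, the name itself last.
    return expanded + [absolute]


if __name__ == "__main__":
    print(query_candidates("www.baidu.com.", SEARCH))
    print(query_candidates("www.baidu.com", SEARCH))
```

Without the trailing dot, `www.baidu.com` has only two dots, so under these assumptions three in-cluster lookups would be traced before the real one.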

Then check the packet flow in the system log /var/log/messages. The log below is trimmed, since we only care about the path the packet takes:

node1 kernel: TRACE: raw:PREROUTING:policy:2

node1 kernel: TRACE: mangle:PREROUTING:policy:1

node1 kernel: TRACE: nat:PREROUTING:rule:1

node1 kernel: TRACE: nat:KUBE-SERVICES:rule:10

node1 kernel: TRACE: nat:KUBE-SVC-TCOU7JCQXEZGVUNU:rule:1

node1 kernel: TRACE: nat:KUBE-SEP-MK55K7XS7VKFSUGB:rule:2

node1 kernel: TRACE: mangle:FORWARD:policy:1

node1 kernel: TRACE: filter:FORWARD:rule:1

node1 kernel: TRACE: filter:KUBE-FORWARD:return:5

node1 kernel: TRACE: filter:FORWARD:rule:2

node1 kernel: TRACE: filter:KUBE-SERVICES:return:1

node1 kernel: TRACE: filter:FORWARD:rule:3

node1 kernel: TRACE: filter:KUBE-EXTERNAL-SERVICES:return:1

node1 kernel: TRACE: filter:FORWARD:rule:4

node1 kernel: TRACE: filter:DOCKER-USER:return:1

node1 kernel: TRACE: filter:FORWARD:rule:5

node1 kernel: TRACE: filter:DOCKER-ISOLATION-STAGE-1:return:2

node1 kernel: TRACE: filter:FORWARD:rule:15

node1 kernel: TRACE: mangle:POSTROUTING:policy:2

node1 kernel: TRACE: nat:POSTROUTING:rule:1

node1 kernel: TRACE: nat:KUBE-POSTROUTING:rule:1

node1 kernel: TRACE: nat:POSTROUTING:rule:8

node1 kernel: TRACE: nat:POSTROUTING:policy:12
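
Reading a long trace by eye gets tedious, so the log can be reduced to a list of hops. The sketch below assumes each line contains `TRACE: table:chain:type:rulenum` as in the trimmed log above; `parse_trace` and `nat_path` are hypothetical helper names.

```python
import re

# Matches the "TRACE: table:chain:type:rulenum" part of a kernel log line.
TRACE_RE = re.compile(
    r"TRACE: (?P<table>[\w-]+):(?P<chain>[\w-]+):(?P<kind>rule|return|policy):(?P<num>\d+)"
)


def parse_trace(lines):
    """Turn log lines into (table, chain, kind, rule number) tuples."""
    hops = []
    for line in lines:
        match = TRACE_RE.search(line)
        if match:
            hops.append(
                (match["table"], match["chain"], match["kind"], int(match["num"]))
            )
    return hops


def nat_path(hops):
    """Return the nat-table chains in the order they were first visited."""
    seen = []
    for table, chain, _kind, _num in hops:
        if table == "nat" and chain not in seen:
            seen.append(chain)
    return seen


if __name__ == "__main__":
    sample = [
        "node1 kernel: TRACE: raw:PREROUTING:policy:2",
        "node1 kernel: TRACE: nat:PREROUTING:rule:1",
        "node1 kernel: TRACE: nat:KUBE-SERVICES:rule:10",
        "node1 kernel: TRACE: nat:KUBE-SVC-TCOU7JCQXEZGVUNU:rule:1",
        "node1 kernel: TRACE: nat:KUBE-SEP-MK55K7XS7VKFSUGB:rule:2",
    ]
    print(nat_path(parse_trace(sample)))
```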

The part that deserves the most attention is the PREROUTING chain of the nat table. The corresponding iptables rules are extracted here:

Chain PREROUTING (policy ACCEPT 347 packets, 75317 bytes)

pkts bytes target prot opt in out source destination

969 136K KUBE-SERVICES all -- * * 0.0.0.0/0 0.0.0.0/0 /* kubernetes service portals */

Chain KUBE-SERVICES (2 references)

pkts bytes target prot opt in out source destination

...

280 22956 KUBE-SVC-TCOU7JCQXEZGVUNU udp -- * * 0.0.0.0/0 10.96.0.10 /* kube-system/kube-dns:dns cluster IP */ udp dpt:53

Chain KUBE-SVC-TCOU7JCQXEZGVUNU (1 references)

pkts bytes target prot opt in out source destination

280 22956 KUBE-SEP-MK55K7XS7VKFSUGB all -- * * 0.0.0.0/0 0.0.0.0/0 /* kube-system/kube-dns:dns */

Chain KUBE-SEP-MK55K7XS7VKFSUGB (1 references)

pkts bytes target prot opt in out source destination

...

280 22956 DNAT udp -- * * 0.0.0.0/0 0.0.0.0/0 /* kube-system/kube-dns:dns */ udp to:10.244.0.48:53

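From this we can see:

- A DNS request to coredns is initially addressed to the ClusterIP and ends up DNATed to the IP of the coredns pod

- Every Service in the cluster has corresponding iptables rules that route its requests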
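
The chain jumps listed above (KUBE-SERVICES -> KUBE-SVC-... -> KUBE-SEP-... -> DNAT) amount to a series of lookups. The toy model below uses the chain names and addresses from the rule listing; the dictionaries and the `dnat` function are illustrative only and say nothing about how the kernel or kube-proxy store rules.

```python
# KUBE-SERVICES: match on protocol, ClusterIP and port, jump to a service chain.
SERVICES = {("udp", "10.96.0.10", 53): "KUBE-SVC-TCOU7JCQXEZGVUNU"}

# KUBE-SVC-*: one jump per endpoint (a single endpoint here).
SVC_CHAINS = {"KUBE-SVC-TCOU7JCQXEZGVUNU": ["KUBE-SEP-MK55K7XS7VKFSUGB"]}

# KUBE-SEP-*: DNAT target, the pod IP and port.
SEP_CHAINS = {"KUBE-SEP-MK55K7XS7VKFSUGB": ("10.244.0.48", 53)}


def dnat(proto, dst_ip, dst_port):
    """Return the destination after the nat PREROUTING rules have been applied."""
    svc_chain = SERVICES.get((proto, dst_ip, dst_port))
    if svc_chain is None:
        # No service rule matched: the destination is left unchanged.
        return dst_ip, dst_port
    sep_chain = SVC_CHAINS[svc_chain][0]
    return SEP_CHAINS[sep_chain]


if __name__ == "__main__":
    print(dnat("udp", "10.96.0.10", 53))
```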

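How is load spread when a Service has several backends? To find out, we scale coredns to 3 replicas in the dashboard and look at the iptables rules again. Only the part that changes, the service chain, is shown here: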

Chain KUBE-SVC-TCOU7JCQXEZGVUNU (1 references)

pkts bytes target prot opt in out source destination

0 0 KUBE-SEP-MK55K7XS7VKFSUGB all -- * * 0.0.0.0/0 0.0.0.0/0 /* kube-system/kube-dns:dns */ statistic mode random probability 0.33333333349

0 0 KUBE-SEP-YEXLSZTEUWXX6KZK all -- * * 0.0.0.0/0 0.0.0.0/0 /* kube-system/kube-dns:dns */ statistic mode random probability 0.50000000000

0 0 KUBE-SEP-6XVRDQFXUVS4IFFJ all -- * * 0.0.0.0/0 0.0.0.0/0 /* kube-system/kube-dns:dns */

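The rules now carry a probability match, which is how iptables does load balancing. Interestingly, the probabilities are not what one might expect: with three endpoints each should get 1/3, yet 0.5 appears. The reason is that iptables evaluates rules from top to bottom. The first rule matches with probability 1/3. A packet that reaches the second rule has only two candidates left, so that rule uses 1/2. The last rule has no probability match and catches everything that remains, that is, 100%. Overall each endpoint still receives 1/3 of the traffic; for example, the second one gets (1 - 1/3) × 1/2 = 1/3.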
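
The same reasoning extends to any number of endpoints: rule i (counting from 0) of n gets probability 1/(n - i), and the overall share of every endpoint comes out to 1/n. The sketch below checks this with exact fractions. The function names are hypothetical, and this is a worked calculation of the scheme described above, not kube-proxy's source code.

```python
from fractions import Fraction


def rule_probabilities(n):
    """Per-rule match probability for n endpoints, evaluated top to bottom."""
    return [Fraction(1, n - i) for i in range(n)]


def effective_shares(n):
    """Overall fraction of traffic that each endpoint receives."""
    shares = []
    remaining = Fraction(1)  # traffic not yet claimed by an earlier rule
    for p in rule_probabilities(n):
        shares.append(remaining * p)
        remaining *= 1 - p
    return shares


if __name__ == "__main__":
    print(rule_probabilities(3))
    print(effective_shares(3))
```

For n = 3 the per-rule values are 1/3, 1/2 and 1, matching the listing, where the final rule simply carries no statistic match.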