LangChain-Chatchat Study Notes - Windows Development and Deployment (Pitfalls)

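Series Contents

1. LangChain-Chatchat Study Notes - Introduction

2. LangChain-Chatchat Study Notes - Windows Development and Deployment

3. LangChain-Chatchat Study Notes - Windows Development and Deployment (Pitfalls)

4. LangChain-Chatchat Study Notes - Installing the NVIDIA Driver and CUDA on Ubuntu

5. LangChain-Chatchat Study Notes - Ubuntu Development and Deployment

6. LangChain-Chatchat Study Notes - Ubuntu Development and Deployment (Pitfalls)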

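Table of Contents

- Series Contents

- LangChain-Chatchat Study Notes - Windows Development and Deployment (Pitfalls)

- Environment Setup Pitfalls

- 1. CUDA Version Issues

- 2. Python Dependency Issues

- Configuration File Pitfalls

- chatglm-6b-int4 Pitfalls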

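Environment Setup Pitfalls

1. CUDA Version Issues

I am using an RTX 3060. Running nvidia-smi reported CUDA version 12.2 (this is the highest CUDA version the installed driver supports), so I downloaded and installed CUDA 12.2. That turned out to be a pitfall: at the time of writing, the latest PyTorch release is 2.0.1, and it supports CUDA 11.8 at most. To run PyTorch on the GPU, CUDA has to be downgraded to 11.8. See the installation guide on the PyTorch website for details.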

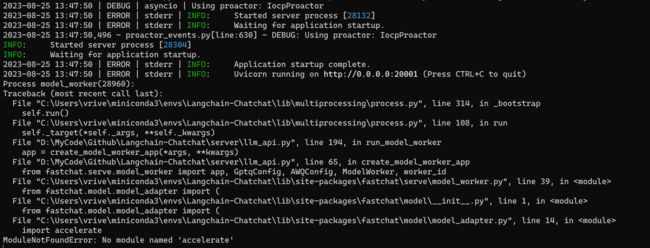
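To confirm which CUDA version an installed PyTorch build targets, a small check like the one below can help. It is a minimal sketch, not part of LangChain-Chatchat: the function name `torch_cuda_report` is my own, and it only relies on `torch.__version__`, `torch.version.cuda`, and `torch.cuda.is_available()`.

```python
import importlib.util


def torch_cuda_report() -> dict:
    """Summarize whether the installed PyTorch build can use the GPU."""
    if importlib.util.find_spec("torch") is None:
        return {"torch_installed": False}

    import torch  # imported lazily so the script still runs without PyTorch

    return {
        "torch_installed": True,
        "torch_version": torch.__version__,       # CUDA wheels carry a suffix such as "+cu118"
        "built_for_cuda": torch.version.cuda,      # None for a CPU-only build
        "cuda_available": torch.cuda.is_available(),
    }


if __name__ == "__main__":
    for key, value in torch_cuda_report().items():
        print(f"{key}: {value}")
```

If `built_for_cuda` is None or `cuda_available` is False, the build or the CUDA setup is worth re-checking before starting the services.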

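2. Python Dependency Issues

1. Incomplete dependency installation

Installing dependencies from the official requirements.txt leaves some packages missing. When the services are started later, install whichever package each startup error reports as missing, for example: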

pip install accelerate
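Rather than waiting for each startup error, the missing modules can be listed up front. The sketch below uses only the standard library; the checklist contents are an assumption for illustration (only accelerate comes from my own experience above), and note that an import name can differ from the pip package name.

```python
import importlib.util


def missing_modules(module_names):
    """Return the top-level module names that cannot be found in this environment."""
    return [name for name in module_names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    # Hypothetical checklist; extend it with whatever the startup errors mention.
    checklist = ["accelerate"]
    for name in missing_modules(checklist):
        print(f"missing: {name} (try: pip install {name})")
```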

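2. PyTorch installs as the CPU-only build by default

To run inference on the GPU you need a CUDA build of PyTorch. Installing from the official requirements.txt only pulls in the CPU build, which makes inference very slow. The steps are:

- First, install pytorch==2.0.1+cu118 the way the PyTorch website recommends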

# CUDA 11.8

pip3 install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu118

- Next, comment out torch~=2.0.0 in requirements.txt

#torch~=2.0.0

- Finally, install the remaining dependencies from requirements.txt

pip install -r requirements.txt
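The "comment out torch" step can also be scripted. This is a minimal sketch under my own assumptions: the helper name `comment_out_requirement` is hypothetical, and the sample text in the usage note is illustrative rather than the project's real requirements.txt.

```python
import re


def comment_out_requirement(requirements_text: str, package: str) -> str:
    """Return the requirements text with every line for `package` commented out."""
    # Match the package name only when it is followed by a version specifier,
    # extras, an environment marker, a comment, or the end of the line, so that
    # "torch" does not also match "torchvision".
    pattern = re.compile(rf"^\s*{re.escape(package)}\s*(?=$|[~=<>!\[;#])", re.IGNORECASE)
    lines = []
    for line in requirements_text.splitlines():
        lines.append("#" + line if pattern.match(line) else line)
    return "\n".join(lines)


if __name__ == "__main__":
    sample = "accelerate\ntorch~=2.0.0\ntorchvision\n"  # illustrative content only
    print(comment_out_requirement(sample, "torch"))
```

To apply it to the real file, read requirements.txt, pass its text through the function, and write the result back before running pip install -r requirements.txt.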

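Configuration File Pitfalls

chatglm-6b-int4 Pitfalls

With the int4 quantized model, everything works on the CPU build of PyTorch, but the CUDA build raises the following error.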

2023-08-25 13:52:26 | ERROR | stderr | Traceback (most recent call last):

2023-08-25 13:52:26 | ERROR | stderr | File "C:\Users\vrive\miniconda3\envs\Langchain-Chatchat\lib\site-packages\uvicorn\protocols\http\h11_impl.py", line 408, in run_asgi

2023-08-25 13:52:26 | ERROR | stderr | result = await app( # type: ignore[func-returns-value]

2023-08-25 13:52:26 | ERROR | stderr | File "C:\Users\vrive\miniconda3\envs\Langchain-Chatchat\lib\site-packages\uvicorn\middleware\proxy_headers.py", line 84, in __call__

2023-08-25 13:52:26 | ERROR | stderr | return await self.app(scope, receive, send)

2023-08-25 13:52:26 | ERROR | stderr | File "C:\Users\vrive\miniconda3\envs\Langchain-Chatchat\lib\site-packages\fastapi\applications.py", line 290, in __call__

2023-08-25 13:52:26 | ERROR | stderr | await super().__call__(scope, receive, send)

2023-08-25 13:52:26 | ERROR | stderr | File "C:\Users\vrive\miniconda3\envs\Langchain-Chatchat\lib\site-packages\starlette\applications.py", line 122, in __call__

2023-08-25 13:52:26 | ERROR | stderr | await self.middleware_stack(scope, receive, send)

2023-08-25 13:52:26 | ERROR | stderr | File "C:\Users\vrive\miniconda3\envs\Langchain-Chatchat\lib\site-packages\starlette\middleware\errors.py", line 184, in __call__

2023-08-25 13:52:26 | ERROR | stderr | raise exc

2023-08-25 13:52:26 | ERROR | stderr | File "C:\Users\vrive\miniconda3\envs\Langchain-Chatchat\lib\site-packages\starlette\middleware\errors.py", line 162, in __call__

2023-08-25 13:52:26 | ERROR | stderr | await self.app(scope, receive, _send)

2023-08-25 13:52:26 | ERROR | stderr | File "C:\Users\vrive\miniconda3\envs\Langchain-Chatchat\lib\site-packages\starlette\middleware\exceptions.py", line 79, in __call__

2023-08-25 13:52:26 | ERROR | stderr | raise exc

2023-08-25 13:52:26 | ERROR | stderr | File "C:\Users\vrive\miniconda3\envs\Langchain-Chatchat\lib\site-packages\starlette\middleware\exceptions.py", line 68, in __call__

2023-08-25 13:52:26 | ERROR | stderr | await self.app(scope, receive, sender)

2023-08-25 13:52:26 | ERROR | stderr | File "C:\Users\vrive\miniconda3\envs\Langchain-Chatchat\lib\site-packages\fastapi\middleware\asyncexitstack.py", line 20, in __call__

2023-08-25 13:52:26 | ERROR | stderr | raise e

2023-08-25 13:52:26 | ERROR | stderr | File "C:\Users\vrive\miniconda3\envs\Langchain-Chatchat\lib\site-packages\fastapi\middleware\asyncexitstack.py", line 17, in __call__

2023-08-25 13:52:26 | ERROR | stderr | await self.app(scope, receive, send)

2023-08-25 13:52:26 | ERROR | stderr | File "C:\Users\vrive\miniconda3\envs\Langchain-Chatchat\lib\site-packages\starlette\routing.py", line 718, in __call__

2023-08-25 13:52:26 | ERROR | stderr | await route.handle(scope, receive, send)

2023-08-25 13:52:26 | ERROR | stderr | File "C:\Users\vrive\miniconda3\envs\Langchain-Chatchat\lib\site-packages\starlette\routing.py", line 276, in handle

2023-08-25 13:52:26 | ERROR | stderr | await self.app(scope, receive, send)

2023-08-25 13:52:26 | ERROR | stderr | File "C:\Users\vrive\miniconda3\envs\Langchain-Chatchat\lib\site-packages\starlette\routing.py", line 69, in app

2023-08-25 13:52:26 | ERROR | stderr | await response(scope, receive, send)

2023-08-25 13:52:26 | ERROR | stderr | File "C:\Users\vrive\miniconda3\envs\Langchain-Chatchat\lib\site-packages\starlette\responses.py", line 270, in __call__

2023-08-25 13:52:26 | ERROR | stderr | async with anyio.create_task_group() as task_group:

2023-08-25 13:52:26 | ERROR | stderr | File "C:\Users\vrive\miniconda3\envs\Langchain-Chatchat\lib\site-packages\anyio\_backends\_asyncio.py", line 597, in __aexit__

2023-08-25 13:52:26 | ERROR | stderr | raise exceptions[0]

2023-08-25 13:52:26 | ERROR | stderr | File "C:\Users\vrive\miniconda3\envs\Langchain-Chatchat\lib\site-packages\starlette\responses.py", line 273, in wrap

2023-08-25 13:52:26 | ERROR | stderr | await func()

2023-08-25 13:52:26 | ERROR | stderr | File "C:\Users\vrive\miniconda3\envs\Langchain-Chatchat\lib\site-packages\starlette\responses.py", line 262, in stream_response

2023-08-25 13:52:26 | ERROR | stderr | async for chunk in self.body_iterator:

2023-08-25 13:52:26 | ERROR | stderr | File "C:\Users\vrive\miniconda3\envs\Langchain-Chatchat\lib\site-packages\starlette\concurrency.py", line 63, in iterate_in_threadpool

2023-08-25 13:52:26 | ERROR | stderr | yield await anyio.to_thread.run_sync(_next, iterator)

2023-08-25 13:52:26 | ERROR | stderr | File "C:\Users\vrive\miniconda3\envs\Langchain-Chatchat\lib\site-packages\anyio\to_thread.py", line 33, in run_sync

2023-08-25 13:52:26 | ERROR | stderr | return await get_asynclib().run_sync_in_worker_thread(

2023-08-25 13:52:26 | ERROR | stderr | File "C:\Users\vrive\miniconda3\envs\Langchain-Chatchat\lib\site-packages\anyio\_backends\_asyncio.py", line 877, in run_sync_in_worker_thread

2023-08-25 13:52:26 | ERROR | stderr | return await future

2023-08-25 13:52:26 | ERROR | stderr | File "C:\Users\vrive\miniconda3\envs\Langchain-Chatchat\lib\site-packages\anyio\_backends\_asyncio.py", line 807, in run

2023-08-25 13:52:26 | ERROR | stderr | result = context.run(func, *args)

2023-08-25 13:52:26 | ERROR | stderr | File "C:\Users\vrive\miniconda3\envs\Langchain-Chatchat\lib\site-packages\starlette\concurrency.py", line 53, in _next

2023-08-25 13:52:26 | ERROR | stderr | return next(iterator)

2023-08-25 13:52:26 | ERROR | stderr | File "C:\Users\vrive\miniconda3\envs\Langchain-Chatchat\lib\site-packages\fastchat\serve\model_worker.py", line 231, in generate_stream_gate

2023-08-25 13:52:26 | ERROR | stderr | for output in self.generate_stream_func(

2023-08-25 13:52:26 | ERROR | stderr | File "C:\Users\vrive\miniconda3\envs\Langchain-Chatchat\lib\site-packages\torch\utils\_contextlib.py", line 35, in generator_context

2023-08-25 13:52:26 | ERROR | stderr | response = gen.send(None)

2023-08-25 13:52:26 | ERROR | stderr | File "C:\Users\vrive\miniconda3\envs\Langchain-Chatchat\lib\site-packages\fastchat\model\model_chatglm.py", line 71, in generate_stream_chatglm

2023-08-25 13:52:26 | ERROR | stderr | for total_ids in model.stream_generate(**inputs, **gen_kwargs):

2023-08-25 13:52:26 | ERROR | stderr | File "C:\Users\vrive\miniconda3\envs\Langchain-Chatchat\lib\site-packages\torch\utils\_contextlib.py", line 35, in generator_context

2023-08-25 13:52:26 | ERROR | stderr | response = gen.send(None)

2023-08-25 13:52:26 | ERROR | stderr | File "C:\Users\vrive/.cache\huggingface\modules\transformers_modules\chatglm2-6b-int4\modeling_chatglm.py", line 1143, in stream_generate

2023-08-25 13:52:26 | ERROR | stderr | outputs = self(

2023-08-25 13:52:26 | ERROR | stderr | File "C:\Users\vrive\miniconda3\envs\Langchain-Chatchat\lib\site-packages\torch\nn\modules\module.py", line 1501, in _call_impl

2023-08-25 13:52:26 | ERROR | stderr | return forward_call(*args, **kwargs)

2023-08-25 13:52:26 | ERROR | stderr | File "C:\Users\vrive/.cache\huggingface\modules\transformers_modules\chatglm2-6b-int4\modeling_chatglm.py", line 932, in forward

2023-08-25 13:52:26 | ERROR | stderr | transformer_outputs = self.transformer(

2023-08-25 13:52:26 | ERROR | stderr | File "C:\Users\vrive\miniconda3\envs\Langchain-Chatchat\lib\site-packages\torch\nn\modules\module.py", line 1501, in _call_impl

2023-08-25 13:52:26 | ERROR | stderr | return forward_call(*args, **kwargs)

2023-08-25 13:52:26 | ERROR | stderr | File "C:\Users\vrive/.cache\huggingface\modules\transformers_modules\chatglm2-6b-int4\modeling_chatglm.py", line 828, in forward

2023-08-25 13:52:26 | ERROR | stderr | hidden_states, presents, all_hidden_states, all_self_attentions = self.encoder(

2023-08-25 13:52:26 | ERROR | stderr | File "C:\Users\vrive\miniconda3\envs\Langchain-Chatchat\lib\site-packages\torch\nn\modules\module.py", line 1501, in _call_impl

2023-08-25 13:52:26 | ERROR | stderr | return forward_call(*args, **kwargs)

2023-08-25 13:52:26 | ERROR | stderr | File "C:\Users\vrive/.cache\huggingface\modules\transformers_modules\chatglm2-6b-int4\modeling_chatglm.py", line 638, in forward

2023-08-25 13:52:26 | ERROR | stderr | layer_ret = layer(

2023-08-25 13:52:26 | ERROR | stderr | File "C:\Users\vrive\miniconda3\envs\Langchain-Chatchat\lib\site-packages\torch\nn\modules\module.py", line 1501, in _call_impl

2023-08-25 13:52:26 | ERROR | stderr | return forward_call(*args, **kwargs)

2023-08-25 13:52:26 | ERROR | stderr | File "C:\Users\vrive/.cache\huggingface\modules\transformers_modules\chatglm2-6b-int4\modeling_chatglm.py", line 542, in forward

2023-08-25 13:52:26 | ERROR | stderr | attention_output, kv_cache = self.self_attention(

2023-08-25 13:52:26 | ERROR | stderr | File "C:\Users\vrive\miniconda3\envs\Langchain-Chatchat\lib\site-packages\torch\nn\modules\module.py", line 1501, in _call_impl

2023-08-25 13:52:26 | ERROR | stderr | return forward_call(*args, **kwargs)

2023-08-25 13:52:26 | ERROR | stderr | File "C:\Users\vrive/.cache\huggingface\modules\transformers_modules\chatglm2-6b-int4\modeling_chatglm.py", line 374, in forward

2023-08-25 13:52:26 | ERROR | stderr | mixed_x_layer = self.query_key_value(hidden_states)

2023-08-25 13:52:26 | ERROR | stderr | File "C:\Users\vrive\miniconda3\envs\Langchain-Chatchat\lib\site-packages\torch\nn\modules\module.py", line 1501, in _call_impl

2023-08-25 13:52:26 | ERROR | stderr | return forward_call(*args, **kwargs)

2023-08-25 13:52:26 | ERROR | stderr | File "C:\Users\vrive/.cache\huggingface\modules\transformers_modules\chatglm2-6b-int4\quantization.py", line 502, in forward

2023-08-25 13:52:26 | ERROR | stderr | output = W8A16Linear.apply(input, self.weight, self.weight_scale, self.weight_bit_width)

2023-08-25 13:52:26 | ERROR | stderr | File "C:\Users\vrive\miniconda3\envs\Langchain-Chatchat\lib\site-packages\torch\autograd\function.py", line 506, in apply

2023-08-25 13:52:26 | ERROR | stderr | return super().apply(*args, **kwargs) # type: ignore[misc]

2023-08-25 13:52:26 | ERROR | stderr | File "C:\Users\vrive/.cache\huggingface\modules\transformers_modules\chatglm2-6b-int4\quantization.py", line 75, in forward

2023-08-25 13:52:26 | ERROR | stderr | weight = extract_weight_to_half(quant_w, scale_w, weight_bit_width)

2023-08-25 13:52:26 | ERROR | stderr | File "C:\Users\vrive/.cache\huggingface\modules\transformers_modules\chatglm2-6b-int4\quantization.py", line 287, in extract_weight_to_half

2023-08-25 13:52:26 | ERROR | stderr | func = kernels.int4WeightExtractionHalf

2023-08-25 13:52:26 | ERROR | stderr | AttributeError: 'NoneType' object has no attribute 'int4WeightExtractionHalf'

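The last frame shows that the kernels object used by quantization.py is None, so the CUDA kernels for the quantized weights were never loaded. The fix is to install the cpm_kernels package: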

pip install cpm_kernels

Once it is installed the problem is resolved, and inference runs on the GPU as expected.
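To catch this before a request fails deep inside the model, a preflight check can be run ahead of starting the services. This is a sketch under my own assumptions: `int4_gpu_preflight` is a hypothetical helper, not part of LangChain-Chatchat or ChatGLM, and it only checks that cpm_kernels can be found and that PyTorch sees a CUDA device.

```python
import importlib.util


def int4_gpu_preflight() -> list:
    """Return human-readable problems; an empty list means none were detected."""
    problems = []
    if importlib.util.find_spec("cpm_kernels") is None:
        problems.append("cpm_kernels is not installed (pip install cpm_kernels)")
    if importlib.util.find_spec("torch") is None:
        problems.append("torch is not installed")
    else:
        import torch  # imported lazily so the check runs without PyTorch too

        if not torch.cuda.is_available():
            problems.append("PyTorch cannot see a CUDA device")
    return problems


if __name__ == "__main__":
    for problem in int4_gpu_preflight():
        print("preflight:", problem)
```

An empty result does not guarantee the model will load, but it rules out the two causes described in this post.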