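MNIST Handwritten Digit Recognition Summary (PyTorch)

This post is not a tutorial, just a summary of a practice exercise.

The complete code is collected at the end of the post.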

1. First, import the required libraries

```python
import torch
from torch import nn
from torch.nn import functional as F
from torch import optim
import torchvision
from matplotlib import pyplot as plt
import pandas as pd
import numpy as np

from Util import plot_image, pd_one_hot  # helper functions, listed at the end of the post
```

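2. The dataset

The dataset contains 70K images in total: 60K are used as the training set and 10K as the test set.

For more information, see the official MNIST website.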

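3. Loading the data

`batch_size` sets how many images are processed at once. Here it is 512: processing a whole batch in parallel lets the CPU or GPU work faster than handling one image at a time.

```python
batch_size = 512
```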
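With 60K training images and `batch_size = 512`, one epoch is split into ceil(60000 / 512) = 118 batches, and the last batch holds only the remaining 96 images. A minimal sketch that checks this, assuming a `TensorDataset` of dummy labels as a stand-in for MNIST so nothing has to be downloaded:

```python
import math

import torch
from torch.utils.data import DataLoader, TensorDataset

batch_size = 512
num_train = 60000  # size of the MNIST training set

# Dummy labels stand in for the real images; only the number of samples matters here
dummy = TensorDataset(torch.zeros(num_train, dtype=torch.long))
loader = DataLoader(dummy, batch_size=batch_size, shuffle=True)

sizes = [batch[0].size(0) for batch in loader]  # size of every batch in one epoch

print(len(loader), math.ceil(num_train / batch_size))  # 118 118
print(sizes[-1])                                       # 96
```

Because `drop_last` defaults to `False`, that smaller final batch is still used for training.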

Load the training and test images:

```python
train_loader = torch.utils.data.DataLoader(
    torchvision.datasets.MNIST(
        'mnist_data',   # folder where the dataset is stored
        train=True,
        download=True,  # download the dataset automatically if it is not on disk yet
        transform=torchvision.transforms.Compose([
            torchvision.transforms.ToTensor(),                       # PIL image -> float tensor in [0, 1]
            torchvision.transforms.Normalize((0.1307,), (0.3081,)),  # MNIST mean and std
        ]),
    ),
    batch_size=batch_size,
    shuffle=True,  # shuffle the training data
)

test_loader = torch.utils.data.DataLoader(
    torchvision.datasets.MNIST(
        'mnist_data',
        train=False,
        download=True,
        transform=torchvision.transforms.Compose([
            torchvision.transforms.ToTensor(),
            torchvision.transforms.Normalize((0.1307,), (0.3081,)),
        ]),
    ),
    batch_size=batch_size,
    shuffle=False,
)
```

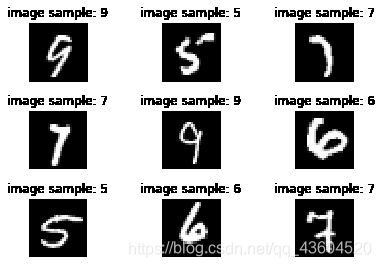

4. Visualizing the data

Only 9 images are shown; the number can be changed inside the helper function.

```python
x, y = next(iter(train_loader))  # take one batch
print(x.shape, y.shape)          # check the batch shape
plot_image(x, y, 'image sample')
```

Output:

```
torch.Size([512, 1, 28, 28]) torch.Size([512])
```

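Notes:

`512, 1, 28, 28`: a 4-D tensor holding 512 images, each with 1 channel and a size of 28*28.

One channel means grayscale; 3 channels would mean an RGB color image.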
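The network below is built from fully connected layers, so each `[1, 28, 28]` image is flattened into a 784-element vector with `x.view(x.size(0), 28*28)` before it enters the model. A small sketch on random tensors shaped like one batch (the random data is an assumption for illustration):

```python
import torch

# Random stand-in for one batch: 512 images, 1 channel, 28x28 pixels
x = torch.randn(512, 1, 28, 28)

flat = x.view(x.size(0), 28 * 28)  # keep the batch dimension, merge the other three
print(flat.shape)  # torch.Size([512, 784])

# Each row is one image read row by row
print(torch.equal(flat[0], x[0, 0].reshape(-1)))  # True

# An RGB batch would have 3 channels, i.e. 3*28*28 values per image
rgb = torch.randn(512, 3, 28, 28)
rgb_flat = rgb.view(rgb.size(0), -1)
print(rgb_flat.shape)  # torch.Size([512, 2352])
```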

5. Defining the neural network

```python
class Net(nn.Module):

    def __init__(self):
        super(Net, self).__init__()
        self.fc1 = nn.Linear(28 * 28, 256)  # input and output sizes; hidden sizes are chosen from experience
        self.fc2 = nn.Linear(256, 64)       # the input size must equal the previous layer's output size
        self.fc3 = nn.Linear(64, 10)        # digits are 0 to 9, so the last layer outputs 10 values

    def forward(self, x):
        x = F.relu(self.fc1(x))  # ReLU activation
        x = F.relu(self.fc2(x))
        x = self.fc3(x)
        return x
```
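The three `nn.Linear` layers map 784 → 256 → 64 → 10. A quick way to check the wiring is to push a dummy batch through the model and count its trainable parameters; each `nn.Linear(in, out)` holds `in*out` weights plus `out` biases. The sketch repeats the `Net` class so it runs on its own; the batch of 4 random inputs is an assumption:

```python
import torch
from torch import nn
from torch.nn import functional as F


class Net(nn.Module):
    # Same architecture as above: 784 -> 256 -> 64 -> 10
    def __init__(self):
        super().__init__()
        self.fc1 = nn.Linear(28 * 28, 256)
        self.fc2 = nn.Linear(256, 64)
        self.fc3 = nn.Linear(64, 10)

    def forward(self, x):
        x = F.relu(self.fc1(x))
        x = F.relu(self.fc2(x))
        return self.fc3(x)


net = Net()
x = torch.randn(4, 28 * 28)  # 4 random flattened "images"
out = net(x)
print(out.shape)  # torch.Size([4, 10])

num_params = sum(p.numel() for p in net.parameters() if p.requires_grad)
print(num_params)  # 218058 = (784*256 + 256) + (256*64 + 64) + (64*10 + 10)
```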

6. Training the network

1. Initialize the network

```python
net = Net()
```

2. Set up the optimizer and learning rate

```python
optimizer = optim.SGD(net.parameters(), lr=0.01, momentum=0.9)
```
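With `momentum=0.9`, `optim.SGD` keeps a running velocity `v` for every parameter. In PyTorch's formulation (with the default `dampening=0`), each step computes `v = momentum * v + grad` and then `p = p - lr * v`. The sketch below checks this by hand on a single scalar parameter with the toy loss `p**2` (the toy loss is an assumption chosen so the gradient is simply `2 * p`):

```python
import torch
from torch import optim

lr, momentum = 0.01, 0.9

p = torch.tensor(1.0, requires_grad=True)
optimizer = optim.SGD([p], lr=lr, momentum=momentum)

# Manual replica of the same update rule
p_manual, v = 1.0, 0.0

for _ in range(2):
    optimizer.zero_grad()
    loss = p ** 2  # toy loss, gradient is 2 * p
    loss.backward()
    optimizer.step()

    grad = 2 * p_manual
    v = momentum * v + grad
    p_manual = p_manual - lr * v

print(p.item(), p_manual)  # both about 0.9424
```

After the first step `v` equals the gradient; from the second step on, past gradients keep pushing the parameter in the same direction, which usually speeds up training compared with plain SGD.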

3. Training loop

The GPU is not used here; all computation runs on the CPU.
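If a CUDA GPU is available, the usual PyTorch pattern is to move the model and every batch onto the same device. A minimal sketch, assuming a single `nn.Linear` as a stand-in for `Net`; it falls back to the CPU when no GPU is found, so it runs anywhere:

```python
import torch
from torch import nn

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

model = nn.Linear(28 * 28, 10).to(device)  # stand-in for Net(); moves the weights to the device

x = torch.randn(512, 28 * 28)  # a flattened batch, created on the CPU
x = x.to(device)               # inputs must be on the same device as the model
out = model(x)

print(device, out.device, out.shape)
```

In a real training loop the targets (here the one-hot labels) would need the same `.to(device)` call before computing the loss.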

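```python
loss_s = []  # stores the loss values

for each in range(3):  # train for 3 epochs
    for location, (x, y) in enumerate(train_loader):
        x = x.view(x.size(0), 28 * 28)  # flatten each image into a 784-vector
        out = net(x)
        y_onehot = pd_one_hot(y)
        loss = F.mse_loss(out, torch.from_numpy(y_onehot).float())

        optimizer.zero_grad()  # clear the old gradients
        loss.backward()        # compute the gradients
        optimizer.step()       # update the weights

        if location % 5 == 0:  # record the loss every 5 batches (5*512 images)
            loss_s.append(loss.item())  # .item() returns the value as a plain Python number

    print('Epoch', each + 1, 'finished')
```

Output:

```
Epoch 1 finished
Epoch 2 finished
Epoch 3 finished
```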

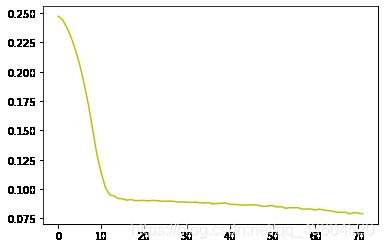
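The training loop calls `optimizer.zero_grad()` before `loss.backward()` because PyTorch adds new gradients to whatever is already stored in `.grad` instead of overwriting it. A tiny sketch with one scalar weight shows what would happen without the reset:

```python
import torch

w = torch.tensor(3.0, requires_grad=True)

(w * 2).backward()  # d(2w)/dw = 2
print(w.grad)       # tensor(2.)

(w * 2).backward()  # without clearing, the new gradient is added on top
print(w.grad)       # tensor(4.)

# optimizer.zero_grad() clears .grad for every parameter
# (recent PyTorch versions set it to None instead of zeroing it)
w.grad.zero_()
(w * 2).backward()
print(w.grad)       # tensor(2.)
```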

4. Plotting the loss

```python
plt.plot(range(len(loss_s)), loss_s, 'y')
plt.show()
```
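Since a loss value is recorded every 5 batches, the x-axis counts records rather than batches or epochs. A sketch that labels the axes accordingly and saves the figure to a file instead of opening a window; the loss values are made up, and the `Agg` backend and the file name `loss.png` are assumptions:

```python
import matplotlib
matplotlib.use('Agg')  # non-interactive backend, no window needed
from matplotlib import pyplot as plt

loss_s = [0.10, 0.07, 0.05, 0.04, 0.035, 0.03]  # made-up values standing in for the real losses

plt.plot(range(len(loss_s)), loss_s, 'y')
plt.xlabel('record index (one record every 5 batches)')
plt.ylabel('MSE loss')
plt.title('training loss')
plt.savefig('loss.png')
plt.close()
```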

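7. Evaluating on the test set

```python
total_correct = 0  # number of correctly predicted images

with torch.no_grad():  # gradients are not needed for evaluation
    for x, y in test_loader:
        x = x.view(x.size(0), 28 * 28)
        out = net(x)
        pred = out.argmax(dim=1)  # index of the largest output = predicted digit
        correct = pred.eq(y).sum().float().item()
        total_correct += correct

print('Accuracy:', total_correct / len(test_loader.dataset))
```

Output:

```
Accuracy: 0.8903
```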
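`out.argmax(dim=1)` picks, for each image, the index of the largest of the 10 outputs, which is the predicted digit. The outputs are raw scores rather than probabilities, but applying softmax would not change which one is largest. `pred.eq(y)` then marks the correct predictions and `.sum()` counts them. A toy example with 3 images and made-up outputs:

```python
import torch

# Made-up network outputs for 3 images (one row per image, one column per digit)
out = torch.zeros(3, 10)
out[0, 7] = 1.0  # image 0: highest score for digit 7
out[1, 2] = 1.0  # image 1: highest score for digit 2
out[2, 5] = 1.0  # image 2: highest score for digit 5

y = torch.tensor([7, 2, 1])  # true labels

pred = out.argmax(dim=1)  # tensor([7, 2, 5])
correct = pred.eq(y).sum().float().item()
print(pred, correct, correct / len(y))  # 2 of the 3 predictions are correct
```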

8. Viewing test images

```python
x, y = next(iter(test_loader))
plot_image(x, y, 'test')
```

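Util.py code

```python
import numpy as np
import matplotlib.pyplot as plt
import pandas as pd


def plot_image(img, label, name):
    # Show the first 9 images of a batch in a 3x3 grid
    fig = plt.figure()
    for i in range(9):
        plt.subplot(3, 3, i + 1)
        plt.tight_layout()
        # Undo Normalize((0.1307,), (0.3081,)) so the image is displayed correctly
        plt.imshow(img[i][0] * 0.3081 + 0.1307, cmap='gray', interpolation='none')
        plt.title("{}: {}".format(name, label[i].item()))
        plt.xticks([])
        plt.yticks([])
    plt.show()


def pd_one_hot(y, depth=10):
    # y: 1-D tensor of integer labels; depth: number of classes
    # Declaring all 10 categories keeps 10 columns even if a batch misses a digit
    y = pd.Series(pd.Categorical(y.numpy(), categories=list(range(depth))))
    y = pd.get_dummies(y)  # one-hot encoding with pandas
    return y.values.astype(np.float32)
```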
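`pd.get_dummies` only creates columns for values it actually sees, so a batch that happens to miss a digit would produce fewer than 10 columns and no longer match the 10 network outputs in `F.mse_loss`. Declaring the 10 possible labels as categories avoids this, and PyTorch's built-in `F.one_hot` gives the same result directly. A sketch comparing the three on a made-up batch that contains only the digits 0, 3 and 9:

```python
import numpy as np
import pandas as pd
import torch
from torch.nn import functional as F

y = torch.tensor([3, 0, 9, 3])  # a batch with only three distinct digits

# Plain get_dummies: one column per value that appears
plain = pd.get_dummies(pd.Series(y.numpy()))
print(plain.shape)  # (4, 3)

# Fixing the categories to 0..9 keeps all 10 columns
cats = pd.Series(pd.Categorical(y.numpy(), categories=list(range(10))))
fixed = pd.get_dummies(cats).values.astype(np.float32)
print(fixed.shape)  # (4, 10)

# PyTorch's built-in one-hot helper
builtin = F.one_hot(y, num_classes=10).float()
print(np.array_equal(fixed, builtin.numpy()))  # True
```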

Complete main code

Project GitHub link: github.com/2979083263/mnist

```python
import torch
from torch import nn
from torch.nn import functional as F
from torch import optim
import torchvision
from matplotlib import pyplot as plt
import pandas as pd
import numpy as np

from Util import plot_image, pd_one_hot  # Util.py defines only these two helpers

batch_size = 512

train_loader = torch.utils.data.DataLoader(
    torchvision.datasets.MNIST(
        'mnist_data',
        train=True,
        download=True,
        transform=torchvision.transforms.Compose([
            torchvision.transforms.ToTensor(),  # PIL image -> float tensor in [0, 1]
            torchvision.transforms.Normalize((0.1307,), (0.3081,)),
        ]),
    ),
    batch_size=batch_size,
    shuffle=True,  # shuffle the training data
)

test_loader = torch.utils.data.DataLoader(
    torchvision.datasets.MNIST(
        'mnist_data',
        train=False,
        download=True,
        transform=torchvision.transforms.Compose([
            torchvision.transforms.ToTensor(),
            torchvision.transforms.Normalize((0.1307,), (0.3081,)),
        ]),
    ),
    batch_size=batch_size,
    shuffle=False,
)

x, y = next(iter(train_loader))  # take one batch
print(x.shape, y.shape, x.min(), x.max())
plot_image(x, y, 'image sample')


class Net(nn.Module):

    def __init__(self):
        super(Net, self).__init__()
        self.fc1 = nn.Linear(28 * 28, 256)  # input and output sizes
        self.fc2 = nn.Linear(256, 64)
        self.fc3 = nn.Linear(64, 10)

    def forward(self, x):
        x = F.relu(self.fc1(x))
        x = F.relu(self.fc2(x))
        x = self.fc3(x)
        return x


net = Net()
optimizer = optim.SGD(net.parameters(), lr=0.01, momentum=0.9)

loss_s = []

for each in range(3):
    for location, (x, y) in enumerate(train_loader):
        x = x.view(x.size(0), 28 * 28)
        out = net(x)
        y_onehot = pd_one_hot(y)
        loss = F.mse_loss(out, torch.from_numpy(y_onehot).float())

        optimizer.zero_grad()  # clear the old gradients
        loss.backward()        # compute the gradients
        optimizer.step()       # update the weights

        if location % 5 == 0:
            loss_s.append(loss.item())

    print('Epoch', each + 1, 'finished')

plt.plot(range(len(loss_s)), loss_s, 'y')
plt.show()

total_correct = 0  # number of correct predictions

with torch.no_grad():
    for x, y in test_loader:
        x = x.view(x.size(0), 28 * 28)
        out = net(x)
        # out: [b, 10] => pred: [b]
        pred = out.argmax(dim=1)
        correct = pred.eq(y).sum().float().item()
        total_correct += correct

print('Accuracy:', total_correct / len(test_loader.dataset))

x, y = next(iter(test_loader))
plot_image(x, y, 'test')
```