Training YOLOv3 on your own data with Ubuntu and darknet

Companion video tutorial:

https://b23.tv/TLu3Kz?share_medium=android&share_source=qq&bbid=XYA9FFBA550EAD7C8938BBF0490F0365E1F05&ts=1635428627070

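Prerequisites

Python 3.6

An NVIDIA GPU

The NVIDIA driver, CUDA, and cuDNN installed

(There are many tutorials on installing the NVIDIA driver, CUDA, and cuDNN, for example the CSDN blog post by zhanghm1995 on setting up the GPU driver, CUDA, cuDNN, and PyTorch on Ubuntu 18.04 with an RTX 2070.)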

Annotation

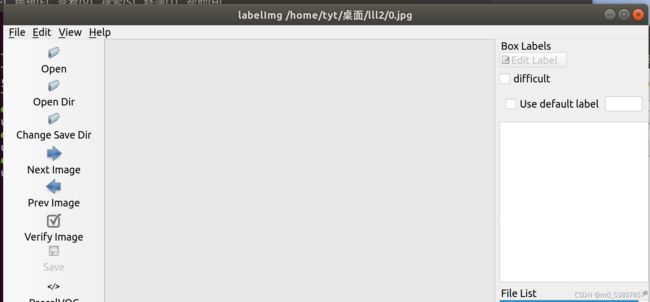

Open the annotation tool (labelImg):

cd labelImg

make qt5py3

python3 labelImg.py

(If you do not have the annotation tool yet, install it first with the commands below.)

sudo apt-get install pyqt5-dev-tools

sudo apt-get install python3-lxml

sudo apt-get install libxml2-dev libxslt-dev

sudo pip3 install lxml

git clone https://github.com/tzutalin/labelImg.git

(Source: https://blog.csdn.net/weixin_44152895/article/details/107410398)

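Start annotating

1. Create a folder with any name (mine is lll, and I refer to it as lll below).

Put all the images you want to train on into the lll folder.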

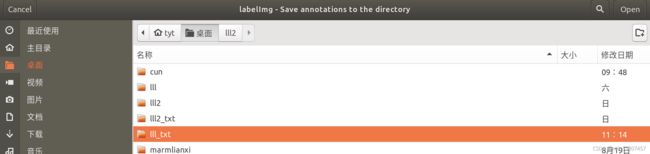

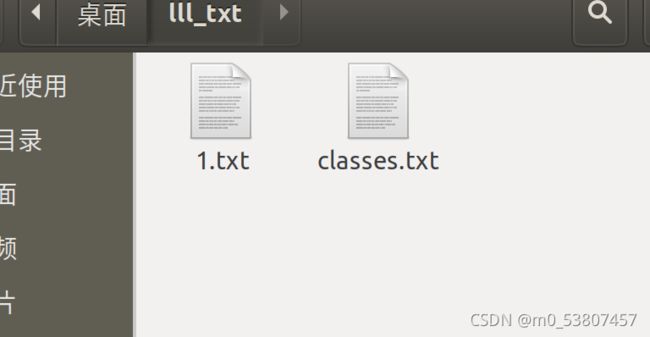

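Create a second folder with any name (mine is lll_txt, and I refer to it as lll_txt below).

It is empty for now; the txt label files will be saved here.

2. Click Open Dir.

3. Select lll and click Open (top right).

4. Click Change Save Dir.

5. Select lll_txt and click Open (top right).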

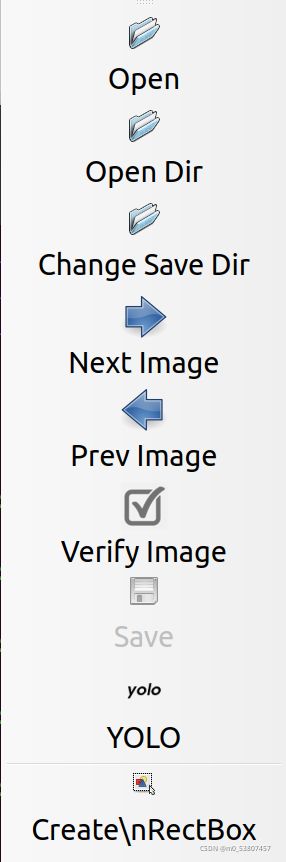

6. Click the last icon in the toolbar until the save format switches to YOLO.

7. Click the last icon to start annotating.

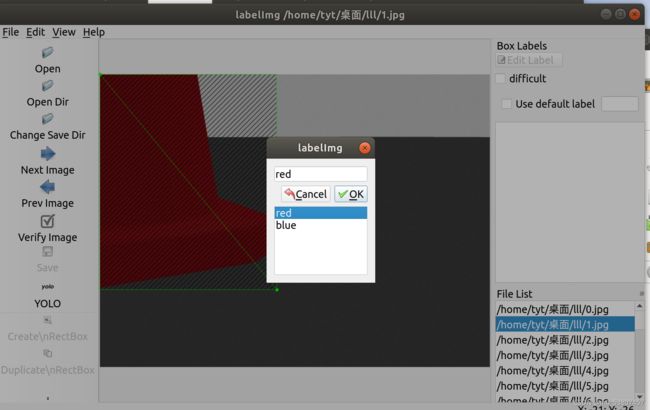

8. Label red objects as red and blue objects as blue.

(The red and blue options do not exist by default; add them by editing labelImg/data/predefined_classes.txt.)

9. Click Save.

You should now see a file 1.txt in lll_txt (ignore classes.txt). Repeat these steps for every image.

Once you have annotated enough images, start training.
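To check what labelImg wrote, it helps to know the YOLO label layout: each line of a txt file is `class_id x_center y_center width height`, with the four box values normalized to the range 0 to 1 relative to the image size. The sketch below converts one such line back to pixel coordinates. The function name and the example image size are illustrative assumptions, not part of labelImg or darknet.

```python
def yolo_line_to_pixel_box(line, img_width, img_height):
    """Convert one YOLO label line to (class_id, left, top, right, bottom) in pixels.

    Hypothetical helper for inspecting labels; not part of labelImg or darknet.
    """
    parts = line.split()
    class_id = int(parts[0])
    # The four box values are fractions of the image width/height.
    x_center, y_center, width, height = (float(v) for v in parts[1:5])
    left = (x_center - width / 2) * img_width
    top = (y_center - height / 2) * img_height
    right = (x_center + width / 2) * img_width
    bottom = (y_center + height / 2) * img_height
    return class_id, left, top, right, bottom


# Example with an assumed 400x200 image and a box centred in it.
print(yolo_line_to_pixel_box("0 0.5 0.5 0.25 0.5", 400, 200))
```

The class id is the zero-based index of the class name in the class list, so with red listed first and blue second, 0 would mean red and 1 would mean blue.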

1. Download darknet

In a terminal, run:

git clone https://github.com/AlexeyAB/darknet

2. Download the YOLOv3 weights (this tutorial uses the smaller yolov3-tiny model)

In a terminal, run:

wget https://pjreddie.com/media/files/yolov3-tiny.weights

3. Organize the files

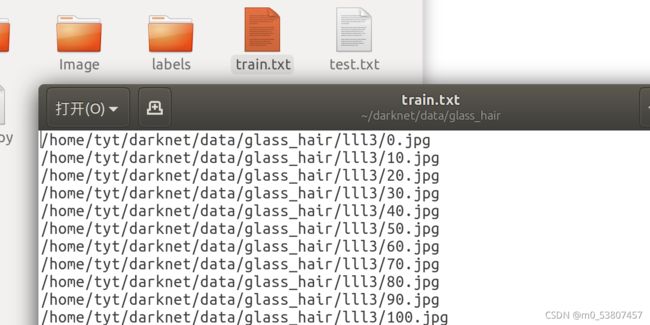

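Move lll and lll_txt into darknet/data/glass_hair (glass_hair is a folder I created; the name is arbitrary).

Then copy the files from both lll and lll_txt into a new folder lll3 under darknet/data/glass_hair (everything except classes.txt), so that each image sits next to its txt label file.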
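The copy step can also be scripted. The following is a minimal sketch, assuming the folder layout described above; the function name `merge_into` is made up for illustration. darknet looks for a label file with the same base name as the image, which is why images and txt files end up in one folder here.

```python
import shutil
from pathlib import Path


def merge_into(image_dir, label_dir, target_dir):
    """Copy images and YOLO .txt labels into one folder, skipping classes.txt.

    Hypothetical helper; returns the names of the copied files.
    """
    target = Path(target_dir)
    target.mkdir(parents=True, exist_ok=True)
    copied = []
    for source_dir in (Path(image_dir), Path(label_dir)):
        for path in sorted(source_dir.iterdir()):
            # classes.txt is labelImg's class list, not a label for an image.
            if path.is_file() and path.name != "classes.txt":
                shutil.copy2(str(path), str(target / path.name))
                copied.append(path.name)
    return copied


# Example call, assuming it is run from the darknet directory:
# merge_into("data/glass_hair/lll", "data/glass_hair/lll_txt", "data/glass_hair/lll3")
```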

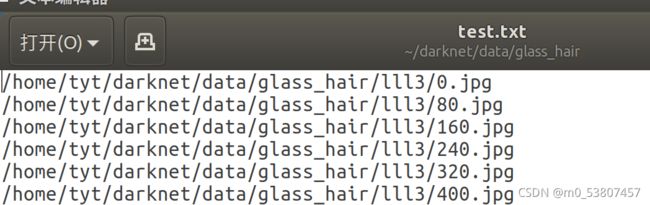

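Create train.txt and test.txt in glass_hair.

train.txt lists the paths of 80% of the images in lll3, one per line; these are used for training.

test.txt lists the paths of the remaining 20% of the images in lll3; these are used for validation.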
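Writing the two list files by hand is tedious once there are many images. Below is a minimal sketch of an 80/20 split, assuming the images are in lll3 and have one of the listed extensions. The function name, the extension set, and the fixed random seed are illustrative choices, not something darknet requires.

```python
import random
from pathlib import Path

# Assumed image extensions; adjust to match your data.
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png", ".bmp"}


def split_dataset(image_dir, train_file, test_file, train_ratio=0.8, seed=0):
    """Write absolute image paths to train/test list files, one path per line."""
    images = sorted(
        str(p.resolve())
        for p in Path(image_dir).iterdir()
        if p.suffix.lower() in IMAGE_SUFFIXES
    )
    # Shuffle with a fixed seed so the split is reproducible.
    random.Random(seed).shuffle(images)
    n_train = int(len(images) * train_ratio)
    train, test = images[:n_train], images[n_train:]
    Path(train_file).write_text("".join(path + "\n" for path in train))
    Path(test_file).write_text("".join(path + "\n" for path in test))
    return len(train), len(test)


# Example call, assuming it is run from the darknet directory:
# split_dataset("data/glass_hair/lll3",
#               "data/glass_hair/train.txt",
#               "data/glass_hair/test.txt")
```

Absolute paths are written so that the lists work regardless of the directory darknet is started from.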

4. Replace the contents of both coco.names and voc.names under data with the following (changing both to be safe):

red

blue

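5. Replace the contents of both coco.data and voc.data under cfg with the following (changing both to be safe; use your own paths for train and valid):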

classes= 2

train = /home/tyt/darknet/data/glass_hair/train.txt

valid = /home/tyt/darknet/data/glass_hair/test.txt

names = data/voc.names

backup = backup
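A common mistake at this point is a `classes` value that does not match the number of names in the names file. The sketch below is a small consistency check under the assumption that the data file uses simple `key = value` lines as shown above. Both function names are hypothetical, and the parser is a simplification rather than darknet's own.

```python
def parse_data_file(text):
    """Parse 'key = value' lines from a darknet .data file into a dict."""
    options = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        options[key.strip()] = value.strip()
    return options


def class_count_matches(data_text, names_text):
    """Return True if 'classes' equals the number of non-empty names lines."""
    options = parse_data_file(data_text)
    names = [name.strip() for name in names_text.splitlines() if name.strip()]
    return int(options["classes"]) == len(names)


# Example with the values used in this tutorial.
data_text = "classes= 2\nnames = data/voc.names\nbackup = backup\n"
print(class_count_matches(data_text, "red\nblue\n"))
```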

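6. Edit cfg/yolov3-tiny.cfg

Set classes=2 in every [yolo] section.

Set filters=21 in the [convolutional] section directly before each [yolo] section (these were highlighted in yellow in the original post), using the formula: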

filters=3*(classes+5)
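The formula comes from what each [yolo] layer predicts: every one of the 3 anchors selected by `mask` outputs 4 box coordinates, 1 objectness score, and one score per class. A minimal sketch of the calculation follows; the function name is illustrative.

```python
def yolo_filters(num_classes, anchors_per_layer=3):
    """Filters for the [convolutional] layer directly before a [yolo] layer.

    Each anchor predicts 4 box coordinates + 1 objectness score + class scores.
    """
    return anchors_per_layer * (num_classes + 5)


print(yolo_filters(2))   # two classes (red, blue), as in this tutorial
print(yolo_filters(80))  # the 80 COCO classes
```

With 2 classes this gives 3 * (2 + 5) = 21, and with the 80 COCO classes it gives 255.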

The other settings can also be changed (whether to change them depends on the performance you need).

For example:

[net]

# Testing

#batch=1

#subdivisions=1

# Training

batch=8

subdivisions=2

width=416

height=416

channels=3

momentum=0.9

decay=0.0005

angle=0

saturation = 1.5

exposure = 1.5

hue=.1

learning_rate=0.001

burn_in=1000

max_batches = 500000

policy=steps

steps=400000,450000

scales=.1,.1

[convolutional]

batch_normalize=1

filters=16

size=3

stride=1

pad=1

activation=leaky

[maxpool]

size=2

stride=2

[convolutional]

batch_normalize=1

filters=32

size=3

stride=1

pad=1

activation=leaky

[maxpool]

size=2

stride=2

[convolutional]

batch_normalize=1

filters=64

size=3

stride=1

pad=1

activation=leaky

[maxpool]

size=2

stride=2

[convolutional]

batch_normalize=1

filters=128

size=3

stride=1

pad=1

activation=leaky

[maxpool]

size=2

stride=2

[convolutional]

batch_normalize=1

filters=256

size=3

stride=1

pad=1

activation=leaky

[maxpool]

size=2

stride=2

[convolutional]

batch_normalize=1

filters=512

size=3

stride=1

pad=1

activation=leaky

[maxpool]

size=2

stride=1

[convolutional]

batch_normalize=1

filters=1024

size=3

stride=1

pad=1

activation=leaky

###########

[convolutional]

batch_normalize=1

filters=256

size=1

stride=1

pad=1

activation=leaky

[convolutional]

batch_normalize=1

filters=512

size=3

stride=1

pad=1

activation=leaky

[convolutional]

size=1

stride=1

pad=1

filters=21

activation=linear

[yolo]

mask = 3,4,5

anchors = 10,14, 23,27, 37,58, 81,82, 135,169, 344,319

classes=2

num=6

jitter=.3

ignore_thresh = .7

truth_thresh = 1

random=0

[route]

layers = -4

[convolutional]

batch_normalize=1

filters=128

size=1

stride=1

pad=1

activation=leaky

[upsample]

stride=2

[route]

layers = -1, 8

[convolutional]

batch_normalize=1

filters=256

size=3

stride=1

pad=1

activation=leaky

[convolutional]

size=1

stride=1

pad=1

filters=21

activation=linear

[yolo]

mask = 0,1,2

anchors = 10,14, 23,27, 37,58, 81,82, 135,169, 344,319

classes=2

num=6

jitter=.3

ignore_thresh = .7

truth_thresh = 1

random=0

Start training by running the following in a terminal:

./darknet detector train cfg/voc.data cfg/yolov3-tiny.cfg | tee person_train_log.txt

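Once training has saved a weights file in backup, test it on an image (replace <image path> with the path to your image):

./darknet detect cfg/yolov3-tiny.cfg backup/yolov3-tiny_10000.weights <image path>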