[Large Model Series 01] Migrating ChatGLM-6B to Ascend

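1. About ChatGLM-6B

ChatGLM-6B is a language model with 6.2 billion parameters. Its Chinese dialogue ability is very strong for a model of this size.

On Ascend, generation speed is about 0.1 s/token.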

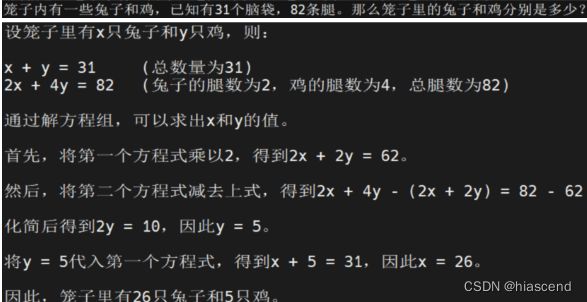

Chickens-and-rabbits-in-one-cage problem: the final answer is wrong, but the reasoning comes close.

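2. Download

Hugging Face: https://huggingface.co/THUDM/chatglm-6b (hosts the model)

GitHub: https://github.com/THUDM/ChatGLM-6B (provides fine-tuning, a web demo, and other features)

Dependencies: pip install protobuf==3.20.0 transformers==4.26.1 icetk cpm_kernels

Weights: split into eight files, downloaded automatically through Hugging Face or manually from https://cloud.tsinghua.edu.cn/d/fb9f16d6dc8f482596c2/

Note: the official chatglm-6b code and weight files were changed recently, and the latest weight files cannot be used with the adaptation code below! To use the latest weights, the adaptation has to be redone against the latest official code.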
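Because the adaptation depends on specific versions, it can help to confirm that the installed packages match the pinned ones before going further. The following is a minimal sketch, not part of the original write-up: `PINS` and `find_mismatches` are hypothetical names, and only the two packages that the pip command pins are checked.

```python
from importlib import metadata

# Pins copied from the pip command above; icetk and cpm_kernels are unpinned there.
PINS = {"protobuf": "3.20.0", "transformers": "4.26.1"}


def find_mismatches(pins, get_version=metadata.version):
    """Return {package: (wanted, found)} for every pin that is not satisfied."""
    mismatches = {}
    for name, wanted in pins.items():
        try:
            found = get_version(name)
        except metadata.PackageNotFoundError:
            found = None  # the package is not installed at all
        if found != wanted:
            mismatches[name] = (wanted, found)
    return mismatches


if __name__ == "__main__":
    for name, (wanted, found) in find_mismatches(PINS).items():
        print(f"{name}: expected {wanted}, found {found}")
```

The `get_version` parameter exists only so the check can be exercised without touching the real environment.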

3. Usage example

    from transformers import AutoTokenizer, AutoModel

    tokenizer = AutoTokenizer.from_pretrained("THUDM/chatglm-6b", trust_remote_code=True)
    model = AutoModel.from_pretrained("THUDM/chatglm-6b", trust_remote_code=True).half().cuda()

    # First turn: "Hello"
    response, history = model.chat(tokenizer, "你好", history=[])
    print(response)

    # Second turn: "What should I do if I can't sleep at night?"
    response, history = model.chat(tokenizer, "晚上睡不着应该怎么办", history=history)
    print(response)
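The example above carries the conversation forward by passing the `history` returned from one `model.chat` call into the next. A minimal sketch of that pattern follows. `run_conversation` and `echo_chat` are hypothetical helpers introduced only for illustration, and the `(prompt, response)` history entries produced by the stand-in are an assumption, not something the source specifies.

```python
def run_conversation(chat_fn, prompts):
    """Send prompts in order, feeding each returned history into the next call."""
    history = []
    responses = []
    for prompt in prompts:
        response, history = chat_fn(prompt, history)
        responses.append(response)
    return responses, history


def echo_chat(prompt, history):
    """Hypothetical stand-in for model.chat: echoes the prompt and extends history."""
    response = f"echo: {prompt}"
    return response, history + [(prompt, response)]


responses, history = run_conversation(echo_chat, ["first", "second"])
```

With the real model, the stand-in could be replaced by `lambda q, h: model.chat(tokenizer, q, history=h)`.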

4. Migration and adaptation

4.1 The adaptation and optimization described here are based on the following version

    git clone https://huggingface.co/THUDM/chatglm-6b
    cd chatglm-6b
    git reset --hard 2449bdc9d85103734ae987bdc94eafa7fec2145d

4.2 Changes: two files and the launch command

4.2.1 Changes to modeling_chatglm.py

    def gelu_impl(x):
        """OpenAI's gelu implementation."""
        return 0.5 * x * (1.0 + torch.tanh(0.7978845608028654 * x * (1.0 + 0.044715 * x * x)))

Changed to the faster fast_gelu:

    def gelu_impl(x):
        """OpenAI's gelu implementation."""
        return torch.fast_gelu(x)
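The original `gelu_impl` is the tanh approximation of GELU, and swapping in a different kernel can shift outputs slightly. As a reference point, the sketch below compares that tanh formula with the exact erf-based GELU in plain Python. It does not call `fast_gelu` and makes no claim about which formula the NPU kernel uses; `max_abs_error` is a hypothetical helper.

```python
import math


def gelu_tanh(x):
    # Same formula as the original gelu_impl; 0.7978845608028654 is sqrt(2 / pi).
    return 0.5 * x * (1.0 + math.tanh(0.7978845608028654 * x * (1.0 + 0.044715 * x * x)))


def gelu_exact(x):
    # Exact GELU: x * Phi(x), where Phi is the standard normal CDF.
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


def max_abs_error(approx, reference, points):
    """Largest absolute difference between two scalar functions over the points."""
    return max(abs(approx(p) - reference(p)) for p in points)


# Sample the range [-6, 6] in steps of 0.1.
points = [i / 10.0 for i in range(-60, 61)]
error = max_abs_error(gelu_tanh, gelu_exact, points)
print(f"max |tanh approximation - exact| on [-6, 6]: {error:.2e}")
```

The same helper could be pointed at values copied back from the device to judge whether the faster kernel stays within an acceptable tolerance for the model.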

    t = torch.arange(seq_len, device=x.device, dtype=self.inv_freq.dtype)

Changed to (building the range on the CPU is faster here):

    t = torch.arange(seq_len, device='cpu').npu().half()

    cos_cached = emb.cos()[:, None, :]
    sin_cached = emb.sin()[:, None, :]

Changed to (faster):

    cos_cached = emb.cos().unsqueeze(1)
    sin_cached = emb.sin().unsqueeze(1)

    matmul_result = torch.baddbmm(
        matmul_result,
        query_layer.transpose(0, 1),  # [b * np, sq, hn]
        key_layer.transpose(0, 1).transpose(1, 2),  # [b * np, hn, sk]
        beta=0.0,
        alpha=1.0,
    )

Changed to:

    matmul_result = torch.bmm(query_layer.transpose(0, 1), key_layer.permute(1, 2, 0))
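Two things make this rewrite safe. With `beta=0.0` and `alpha=1.0`, `baddbmm` reduces to a plain batched matrix product, and a single `permute(1, 2, 0)` yields the same axis order as the chained transposes. The second point can be checked without any tensors by tracking axis labels, as in this small sketch. The helper names are hypothetical, and the starting layout `[sk, b * np, hn]` is inferred from the shape comments in the original code.

```python
def transpose(axes, i, j):
    """Swap two axes, mirroring Tensor.transpose(i, j) on a tuple of labels."""
    swapped = list(axes)
    swapped[i], swapped[j] = swapped[j], swapped[i]
    return tuple(swapped)


def permute(axes, order):
    """Reorder axes, mirroring Tensor.permute(*order) on a tuple of labels."""
    return tuple(axes[k] for k in order)


# Assumed layout of key_layer before any transpose: [sk, b * np, hn].
key_axes = ("sk", "b*np", "hn")

chained = transpose(transpose(key_axes, 0, 1), 1, 2)  # original code path
single = permute(key_axes, (1, 2, 0))                 # rewritten code path

print(chained, single)
```

Both paths end in `[b * np, hn, sk]`, which is the layout the second operand of `bmm` needs.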

4.2.2 Changes to the original example

4.2.2.1 Original

    from transformers import AutoTokenizer, AutoModel

    tokenizer = AutoTokenizer.from_pretrained("THUDM/chatglm-6b", trust_remote_code=True)
    model = AutoModel.from_pretrained("THUDM/chatglm-6b", trust_remote_code=True).half().cuda()

    response, history = model.chat(tokenizer, "你好", history=[])
    print(response)

    response, history = model.chat(tokenizer, "晚上睡不着应该怎么办", history=history)
    print(response)

4.2.2.2 Modified

    import torch

    # Adapt to the Ascend NPU
    import torch_npu
    from torch_npu.contrib import transfer_to_npu

    # Device index used in this setup; adjust to the card that is available
    torch.npu.set_device(torch.device("npu:2"))

    # Use binary (precompiled) operators to avoid the compilation problems caused by dynamic shapes
    torch.npu.set_compile_mode(jit_compile=False)
    option = {}
    option["NPU_FUZZY_COMPILE_BLACKLIST"] = "Tril"
    torch.npu.set_option(option)

    from transformers import AutoTokenizer, AutoModel

    # Local path of the checkout from section 4.1
    tokenizer = AutoTokenizer.from_pretrained("/home/hj/GLM/chatglm-6b", trust_remote_code=True)
    model = AutoModel.from_pretrained("/home/hj/GLM/chatglm-6b", trust_remote_code=True).half().npu()

    # Patch the TopPLogitsWarper in transformers
    def __call__(self, input_ids: torch.LongTensor, scores: torch.FloatTensor) -> torch.FloatTensor:
        sorted_logits, sorted_indices = torch.sort(scores, descending=False)
        # cumulative_probs = sorted_logits.softmax(dim=-1).cumsum(dim=-1)
        # Run cumsum on the CPU in float32, then move the result back to the original device and dtype
        cumulative_probs = sorted_logits.softmax(dim=-1).cpu().float().cumsum(dim=-1).to(sorted_logits.device).to(sorted_logits.dtype)

        # Remove tokens with cumulative top_p above the threshold (token with 0 are kept)
        sorted_indices_to_remove = cumulative_probs <= (1 - self.top_p)
        if self.min_tokens_to_keep > 1:
            # Keep at least min_tokens_to_keep
            sorted_indices_to_remove[..., -self.min_tokens_to_keep :] = 0

        # scatter sorted tensors to original indexing
        indices_to_remove = sorted_indices_to_remove.scatter(1, sorted_indices, sorted_indices_to_remove)
        scores = scores.masked_fill(indices_to_remove, self.filter_value)
        return scores

    import transformers
    transformers.generation.TopPLogitsWarper.__call__ = __call__

    # Optimize the ND / NZ data layout to eliminate TransData operators
    for name, module in model.named_modules():
        if isinstance(module, torch.nn.Linear):
            # Format 29 is FRACTAL_NZ
            module.weight.data = module.weight.data.npu_format_cast(29)

    for name, module in model.named_modules():
        if isinstance(module, torch.nn.Embedding):
            # Format 2 is ND
            module.weight.data = module.weight.data.npu_format_cast(2)

    response, history = model.chat(tokenizer, "你好", history=[])
    print(response)

    response, history = model.chat(tokenizer, "晚上睡不着应该怎么办", history=history)
    print(response)
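The patched `TopPLogitsWarper` above sorts scores in ascending order and drops every token whose cumulative probability stays at or below `1 - top_p`. The pure-Python sketch below mirrors that logic on a plain list so the selection rule can be followed without a device. `top_p_keep_mask` is a hypothetical helper, and it returns a keep mask rather than filtered scores.

```python
import math


def top_p_keep_mask(logits, top_p, min_tokens_to_keep=1):
    """Return a list of booleans: True where the token survives top-p filtering."""
    # Numerically stable softmax over the logits.
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    probs = [e / total for e in exps]

    # Ascending sort, as in the patched warper (descending=False).
    order = sorted(range(len(probs)), key=lambda i: probs[i])

    # Mark the low-probability tail whose cumulative mass is <= 1 - top_p.
    remove_sorted = []
    cumulative = 0.0
    for idx in order:
        cumulative += probs[idx]
        remove_sorted.append(cumulative <= 1.0 - top_p)

    # Always keep the min_tokens_to_keep most probable tokens.
    if min_tokens_to_keep > 1:
        for rank in range(max(0, len(order) - min_tokens_to_keep), len(order)):
            remove_sorted[rank] = False

    # Scatter the decision back to the original token positions.
    keep = [True] * len(probs)
    for rank, idx in enumerate(order):
        keep[idx] = not remove_sorted[rank]
    return keep


example_logits = [math.log(p) for p in (0.5, 0.3, 0.15, 0.05)]
mask = top_p_keep_mask(example_logits, top_p=0.9)
```

In the real warper the removed positions are then filled with `filter_value`, so they cannot be sampled.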

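4.2.3 Changing the launch command

Launch the task with CPU core binding instead of launching it directly (x86 CPUs do not need this optimization). The 800-9000 server used here has an ARM-based Kunpeng CPU with many cores but relatively weak single-core performance, so frequent migration between cores hurts performance. The command below pins the task to cores 0-23.

Launch command (test.py is the name of the example file):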

taskset -c 0-23 python3 test.py
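The same pinning can also be applied from inside the script on Linux through the standard library, which may be convenient when the launch command cannot be changed. This is a sketch rather than the method used in this article; `parse_cpu_list` and `pin_current_process` are hypothetical helper names.

```python
import os


def parse_cpu_list(spec):
    """Parse a taskset-style list such as "0-3,8" into a set of core ids."""
    cores = set()
    for part in spec.split(","):
        if "-" in part:
            start, end = part.split("-")
            cores.update(range(int(start), int(end) + 1))
        else:
            cores.add(int(part))
    return cores


def pin_current_process(spec):
    """Restrict this process to the requested cores it is currently allowed to use."""
    if not hasattr(os, "sched_setaffinity"):
        return None  # Linux-only API; do nothing elsewhere
    wanted = parse_cpu_list(spec) & os.sched_getaffinity(0)
    if wanted:
        os.sched_setaffinity(0, wanted)
    return wanted
```

Calling `pin_current_process("0-23")` at the top of test.py, before the model is loaded, would have roughly the effect of the `taskset` command above for that process.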