Open-Source General-Purpose Weibo Scraper

import time

from selenium import webdriver
from selenium.webdriver.common.by import By

local_time = time.strftime('%Y-%m-%d %H:%M:%S', time.localtime())

# Search keyword and the number of posts to scrape
key_word = input("Please enter your key words:")
number = int(input("Number:"))

url = f"https://m.weibo.cn/search?containerid=100103type%3D1%26q%3D{key_word}"

# Run Chrome without opening a visible window
options = webdriver.ChromeOptions()
options.add_argument('--headless')
driver = webdriver.Chrome(options=options)

driver.get(url)
time.sleep(3)  # wait for the search results to load

js = "window.scrollBy(0,800)"  # scroll down 800 pixels; scrollBy takes x, y offsets
driver.execute_script(js)
time.sleep(1)

# Result cards 3 to 13 share one layout; the card index is substituted into the XPath.
for i in range(3, 14, 1):
    try:
        driver.execute_script("window.scrollBy(0,800)")
        time.sleep(0.1)
        media_name = driver.find_element(By.XPATH,
                                         f"//*[@id='app']/div[1]/div[1]/div[{i}]/div/div/div/header/div/div/a/h3").get_attribute(
            "textContent")
        # Observed XPaths for the author name:
        # //*[@id="app"]/div[1]/div[1]/div[3]/div/div/div/header/div/div/a/h3
        # //*[@id="app"]/div[1]/div[1]/div[4]/div/div/div/header/div/div/a/h3
        # //*[@id="app"]/div[1]/div[1]/div[12]/div/div/div/header/div/div/a/h3
        # //*[@id="app"]/div[1]/div[1]/div[13]/div/div/div[1]/div/div/div/header/div/div/a/h3
        element_content = driver.find_element(By.XPATH,
                                              f"//*[@id='app']/div[1]/div[1]/div[{i}]/div/div/div/article/div[2]/div[1]")
        # Observed XPaths for the post body:
        # //*[@id="app"]/div[1]/div[1]/div[3]/div/div/div/article/div[2]/div[1]
        # //*[@id="app"]/div[1]/div[1]/div[12]/div/div/div/article/div[2]/div[1]
        content = element_content.get_attribute("textContent")
        print("------------------------------------------------------------------------------------")
        print(i - 2, "Author:", media_name, "Post:", content)
        # Append each post to <keyword>.txt; UTF-8 keeps Chinese text intact on every platform
        with open("%s.txt" % key_word, "a", encoding="utf-8") as file:
            file.write(content + "\n")
    except Exception:
        # Skip cards whose layout does not match the XPath above
        pass

for i in range(14, number + 4, 1):
    try:
        driver.execute_script("window.scrollBy(0,800)")
        time.sleep(0.1)
        media_name_element = driver.find_element(By.XPATH,
                                                 f"//*

*****************************************************************************************
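The first loop above builds two XPath expressions per result card by substituting the card index, then appends the post text to a file. The following is a minimal sketch of that pattern pulled into helpers. The names `CARD`, `author_xpath`, `content_xpath`, and `append_post` are hypothetical and not part of the original script; the XPath strings are copied from the code shown above and may stop matching if Weibo changes its page layout.

```python
# Shared prefix of the XPaths used in the script; {i} is the result-card index.
CARD = "//*[@id='app']/div[1]/div[1]/div[{i}]/div/div/div"


def author_xpath(i: int) -> str:
    """XPath of the author name for result card i (hypothetical helper)."""
    return CARD.format(i=i) + "/header/div/div/a/h3"


def content_xpath(i: int) -> str:
    """XPath of the post body for result card i (hypothetical helper)."""
    return CARD.format(i=i) + "/article/div[2]/div[1]"


def append_post(path: str, content: str) -> None:
    """Append one post per line, written as UTF-8 so Chinese text is preserved."""
    with open(path, "a", encoding="utf-8") as f:
        f.write(content + "\n")
```

Inside the loop, these would be used as `driver.find_element(By.XPATH, author_xpath(i))` and `append_post("%s.txt" % key_word, content)`.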

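To use the rest of the code, open the Xianyu link below and purchase it: [Xianyu] https://m.tb.cn/h.5iteorv?tk=jP8lWXupKJK CZ3457 "I posted on Xianyu: [Python Weibo scraper code share, here are some examples, I searched Weibo key]"

Click the link to open it directly.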

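Note: you need to install ChromeDriver beforehand and add it to your system PATH environment variable; look up an online tutorial for the setup steps.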
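A quick way to confirm the driver is reachable before launching the scraper is to look it up on PATH. This is a minimal sketch using only the standard library; the function name `find_chromedriver` is hypothetical, and the executable name `chromedriver` is an assumption about how the driver was installed.

```python
import shutil
from typing import Optional


def find_chromedriver(name: str = "chromedriver") -> Optional[str]:
    """Return the full path of the driver executable, or None if it is not on PATH."""
    return shutil.which(name)


if __name__ == "__main__":
    path = find_chromedriver()
    if path is None:
        print("chromedriver was not found on PATH; install it and add its folder to PATH.")
    else:
        print("Found chromedriver at:", path)
```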

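Prompts: enter the topic keyword you want to search for, then the number of Weibo posts to scrape. After a short wait, the script starts scraping the data.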
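The script interpolates the raw keyword into the search URL and converts the count with a bare `int()`. The sketch below shows one way to harden both prompts: percent-encode the keyword so Chinese characters, spaces, and symbols such as `&` survive in the URL, and reject non-positive counts. The helper names `build_search_url` and `parse_count` are hypothetical; the URL prefix is the one used in the script.

```python
from urllib.parse import quote

# Prefix copied from the script: containerid=100103type=1&q=<keyword>, percent-encoded.
SEARCH_PREFIX = "https://m.weibo.cn/search?containerid=100103type%3D1%26q%3D"


def build_search_url(key_word: str) -> str:
    """Return the search URL with the keyword percent-encoded (UTF-8)."""
    return SEARCH_PREFIX + quote(key_word, safe="")


def parse_count(text: str) -> int:
    """Parse the requested number of posts; raise ValueError if it is not a positive integer."""
    count = int(text.strip())
    if count < 1:
        raise ValueError("the number of posts must be at least 1")
    return count


if __name__ == "__main__":
    print(build_search_url("Python 爬虫"))
    print(parse_count(" 20 "))
```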

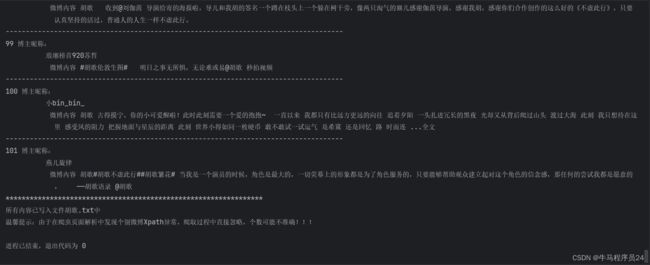

如下是我的一个示例截图:

If you need the complete source code, scan the QR code to follow me on Xianyu and purchase it.