Lesson 6: 尚硅谷 K8s Study - K8s Resource Scheduler and Security Authentication


tags:
- golang
- 2019尚硅谷

categories:
- K8s
- Cluster Scheduling
- Security Authentication

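Contents

- Lesson 6: 尚硅谷 K8s Study - K8s Resource Scheduler and Security Authentication
  - Section 1: Introduction to Cluster Scheduling
    - 1.1 Scheduler: Scheduling Overview
    - 1.2 Scheduler: The Scheduling Process
  - Section 2: Scheduling Affinity
    - 2.1 Node Affinity
    - 2.2 Pod Affinity
    - 2.3 Introduction to Taints
    - 2.4 Introduction to Tolerations
    - 2.5 Assigning Pods to Specific Nodes
  - Section 3: Cluster Security Mechanisms
    - 3.1 Security Mechanisms: Authentication
    - 3.2 Security Mechanisms: Authorization
    - 3.3 Role and ClusterRole, RoleBinding and ClusterRoleBinding
    - 3.4 Resources and Subjects
    - 3.5 Practice: Creating a User Who Can Only Manage the dev Namespace
    - 3.6 Security Mechanisms: Admission Control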

Section 1: Introduction to Cluster Scheduling

1.1 Scheduler: Scheduling Overview

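- The Scheduler is Kubernetes' scheduling component. Its main job is to assign the pods you define to nodes in the cluster. That sounds simple, but there are many issues to consider:

- Fairness: how to make sure every node can be assigned pods

- Efficient resource use: make maximal use of all cluster resources

- Efficiency: scheduling should perform well and finish scheduling large batches of pods as quickly as possible

- Flexibility: let users control the scheduling logic to suit their own needs

- The Scheduler runs as a separate program. Once started, it continuously watches the API Server for pods whose PodSpec.NodeName is empty, and for each such pod it creates a binding that states which node the pod should be placed on.

- A pod whose PodSpec.NodeName is not empty has already been assigned to a node by hand, so the Scheduler does not need to be involved.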
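The watch-and-bind behaviour described above can be pictured as a toy loop. This is a minimal sketch, not the real kube-scheduler: `Pod`, `Binding`, `schedule_pending` and the `pick` callback are hypothetical names. The real scheduler watches the API Server and posts Binding objects rather than iterating over a Python list.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Pod:
    name: str
    node_name: str = ""  # stands in for PodSpec.NodeName; "" means not yet scheduled


@dataclass
class Binding:
    pod: str
    node: str


def schedule_pending(pods: List[Pod], nodes: List[str],
                     pick: Callable[[Pod, List[str]], Optional[str]]) -> List[Binding]:
    """One pass of a toy scheduling loop: bind each pod whose node_name is empty."""
    bindings = []
    for pod in pods:
        if pod.node_name:
            # NodeName was set by hand, so the scheduler stays out of it.
            continue
        node = pick(pod, nodes)
        if node is not None:  # None means no node was found and the pod stays Pending
            bindings.append(Binding(pod=pod.name, node=node))
    return bindings


if __name__ == "__main__":
    pods = [Pod("web-1"), Pod("web-2", node_name="k8s-node1")]
    # Hypothetical placement policy: always take the first node.
    print(schedule_pending(pods, ["k8s-node1", "k8s-node2"], lambda pod, nodes: nodes[0]))
```

In this sketch, the `pick` callback is where the predicate and priority phases from 1.2 would plug in.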

1.2 Scheduler: The Scheduling Process

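- The scheduling process has two phases. If any step fails, an error is returned immediately:

- predicate: first filter out the nodes that do not meet the requirements

- priority: then pick the highest-priority node from those that remain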

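- **Predicate** can use a series of algorithms:

- PodFitsResources: whether the node's remaining resources exceed the resources the pod requests

- PodFitsHost: if the pod specifies a NodeName, check whether the node's name matches it

- PodFitsHostPorts: whether the ports already in use on the node conflict with the ports the pod requests

- PodSelectorMatches: filter out nodes that do not match the labels specified by the pod

- NoDiskConflict: volumes already mounted must not conflict with the volumes the pod specifies, unless both are read-only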

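- Note: if no node is suitable during the predicate phase, the pod stays in the Pending state and scheduling is retried until some node satisfies the conditions. If more than one node passes this phase, the process continues with the priorities phase.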
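The predicate phase is an AND over filter functions: a node survives only if every predicate returns true. The sketch below models three of the predicates listed above with simplified, hypothetical data classes. Resources are plain integers (for example millicores and MiB), and the real scheduler tracks far more state than this.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Set


@dataclass
class Node:
    name: str
    allocatable: Dict[str, int]   # e.g. {"cpu": 4000, "memory": 8192} in millicores / MiB
    requested: Dict[str, int]     # sum of the requests of pods already on the node
    used_host_ports: Set[int] = field(default_factory=set)


@dataclass
class PodSpec:
    requests: Dict[str, int]
    host_ports: Set[int] = field(default_factory=set)
    node_name: str = ""


def pod_fits_resources(pod: PodSpec, node: Node) -> bool:
    # Remaining capacity (allocatable - requested) must cover every request of the pod.
    return all(node.allocatable.get(res, 0) - node.requested.get(res, 0) >= amount
               for res, amount in pod.requests.items())


def pod_fits_host(pod: PodSpec, node: Node) -> bool:
    # Only constrains pods that name a node explicitly.
    return not pod.node_name or pod.node_name == node.name


def pod_fits_host_ports(pod: PodSpec, node: Node) -> bool:
    # No host port requested by the pod may already be in use on the node.
    return not (pod.host_ports & node.used_host_ports)


PREDICATES: List[Callable[[PodSpec, Node], bool]] = [
    pod_fits_resources, pod_fits_host, pod_fits_host_ports,
]


def filter_nodes(pod: PodSpec, nodes: List[Node]) -> List[str]:
    """Predicate phase: keep only the nodes that pass every predicate.
    An empty list means the pod stays Pending and is retried later."""
    return [n.name for n in nodes if all(check(pod, n) for check in PREDICATES)]
```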

- **Priorities** rank the remaining nodes by priority

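- A priority configuration is a series of key-value pairs: the key is the name of the priority item and the value is its weight (how important the item is). These priority items include:

- LeastRequestedPriority: computes a score from CPU and memory utilization; the lower the utilization, the higher the score. In other words, this item favors nodes with a smaller share of their resources in use

- BalancedResourceAllocation: the closer a node's CPU and memory utilization are to each other, the higher the score. It should be used together with the item above, not on its own

- ImageLocalityPriority: favors nodes that already have the images the pod needs; the larger the total size of those images, the higher the score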

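- The final result is obtained by combining the scores of all priority items with their weights. These are only the common algorithms; many more are documented on the official website.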
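Here is a sketch of how weighted priorities combine into a ranking. The two scoring functions follow the classic behaviour described above and assume a 0 to 10 scale per item. Exact formulas and scales differ between Kubernetes versions, so treat the numbers as illustrative. `requested` here means usage after the pod has been placed on the node.

```python
from typing import Dict, List, Tuple

MAX_SCORE = 10  # assumption: older schedulers scored each item from 0 to 10


def fractions(capacity: Dict[str, float], requested: Dict[str, float]) -> Tuple[float, float]:
    """CPU and memory utilization fractions if the pod were placed on the node."""
    return requested["cpu"] / capacity["cpu"], requested["memory"] / capacity["memory"]


def least_requested(capacity: Dict[str, float], requested: Dict[str, float]) -> float:
    cpu, mem = fractions(capacity, requested)
    # Lower utilization gives a higher score; CPU and memory are averaged.
    return ((1 - cpu) * MAX_SCORE + (1 - mem) * MAX_SCORE) / 2


def balanced_allocation(capacity: Dict[str, float], requested: Dict[str, float]) -> float:
    cpu, mem = fractions(capacity, requested)
    # The closer CPU and memory utilization are, the higher the score.
    return MAX_SCORE - abs(cpu - mem) * MAX_SCORE


# Priority name -> (scoring function, weight), mirroring the key/value description above.
PRIORITIES = {
    "LeastRequestedPriority": (least_requested, 1),
    "BalancedResourceAllocation": (balanced_allocation, 1),
}


def rank(nodes: Dict[str, Tuple[Dict[str, float], Dict[str, float]]]) -> List[Tuple[str, float]]:
    """Priority phase: weighted sum over every priority item, best node first."""
    scored = []
    for name, (capacity, requested) in nodes.items():
        total = sum(weight * fn(capacity, requested) for fn, weight in PRIORITIES.values())
        scored.append((name, total))
    return sorted(scored, key=lambda item: item[1], reverse=True)
```

Both nodes below have the same average utilization. `n1` wins only because its CPU and memory are balanced, which shows why the two items are meant to be used together.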

Section 2: Scheduling Affinity

2.1 Node Affinity

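- Node affinity: put simply, it specifies which nodes a pod should be scheduled onto. nodeAffinity (pod.spec.affinity.nodeAffinity) comes in two kinds:

- preferredDuringSchedulingIgnoredDuringExecution: soft policy [I would like to go to this node]

- requiredDuringSchedulingIgnoredDuringExecution: hard policy [I must go to this node]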

- Node affinity hard-policy example: requiredDuringSchedulingIgnoredDuringExecution

- Check where the pod was placed with: kubectl get pod -o wide

apiVersion: v1
kind: Pod
metadata:
  name: affinity
  labels:
    app: node-affinity-pod
spec:
  containers:
  - name: with-node-affinity
    image: hub.qnhyn.com/library/myapp:v1
  affinity:
    # Use node affinity
    nodeAffinity:
      # Hard policy
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
        # key is a node label
        # This means the pod must never be scheduled onto the k8s-node2 node
        - matchExpressions:
          - key: kubernetes.io/hostname # label key; list node labels with kubectl get node --show-labels
            operator: NotIn
            values:
            - k8s-node2

- Node affinity soft-policy example: preferredDuringSchedulingIgnoredDuringExecution

- Check where the pod was placed with: kubectl get pod -o wide

apiVersion: v1
kind: Pod
metadata:
  name: affinity
  labels:
    app: node-affinity-pod
spec:
  containers:
  - name: with-node-affinity
    image: hub.qnhyn.com/library/myapp:v1
  affinity:
    # Declare a soft node-affinity policy
    nodeAffinity:
      preferredDuringSchedulingIgnoredDuringExecution:
      # This preference has weight 1
      - weight: 1
        preference:
          # Preferably schedule onto a node labeled source=k8s-node03
          matchExpressions:
          - key: source
            operator: In
            values:
            - k8s-node03

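- If a soft policy and a hard policy are both present, the hard policy must be satisfied first. The soft policy is then applied among the nodes that pass.

- Key/value operators

- In: the label's value is in the given list

- NotIn: the label's value is not in the given list

- Gt: the label's value is greater than the given value

- Lt: the label's value is less than the given value

- Exists: the label exists

- DoesNotExist: the label does not exist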
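The operators and the "hard first, then soft" rule can be sketched as follows. This is a simplified model that assumes only `matchExpressions` on node labels (no `matchFields`). Expressions inside one term are ANDed, `nodeSelectorTerms` are ORed, and soft preferences add their weight when matched. All function names are hypothetical.

```python
from typing import Dict, List, Optional, Tuple

Labels = Dict[str, str]
Term = List[dict]  # one term: a list of {"key", "operator", "values"} dicts


def match_expression(labels: Labels, key: str, operator: str,
                     values: Optional[List[str]] = None) -> bool:
    """Evaluate one matchExpressions entry against a node's labels."""
    values = values or []
    present = key in labels
    if operator == "In":
        return present and labels[key] in values
    if operator == "NotIn":
        return not present or labels[key] not in values
    if operator == "Exists":
        return present
    if operator == "DoesNotExist":
        return not present
    if operator in ("Gt", "Lt"):
        # Gt/Lt take a single value and compare the label value as an integer.
        try:
            actual, bound = int(labels[key]), int(values[0])
        except (KeyError, IndexError, ValueError):
            return False
        return actual > bound if operator == "Gt" else actual < bound
    raise ValueError(f"unknown operator: {operator}")


def term_matches(labels: Labels, term: Term) -> bool:
    # Expressions inside one term are ANDed.
    return all(match_expression(labels, **expr) for expr in term)


def choose_node(nodes: Dict[str, Labels], required: List[Term],
                preferred: List[Tuple[int, Term]]) -> Optional[str]:
    # Hard policy first: the required terms are ORed (no terms means no constraint).
    candidates = [name for name, labels in nodes.items()
                  if not required or any(term_matches(labels, t) for t in required)]
    if not candidates:
        return None  # nothing satisfies the hard policy, so the pod stays Pending
    # Soft policy second: sum the weights of the preferences each candidate satisfies.
    return max(candidates, key=lambda name: sum(
        weight for weight, term in preferred if term_matches(nodes[name], term)))
```

With the two manifests above combined, `k8s-node2` is excluded by the hard rule. Among the rest, a node labeled `source=k8s-node03` wins.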

2.2 Pod Affinity

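- Pod affinity decides which pods a pod may be deployed alongside in the same topology domain

- Topology domain: defined by node labels. It can be a single host, a group of hosts sharing a label, or a cluster, zone, etc. made up of multiple hosts

- Put simply: if some pod is already on a node, I want to be on that node too; or, if your pod is on a node, I do not want to be on the same node as you

- Pod affinity/anti-affinity (pod.spec.affinity.podAffinity/podAntiAffinity) also comes in two kinds:

- preferredDuringSchedulingIgnoredDuringExecution: soft policy

- requiredDuringSchedulingIgnoredDuringExecution: hard policy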

apiVersion: v1
kind: Pod
metadata:
  name: pod-3
  labels:
    app: pod-3
spec:
  containers:
  - name: pod-3
    image: hub.qnhyn.com/library/myapp:v1
  affinity:
    # A pod affinity rule
    podAffinity:
      # Hard policy: must share a topology domain with pods labeled app=pod-1 (check with kubectl get pod --show-labels)
      requiredDuringSchedulingIgnoredDuringExecution:
      - labelSelector:
          matchExpressions:
          - key: app
            operator: In
            values:
            - pod-1
        topologyKey: kubernetes.io/hostname
    # A pod anti-affinity rule
    podAntiAffinity:
      # Soft policy
      preferredDuringSchedulingIgnoredDuringExecution:
      - weight: 1
        podAffinityTerm:
          labelSelector:
            matchExpressions:
            - key: app
              operator: In
              values:
              - pod-2
          topologyKey: kubernetes.io/hostname

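- Comparison of affinity/anti-affinity scheduling policies

| Policy | Matches labels of | Operators | Topology domain support | Scheduling target |
|---|---|---|---|---|
| nodeAffinity | Node | In, NotIn, Exists, DoesNotExist, Gt, Lt | No | A specific node |
| podAffinity | Pod | In, NotIn, Exists, DoesNotExist | Yes | Same topology domain as the specified pods |
| podAntiAffinity | Pod | In, NotIn, Exists, DoesNotExist | Yes | Not in the same topology domain as the specified pods |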
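The `topologyKey` is what turns "next to that pod" into "in the same domain as that pod". A candidate node qualifies when its value for the topology key matches the value on some node already running a matching pod. The sketch below models the `pod-3` example under simplifying assumptions: it matches only the `app` label and uses hypothetical helper names. It is not the scheduler's actual implementation.

```python
from typing import Dict, List, Set, Tuple

Nodes = Dict[str, Dict[str, str]]          # node name -> node labels
Placed = List[Tuple[Dict[str, str], str]]  # (pod labels, node the pod runs on)


def domains_running(app: str, placed: Placed, nodes: Nodes, topology_key: str) -> Set[str]:
    """Topology-domain values that already host a pod labeled app=<app>."""
    domains = set()
    for labels, node in placed:
        value = nodes[node].get(topology_key)
        if labels.get("app") == app and value is not None:
            domains.add(value)
    return domains


def same_domain(candidate: str, app: str, placed: Placed, nodes: Nodes, topology_key: str) -> bool:
    value = nodes[candidate].get(topology_key)
    return value is not None and value in domains_running(app, placed, nodes, topology_key)


def pod3_feasible(candidate: str, placed: Placed, nodes: Nodes,
                  key: str = "kubernetes.io/hostname") -> bool:
    # Required podAffinity: must share a domain with a pod labeled app=pod-1.
    return same_domain(candidate, "pod-1", placed, nodes, key)


def pod3_score(candidate: str, placed: Placed, nodes: Nodes,
               key: str = "kubernetes.io/hostname", weight: int = 1) -> int:
    # Preferred podAntiAffinity: lose `weight` points when sharing a domain with app=pod-2.
    return -weight if same_domain(candidate, "pod-2", placed, nodes, key) else 0
```

Changing the topology key from `kubernetes.io/hostname` to a zone label widens the domain from one host to every host in that zone.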

2.3 Introduction to Taints

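- Node affinity is a property of pods (a preference or a hard requirement) that attracts them to a particular set of nodes. Taints do the opposite: they let a node repel a particular set of pods.

- Taints and tolerations work together to keep pods off unsuitable nodes. Each node can carry one or more taints, which means the node will not accept pods that do not tolerate those taints. Applying tolerations to a pod means the pod may (but is not required to) be scheduled onto nodes with matching taints.

- Unless tolerations are configured explicitly, a pod tolerates no taints by default

- The kubectl taint command sets taints on a Node. Once a Node is tainted, it and Pods repel each other: the Node can refuse to have Pods scheduled onto it, and can even evict Pods that are already running on it

- A taint's value is optional, so a taint takes one of two forms:

key=value:effect

key:effect

- Each taint has a key and a value as its label (the value may be empty), plus an effect that describes what the taint does. The taint effect currently supports three options:

- NoSchedule: Kubernetes will not schedule Pods onto a Node with this taint

- PreferNoSchedule: Kubernetes will try to avoid scheduling Pods onto a Node with this taint

- NoExecute: Kubernetes will not schedule Pods onto a Node with this taint, and will also evict Pods already running on that Node

- kubectl describe node k8s-master shows that the master node already carries a NoSchedule taint, so by default no Pods are created on it.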

# Set a taint
kubectl taint nodes node1 key1=value1:NoSchedule
# Example
kubectl taint nodes k8s-node1 check=qnhyn:NoExecute
# Look for the Taints field in the node's description
kubectl describe node k8s-node1
# Remove a taint: copy it from the describe output and append a trailing "-"
kubectl taint nodes node1 key1:NoSchedule-
# Example
kubectl taint nodes k8s-node1 check=qnhyn:NoExecute-
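To make the two taint forms and the trailing `-` concrete, here is a toy parser for the taint argument used in the commands above. It is a sketch written for these notes, not kubectl's own code. `TaintSpec` and `parse_taint` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Optional

EFFECTS = {"NoSchedule", "PreferNoSchedule", "NoExecute"}


@dataclass(frozen=True)
class TaintSpec:
    key: str
    value: str             # "" when the key:effect form is used
    effect: Optional[str]  # None only when removing every effect of a key ("key-")
    remove: bool


def parse_taint(arg: str) -> TaintSpec:
    """Parse key=value:effect or key:effect; a trailing '-' means remove the taint."""
    remove = arg.endswith("-")
    if remove:
        arg = arg[:-1]
    key_value, sep, effect = arg.partition(":")
    if sep and effect not in EFFECTS:
        raise ValueError(f"unknown effect: {effect!r}")
    if not sep and not remove:
        raise ValueError("adding a taint requires an effect")
    key, _, value = key_value.partition("=")
    return TaintSpec(key=key, value=value, effect=effect or None, remove=remove)
```

For example, `parse_taint("check=qnhyn:NoExecute-")` yields the removal of the `check=qnhyn:NoExecute` taint. `node-role.kubernetes.io/master=:PreferNoSchedule` parses to an empty value.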

2.4 Introduction to Tolerations

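- A tainted Node repels Pods according to the taint's effect (NoSchedule, PreferNoSchedule, or NoExecute), so to some degree Pods will not be scheduled onto that Node

- However, we can set tolerations on a Pod. A Pod with a toleration tolerates the matching taint and can be scheduled onto Nodes that carry it

- Being schedulable there does not mean the Pod will be scheduled there; it only keeps the possibility open

- Toleration configuration in a manifest: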

tolerations:
# Tolerate the taint key1=value1:NoSchedule
- key: "key1"
  operator: "Equal"
  value: "value1"
  effect: "NoSchedule"
# Tolerate the taint key1=value1:NoExecute;
# when the pod is due to be evicted, it may stay for another 3600 seconds
- key: "key1"
  operator: "Equal"
  value: "value1"
  effect: "NoExecute"
  # How long the Pod may keep running on the node once it is due to be evicted (valid only with NoExecute)
  tolerationSeconds: 3600
# Tolerate the taint key2:NoSchedule
- key: "key2"
  operator: "Exists"
  effect: "NoSchedule"

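- Notes

- key, value, and effect must match the taint set on the Node

- When operator is Exists, value is ignored; if operator is not specified, it defaults to Equal

- tolerationSeconds describes how long a Pod may keep running on the node once it is due to be evicted; it only applies to the NoExecute effect

- When key is not specified, taints with any key are tolerated

tolerations:
- operator: "Exists"

- When effect is not specified, taints with any effect are tolerated

tolerations:
- key: "key"
  operator: "Exists"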

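- When there are several masters, you can avoid wasting their resources with the following setting (Pods then avoid running on the masters where possible):

kubectl taint nodes Node-Name node-role.kubernetes.io/master=:PreferNoSchedule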
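The matching rules in the notes above can be sketched as a small function. The model assumes an effect must match unless the toleration leaves it empty. With `Exists`, the value is ignored, and an empty key matches every taint. With `Equal` (the default), both key and value must match. A node passes the hard filter only if every `NoSchedule`/`NoExecute` taint is tolerated; `PreferNoSchedule` only lowers the score. The names are hypothetical and `tolerationSeconds` is left out.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Taint:
    key: str
    value: str
    effect: str


@dataclass
class Toleration:
    key: str = ""
    operator: str = "Equal"   # the default when operator is omitted
    value: str = ""
    effect: str = ""          # an empty effect tolerates every effect


def tolerates(tol: Toleration, taint: Taint) -> bool:
    if tol.effect and tol.effect != taint.effect:
        return False
    if tol.operator == "Exists":
        # Value is ignored; an empty key together with Exists tolerates every taint.
        return tol.key == "" or tol.key == taint.key
    return tol.key == taint.key and tol.value == taint.value


def schedulable(tolerations: List[Toleration], taints: List[Taint]) -> bool:
    """Hard filter: every NoSchedule / NoExecute taint must be tolerated.
    PreferNoSchedule taints only affect scoring, so they are skipped here."""
    return all(any(tolerates(t, taint) for t in tolerations)
               for taint in taints if taint.effect in ("NoSchedule", "NoExecute"))
```

This is why an ordinary Pod never lands on the master: nothing tolerates its NoSchedule taint. A Pod carrying `- operator: "Exists"` can land anywhere.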

2.5 Assigning Pods to Specific Nodes

- Setting Pod.spec.nodeName places the Pod directly on the named Node (selection by node name)

- This bypasses the Scheduler's scheduling policies

- The match is mandatory

apiVersion: apps/v1
kind: Deployment
metadata:
  name: myweb
spec:
  replicas: 7
  selector:
    matchLabels:
      app: myweb
  template:
    metadata:
      labels:
        app: myweb
    spec:
      # Name the node directly: all seven Pods end up on k8s-node1
      nodeName: k8s-node1
      containers:
      - name: myweb
        image: hub.qnhyn.com/library/myapp:v1
        ports:
        - containerPort: 80

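- Pod.spec.nodeSelector: selects nodes through Kubernetes' label-selector mechanism. The scheduler matches the labels and then schedules the Pod onto a target node. This rule is a hard constraint (selection by label)

- First give a node a label: kubectl label node k8s-node1 disk=ssd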

apiVersion: apps/v1
kind: Deployment
metadata:
  name: myweb
spec:
  replicas: 7
  selector:
    matchLabels:
      app: myweb
  template:
    metadata:
      labels:
        app: myweb
    spec:
      # nodeSelector
      nodeSelector:
        disk: ssd # only nodes labeled with disk type ssd
      containers:
      - name: myweb
        image: hub.qnhyn.com/library/myapp:v1
        ports:
        - containerPort: 80
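The difference between the two manifests fits in one function. This is a simplified, hypothetical model: `nodeName` skips filtering and scoring entirely, while `nodeSelector` requires every listed key/value pair to be present in a node's labels (a logical AND). The scheduler then picks among the matching nodes.

```python
from typing import Dict, List, Optional


def placement_options(nodes: Dict[str, Dict[str, str]], node_name: Optional[str] = None,
                      node_selector: Optional[Dict[str, str]] = None) -> List[str]:
    if node_name:
        # nodeName: no filtering or scoring happens; the pod is bound to exactly this name.
        return [node_name]
    selector = node_selector or {}
    # nodeSelector: every key/value pair must appear in the node's labels.
    return [name for name, labels in nodes.items()
            if all(labels.get(k) == v for k, v in selector.items())]
```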

Section 3: Cluster Security Mechanisms

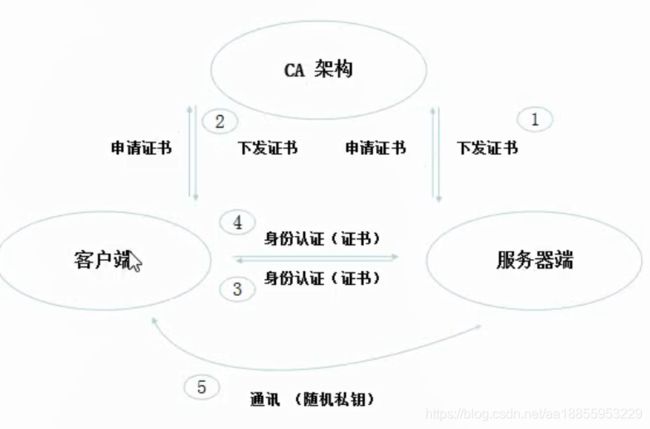

3.1 Security Mechanisms: Authentication

-

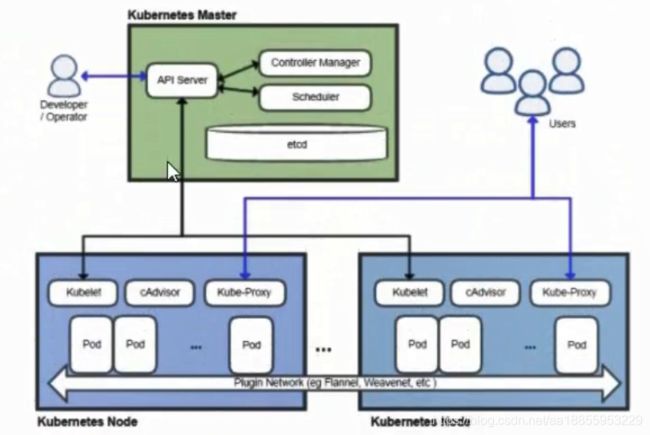

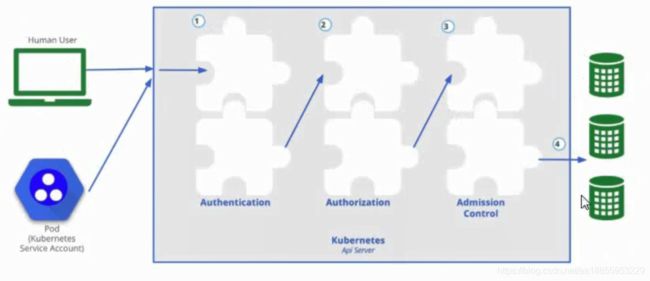

Kubernetes作为一个分布式集群的管理工具,保证集群的安全性是其一个重要的任务。 API Server是集群内部各个组件通信的中介,也是外部控制的入口。所以Kubernetes的安全机制基本就是围绕保护API Server来设计的。Kubernetes 使用了认证(Authentication) 、鉴权(Authorization) 、准入控制(Admission Control)三步来保证API Server的安全

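- Authentication supports the following methods:

- HTTP Token authentication: identifies legitimate users by a token (not very secure: tokens are kept in a file and authentication is one-way)

- HTTP Token authentication represents a client with a token: a long, specially encoded string that is hard to forge. Each token maps to a user name and is stored in a file the API Server can read. When a client makes an API call, it puts the token in an HTTP header

- HTTP Basic authentication: authenticates with a user name and password (not very secure; one-way authentication)

- The user name and password are Base64-encoded and sent to the server in the Authorization header of the HTTP request; the server decodes the string to recover the user name and password

- HTTPS certificate authentication, the strictest method: **client identity authentication based on certificates signed by the CA root certificate** (mutual authentication)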
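The two weaker methods above differ only in what goes into the `Authorization` header. The sketch below builds both headers and parses a static token file. It assumes the file format accepted by the API Server's `--token-auth-file` flag: CSV with token, user name, uid, and an optional quoted, comma-separated list of groups. The helper names are hypothetical. Note that Base64 is an encoding, not encryption, which is why Basic authentication counts as insecure.

```python
import base64
import csv
import io
from typing import Dict, Tuple


def basic_auth_header(user: str, password: str) -> str:
    # Basic auth: base64("user:password"), which anyone can decode.
    return "Basic " + base64.b64encode(f"{user}:{password}".encode()).decode()


def decode_basic(header: str) -> Tuple[str, str]:
    # What the server does: strip the scheme, base64-decode, split at the first ':'.
    user, _, password = base64.b64decode(header.split(" ", 1)[1]).decode().partition(":")
    return user, password


def bearer_header(token: str) -> str:
    # Token auth: the client sends the token itself.
    return f"Bearer {token}"


def load_token_file(text: str) -> Dict[str, dict]:
    """Parse token,user,uid[,"group1,group2"] lines into token -> identity."""
    table = {}
    for row in csv.reader(io.StringIO(text)):
        if not row:
            continue
        token, user, uid = row[:3]
        groups = row[3].split(",") if len(row) > 3 else []
        table[token] = {"user": user, "uid": uid, "groups": groups}
    return table
```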

- Two kinds of access:

- Kubernetes components accessing the API Server: kubectl, Controller Manager, Scheduler, kubelet, kube-proxy

- Pods managed by Kubernetes accessing the API Server: Pods (the dashboard also runs as a Pod)

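- Security notes

- The Controller Manager and Scheduler run on the same machine as the API Server, so they reach it directly through the API Server's insecure port, bound with --insecure-bind-address=127.0.0.1

- kubectl, kubelet, and kube-proxy all need certificates to access the API Server over HTTPS with mutual authentication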

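- Certificate issuance

- Manual issuance: HTTPS certificates are signed with the cluster's root CA

- Automatic issuance: when the kubelet first contacts the API Server, it authenticates with a token. Once that succeeds, the Controller Manager generates a certificate for the kubelet, and all later access is authenticated with that certificate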

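- kubeconfig: both a description of the cluster and the place where the credentials for authenticating to it are filled in.

- A kubeconfig file contains cluster parameters (CA certificate, API Server address), client parameters (the certificate and private key generated above), and cluster context information (cluster name, user name). Kubernetes components can switch between clusters by being started with different kubeconfig files.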
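The three parts of a kubeconfig can be seen in its structure. The sketch below builds a kubeconfig-shaped dictionary and resolves which server, user and namespace the `current-context` selects. The field names are those of the kubeconfig `v1` `Config` format. The helper functions, the default context name `kubernetes` (borrowed from the walkthrough in 3.5) and the placeholder certificate strings are assumptions for illustration.

```python
import json


def make_kubeconfig(cluster: str, server: str, ca_data: str, user: str,
                    cert_data: str, key_data: str, namespace: str,
                    context: str = "kubernetes") -> dict:
    """One cluster, one user, and a context tying them to a default namespace."""
    return {
        "apiVersion": "v1",
        "kind": "Config",
        # Cluster parameters: API Server address and the CA that signed its certificate.
        "clusters": [{"name": cluster, "cluster": {
            "server": server, "certificate-authority-data": ca_data}}],
        # Client parameters: client certificate and private key (base64 when embedded).
        "users": [{"name": user, "user": {
            "client-certificate-data": cert_data, "client-key-data": key_data}}],
        # Context: which cluster, which user, which namespace.
        "contexts": [{"name": context, "context": {
            "cluster": cluster, "user": user, "namespace": namespace}}],
        "current-context": context,
    }


def resolve(cfg: dict) -> tuple:
    """Return (server, user, namespace) selected by current-context."""
    ctx = next(c["context"] for c in cfg["contexts"] if c["name"] == cfg["current-context"])
    cluster = next(c["cluster"] for c in cfg["clusters"] if c["name"] == ctx["cluster"])
    return cluster["server"], ctx["user"], ctx.get("namespace", "default")


if __name__ == "__main__":
    cfg = make_kubeconfig("kubernetes", "https://192.168.1.10:6443", "<base64 CA>",
                          "devuser", "<base64 cert>", "<base64 key>", "dev")
    # kubectl normally writes this file as YAML; JSON is used here only to print the structure.
    print(json.dumps(cfg, indent=2))
    print(resolve(cfg))
```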

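- ServiceAccount

- Containers in Pods also access the API Server. Because Pods are created and destroyed dynamically, generating certificates for them by hand is not feasible. Kubernetes uses Service Accounts to handle authentication for Pods accessing the API Server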

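- The relationship between Secret and ServiceAccount

- Kubernetes defines a resource object called Secret, which comes in two kinds: service-account-token, used by ServiceAccounts, and Opaque, used to store user-defined confidential data. The service-account-token used by a ServiceAccount contains three parts: token, ca.crt, and namespace

- token: a JWT signed with the API Server's private key, used by the server side to authenticate requests to the API Server

- ca.crt: the root certificate, used by the client side to verify the certificate sent by the API Server

- namespace: identifies the namespace scope of this service-account-token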

kubectl get secret --all-namespaces

kubectl describe secret default-token-5gm9r --namespace=kube-system

- By default, every namespace has a ServiceAccount (named default). If a Pod does not specify a ServiceAccount when it is created, it uses the ServiceAccount of the namespace it belongs to
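Inside a Pod, the three parts appear as files under `/var/run/secrets/kubernetes.io/serviceaccount/` (`token`, `ca.crt`, `namespace`). The sketch below reads the claims of a legacy secret-based token and derives the user name and groups the API Server assigns: `system:serviceaccount:<namespace>:<name>`, plus the `system:serviceaccounts` groups. It decodes without verifying the signature, and builds an unsigned demo token, so it is for illustration only. Newer bound tokens nest these claims under a `kubernetes.io` key instead. The helper names are hypothetical.

```python
import base64
import json


def b64url_decode(segment: str) -> bytes:
    # JWT segments are base64url without padding; restore the padding before decoding.
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def b64url_encode(obj: dict) -> str:
    return base64.urlsafe_b64encode(json.dumps(obj).encode()).rstrip(b"=").decode()


def sa_identity(jwt: str) -> dict:
    """Read (WITHOUT verifying) a legacy service-account token's payload and derive
    the user name and groups the API Server associates with it."""
    payload = json.loads(b64url_decode(jwt.split(".")[1]))
    ns = payload["kubernetes.io/serviceaccount/namespace"]
    name = payload["kubernetes.io/serviceaccount/service-account.name"]
    return {"user": f"system:serviceaccount:{ns}:{name}",
            "groups": ["system:serviceaccounts", f"system:serviceaccounts:{ns}"]}


def fake_token(ns: str, name: str) -> str:
    """Build an UNSIGNED token with legacy-style claims, for demonstration only."""
    claims = {"iss": "kubernetes/serviceaccount",
              "kubernetes.io/serviceaccount/namespace": ns,
              "kubernetes.io/serviceaccount/service-account.name": name,
              "sub": f"system:serviceaccount:{ns}:{name}"}
    return f"{b64url_encode({'alg': 'none'})}.{b64url_encode(claims)}."
```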

3.2 Security Mechanisms: Authorization

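- Authentication only establishes that both parties trust each other and can communicate. Authorization determines which resources the requester has permissions on. The API Server currently supports the following authorization policies (set with the API Server startup flag --authorization-mode):

- AlwaysDeny: rejects every request; generally used for testing

- AlwaysAllow: accepts every request; can be used if the cluster needs no authorization (handy for testing)

- ABAC (Attribute-Based Access Control): matches and controls user requests against authorization rules the user configures (outdated; changing the rules requires restarting the API Server)

- Webhook: authorizes users by calling an external REST service (legacy; the policy cannot be managed from inside the cluster)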

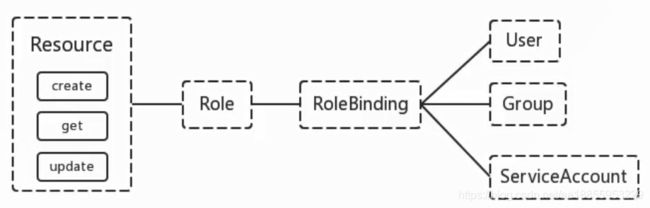

- RBAC (Role-Based Access Control): role-based access control, the current default (the recommended choice)

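- RBAC (Role-Based Access Control) was introduced in Kubernetes 1.5 and is the default in current versions. Compared with other access-control methods, it has the following advantages:

- Complete coverage of both resources and non-resource endpoints in the cluster

- RBAC is implemented entirely by a few API objects which, like other API objects, can be managed with kubectl or the API

- It can be adjusted at runtime without restarting the API Server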

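- RBAC API resource objects

- Note that Kubernetes does not provide user management. So where do the users named by User, Group, and ServiceAccount come from? When Kubernetes components (kubectl, kube-proxy) or other custom users request a certificate from the CA, they must supply a certificate signing request file. In the example below the user name is admin and the group is system:masters: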

{
  "CN": "admin",
  "hosts": [],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "HangZhou",
      "L": "XS",
      "O": "system:masters",
      "OU": "System"
    }
  ]
}

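- The API Server uses the client certificate's CN field as the User and its names.O field as the Group

- When the kubelet authenticates via TLS Bootstrapping, the API Server can verify its token with either Bootstrap Tokens or a token authentication file. Either way, Kubernetes binds a default User and Group to the token

- When a Pod authenticates with a ServiceAccount, the JWT in the service-account-token carries the User information

- With the user information in hand, creating a pair of role / role binding (or cluster role / cluster role binding) objects completes the permission binding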
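The CN/O rule can be shown against the request file above. The sketch reads the same fields from the CSR JSON that the API Server later reads from the signed certificate's subject. The helper name is hypothetical. It also shows why `admin` ends up with full access: by default the group `system:masters` is bound to the `cluster-admin` ClusterRole.

```python
import json

CSR = """{"CN": "admin", "hosts": [], "key": {"algo": "rsa", "size": 2048},
 "names": [{"C": "CN", "ST": "HangZhou", "L": "XS", "O": "system:masters", "OU": "System"}]}"""


def identity_from_csr(csr_json: str) -> dict:
    """User = CN; groups = every O entry under names."""
    csr = json.loads(csr_json)
    groups = [entry["O"] for entry in csr.get("names", []) if "O" in entry]
    return {"user": csr["CN"], "groups": groups}


if __name__ == "__main__":
    print(identity_from_csr(CSR))
```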

3.3 Role and ClusterRole, RoleBinding and ClusterRoleBinding

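- In the RBAC API, a Role represents a set of permission rules. Permissions are purely additive: there is no resource that starts out with many permissions which RBAC then takes away. A Role is defined within one namespace; to grant permissions across namespaces, create a ClusterRole instead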

kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  namespace: default
  name: pod-reader
rules:
- apiGroups: [""] # "" indicates the core API group
  resources: ["pods"]
  verbs: ["get", "watch", "list"] # a user granted this Role can get, watch, and list pods in the default namespace

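- A ClusterRole has the same permission-control capabilities as a Role, except that it is cluster-scoped. A ClusterRole can be used for:

- Cluster-scoped resources (for example, access to nodes)

- Non-resource endpoints (for example, access to /healthz)

- Namespaced resources across all namespaces (for example, pods)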

kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  # "namespace" omitted since ClusterRoles are not namespaced
  name: secret-reader
rules:
- apiGroups: [""]
  resources: ["secrets"]
  verbs: ["get", "watch", "list"]

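- A RoleBinding grants the permissions defined in a role to users or groups of users. It holds a list of subjects, which can be of different kinds (users, groups, or service accounts), together with a reference to the Role being bound. A RoleBinding grants permissions within one namespace, whereas a ClusterRoleBinding grants them cluster-wide

- A RoleBinding can bind either a Role or a ClusterRole, but a ClusterRoleBinding can only bind a ClusterRole

- The following grants the pod-reader Role in the default namespace to user jane; from then on jane has pod-reader permissions in the default namespace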

kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: read-pods
  namespace: default
subjects:
- kind: User
  name: jane
  apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: Role
  name: pod-reader
  apiGroup: rbac.authorization.k8s.io

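- A RoleBinding can also reference a ClusterRole to grant permissions to users, groups, or ServiceAccounts within the RoleBinding's own namespace. This lets cluster administrators define common ClusterRoles once for the whole cluster and then reference them with RoleBindings in different namespaces

- For example, the RoleBinding below references a ClusterRole that grants access to secrets across the whole cluster, yet its subject dave can only access secrets in the development namespace (because the RoleBinding is defined in the development namespace)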

# This role binding allows "dave" to read secrets in the "development" namespace.
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: read-secrets
  namespace: development # This only grants permissions within the "development" namespace
subjects:
- kind: User
  name: dave
  apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: ClusterRole
  name: secret-reader
  apiGroup: rbac.authorization.k8s.io

- A ClusterRoleBinding grants permissions on resources in every namespace of the cluster. The ClusterRoleBinding below lets every user in the manager group read secrets in all namespaces

# This cluster role binding allows anyone in the "manager" group to read secrets in any namespace
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: read-secrets-global
subjects:
- kind: Group
  name: manager
  apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: ClusterRole
  name: secret-reader
  apiGroup: rbac.authorization.k8s.io
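The four examples above can be checked against a tiny model of the RBAC decision. A request is allowed if any binding whose subjects include the user (or one of the user's groups) points at a rule covering the API group, resource and verb. A RoleBinding only counts inside its own namespace, even when it references a ClusterRole. This is a simplified sketch with hypothetical names: ServiceAccount subjects, resourceNames and non-resource URLs are omitted.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass
class Rule:
    api_groups: List[str]
    resources: List[str]
    verbs: List[str]

    def allows(self, api_group: str, resource: str, verb: str) -> bool:
        def hit(items: List[str], value: str) -> bool:
            return "*" in items or value in items
        return (hit(self.api_groups, api_group) and hit(self.resources, resource)
                and hit(self.verbs, verb))


@dataclass
class Binding:
    role_kind: str                    # "Role" or "ClusterRole"
    role_name: str
    subjects: List[Tuple[str, str]]   # ("User", name) or ("Group", name)
    namespace: Optional[str] = None   # set for a RoleBinding, None for a ClusterRoleBinding


def authorize(user: str, groups: List[str], verb: str, resource: str,
              namespace: Optional[str], roles: Dict[Tuple[str, str], List[Rule]],
              cluster_roles: Dict[str, List[Rule]], bindings: List[Binding],
              api_group: str = "") -> bool:
    for b in bindings:
        if not any((kind == "User" and name == user) or (kind == "Group" and name in groups)
                   for kind, name in b.subjects):
            continue
        if b.namespace is not None and b.namespace != namespace:
            continue  # a RoleBinding only grants access inside its own namespace
        if b.role_kind == "Role":
            rules = roles.get((b.namespace, b.role_name), [])
        else:
            rules = cluster_roles.get(b.role_name, [])
        if any(rule.allows(api_group, resource, verb) for rule in rules):
            return True  # permissions only add up, so a single grant is enough
    return False  # RBAC has no deny rules: anything not granted is refused
```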

3.4 Resources and Subjects

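- In a Kubernetes cluster, resources are usually referred to by name strings, which appear in API URLs. Some resources also have subresources; for example, log is a subresource of pods. A sample API URL: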

GET /api/v1/namespaces/{namespace}/pods/{name}/log

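- To control access to such subresources in the RBAC model, join the resource and subresource with a / separator. Here is a sample Role that grants access to pods and their logs: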

kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  namespace: default
  name: pod-and-pod-logs-reader
rules:
- apiGroups: [""]
  resources: ["pods", "pods/log"]
  verbs: ["get", "list"]
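The mapping from the sample URL to the `pods/log` rule entry can be sketched as a small path parser. The sketch assumes core-group paths only (`/api/v1/...`) and uses hypothetical function names. It shows that a request for a pod's log is checked against `pods/log`, not `pods`, so granting `pods` alone does not expose logs.

```python
from typing import Optional, Tuple


def parse_core_path(path: str) -> Tuple[Optional[str], str, Optional[str], Optional[str]]:
    """Split a core-group URL into (namespace, resource, name, subresource)."""
    parts = path.strip("/").split("/")
    if parts[:2] != ["api", "v1"] or len(parts) < 3:
        raise ValueError("only /api/v1/... paths are handled in this sketch")
    rest = parts[2:]
    namespace = None
    if rest[0] == "namespaces" and len(rest) >= 3:
        namespace, rest = rest[1], rest[2:]
    resource = rest[0]
    name = rest[1] if len(rest) > 1 else None
    subresource = rest[2] if len(rest) > 2 else None
    return namespace, resource, name, subresource


def rbac_resource(path: str) -> str:
    """The string an RBAC rule must list to cover this request, e.g. "pods/log"."""
    _, resource, _, subresource = parse_core_path(path)
    return f"{resource}/{subresource}" if subresource else resource


RULE_RESOURCES = ["pods", "pods/log"]  # from the pod-and-pod-logs-reader Role
```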

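- RoleBinding and ClusterRoleBinding bind a Role to Subjects, which can be groups, users, or service accounts

- A User in Subjects is a string. It can be a plain name such as "alice", an email-style address, or even a string of numeric IDs. The system: prefix is reserved for the system, so cluster administrators should make sure ordinary users do not use it.

- Groups are written the same way as Users: a string with no particular format requirement. The system: prefix is likewise reserved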

3.5 Practice: Creating a User Who Can Only Manage the dev Namespace

# First create a Linux user
useradd devuser
passwd devuser
mkdir -p /usr/local/install-k8s/cert
cd /usr/local/install-k8s/cert
mkdir devuser && cd devuser
# Create the certificate signing request
vim devuser-csr.json
{
  "CN": "devuser",
  "hosts": [],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "BeiJing",
      "L": "BeiJing",
      "O": "k8s",
      "OU": "System"
    }
  ]
}

# Download the certificate generation tools
wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64
wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64
wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64
chmod a+x *
mv cfssl_linux-amd64 /usr/local/bin/cfssl
mv cfssljson_linux-amd64 /usr/local/bin/cfssljson
mv cfssl-certinfo_linux-amd64 /usr/local/bin/cfssl-certinfo
cd /etc/kubernetes/pki/
# Sign the request with the cluster CA; this produces the certificate (devuser.pem) and private key (devuser-key.pem)
cfssl gencert -ca=ca.crt -ca-key=ca.key -profile=kubernetes /usr/local/install-k8s/cert/devuser/devuser-csr.json | cfssljson -bare devuser

# Set the cluster parameters
cd /usr/local/install-k8s/cert/devuser
export KUBE_APISERVER="https://192.168.1.10:6443"
kubectl config set-cluster kubernetes \
  --certificate-authority=/etc/kubernetes/pki/ca.crt \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=devuser.kubeconfig
# Set the client authentication parameters
kubectl config set-credentials devuser \
  --client-certificate=/etc/kubernetes/pki/devuser.pem \
  --client-key=/etc/kubernetes/pki/devuser-key.pem \
  --embed-certs=true \
  --kubeconfig=devuser.kubeconfig
# Set the context parameters, which tie the context to the dev namespace
# Create the dev namespace
kubectl create namespace dev
kubectl config set-context kubernetes \
  --cluster=kubernetes \
  --user=devuser \
  --namespace=dev \
  --kubeconfig=devuser.kubeconfig

# Create a RoleBinding in the dev namespace that references the admin ClusterRole, giving devuser admin rights within dev
kubectl create rolebinding devuser-admin-binding --clusterrole=admin --user=devuser --namespace=dev
mkdir -p /home/devuser/.kube/
cp devuser.kubeconfig /home/devuser/.kube/
chown devuser:devuser /home/devuser/.kube/devuser.kubeconfig
cd /home/devuser/.kube/ && mv devuser.kubeconfig config
# Switch to the context so kubectl reads our configuration
kubectl config use-context kubernetes --kubeconfig=config
# Then test the permissions as devuser
kubectl get pod
kubectl run nginx --image=hub.qnhyn.com/library/myapp:v1
# Expected to be refused (Forbidden): devuser has no permissions outside dev
kubectl get pod --all-namespaces

3.6 Security Mechanisms: Admission Control

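- Admission control (using the recommended default set of admission controllers is advised) is a collection of API Server plugins. Adding different plugins implements additional admission rules. Some of the API Server's core features even depend on Admission Controllers, for example ServiceAccount

- The official documentation has a list of recommended admission controllers for different versions:

NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,DefaultTolerationSeconds,MutatingAdmissionWebhook,ValidatingAdmissionWebhook,ResourceQuota

- What a few of these plugins do:

- NamespaceLifecycle: prevents creating objects in namespaces that do not exist and prevents deleting the system's preset namespaces; when a namespace is deleted, all of its resource objects are deleted with it.

- LimitRanger: ensures that requested resources do not exceed the limits of the LimitRange of the Namespace the resource belongs to.

- ServiceAccount: automatically adds a ServiceAccount to Pods.

- ResourceQuota: ensures that requested resources do not exceed the resource's ResourceQuota limits.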
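Admission control works as a chain: each plugin may modify the request (mutating) or reject it (validating), and any single rejection fails the whole request. The sketch below models three of the plugins listed above on a simplified request dictionary. The plugin logic is reduced to the one behaviour described in the list, and every name is hypothetical. The real API Server runs all mutating plugins before the validating ones.

```python
from typing import Callable, Dict, List, Set

Request = Dict  # simplified create request: {"namespace": ..., "kind": ..., "spec": {...}}
Plugin = Callable[[Request], Request]


class Rejected(Exception):
    pass


def namespace_lifecycle(existing: Set[str]) -> Plugin:
    def plugin(req: Request) -> Request:
        # Refuse to create objects in a namespace that does not exist.
        if req["namespace"] not in existing:
            raise Rejected(f"namespace {req['namespace']} not found")
        return req
    return plugin


def service_account(req: Request) -> Request:
    # Mutating: fill in the default ServiceAccount when a Pod does not name one.
    if req["kind"] == "Pod":
        req["spec"].setdefault("serviceAccountName", "default")
    return req


def resource_quota(limits: Dict[str, int], used: Dict[str, int]) -> Plugin:
    def plugin(req: Request) -> Request:
        # Refuse requests that would push usage past the namespace quota.
        for res, amount in req["spec"].get("requests", {}).items():
            if used.get(res, 0) + amount > limits.get(res, float("inf")):
                raise Rejected(f"exceeded quota for {res}")
        return req
    return plugin


def admit(req: Request, chain: List[Plugin]) -> Request:
    """Run the plugins in order; any rejection fails the whole request."""
    for plugin in chain:
        req = plugin(req)
    return req
```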