Scraping Images from 中国天气网 (weather.com.cn) with Python Multithreading

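Contents

- Implementing multithreading in Python
- Foreground and background threads in Python
- Waiting for threads
- Multithreading and shared resources
- Scraping images from 中国天气网 with multiple threads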

Implementing multithreading in Python

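Running threads is similar to running several different programs at the same time. Multithreading has the following advantages and characteristics:

1. Long-running tasks in a program can be moved to a background thread.

2. The program may run faster. In CPython the gain comes mainly from I/O-bound work, because the global interpreter lock lets only one thread execute Python bytecode at a time.

3. Threads are especially useful for tasks that spend time waiting, such as user input, file reads and writes, and sending or receiving data over the network.

4. Each thread has its own set of CPU register values, called the thread's context. The context reflects the state of the CPU registers when the thread last ran.

5. A thread can sleep temporarily while other threads are running. This is called yielding.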
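To make point 3 concrete, here is a minimal sketch that compares running three waiting tasks one after another with running them in threads. It assumes that `time.sleep` is a fair stand-in for a blocking operation such as a network request; the names `wait_task`, `run_sequential` and `run_threaded` are illustrative only.

```python
import threading
import time

def wait_task(seconds):
    # time.sleep stands in for a blocking operation such as a network request
    time.sleep(seconds)

def run_sequential(delays):
    # Run the waits one after another and return the elapsed time
    start = time.perf_counter()
    for d in delays:
        wait_task(d)
    return time.perf_counter() - start

def run_threaded(delays):
    # Run each wait in its own thread and return the elapsed time
    start = time.perf_counter()
    workers = [threading.Thread(target=wait_task, args=(d,)) for d in delays]
    for w in workers:
        w.start()
    for w in workers:
        w.join()
    return time.perf_counter() - start

delays = [0.2, 0.2, 0.2]
sequential = run_sequential(delays)
threaded = run_threaded(delays)
print(f"sequential: {sequential:.2f}s, threaded: {threaded:.2f}s")
```

The sequential run takes roughly the sum of the delays, while the threaded run takes roughly the longest single delay, because the waits overlap.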

Foreground and background threads in Python

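To start a thread in Python, create an object of the Thread class from the threading module. In simplified form the constructor is: t = Thread(target=None, args=())

target is the function the thread runs, and args is a tuple or list of arguments passed to target. Calling t.start() starts the thread.

1. A foreground (non-daemon) thread does not end when the main thread ends. The process keeps running until every foreground thread has finished.

2. A background (daemon) thread is terminated once the main thread and all other foreground threads have ended.

3. r.daemon = False makes thread r a foreground thread, and r.daemon = True makes it a background (daemon) thread. The older r.setDaemon(...) method has the same effect but is deprecated since Python 3.10.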
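As a small illustration of how `target` and `args` fit together, the sketch below passes two arguments to a thread function. The function `greet` and the `results` list are hypothetical names chosen for this example; the `daemon` keyword of the constructor is an alternative to assigning `t.daemon` afterwards.

```python
import threading

results = []

def greet(name, times):
    # Runs in the worker thread; both parameters are supplied through args
    for _ in range(times):
        results.append("hello " + name)

# args must be a tuple (or list) with one entry per parameter of target
t = threading.Thread(target=greet, args=("weather", 3), daemon=False)
t.start()
t.join()  # wait for the thread so that results is complete before reading it
print(results)
```

For a function with a single parameter, remember the trailing comma: `args=(value,)`.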

# Start a child thread as a foreground (non-daemon) thread.
# It does not end when the main thread ends.
import threading
import time
import random

def reading():
    for i in range(5):
        print('reading', i)
        time.sleep(random.randint(1, 2))

r = threading.Thread(target=reading)
r.daemon = False  # foreground thread: the process waits for it to finish
r.start()
print('The End')

Output (the two threads print concurrently, so the first lines are interleaved):

readingThe End

0

reading 1

reading 2

reading 3

reading 4

Process finished with exit code 0

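# A child thread that starts a child thread of its own.
# The main thread starts t as a foreground (non-daemon) thread,
# so t does not end when the main thread ends.
# t starts r as a background (daemon) thread, so r is terminated
# once the main thread and t have both ended.
import threading
import time
import random

def reading():
    for i in range(5):
        print('reading', i)
        time.sleep(random.randint(1, 3))

def test():
    r = threading.Thread(target=reading)
    r.daemon = True  # background (daemon) thread
    r.start()
    print("test End")

t = threading.Thread(target=test)
t.daemon = False  # foreground (non-daemon) thread
t.start()
print("End")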

Output (r is a daemon thread, so it is cut off once the main thread and t have ended):

End

readingtest End

Process finished with exit code 0

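Waiting for threads

When one thread must wait for another thread to finish before continuing, use the join method: thread_object.join()

The thread that executes this statement stops running until the thread represented by thread_object has finished, and only then continues. In other words, the statement blocks and waits.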
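One practical consequence is that a result produced by a worker thread is only safe to read after `join()` has returned. A minimal sketch, in which the `result` dictionary and the `slow_square` function are hypothetical names used for illustration:

```python
import threading
import time

result = {}

def slow_square(n):
    time.sleep(0.2)              # pretend the work takes a while
    result["value"] = n * n      # store the result for the main thread

worker = threading.Thread(target=slow_square, args=(7,))
worker.start()
worker.join()                    # blocks here until slow_square has returned
print(worker.is_alive())         # False: the thread has finished
print(result["value"])           # 49
```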

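# The main thread starts a child thread and waits for it to finish
# before continuing (the main thread is blocked).
import threading
import time
import random

def reading():
    for i in range(5):
        print('reading:', i)
        time.sleep(random.randint(1, 2))

t = threading.Thread(target=reading)
t.daemon = False  # foreground thread: does not end when the main thread ends
t.start()
t.join()  # block the main thread until t has finished
print('The End')  # the main thread ends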

Output:

reading: 0

reading: 1

reading: 2

reading: 3

reading: 4

The End

Process finished with exit code 0

# After the main thread starts t, t.join() waits for t to finish.
# t starts r, and r.join() blocks t until r has finished.
# Then t prints 'Test End' and ends, t.join() returns,
# and the main thread prints 'The End'.
import threading
import time

def reading():
    for i in range(5):
        print('reading:', i)
        time.sleep(1)

def test():
    r = threading.Thread(target=reading)
    r.daemon = True
    r.start()
    r.join()  # block t until r has finished
    print('Test End')

t = threading.Thread(target=test)
t.daemon = False
t.start()
t.join()  # wait for t to finish
print('The End')

Output:

reading: 0

reading: 1

reading: 2

reading: 3

reading: 4

Test End

The End

Process finished with exit code 0

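Multithreading and shared resources

Multithreaded programs commonly run into several threads competing to read and modify a shared resource at the same time, so the threads need to be coordinated. The usual approach is a thread lock. The threading.RLock class creates a lock object:

lock = threading.RLock()

The lock object has two important methods, acquire() and release(). Calling lock.acquire() tries to take the lock. If another thread has already called acquire() and has not yet called release(), lock.acquire() blocks the current thread until that thread releases the lock. Once the lock is free, lock.acquire() takes it, the blocking ends, and the thread continues. When the thread has finished its work it must call lock.release(), otherwise the other threads will never get the lock.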
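The acquire/release pairing can also be written with a `with` statement, which releases the lock even if the protected code raises an exception. The sketch below is illustrative only: the `counter` variable and the `add_many` function are hypothetical, and four threads each increment the shared counter 10000 times.

```python
import threading

lock = threading.RLock()
counter = 0

def add_many(n):
    global counter
    for _ in range(n):
        with lock:        # acquire() on entry, release() on exit
            counter += 1  # only one thread at a time executes this line

workers = [threading.Thread(target=add_many, args=(10000,)) for _ in range(4)]
for w in workers:
    w.start()
for w in workers:
    w.join()
print(counter)  # 40000
```

Because every increment happens while the lock is held, no update is lost and the final value is exactly 4 x 10000.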

# Thread A sorts the global list words in ascending order,
# and thread D sorts the same list in descending order.
# Without the lock the two sorts would interleave and neither result
# would be correct. With the lock, each pass is fully ascending or
# fully descending.
import threading
import time

lock = threading.RLock()
words = ['a', 'd', 'c', 'd', 'd', 'c', 'a', 'e']

def increase():
    global words
    for count in range(5):
        lock.acquire()  # request the lock; blocks while another thread holds it
        print("A acquired")
        for i in range(len(words)):
            for j in range(i + 1, len(words)):
                if words[j] < words[i]:
                    words[i], words[j] = words[j], words[i]
        print('A', words)
        time.sleep(2)
        lock.release()

def decrease():
    global words
    for count in range(5):
        lock.acquire()
        print('D acquired')
        for i in range(len(words)):
            for j in range(i + 1, len(words)):
                if words[j] > words[i]:
                    words[i], words[j] = words[j], words[i]
        print('D', words)
        time.sleep(2)
        lock.release()

A = threading.Thread(target=increase)
A.daemon = False
A.start()
D = threading.Thread(target=decrease)
D.daemon = False
D.start()
print('The End')

Output:

A acquired

A ['a', 'a', 'c', 'c', 'd', 'd', 'd', 'e']

The End

D acquired

D ['e', 'd', 'd', 'd', 'c', 'c', 'a', 'a']

A acquired

A ['a', 'a', 'c', 'c', 'd', 'd', 'd', 'e']

D acquired

D ['e', 'd', 'd', 'd', 'c', 'c', 'a', 'a']

A acquired

A ['a', 'a', 'c', 'c', 'd', 'd', 'd', 'e']

A acquired

A ['a', 'a', 'c', 'c', 'd', 'd', 'd', 'e']

D acquired

D ['e', 'd', 'd', 'd', 'c', 'c', 'a', 'a']

A acquired

A ['a', 'a', 'c', 'c', 'd', 'd', 'd', 'e']

D acquired

D ['e', 'd', 'd', 'd', 'c', 'c', 'a', 'a']

D acquired

D ['e', 'd', 'd', 'd', 'c', 'c', 'a', 'a']

Process finished with exit code 0

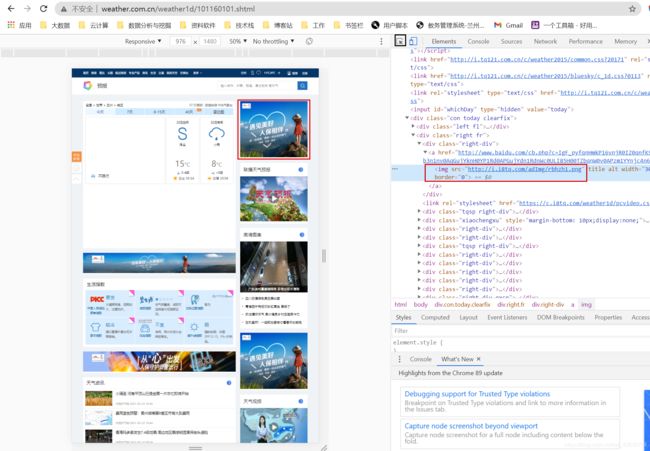

Scraping images from 中国天气网 with multiple threads

import os
import threading
import urllib.parse
import urllib.request

from bs4 import BeautifulSoup
from bs4 import UnicodeDammit

def download(url, count):
    # Download one image and save it as images/<count><ext>
    try:
        # Keep the extension only when the URL ends in a dot plus three characters
        if url[len(url) - 4] == ".":
            ext = url[len(url) - 4:]
        else:
            ext = ""
        req = urllib.request.Request(url, headers=headers)
        data = urllib.request.urlopen(req, timeout=100)
        data = data.read()
        with open(os.path.join("images", str(count) + ext), 'wb') as fobj:
            fobj.write(data)
        print("downloaded:" + str(count) + ext)
    except Exception as err:
        print(err)

def imagesSpider(start_url):
    global threads
    global count
    urls = []
    req = urllib.request.Request(start_url, headers=headers)
    data = urllib.request.urlopen(req)
    data = data.read()
    # Detect the page encoding, trying utf-8 and gbk first
    dammit = UnicodeDammit(data, ['utf-8', 'gbk'])
    data = dammit.unicode_markup
    soup = BeautifulSoup(data, 'lxml')
    images = soup.select('img')
    for img in images:
        try:
            src = img['src']
            url = urllib.parse.urljoin(start_url, src)
            if url not in urls:
                urls.append(url)  # remember the URL so each image is downloaded once
                print(url)
                count = count + 1
                # One foreground thread per image
                T = threading.Thread(target=download, args=(url, count))
                T.daemon = False
                T.start()
                threads.append(T)
        except Exception as err:
            print(err)

headers = {"User-Agent": "Mozilla/5.0 "
           "(Linux; Android 6.0; Nexus 5 Build/MRA58N) AppleWebKit/537.36 "
           "(KHTML, like Gecko) Chrome/89.0.4389.90 Mobile Safari/537.36"}
start_url = "http://www.weather.com.cn/weather1d/101160101.shtml"
count = 0
threads = []
os.makedirs("images", exist_ok=True)  # make sure the output directory exists
imagesSpider(start_url)
# Wait for every download thread before reporting the end
for i in threads:
    i.join()
print('The End')
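The download function keeps a file extension only when the URL ends in a dot followed by exactly three characters, so a URL that ends in .jpeg or carries a query string is saved without one. A minimal sketch of a more tolerant helper, using only the standard library, might look like this. The name `guess_extension` and the sample URLs are hypothetical and not part of the script above.

```python
import os
from urllib.parse import urlparse

def guess_extension(url):
    # Look only at the path part of the URL, ignoring any query string
    path = urlparse(url).path
    # os.path.splitext returns (root, ext); ext is "" when there is no extension
    return os.path.splitext(path)[1]

print(guess_extension("http://example.com/img/sun.jpeg?size=2"))
print(guess_extension("http://example.com/img/cloud.png"))
print(repr(guess_extension("http://example.com/img/icon")))
```

Under that assumption, `ext = guess_extension(url)` could stand in for the if/else at the top of download.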