Paper Reading - Bidirectional LSTM-CRF Models for Sequence Tagging

Bidirectional LSTM-CRF Models for Sequence Tagging

Z. H. Huang, W. Xu, K. Yu, Bidirectional LSTM-CRF Models for Sequence Tagging, (2015)

Abstract

The paper proposes a family of sequence tagging models based on long short-term memory (LSTM) networks: LSTM, bidirectional LSTM (BI-LSTM), LSTM with a conditional random field layer (LSTM-CRF), and bidirectional LSTM with a conditional random field layer (BI-LSTM-CRF).

BI-LSTM-CRF model: the BI-LSTM component makes efficient use of both past and future input features; the CRF layer makes use of sentence-level tag information.

1 Introduction

Sequence tagging includes part-of-speech (POS) tagging, chunking, and named entity recognition (NER).

Most existing sequence tagging models are linear statistical models, such as hidden Markov models (HMM), maximum entropy Markov models (MEMMs), and conditional random fields (CRF).

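The paper presents four sequence tagging models: LSTM, BI-LSTM, LSTM-CRF, and BI-LSTM-CRF.

- BI-LSTM uses both past and future input features; the CRF layer uses sentence-level tag information.
- BI-LSTM-CRF is robust and has less dependence on word embeddings.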
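The idea that a bidirectional model sees both past and future input features can be sketched in a few lines of NumPy. This is only an illustration under stated assumptions: a plain tanh recurrence stands in for the LSTM cell, and the names `run_rnn` and `bidirectional_states`, the toy sizes, and the random weights are all hypothetical rather than taken from the paper.

```python
import numpy as np

def run_rnn(xs, U, W):
    """Plain tanh recurrence h_t = tanh(U x_t + W h_{t-1}); a stand-in for an LSTM."""
    h = np.zeros(W.shape[0])
    hs = []
    for x in xs:
        h = np.tanh(U @ x + W @ h)
        hs.append(h)
    return np.array(hs)

def bidirectional_states(xs, fwd, bwd):
    """Concatenate a left-to-right pass and a right-to-left pass per time step."""
    h_fwd = run_rnn(xs, *fwd)              # h_fwd[t] depends on xs[0..t]
    h_bwd = run_rnn(xs[::-1], *bwd)[::-1]  # h_bwd[t] depends on xs[t..T-1]
    return np.concatenate([h_fwd, h_bwd], axis=1)

# Toy sizes and random weights, assumed purely for illustration.
rng = np.random.default_rng(0)
n_feat, n_hid, T = 4, 3, 5
fwd = (rng.normal(scale=0.5, size=(n_hid, n_feat)),
       rng.normal(scale=0.5, size=(n_hid, n_hid)))
bwd = (rng.normal(scale=0.5, size=(n_hid, n_feat)),
       rng.normal(scale=0.5, size=(n_hid, n_hid)))
xs = rng.normal(size=(T, n_feat))

states = bidirectional_states(xs, fwd, bwd)  # shape (T, 2 * n_hid)
```

Changing only the last input `xs[-1]` leaves the forward half of `states[0]` untouched but alters its backward half, which is how the combined state at each position reflects both directions.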

2 Models

LSTM, BI-LSTM, LSTM-CRF, BI-LSTM-CRF

2.1 LSTM Networks

Recurrent neural networks (RNN) maintain a memory based on history information, which lets the model predict the current output conditioned on long-distance features. The network structure consists of an input layer $\mathbf{x}$, a hidden layer $\mathbf{h}$, and an output layer $\mathbf{y}$.

- The input layer represents the features at time step $t$ and has the same dimensionality as the feature size.
- The output layer represents a probability distribution over labels at time step $t$ and has the same dimensionality as the number of labels.

An RNN introduces a connection between the previous hidden state and the current hidden state, parameterized by the recurrent layer weights. The recurrent layer is designed to store history information.

$$\mathbf{h}_{t} = f(\mathbf{U} \mathbf{x}_{t} + \mathbf{W} \mathbf{h}_{t-1}) \tag{1}$$

$$\mathbf{y}_{t} = g(\mathbf{V} \mathbf{h}_{t}) \tag{2}$$

Here $\mathbf{U}$, $\mathbf{W}$, and $\mathbf{V}$ are the connection weights (learned during training), and $f(z)$ and $g(z_{m})$ are the sigmoid and softmax activation functions, respectively.

$$f(z) = \frac{1}{1 + e^{-z}} \tag{3}$$

$$g(z_{m}) = \frac{e^{z_{m}}}{\sum_{k} e^{z_{k}}} \tag{4}$$
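Equations (1) to (4) can be sketched as a short NumPy forward pass. This is a minimal illustration, not the paper's implementation: the function name `rnn_forward`, the zero initial hidden state, the toy dimensions, and the random weights are assumptions, and the softmax subtracts the maximum before exponentiating (mathematically equivalent to equation (4), but safer against overflow).

```python
import numpy as np

def sigmoid(z):
    """Equation (3), applied element-wise."""
    return 1.0 / (1.0 + np.exp(-z))

def softmax(z):
    """Equation (4); subtracting max(z) does not change the result."""
    e = np.exp(z - np.max(z))
    return e / e.sum()

def rnn_forward(xs, U, W, V, h0=None):
    """Apply equations (1) and (2) at every time step.

    xs has shape (T, n_features). Returns (hs, ys) with shapes
    (T, n_hidden) and (T, n_labels).
    """
    # Assumption: the hidden state starts at zero unless h0 is given.
    h = np.zeros(W.shape[0]) if h0 is None else h0
    hs, ys = [], []
    for x in xs:
        h = sigmoid(U @ x + W @ h)  # equation (1)
        y = softmax(V @ h)          # equation (2)
        hs.append(h)
        ys.append(y)
    return np.array(hs), np.array(ys)

# Toy sizes (assumed): 4 input features, 3 hidden units, 5 labels, 6 time steps.
rng = np.random.default_rng(0)
U = rng.normal(scale=0.1, size=(3, 4))
W = rng.normal(scale=0.1, size=(3, 3))
V = rng.normal(scale=0.1, size=(5, 3))
xs = rng.normal(size=(6, 4))

hs, ys = rnn_forward(xs, U, W, V)
```

Each row of `ys` is a probability distribution over the labels for one time step, matching the description of the output layer.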

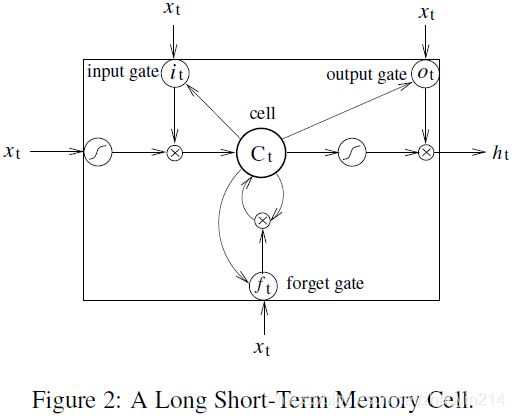

Long Short-Term Memory (LSTM) networks replace the hidden layer updates with purpose-built memory cells, which helps them capture long-range dependencies in the data.

■ Figure 2 is structurally inaccurate; for example, $\mathbf{h}_{t-1}$ is not fed back into the inputs of the gates. ■

LSTM memory cell:

i t = σ ( W x i x t + W h i h t − 1 + W c i c t − 1 + b i ) f t = σ ( W x f x t + W h f h t − 1 + W c f c t − 1 + b f ) c t = f t c t − 1 + i t tanh ( W x c x t + W h c h t − 1 + b f ) o t = σ ( W x o x t + W h o h t − 1