Building a ResNet network in PyTorch for garbage classification

This project builds a ResNet34 network by following someone else's video tutorial (video reference).

The task is garbage classification in an open-set setting: 24 classes are known during training and 16 classes are unknown.
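As a rough illustration of the open-set split, the following sketch draws 24 known classes out of 40 with a fixed seed and treats the remaining 16 as unknown. The helper name `split_classes` and the seed value are assumptions made for this example; the project's own split is done in `GARBAGE40_Dataset.sampler()` in Mydataset.py.

```python
import random


def split_classes(num_total=40, num_known=24, seed=42):
    """Return (known, unknown) class-id lists for an open-set experiment."""
    rng = random.Random(seed)  # local RNG, so the global random state is untouched
    known = sorted(rng.sample(range(num_total), num_known))
    known_set = set(known)
    unknown = [c for c in range(num_total) if c not in known_set]
    return known, unknown


known, unknown = split_classes()
print(len(known), len(unknown))  # 24 16
```

Using the same seed always gives the same split, which keeps runs comparable.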

Dataset source

After downloading the dataset, I wrote a custom dataset class, GARBAGE40_Dataset().

The test set contains all 40 garbage categories.
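The annotation files are plain text. Judging from `read_data()` in Mydataset.py, each line holds an image path followed by an integer label. A minimal sketch of that parsing step, where `parse_annotations` and the sample paths are hypothetical:

```python
def parse_annotations(lines):
    """Split 'path label' lines into parallel lists of paths and int labels."""
    paths, labels = [], []
    for line in lines:
        words = line.split()
        if len(words) < 2:  # skip blank or malformed lines
            continue
        paths.append(words[0])
        labels.append(int(words[1]))
    return paths, labels


# Hypothetical sample lines in the assumed "path label" format.
sample = ["./train/img_1.jpg 0\n", "./train/img_2.jpg 17\n", "\n"]
paths, labels = parse_annotations(sample)
print(paths, labels)
```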

Network structure

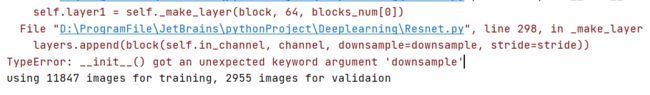

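I mistyped the name of the initialization function __init__(), and it took a long time to track the bug down. Even though it was only a typo, finding it cost me a lot of effort. Along the way I also discovered that I had entered the wrong number of input channels for a convolutional layer.

TypeError: __init__() got an unexpected keyword argument 'downsample'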
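This kind of error can be reproduced without PyTorch. In the sketch below (the class names `Base` and `Block` are made up for illustration), the subclass misspells `__init__` as `__int__`, so Python falls back to the parent's `__init__`, which does not accept `downsample`:

```python
class Base:
    def __init__(self):
        pass


class Block(Base):
    # Typo: "__int__" instead of "__init__". This method is never used as the
    # constructor, so Base.__init__ handles the call instead.
    def __int__(self, in_channel, out_channel, downsample=None):
        self.downsample = downsample


try:
    Block(downsample=None)
    message = ""
except TypeError as err:
    message = str(err)

print(message)
```

The exact wording of the message varies slightly between Python versions, but it names the unexpected keyword, which is a hint to check the spelling of the constructor.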

Code

Resnet.py

'''
python3.7
-*- coding: UTF-8 -*-
@Project -> File :pythonProject -> Resnet
@IDE :PyCharm
@Author :YangShouWei
@USER: 296714435
@Date :2022/2/18 11:48:27
@LastEditor:
'''
import torch
import torch.nn as nn

class BasicBlock(nn.Module):
    expansion = 1  # both conv layers on the main path use the same number of kernels

    def __init__(self, in_channel, out_channel, stride=1, downsample=None):
        """
        Residual block used by the 18-layer and 34-layer networks.
        :param in_channel: number of input feature channels
        :param out_channel: number of output feature channels
        :param stride: stride of the first convolution
        :param downsample: optional module applied to the shortcut branch
        """
        super(BasicBlock, self).__init__()
        self.conv1 = nn.Conv2d(in_channels=in_channel, out_channels=out_channel,
                               kernel_size=3, stride=stride, padding=1, bias=False)
        self.bn1 = nn.BatchNorm2d(out_channel)
        self.relu = nn.ReLU()
        self.conv2 = nn.Conv2d(in_channels=out_channel, out_channels=out_channel,
                               kernel_size=3, stride=1, padding=1, bias=False)
        self.bn2 = nn.BatchNorm2d(out_channel)
        self.downsample = downsample

    def forward(self, x):
        identity = x
        if self.downsample is not None:
            identity = self.downsample(x)  # output of the shortcut branch
        out = self.conv1(x)
        out = self.bn1(out)
        out = self.relu(out)
        out = self.conv2(out)
        out = self.bn2(out)
        out += identity  # add the main-path output and the shortcut output, then apply ReLU
        out = self.relu(out)
        return out

class Bottleneck(nn.Module):
    # The number of kernels changes along the main path: the third conv layer of
    # each block has four times as many kernels as the first and second.
    expansion = 4

    def __init__(self, in_channel, out_channel, stride=1, downsample=None):
        """
        Residual block used by the 50-, 101- and 152-layer networks.
        :param in_channel: number of input feature channels
        :param out_channel: number of channels of the first two conv layers
        :param stride: stride of the 3x3 convolution
        :param downsample: optional module applied to the shortcut branch
        """
        super(Bottleneck, self).__init__()
        self.conv1 = nn.Conv2d(in_channels=in_channel, out_channels=out_channel,
                               kernel_size=1, stride=1, bias=False)  # squeeze channels
        self.bn1 = nn.BatchNorm2d(out_channel)
        # -----------------------------------------------
        self.conv2 = nn.Conv2d(in_channels=out_channel, out_channels=out_channel,
                               kernel_size=3, stride=stride, bias=False, padding=1)
        self.bn2 = nn.BatchNorm2d(out_channel)
        # -----------------------------------------------
        self.conv3 = nn.Conv2d(in_channels=out_channel, out_channels=out_channel * self.expansion,
                               kernel_size=1, stride=1, bias=False)  # unsqueeze channels
        self.bn3 = nn.BatchNorm2d(out_channel * self.expansion)
        self.relu = nn.ReLU(inplace=True)
        self.downsample = downsample

    def forward(self, x):
        identity = x
        if self.downsample is not None:
            identity = self.downsample(x)
        out = self.conv1(x)
        out = self.bn1(out)
        out = self.relu(out)
        out = self.conv2(out)
        out = self.bn2(out)
        out = self.relu(out)
        out = self.conv3(out)
        out = self.bn3(out)
        out += identity
        out = self.relu(out)
        return out

class ResNet(nn.Module):
    def __init__(self, block, blocks_num, num_classes=1000, include_top=True):
        """
        :param block: residual block class (BasicBlock or Bottleneck)
        :param blocks_num: number of residual blocks in each of the four stages
        :param num_classes: number of training classes
        :param include_top: whether to add the pooling and fc head; makes it easier to build more complex networks on top later
        """
        super(ResNet, self).__init__()
        self.include_top = include_top
        self.in_channel = 64
        self.conv1 = nn.Conv2d(3, self.in_channel, kernel_size=7, stride=2,
                               padding=3, bias=False)
        self.bn1 = nn.BatchNorm2d(self.in_channel)
        self.relu = nn.ReLU(inplace=True)
        self.maxpool = nn.MaxPool2d(kernel_size=3, stride=2, padding=1)
        # stacked residual stages
        self.layer1 = self._make_layer(block, 64, blocks_num[0])
        self.layer2 = self._make_layer(block, 128, blocks_num[1], stride=2)
        self.layer3 = self._make_layer(block, 256, blocks_num[2], stride=2)
        self.layer4 = self._make_layer(block, 512, blocks_num[3], stride=2)
        if self.include_top:
            # Adaptive average pooling: the output is (1, 1) whatever the input height and width are.
            self.avgpool = nn.AdaptiveAvgPool2d((1, 1))
            self.fc = nn.Linear(512 * block.expansion, num_classes)  # fully connected layer

        for m in self.modules():  # initialize the conv layers
            if isinstance(m, nn.Conv2d):
                nn.init.kaiming_normal_(m.weight, mode='fan_out', nonlinearity='relu')

    def _make_layer(self, block, channel, block_num, stride=1):
        downsample = None
        if stride != 1 or self.in_channel != channel * block.expansion:
            # The dashed shortcut branch changes the shape of the input tensor.
            downsample = nn.Sequential(
                nn.Conv2d(self.in_channel, channel * block.expansion, kernel_size=1, stride=stride, bias=False),
                nn.BatchNorm2d(channel * block.expansion)
            )
        layers = []
        layers.append(block(self.in_channel, channel, downsample=downsample, stride=stride))
        self.in_channel = channel * block.expansion
        for _ in range(1, block_num):
            # The shortcut branches of the remaining blocks leave the input shape unchanged.
            layers.append(block(self.in_channel, channel))
        return nn.Sequential(*layers)  # *layers unpacks the list into positional arguments

    def forward(self, x):
        x = self.conv1(x)
        x = self.bn1(x)
        x = self.relu(x)
        x = self.maxpool(x)
        x = self.layer1(x)
        x = self.layer2(x)
        x = self.layer3(x)
        x = self.layer4(x)
        if self.include_top:
            x = self.avgpool(x)
            x = torch.flatten(x, 1)
            x = self.fc(x)
        return x

def resnet18(num_classes=1000, include_top=True):
    return ResNet(BasicBlock, [2, 2, 2, 2], num_classes=num_classes, include_top=include_top)

def resnet34(num_classes=1000, include_top=True):
    return ResNet(BasicBlock, [3, 4, 6, 3], num_classes=num_classes, include_top=include_top)

def resnet50(num_classes=1000, include_top=True):
    return ResNet(Bottleneck, [3, 4, 6, 3], num_classes=num_classes, include_top=include_top)

def resnet101(num_classes=1000, include_top=True):
    return ResNet(Bottleneck, [3, 4, 23, 3], num_classes=num_classes, include_top=include_top)

def resnet152(num_classes=1000, include_top=True):
    return ResNet(Bottleneck, [3, 8, 36, 3], num_classes=num_classes, include_top=include_top)
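The numbers in the factory function names follow from the block counts. Counting only the convolutional and fully connected layers on the main path (the usual convention, stated here as an assumption and not something the code enforces), each BasicBlock contributes 2 layers and each Bottleneck 3, plus the stem convolution and the final fully connected layer. The helper `count_layers` is hypothetical:

```python
def count_layers(blocks_num, convs_per_block):
    """Stem conv + convs inside all residual blocks + final fc layer."""
    return 1 + convs_per_block * sum(blocks_num) + 1


# (blocks per stage, conv layers per block) for each factory function.
configs = {
    "resnet18": ([2, 2, 2, 2], 2),
    "resnet34": ([3, 4, 6, 3], 2),
    "resnet50": ([3, 4, 6, 3], 3),
    "resnet101": ([3, 4, 23, 3], 3),
    "resnet152": ([3, 8, 36, 3], 3),
}

for name, (blocks_num, convs) in configs.items():
    print(name, count_layers(blocks_num, convs))
```

The 1x1 convolutions on the downsample shortcuts are left out of this count.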

train.py

'''
python3.7
-*- coding: UTF-8 -*-
@Project -> File :pythonProject -> train
@IDE :PyCharm
@Author :YangShouWei
@USER: 296714435
@Date :2022/2/18 14:27:24
@LastEditor:
'''
import argparse
import os
import sys

import numpy as np
import torch
import torch.nn as nn
import torch.optim as optim
from torchvision import transforms
from tqdm import tqdm

from Resnet import resnet34
from garbage.Mydataset import GARBAGE40_Dataset

def get_args():
    parser = argparse.ArgumentParser(description='PyTorch OSR Example')
    parser.add_argument('--batch_size', type=int, default=64, help='input batch size for training (default: 64)')
    parser.add_argument('--num_classes', type=int, default=40, help='number of classes')
    parser.add_argument('--epochs', type=int, default=5, help='number of epochs to train (default: 5)')
    parser.add_argument('--lr', type=float, default=0.0001, help='learning rate (default: 1e-4)')
    parser.add_argument('--seed', type=int, default=117, help='random seed (default: 117)')
    parser.add_argument('--seed_sampler', type=str, default='777 1234 2731 3925 5432', help='random seed for dataset sampler')
    parser.add_argument('--log_interval', type=int, default=20,
                        help='how many batches to wait before logging training status')
    parser.add_argument('--dataset', type=str, default="GARBAGE40", help='The dataset going to use')
    args = parser.parse_args()
    return args

def control_seed(args):
    # seed
    args.cuda = torch.cuda.is_available()
    torch.manual_seed(args.seed)
    if args.cuda:
        torch.cuda.manual_seed_all(args.seed)
    np.random.seed(args.seed)
    torch.backends.cudnn.deterministic = True

def main():
    device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
    print("using {} device".format(device))
    args = get_args()
    control_seed(args)

    data_transform = {
        "train": transforms.Compose([transforms.Resize(256),
                                     transforms.CenterCrop(256),
                                     transforms.RandomHorizontalFlip(),
                                     transforms.ToTensor(),
                                     transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])]),
        "val": transforms.Compose([transforms.Resize(256),
                                   transforms.CenterCrop(256),
                                   transforms.ToTensor(),
                                   transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])])
    }

    # Use the custom dataset
    data = GARBAGE40_Dataset(transform=data_transform['train'], train_num=args.num_classes)
    train_dataset, val_dataset, test_dataset = data.sampler(42, args)
    train_loader = torch.utils.data.DataLoader(train_dataset, batch_size=args.batch_size, shuffle=True, num_workers=0)
    val_loader = torch.utils.data.DataLoader(val_dataset, batch_size=args.batch_size, shuffle=False, num_workers=0)
    test_loader = torch.utils.data.DataLoader(test_dataset, batch_size=args.batch_size, shuffle=False, num_workers=0)
    train_length = len(train_dataset)
    val_length = len(val_dataset)
    test_length = len(test_dataset)
    print("using {} images for training, {} images for validation".format(train_length, val_length))

    net = resnet34()
    # load pretrained weights
    model_weight_path = "./resnet34-pre.pth"
    # Stop with a message if the weight file does not exist
    assert os.path.exists(model_weight_path), "file {} does not exist.".format(model_weight_path)
    net.load_state_dict(torch.load(model_weight_path, map_location=device))
    # change fc layer structure
    in_channel = net.fc.in_features
    # The fc output size should match the number of training classes (default 40)
    net.fc = nn.Linear(in_channel, args.num_classes)
    net.to(device)

    # define loss function
    loss_function = nn.CrossEntropyLoss()
    # construct an optimizer
    params = [p for p in net.parameters() if p.requires_grad]
    optimizer = optim.Adam(params, args.lr)

    best_acc = 0.0
    best_test_acc = 0.0
    train_steps = len(train_loader)
    for epoch in range(args.epochs):
        # train
        net.train()
        running_loss = 0.0
        train_acc = 0.0
        train_bar = tqdm(train_loader, file=sys.stdout)
        for step, (images, labels) in enumerate(train_bar):
            images = images.to(device)
            labels = labels.to(device)
            optimizer.zero_grad()
            logits = net(images)
            predict_y = torch.max(logits, dim=1)[1]
            train_acc += torch.eq(predict_y, labels).sum().item()
            loss = loss_function(logits, labels)
            loss.backward()
            optimizer.step()
            running_loss += loss.item()
        train_accurate = train_acc / train_length

        # validate and test
        net.eval()
        val_acc = 0.0
        test_acc = 0.0
        with torch.no_grad():
            val_bar = tqdm(val_loader, file=sys.stdout)
            test_bar = tqdm(test_loader, file=sys.stdout)
            for val_images, val_labels in val_bar:
                outputs = net(val_images.to(device))
                predict_y = torch.max(outputs, dim=1)[1]
                val_acc += torch.eq(predict_y, val_labels.to(device)).sum().item()
            for test_images, test_labels in test_bar:
                outputs_test = net(test_images.to(device))
                pre_test_y = torch.max(outputs_test, dim=1)[1]
                test_acc += torch.eq(pre_test_y, test_labels.to(device)).sum().item()
        val_accurate = val_acc / val_length
        # The test set is empty when all 40 classes are treated as known, so guard the division
        test_accurate = test_acc / test_length if test_length else 0.0
        print('epoch:{},train_loss:{:.3f}, train_accuracy:{:.3f}, val_accuracy:{:.3f},test_accuracy:{:.3f}'.format(
            epoch, running_loss / train_steps, train_accurate, val_accurate, test_accurate))
        if val_accurate > best_acc:
            best_acc = val_accurate
        if test_accurate > best_test_acc:
            best_test_acc = test_accurate  # store the accuracy, not the raw count of correct predictions

    print("Finish Training!")
    print("best test accuracy:{:.3f},best val accuracy:{:.3f}".format(best_test_acc, best_acc))

if __name__ == "__main__":
    main()

Mydataset.py

'''
python3.7
-*- coding: UTF-8 -*-
@Project -> File :gcm-cf-main -> Mydataset
@IDE :PyCharm
@Author :YangShouWei
@USER: 296714435
@Date :2022/1/4 14:20:47
@LastEditor:
'''
import os
import random

import cv2
import numpy as np
import torch
from PIL import Image
from torch.utils.data import Dataset
from torchvision import transforms

learning_rate = 0.0001

# Dataset settings: images and annotation files live in the "garbage" folder
# under the current working directory
root = os.path.join(os.getcwd(), 'garbage')

# Define how an image file is read
def default_loader(path):
    # Drop the first character of the annotated path and resolve the rest under
    # the "garbage" folder (this assumes entries look like "./xxx/yyy.jpg").
    parts = path[1:].lstrip("/").split("/")
    return Image.open(os.path.join(root, *parts)).convert('RGB')

class GARBAGE40_Dataset(Dataset):
    training_file = '/train.txt'
    validating_file = '/validate.txt'
    testing_file = '/test.txt'
    class_label = list(range(40))  # labels 0..39

    def __init__(self, transform=None, target_transform=None, train_num=40):
        # This class only assembles the data. Note that it is a dataset, not a loader/iterator.
        super(GARBAGE40_Dataset, self).__init__()  # initialize the attributes inherited from the parent class
        train_txt = root + self.training_file
        test_txt = root + self.testing_file
        valid_txt = root + self.validating_file
        self.path_imgs = []
        self.num_target = []
        self.test_img = []
        self.test_target = []
        self.read_data(txt=train_txt, train=True)
        self.read_data(txt=valid_txt, train=True)
        self.read_data(txt=test_txt, train=False)
        self.train_data = np.array(self.path_imgs)  # array of image paths
        self.train_target = np.array(self.num_target)
        self.test_data = np.array(self.test_img)
        self.test_target = np.array(self.test_target)
        self.transform = transform
        self.target_transform = target_transform
        self.train_num = train_num  # number of known classes

    def read_data(self, txt, train):
        # Open the annotation file at the given path and read it line by line
        with open(txt, 'r') as fh:
            for line in fh:
                words = line.strip('\n').split()  # split() with no argument splits on whitespace
                if not words:  # skip blank lines
                    continue
                if train:
                    self.path_imgs.append(words[0])
                    self.num_target.append(int(words[1]))
                else:
                    self.test_img.append(words[0])
                    self.test_target.append(int(words[1]))

    # def __len__(self):  # needed for direct use: returns the number of images, not the loader length
    #     return len(self.train_data)
    #
    # def __getitem__(self, index):  # needed for direct use: returns the sample at the given index
    #     img, label = self.train_data[index]
    #     if self.transform is not None:
    #         img = img
    #     return img, label  # whatever is returned here is what each batch yields during training

    def sampler(self, seed, args):
        if seed is not None:
            random.seed(seed)
        seen_classes = random.sample(range(0, 40), self.train_num)
        unseen_classes = [idx for idx in range(40) if idx not in seen_classes]
        print("seen_class{},length:{}".format(sorted(seen_classes), len(seen_classes)))
        print("unseen_class{}".format(sorted(unseen_classes)))
        print("number of training samples:{}, number of test samples:{}".format(len(self.train_target), len(self.test_target)))
        transform_test = transforms.Compose([transforms.Resize((256, 256)),
                                             transforms.ToTensor(),
                                             transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])])
        osr_trainset, osr_valset, osr_testset = construct_ocr_dataset_aug(
            self.train_data, self.train_target, self.test_data, self.test_target,
            seen_classes, unseen_classes, self.transform, transform_test, args)
        return osr_trainset, osr_valset, osr_testset

def construct_ocr_dataset_aug(trainset, traintarget, testset, testtarget, seen_classes, unseen_classes, transform_train, transform_test, args):
    class_label = list(range(40))
    if args.dataset not in ['GARBAGE40']:
        # Without this check the return statement would fail on undefined names
        raise ValueError("unsupported dataset: {}".format(args.dataset))
    osr_trainset = DatasetBuilder(
        [get_class_i(trainset, traintarget, idx) for idx in seen_classes],
        transform_train)
    osr_valset = DatasetBuilder(
        [get_class_i(testset, testtarget, idx) for idx in seen_classes],
        transform_test)
    # osr_valset = DatasetBuilder(
    #     [get_class_i(trainset, traintarget, idx) for idx in seen_classes],
    #     transform_test)
    osr_testset = DatasetBuilder(
        [get_class_i(testset, testtarget, idx) for idx in unseen_classes],
        transform_test)
    # osr_testset = DatasetBuilder(
    #     [get_class_i(testset, testtarget, idx) for idx in class_label],
    #     transform_test)
    return osr_trainset, osr_valset, osr_testset

def get_class_i(x, y, i):
    """
    x: trainset.train_data or testset.test_data
    y: trainset.train_labels or testset.test_labels
    i: class label, a number from 0 to 39
    return: x_i
    """
    # Convert to a numpy array
    y = np.array(y)
    # Locate position of labels that equal to i
    pos_i = np.argwhere(y == i)
    # Convert the result into a 1-D list
    pos_i = list(pos_i[:, 0])
    # Collect all data that match the desired label
    x_i = [x[j] for j in pos_i]
    return x_i

class DatasetBuilder(Dataset):
    def __init__(self, datasets, transformFunc, loader=default_loader):
        """
        datasets: a list of get_class_i outputs, i.e. a list of lists of images for the selected classes
        """
        self.datasets = datasets
        self.lengths = [len(d) for d in self.datasets]
        self.transformFunc = transformFunc
        self.loader = loader

    def __getitem__(self, i):
        class_label, index_wrt_class = self.index_of_which_bin(self.lengths, i)
        img = self.loader(self.datasets[class_label][index_wrt_class])
        if isinstance(img, torch.Tensor):
            img = Image.fromarray(img.numpy(), mode='L')
        elif type(img).__module__ == np.__name__:
            if np.argmin(img.shape) == 0:
                img = img.transpose(1, 2, 0)
            img = Image.fromarray(np.uint8(img))
        elif isinstance(img, tuple):  # ImageNet
            img = cv2.imread(img[0])
            img = Image.fromarray(img)
        img = self.transformFunc(img)
        return img, class_label

    def __len__(self):
        return sum(self.lengths)

    def index_of_which_bin(self, bin_sizes, absolute_index, verbose=False):
        """
        Given the absolute index, returns which bin it falls in and which element of that bin it corresponds to.
        """
        # Which class/bin does i fall into?
        accum = np.add.accumulate(bin_sizes)
        if verbose:
            print("accum =", accum)
        bin_index = len(np.argwhere(accum <= absolute_index))
        if verbose:
            print("class_label =", bin_index)
        # Which element of the selected class/bin does i correspond to?
        index_wrt_class = absolute_index - np.insert(accum, 0, 0)[bin_index]
        if verbose:
            print("index_wrt_class =", index_wrt_class)
        return bin_index, index_wrt_class
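`index_of_which_bin` maps a flat sample index to a (class, position within class) pair by comparing it with the cumulative class sizes. The same lookup can be sketched with the standard library only. Here `locate` is a hypothetical name, and `bisect_right` plays the role of counting the cumulative sums that are `<=` the index:

```python
from bisect import bisect_right
from itertools import accumulate


def locate(bin_sizes, absolute_index):
    """Return (bin_index, index_within_bin) for a flat index over all bins."""
    accum = list(accumulate(bin_sizes))              # e.g. [3, 2, 4] -> [3, 5, 9]
    bin_index = bisect_right(accum, absolute_index)  # number of sums <= index
    start = accum[bin_index - 1] if bin_index > 0 else 0
    return bin_index, absolute_index - start


print(locate([3, 2, 4], 0))  # (0, 0)
print(locate([3, 2, 4], 3))  # (1, 0)
print(locate([3, 2, 4], 8))  # (2, 3)
```

Note that the first element of the pair is the position of the class in the list passed to `DatasetBuilder`, which is what `__getitem__` returns as the label.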

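At first the training accuracy reached 98%, but the validation accuracy was only 32% and the test accuracy only about 5%. I later found that the preprocessing for the validation and test sets resized the images to 64×64. After changing it to 256×256, the results improved noticeably.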
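To see how much the input resolution changes what the network works with, the sketch below traces the spatial size through the stride-2 stages of the ResNet in Resnet.py, using the standard convolution output-size formula. It only illustrates the size difference between 64×64 and 256×256 inputs and is not a measurement of accuracy; `conv_out` and `resnet_feature_size` are names made up for this example.

```python
def conv_out(size, kernel, stride, padding):
    """Output size of a conv/pool layer along one spatial dimension."""
    return (size + 2 * padding - kernel) // stride + 1


def resnet_feature_size(size):
    """Spatial size of the feature map entering the adaptive average pool."""
    size = conv_out(size, kernel=7, stride=2, padding=3)  # conv1
    size = conv_out(size, kernel=3, stride=2, padding=1)  # maxpool
    for _ in range(3):  # layer2, layer3 and layer4 each halve the size once
        size = conv_out(size, kernel=3, stride=2, padding=1)
    return size


print(resnet_feature_size(64))   # 2
print(resnet_feature_size(256))  # 8
```

A network trained on 256×256 crops therefore sees a 2×2 final feature map instead of 8×8 when evaluated on 64×64 images, which is one plausible reason for the gap between training and evaluation accuracy.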