DataLoader worker (pid(s) 13424) exited unexpectedly, "nll_loss_forward_reduce_cuda_kernel_2d_index"

Error summary

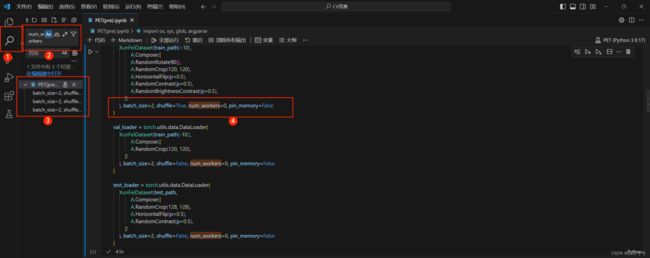

Error 1: `DataLoader worker (pid(s) 13424) exited unexpectedly`

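This error is usually caused by one of the following:

- The dataset contains corrupted or invalid files that the data loader cannot read or parse.
- The data is too large for the available RAM or GPU memory, so the loader cannot allocate enough space to hold or process it; the operating system may then kill a worker process.
- The data loader uses several worker processes to speed up loading, and communication between those processes fails for some reason (for example, the shared memory they use to pass batches runs out), so the loader can no longer synchronize with them or receive data.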

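To fix it, try the following:

- Check the dataset for corrupted or invalid files and remove or repair them. `torchvision.datasets.folder.is_image_file` can filter out files whose extension is not a recognized image extension, but it only looks at the file name; to find files that cannot actually be decoded, try loading each one with the same code your `Dataset` uses.
- Reduce the batch size (`batch_size`), or the scaling factor (`upscale_factor`) if your pipeline has one, so that less data is loaded and processed at a time. This lowers the pressure on RAM and GPU memory and helps avoid out-of-memory failures.
- Reduce the number of worker processes (`num_workers`), or set it to 0 so that data is loaded in the main process, which rules out inter-process communication problems. Loading may become slower, but this avoids the unexpected-exit error, and any exception raised while reading a file is then reported directly with its traceback.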
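The first suggestion can be automated with a small scan. This is a minimal sketch, not a PyTorch or torchvision API: `find_unreadable_files` and its `load_fn` argument are hypothetical names, and `load_fn` is assumed to be whatever function your `Dataset.__getitem__` uses to read a single file.

```python
from typing import Callable, Iterable, List, Tuple


def find_unreadable_files(
    paths: Iterable[str], load_fn: Callable[[str], object]
) -> List[Tuple[str, str]]:
    """Load every file with load_fn and return (path, error) for each failure.

    load_fn is a hypothetical stand-in for the per-file loading code of your
    Dataset (an image reader, a NumPy loader, and so on).
    """
    bad = []
    for path in paths:
        try:
            load_fn(path)
        except Exception as exc:  # any exception marks the file as unreadable
            bad.append((path, f"{type(exc).__name__}: {exc}"))
    return bad
```

Run it once over the list of paths your dataset reads, in the main process; every file it reports can then be removed or repaired before going back to `num_workers > 0`.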

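Error 2: `"nll_loss_forward_reduce_cuda_kernel_2d_index" not implemented for 'Int'`

Approach 1:

- The likely cause is that the target tensor `target` has dtype Int (`torch.int32`) rather than Long (`torch.int64`).
- PyTorch's cross-entropy loss (`nn.CrossEntropyLoss` / `torch.nn.functional.cross_entropy`) requires class-index targets of dtype Long. Internally it computes `log_softmax` followed by the negative log-likelihood loss, which is why the kernel named in the message is `nll_loss_forward`.
- Change `target = target.cuda(non_blocking=True)` to `target = target.long().cuda(non_blocking=True)`, and `target = target.cuda()` to `target = target.long().cuda()`.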
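To see the conversion in isolation, here is a minimal CPU-only sketch; the batch size, number of classes and target values are made up for illustration. It calls `torch.nn.functional.cross_entropy`, which is what `nn.CrossEntropyLoss` uses internally; moving the tensor to the GPU with `.cuda()` afterwards does not change its dtype.

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)

logits = torch.randn(4, 3)  # made-up batch: 4 samples, 3 classes
target_int = torch.tensor([0, 2, 1, 2], dtype=torch.int32)  # an IntTensor, as in the error

# Class-index targets must be int64 (Long); .long() returns a converted copy
# and is equivalent to .to(torch.long).
target = target_int.long()
loss = F.cross_entropy(logits, target)

print(target_int.dtype, "->", target.dtype)  # torch.int32 -> torch.int64
print(loss.item())
```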

```python
import torch

# Assumes train_loader, val_loader, model, criterion and optimizer are already defined.

def train(train_loader, model, criterion, optimizer):
    model.train()
    train_loss = 0.0

    for i, (input, target) in enumerate(train_loader):
        input = input.cuda(non_blocking=True)
        target = target.long().cuda(non_blocking=True)  # changed; originally: target = target.cuda(non_blocking=True)
        # because the cross-entropy loss requires Long targets, not Int

        output = model(input)
        loss = criterion(output, target)

        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

        if i % 20 == 0:
            print(loss.item())

        train_loss += loss.item()

    return train_loss / len(train_loader)


def validate(val_loader, model, criterion):
    model.eval()
    val_acc = 0.0

    with torch.no_grad():
        for i, (input, target) in enumerate(val_loader):
            input = input.cuda()
            target = target.long().cuda()  # changed; originally: target = target.cuda()
            # because the cross-entropy loss requires Long targets, not Int

            # compute output
            output = model(input)
            loss = criterion(output, target)

            val_acc += (output.argmax(1) == target).sum().item()

    return val_acc / len(val_loader.dataset)


for _ in range(3):
    train_loss = train(train_loader, model, criterion, optimizer)
    val_acc = validate(val_loader, model, criterion)
    train_acc = validate(train_loader, model, criterion)
    print(train_loss, train_acc, val_acc)
```

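Approach 2:

- `criterion` is a cross-entropy loss, so its `target` argument must be a Long tensor (`LongTensor`), not an Int tensor (`IntTensor`).
- Without the conversion, PyTorch raises `RuntimeError: "nll_loss_forward_reduce_cuda_kernel_2d_index" not implemented for 'Int'`, meaning it has no CUDA kernel that computes this loss for Int targets.
- Here `.long()` is applied to `target` at the point where it is passed to `criterion`, in both `train()` and `validate()`. This avoids the runtime error and computes the loss correctly.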

```python
import torch

# Assumes train_loader, val_loader, model, criterion and optimizer are already defined.

def train(train_loader, model, criterion, optimizer):
    model.train()
    train_loss = 0.0

    for i, (input, target) in enumerate(train_loader):
        input = input.cuda(non_blocking=True)
        target = target.cuda(non_blocking=True)

        output = model(input)
        # Before: loss = criterion(output, target)
        # Reason: criterion needs a Long target tensor, not an Int one
        loss = criterion(output, target.long())  # convert to Long

        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

        if i % 20 == 0:
            print(loss.item())

        train_loss += loss.item()

    return train_loss / len(train_loader)


def validate(val_loader, model, criterion):
    model.eval()
    val_acc = 0.0

    with torch.no_grad():
        for i, (input, target) in enumerate(val_loader):
            input = input.cuda()
            target = target.cuda()

            # compute output
            output = model(input)
            # Before: loss = criterion(output, target)
            # Reason: criterion needs a Long target tensor, not an Int one
            loss = criterion(output, target.long())  # convert to Long

            val_acc += (output.argmax(1) == target).sum().item()

    return val_acc / len(val_loader.dataset)


for _ in range(3):
    train_loss = train(train_loader, model, criterion, optimizer)
    val_acc = validate(val_loader, model, criterion)
    train_acc = validate(train_loader, model, criterion)
    print(train_loss, train_acc, val_acc)
```
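Both approaches convert the targets inside the loop. Alternatively, the dtype can be fixed once where the labels are produced. The post does not say where its labels come from; one possible source of Int targets is an int32 NumPy label array (NumPy's default integer on Windows was 32-bit before NumPy 2.0, and `torch.from_numpy` keeps the array's dtype). The `LabeledArrays` dataset below is a hypothetical sketch of that fix, not the original author's code.

```python
import numpy as np
import torch
from torch.utils.data import DataLoader, Dataset


class LabeledArrays(Dataset):
    """Hypothetical dataset: float features and integer class labels in NumPy arrays."""

    def __init__(self, features: np.ndarray, labels: np.ndarray):
        self.features = features
        self.labels = labels

    def __len__(self) -> int:
        return len(self.labels)

    def __getitem__(self, idx: int):
        x = torch.as_tensor(self.features[idx], dtype=torch.float32)
        # Cast the label to int64 here, so every batch of targets is already Long
        # whatever integer dtype the label array uses.
        y = torch.tensor(int(self.labels[idx]), dtype=torch.long)
        return x, y


features = np.random.rand(6, 4).astype(np.float32)
labels = np.array([0, 1, 1, 0, 1, 0], dtype=np.int32)  # int32 labels (made-up data)
loader = DataLoader(LabeledArrays(features, labels), batch_size=3)

_, target = next(iter(loader))
print(target.dtype)  # torch.int64
```

With the cast done in `__getitem__`, `criterion(output, target)` works without any `.long()` in the training loop.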

Error 3: `invalid literal for int() with base 10: 'Test\\94'`

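Approach 1:

- On Windows the backslash (`\`) is the path separator, as in `C:\Windows` or the network path `\\172.12.1.34`. On Linux the forward slash (`/`) is the separator, as in `/home/user` or `/mnt/cdrom`.
- Windows also accepts the forward slash (`/`) as a separator in many places, including Python's own file functions, but many Windows programs use `/` to introduce command-line options, so the backslash (`\`) is the safer choice for paths passed to such programs.
- This mismatch is what causes the error: the original code splits each path on `/`, but on Windows the file name is preceded by a backslash, so the last piece after the split is still `Test\94` plus the extension. After `[:-4]` removes the extension, `int()` receives `Test\94` and fails. The code below splits on `\` instead.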
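The failure can be reproduced without any data. This is a minimal sketch; the path `./Test\94.png` is made up (only `Test\94` comes from the error message) and assumes a Windows-style path such as `glob.glob` typically returns on Windows for a pattern written with `/`.

```python
path = "./Test\\94.png"  # made-up Windows-style path; "\\" is a single backslash

name = path.split("/")[-1]  # splits only at "/", so "Test\94.png" survives
print(name[:-4])            # Test\94

try:
    int(name[:-4])
except ValueError as exc:
    print(exc)              # invalid literal for int() with base 10: 'Test\\94'

# Splitting on the backslash instead isolates the number.
print(int(path.split("\\")[-1][:-4]))  # 94
```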

```python
import numpy as np
import pandas as pd
import torch

# Assumes test_loader, model, criterion and test_path are already defined.

def predict(test_loader, model, criterion):
    model.eval()
    val_acc = 0.0
    test_pred = []

    with torch.no_grad():
        for i, (input, target) in enumerate(test_loader):
            input = input.cuda()
            target = target.cuda()
            output = model(input)
            test_pred.append(output.data.cpu().numpy())

    return np.vstack(test_pred)


pred = None
for _ in range(10):
    if pred is None:
        pred = predict(test_loader, model, criterion)
    else:
        pred += predict(test_loader, model, criterion)

submit = pd.DataFrame(
    {
        # Changed: split on the backslash instead of the forward slash, because the
        # Windows paths use "\" as separator (this version would fail on Linux paths).
        # Original: 'uuid': [int(x.split('/')[-1][:-4]) for x in test_path],
        'uuid': [int(x.split('\\')[-1][:-4]) for x in test_path],
        'label': pred.argmax(1)
    })

submit['label'] = submit['label'].map({1: 'NC', 0: 'MCI'})
submit = submit.sort_values(by='uuid')
submit.to_csv('submit2.csv', index=False)
```

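Approach 2:

- Use `os.path.basename` to get the file name instead of `x.split('/')[-1][:-4]`, to avoid path-separator mismatches between operating systems.
- `os.path.basename` is a function in Python's standard library (the `os.path` module), not a built-in. It returns the last component of a path using the separator rules of the system the code runs on: on Windows it accepts both `\` and `/`, on Linux and macOS only `/`.
- For example, on Windows, if `x` is the path `C:\Users\Alice\Documents\test.txt`, `os.path.basename(x)` returns `'test.txt'`.
- If `x` is `'/home/bob/test.txt'`, `os.path.basename(x)` also returns `'test.txt'`.
- This avoids the mismatch between the Windows backslash `\` and the Linux forward slash `/` for paths created on the machine that runs the code. A Windows-style path string processed on Linux is not split, however, because `\` is not a separator there.
- `x.split('/')[-1][:-4]`, by contrast, assumes the path uses `/` and that the last four characters of the file name are the extension, which can cause errors or wrong results in other cases. `os.path.splitext` removes the last extension whatever its length.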
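The behavior described above can be checked on any OS by importing `ntpath` and `posixpath` directly, the modules behind `os.path` on Windows and on Linux/macOS. If the paths may come from either system, one option is `pathlib.PureWindowsPath`, which treats both `\` and `/` as separators on every OS. `uuid_from_path` is a hypothetical helper, a sketch rather than the original author's method.

```python
import ntpath
import posixpath
from pathlib import PureWindowsPath

win_path = "C:\\Users\\Alice\\Documents\\test.txt"
unix_path = "/home/bob/test.txt"

print(ntpath.basename(win_path))      # test.txt
print(ntpath.basename(unix_path))     # test.txt (ntpath also splits on "/")
print(posixpath.basename(unix_path))  # test.txt
print(posixpath.basename(win_path))   # the whole string: posixpath ignores "\"


def uuid_from_path(path: str) -> int:
    """Hypothetical helper: './Test\\94.png' or '/data/Test/94.png' -> 94."""
    # PureWindowsPath accepts both "\" and "/" on every OS; .stem drops the
    # directory part and the last extension, whatever its length.
    return int(PureWindowsPath(path).stem)


print(uuid_from_path("./Test\\94.png"), uuid_from_path("/data/Test/94.png"))  # 94 94
```

The trade-off: a Linux file name that itself contains a backslash would be split by `PureWindowsPath`, so `os.path.basename` remains the better choice when paths are always produced on the machine that runs the code.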

```python
import os

import numpy as np
import pandas as pd
import torch

# Assumes test_loader, model, criterion and test_path are already defined.

def predict(test_loader, model, criterion):
    model.eval()
    val_acc = 0.0
    test_pred = []

    with torch.no_grad():
        for i, (input, target) in enumerate(test_loader):
            input = input.cuda()
            target = target.cuda()
            output = model(input)
            test_pred.append(output.data.cpu().numpy())

    return np.vstack(test_pred)


pred = None
for _ in range(10):
    if pred is None:
        pred = predict(test_loader, model, criterion)
    else:
        pred += predict(test_loader, model, criterion)

submit = pd.DataFrame(
    {
        # Use os.path.basename to get the file name, then os.path.splitext to drop its extension
        'uuid': [int(os.path.splitext(os.path.basename(x))[0]) for x in test_path],
        'label': pred.argmax(1)
    })

submit['label'] = submit['label'].map({1: 'NC', 0: 'MCI'})
submit = submit.sort_values(by='uuid')
submit.to_csv('submit2.csv', index=False)
```