- nosql数据库技术与应用知识点

皆过客,揽星河

NoSQLnosql数据库大数据数据分析数据结构非关系型数据库

Nosql知识回顾大数据处理流程数据采集(flume、爬虫、传感器)数据存储(本门课程NoSQL所处的阶段)Hdfs、MongoDB、HBase等数据清洗(入仓)Hive等数据处理、分析(Spark、Flink等)数据可视化数据挖掘、机器学习应用(Python、SparkMLlib等)大数据时代存储的挑战(三高)高并发(同一时间很多人访问)高扩展(要求随时根据需求扩展存储)高效率(要求读写速度快)

- ES聚合分析原理与代码实例讲解

光剑书架上的书

大厂Offer收割机面试题简历程序员读书硅基计算碳基计算认知计算生物计算深度学习神经网络大数据AIGCAGILLMJavaPython架构设计Agent程序员实现财富自由

ES聚合分析原理与代码实例讲解1.背景介绍1.1问题的由来在大规模数据分析场景中,特别是在使用Elasticsearch(ES)进行数据存储和检索时,聚合分析成为了一个至关重要的功能。聚合分析允许用户对数据集进行细分和分组,以便深入探索数据的结构和模式。这在诸如实时监控、日志分析、业务洞察等领域具有广泛的应用。1.2研究现状目前,ES聚合分析已经成为现代大数据平台的核心组件之一。它支持多种类型的聚

- WebMagic:强大的Java爬虫框架解析与实战

Aaron_945

Javajava爬虫开发语言

文章目录引言官网链接WebMagic原理概述基础使用1.添加依赖2.编写PageProcessor高级使用1.自定义Pipeline2.分布式抓取优点结论引言在大数据时代,网络爬虫作为数据收集的重要工具,扮演着不可或缺的角色。Java作为一门广泛使用的编程语言,在爬虫开发领域也有其独特的优势。WebMagic是一个开源的Java爬虫框架,它提供了简单灵活的API,支持多线程、分布式抓取,以及丰富的

- 免费的GPT可在线直接使用(一键收藏)

kkai人工智能

gpt

1、LuminAI(https://kk.zlrxjh.top)LuminAI标志着一款融合了星辰大数据模型与文脉深度模型的先进知识增强型语言处理系统,旨在自然语言处理(NLP)的技术开发领域发光发热。此系统展现了卓越的语义把握与内容生成能力,轻松驾驭多样化的自然语言处理任务。VisionAI在NLP界的应用领域广泛,能够胜任从机器翻译、文本概要撰写、情绪分析到问答等众多任务。通过对大量文本数据的

- 如何利用大数据与AI技术革新相亲交友体验

h17711347205

回归算法安全系统架构交友小程序

在数字化时代,大数据和人工智能(AI)技术正逐渐革新相亲交友体验,为寻找爱情的过程带来前所未有的变革(编辑h17711347205)。通过精准分析和智能匹配,这些技术能够极大地提高相亲交友系统的效率和用户体验。大数据的力量大数据技术能够收集和分析用户的行为模式、偏好和互动数据,为相亲交友系统提供丰富的信息资源。通过分析用户的搜索历史、浏览记录和点击行为,系统能够深入了解用户的兴趣和需求,从而提供更

- 浅谈MapReduce

Android路上的人

Hadoop分布式计算mapreduce分布式框架hadoop

从今天开始,本人将会开始对另一项技术的学习,就是当下炙手可热的Hadoop分布式就算技术。目前国内外的诸多公司因为业务发展的需要,都纷纷用了此平台。国内的比如BAT啦,国外的在这方面走的更加的前面,就不一一列举了。但是Hadoop作为Apache的一个开源项目,在下面有非常多的子项目,比如HDFS,HBase,Hive,Pig,等等,要先彻底学习整个Hadoop,仅仅凭借一个的力量,是远远不够的。

- 未来软件市场是怎么样的?做开发的生存空间如何?

cesske

软件需求

目录前言一、未来软件市场的发展趋势二、软件开发人员的生存空间前言未来软件市场是怎么样的?做开发的生存空间如何?一、未来软件市场的发展趋势技术趋势:人工智能与机器学习:随着技术的不断成熟,人工智能将在更多领域得到应用,如智能客服、自动驾驶、智能制造等,这将极大地推动软件市场的增长。云计算与大数据:云计算服务将继续普及,大数据技术的应用也将更加广泛。企业将更加依赖云计算和大数据来优化运营、提升效率,并

- Hadoop

傲雪凌霜,松柏长青

后端大数据hadoop大数据分布式

ApacheHadoop是一个开源的分布式计算框架,主要用于处理海量数据集。它具有高度的可扩展性、容错性和高效的分布式存储与计算能力。Hadoop核心由四个主要模块组成,分别是HDFS(分布式文件系统)、MapReduce(分布式计算框架)、YARN(资源管理)和HadoopCommon(公共工具和库)。1.HDFS(HadoopDistributedFileSystem)HDFS是Hadoop生

- Hadoop架构

henan程序媛

hadoop大数据分布式

一、案列分析1.1案例概述现在已经进入了大数据(BigData)时代,数以万计用户的互联网服务时时刻刻都在产生大量的交互,要处理的数据量实在是太大了,以传统的数据库技术等其他手段根本无法应对数据处理的实时性、有效性的需求。HDFS顺应时代出现,在解决大数据存储和计算方面有很多的优势。1.2案列前置知识点1.什么是大数据大数据是指无法在一定时间范围内用常规软件工具进行捕捉、管理和处理的大量数据集合,

- [转载] NoSQL简介

weixin_30325793

大数据数据库运维

摘自“百度百科”。NoSQL,泛指非关系型的数据库。随着互联网web2.0网站的兴起,传统的关系数据库在应付web2.0网站,特别是超大规模和高并发的SNS类型的web2.0纯动态网站已经显得力不从心,暴露了很多难以克服的问题,而非关系型的数据库则由于其本身的特点得到了非常迅速的发展。NoSQL数据库的产生就是为了解决大规模数据集合多重数据种类带来的挑战,尤其是大数据应用难题。虽然NoSQL流行语

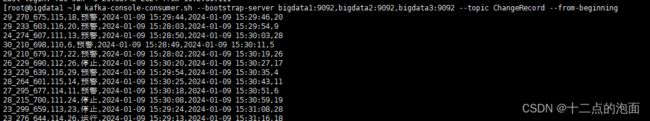

- Kafka详细解析与应用分析

芊言芊语

kafka分布式

Kafka是一个开源的分布式事件流平台(EventStreamingPlatform),由LinkedIn公司最初采用Scala语言开发,并基于ZooKeeper协调管理。如今,Kafka已经被Apache基金会纳入其项目体系,广泛应用于大数据实时处理领域。Kafka凭借其高吞吐量、持久化、分布式和可靠性的特点,成为构建实时流数据管道和流处理应用程序的重要工具。Kafka架构Kafka的架构主要由

- 分享一个基于python的电子书数据采集与可视化分析 hadoop电子书数据分析与推荐系统 spark大数据毕设项目(源码、调试、LW、开题、PPT)

计算机源码社

Python项目大数据大数据pythonhadoop计算机毕业设计选题计算机毕业设计源码数据分析spark毕设

作者:计算机源码社个人简介:本人八年开发经验,擅长Java、Python、PHP、.NET、Node.js、Android、微信小程序、爬虫、大数据、机器学习等,大家有这一块的问题可以一起交流!学习资料、程序开发、技术解答、文档报告如需要源码,可以扫取文章下方二维码联系咨询Java项目微信小程序项目Android项目Python项目PHP项目ASP.NET项目Node.js项目选题推荐项目实战|p

- 疫情,疫情

东山草

2020年,疫情爆发,至今已近三年,反反复复,此起彼伏。不但没被消灭,还自我发展,从德尔塔到奥密克戎,与时俱进的变异着。去年11月,疫情之下,大数据800米范围内,都成为时空伴随者。“你的码儿有没有变颜色”“你绿码还是黄码”成为那段时间的流行语,当然少不了的还有全员核酸。段子手整出来一首歌:我走过你走过的路,这算不算相逢?我吹过你吹过的风,这算不算相拥?800米内我们不曾擦肩而过,你却要我14天相

- 在服务器计算节点中使用 jupyter Lab

ranshan567

程序人生

JupyterLab是一个基于网页的交互式开发环境,用于科学计算、数据分析和机器学.jupyterlab是jupyternotebook的下一代产品,集成了更多功能,使用起来更方便.在进行数据分析及可视化时,个人电脑不能满足大数据的分析需求,就需要用到高性能计算机集群资源,然而计算机集群的计算节点往往没有联网功能,所以在计算机集群中使用jupyterLab需要进行一些配置。具体的步骤如下:

- 大数据真实面试题---SQL

The博宇

大数据面试题——SQL大数据mysqlsql数据库bigdata

视频号数据分析组外包招聘笔试题时间限时45分钟完成。题目根据3张表表结构,写出具体求解的SQL代码(搞笑品类定义:视频分类或者视频创建者分类为“搞笑”)1、表创建语句:createtablet_user_video_action_d(dsint,user_idstring,video_idstring,action_typeint,`timestamp`bigint)rowformatdelimi

- hbase介绍

CrazyL-

云计算+大数据hbase

hbase是一个分布式的、多版本的、面向列的开源数据库hbase利用hadoophdfs作为其文件存储系统,提供高可靠性、高性能、列存储、可伸缩、实时读写、适用于非结构化数据存储的数据库系统hbase利用hadoopmapreduce来处理hbase、中的海量数据hbase利用zookeeper作为分布式系统服务特点:数据量大:一个表可以有上亿行,上百万列(列多时,插入变慢)面向列:面向列(族)的

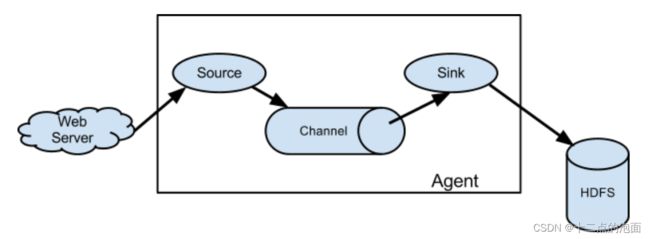

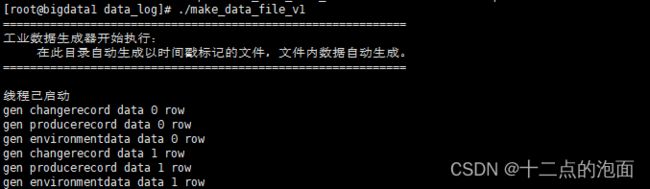

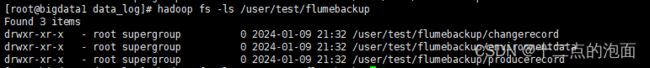

- Flume:大规模日志收集与数据传输的利器

傲雪凌霜,松柏长青

后端大数据flume大数据

Flume:大规模日志收集与数据传输的利器在大数据时代,随着各类应用的不断增长,产生了海量的日志和数据。这些数据不仅对业务的健康监控至关重要,还可以通过深入分析,帮助企业做出更好的决策。那么,如何高效地收集、传输和存储这些海量数据,成为了一项重要的挑战。今天我们将深入探讨ApacheFlume,它是如何帮助我们应对这些挑战的。一、Flume概述ApacheFlume是一个分布式、可靠、可扩展的日志

- 云服务业界动态简报-20180128

Captain7

一、青云青云QingCloud推出深度学习平台DeepLearningonQingCloud,包含了主流的深度学习框架及数据科学工具包,通过QingCloudAppCenter一键部署交付,可以让算法工程师和数据科学家快速构建深度学习开发环境,将更多的精力放在模型和算法调优。二、腾讯云1.腾讯云正式发布腾讯专有云TCE(TencentCloudEnterprise)矩阵,涵盖企业版、大数据版、AI

- 大数据毕业设计hadoop+spark+hive知识图谱租房数据分析可视化大屏 租房推荐系统 58同城租房爬虫 房源推荐系统 房价预测系统 计算机毕业设计 机器学习 深度学习 人工智能

2401_84572577

程序员大数据hadoop人工智能

做了那么多年开发,自学了很多门编程语言,我很明白学习资源对于学一门新语言的重要性,这些年也收藏了不少的Python干货,对我来说这些东西确实已经用不到了,但对于准备自学Python的人来说,或许它就是一个宝藏,可以给你省去很多的时间和精力。别在网上瞎学了,我最近也做了一些资源的更新,只要你是我的粉丝,这期福利你都可拿走。我先来介绍一下这些东西怎么用,文末抱走。(1)Python所有方向的学习路线(

- 架构评审的自动化与人工智能: 如何提高效率

光剑书架上的书

架构自动化人工智能运维

1.背景介绍架构评审是软件开发过程中的一个关键环节,它旨在确保软件架构的质量、可维护性和可扩展性。传统的架构评审通常是由人工进行,需要大量的时间和精力。随着大数据技术和人工智能的发展,自动化和人工智能技术已经开始应用于架构评审,从而提高评审的效率和准确性。在本文中,我们将讨论如何通过自动化和人工智能技术来提高架构评审的效率。我们将从以下几个方面进行讨论:背景介绍核心概念与联系核心算法原理和具体操作

- 【数字化供应链】数字化供应链架构、全景管理、全流程贯通方案

数字化建设方案

数字化转型数据治理主数据数据仓库供应链数字仓储智慧物流智慧仓储物流园区架构微服务数据挖掘大数据人工智能

原文《数字化供应链架构、全景管理、全流程贯通方案》PPT格式。主要从供应链管理全景、智慧供应链建设总体目标、供应链总体业务流程、供应链总体功能架构、供应链总体技术架构、供应链全流程贯通、供应链全领域管理、供应链数据数据分析、供应链决策中台等进行建设。本文仅对主要内容进行介绍。来源网络公开渠道,旨在交流学习,如有侵权联系速删,更多参考公众号:优享智库基于先进IT技术、大数据能力、物联网应用、区块链平

- 80

鑫_259b

科普一个谈恋爱的方法。在以前,谈恋爱千难万难,就难在对对方不知底细,不知道对方希望自己是一个怎样的人,要耗费大量的时间去试探、再磨合,往往会因为一些小事一些细节,满盘皆输。在一个信息化的时代,在一个大数据近乎变成了流行语的时代,我们要跟上时代的步伐,通过大数据,去寻找异性最希望自己展现出来的形象是什么,才可以在爱情的道路上少走弯路。那这个大数据怎么操作呢?上街发问卷?问别人的择偶标准?一来会被打死

- 解锁企业潜能,Vatee万腾平台引领智能新纪元

自媒体经济说

其他

在数字化转型的浪潮中,企业正站在一个前所未有的十字路口,面对着前所未有的机遇与挑战。解锁企业内在潜能,实现跨越式发展,已成为众多企业的共同追求。而Vatee万腾平台,作为智能科技的先锋,正以其强大的智能赋能能力,引领企业步入一个全新的智能纪元。Vatee万腾平台,是一个集成了人工智能、大数据、云计算等前沿技术的综合性智能服务平台。它不仅仅是一个技术工具,更是企业转型升级的加速器,能够深入企业运营的

- 释放“AI+”新质生产力,深算院如何“把大数据变小”?

YashanDB

YashanDB国产数据库数据库数据库大数据

近期,南都·湾财社推出《新质·中国造》栏目,深入千行百业,遍访湾区企业,解锁湾区新质生产力,共探高质量发展之道。本期对话深圳计算科学研究院YashanDB首席技术官陈志标,探讨国产数据库如何实现创新突围,抢抓数字经济时代的新机遇。以下是专访内容:如何应对AI时代所面临的算力挑战?南都·湾财社:数据、算力和算法是发展人工智能的三要素,深算院做了怎样的前瞻性布局?陈志标:今年,政府工作报告中首次提及开

- 数字化智能工厂数字化供应链架构、全景管理、全流程贯通方案

数字化建设方案

智能制造数字工厂制造业数字化转型工业互联网架构

随着信息技术的飞速发展,数字化转型已成为制造企业提升竞争力的关键途径。数字化智能工厂通过集成先进的物联网(IoT)、大数据、云计算、人工智能(AI)等技术,实现了生产过程的智能化、供应链管理的精准化及决策的科学化。本方案旨在构建一套完善的数字化供应链架构,实现全景管理、全流程贯通、智慧化升级,以数据为驱动,强化技术支撑与安全管理体系,推动企业向智能制造迈进。一、数字化供应链架构1.**集成化平台构

- 日记——我的歌单

静若小猴

又到一年一度大数据汇总的时候了,听歌已经成为很多人生活里的一种乐趣。春夏秋冬,我们都有自己喜欢的歌,歌词歌曲唱出沃尔玛你的心声。还记得大学时候最喜欢听的《春天里》,我有一天单曲回放了30遍,总觉得听着仿佛看到自己声音。还有的歌,初听不知曲中意,再听已经是曲终人,听着歌流泪,听着歌入睡……还记得那些年少的故事吗,总觉得自己才是故事外的人,却不是自己已经入歌。一段时间会喜欢一个人的音乐,一段时间会沉静

- Linux dmesg命令:显示开机信息

fafadsj666

linux数据库数据挖掘机器学习大数据

通过学习《Linux启动管理》一章可以知道,在系统启动过程中,内核还会进行一次系统检测(第一次是BIOS进行加测),但是检测的过程不是没有显示在屏幕上,就是会快速的在屏幕上一闪而过那么,如果开机时来不及查看相关信息,我们是否可以在开机后查看呢?答案是肯定的,使用dmesg命令就可以。无论是系统启动过程中,还是系统运行过程中,只要是内核产生的信息,都会被存储在系统缓冲区中,已经为大家精心准备了大数据

- 大数据新视界 --大数据大厂之揭秘大数据时代 Excel 魔法:大厂数据分析师进阶秘籍

青云交

大数据新视界Excel数据分析函数公式数据透视表图表功能规划求解数据分析工具库大数据新视界数据库

亲爱的朋友们,热烈欢迎你们来到青云交的博客!能与你们在此邂逅,我满心欢喜,深感无比荣幸。在这个瞬息万变的时代,我们每个人都在苦苦追寻一处能让心灵安然栖息的港湾。而我的博客,正是这样一个温暖美好的所在。在这里,你们不仅能够收获既富有趣味又极为实用的内容知识,还可以毫无拘束地畅所欲言,尽情分享自己独特的见解。我真诚地期待着你们的到来,愿我们能在这片小小的天地里共同成长,共同进步。本博客的精华专栏:Ja

- 大数据新视界 --大数据大厂之数据挖掘入门:用 R 语言开启数据宝藏的探索之旅

青云交

大数据新视界数据库大数据数据挖掘R语言算法案例未来趋势应用场景学习建议大数据新视界

亲爱的朋友们,热烈欢迎你们来到青云交的博客!能与你们在此邂逅,我满心欢喜,深感无比荣幸。在这个瞬息万变的时代,我们每个人都在苦苦追寻一处能让心灵安然栖息的港湾。而我的博客,正是这样一个温暖美好的所在。在这里,你们不仅能够收获既富有趣味又极为实用的内容知识,还可以毫无拘束地畅所欲言,尽情分享自己独特的见解。我真诚地期待着你们的到来,愿我们能在这片小小的天地里共同成长,共同进步。本博客的精华专栏:Ja

- 高职人工智能训练师边缘计算实训室解决方案

武汉唯众智创

人工智能训练师边缘计算实训室人工智能训练师实训室边缘计算实训室

一、引言随着物联网(IoT)、大数据、人工智能(AI)等技术的飞速发展,计算需求日益复杂和多样化。传统的云计算模式虽在一定程度上满足了这些需求,但在处理海量数据、保障实时性与安全性、提升计算效率等方面仍面临诸多挑战。在此背景下,边缘计算作为一种新兴的计算模式应运而生,通过将计算能力推向数据生成或用户所在的网络边缘,显著降低了数据传输的延迟,提升了处理效率,并增强了数据安全性。针对高等职业院校的人工

- java短路运算符和逻辑运算符的区别

3213213333332132

java基础

/*

* 逻辑运算符——不论是什么条件都要执行左右两边代码

* 短路运算符——我认为在底层就是利用物理电路的“并联”和“串联”实现的

* 原理很简单,并联电路代表短路或(||),串联电路代表短路与(&&)。

*

* 并联电路两个开关只要有一个开关闭合,电路就会通。

* 类似于短路或(||),只要有其中一个为true(开关闭合)是

- Java异常那些不得不说的事

白糖_

javaexception

一、在finally块中做数据回收操作

比如数据库连接都是很宝贵的,所以最好在finally中关闭连接。

JDBCAgent jdbc = new JDBCAgent();

try{

jdbc.excute("select * from ctp_log");

}catch(SQLException e){

...

}finally{

jdbc.close();

- utf-8与utf-8(无BOM)的区别

dcj3sjt126com

PHP

BOM——Byte Order Mark,就是字节序标记 在UCS 编码中有一个叫做"ZERO WIDTH NO-BREAK SPACE"的字符,它的编码是FEFF。而FFFE在UCS中是不存在的字符,所以不应该出现在实际传输中。UCS规范建议我们在传输字节流前,先传输 字符"ZERO WIDTH NO-BREAK SPACE"。这样如

- JAVA Annotation之定义篇

周凡杨

java注解annotation入门注释

Annotation: 译为注释或注解

An annotation, in the Java computer programming language, is a form of syntactic metadata that can be added to Java source code. Classes, methods, variables, pa

- tomcat的多域名、虚拟主机配置

g21121

tomcat

众所周知apache可以配置多域名和虚拟主机,而且配置起来比较简单,但是项目用到的是tomcat,配来配去总是不成功。查了些资料才总算可以,下面就跟大家分享下经验。

很多朋友搜索的内容基本是告诉我们这么配置:

在Engine标签下增面积Host标签,如下:

<Host name="www.site1.com" appBase="webapps"

- Linux SSH 错误解析(Capistrano 的cap 访问错误 Permission )

510888780

linuxcapistrano

1.ssh -v

[email protected] 出现

Permission denied (publickey,gssapi-keyex,gssapi-with-mic,password).

错误

运行状况如下:

OpenSSH_5.3p1, OpenSSL 1.0.1e-fips 11 Feb 2013

debug1: Reading configuratio

- log4j的用法

Harry642

javalog4j

一、前言: log4j 是一个开放源码项目,是广泛使用的以Java编写的日志记录包。由于log4j出色的表现, 当时在log4j完成时,log4j开发组织曾建议sun在jdk1.4中用log4j取代jdk1.4 的日志工具类,但当时jdk1.4已接近完成,所以sun拒绝使用log4j,当在java开发中

- mysql、sqlserver、oracle分页,java分页统一接口实现

aijuans

oraclejave

定义:pageStart 起始页,pageEnd 终止页,pageSize页面容量

oracle分页:

select * from ( select mytable.*,rownum num from (实际传的SQL) where rownum<=pageEnd) where num>=pageStart

sqlServer分页:

- Hessian 简单例子

antlove

javaWebservicehessian

hello.hessian.MyCar.java

package hessian.pojo;

import java.io.Serializable;

public class MyCar implements Serializable {

private static final long serialVersionUID = 473690540190845543

- 数据库对象的同义词和序列

百合不是茶

sql序列同义词ORACLE权限

回顾简单的数据库权限等命令;

解锁用户和锁定用户

alter user scott account lock/unlock;

//system下查看系统中的用户

select * dba_users;

//创建用户名和密码

create user wj identified by wj;

identified by

//授予连接权和建表权

grant connect to

- 使用Powermock和mockito测试静态方法

bijian1013

持续集成单元测试mockitoPowermock

实例:

package com.bijian.study;

import static org.junit.Assert.assertEquals;

import java.io.IOException;

import org.junit.Before;

import org.junit.Test;

import or

- 精通Oracle10编程SQL(6)访问ORACLE

bijian1013

oracle数据库plsql

/*

*访问ORACLE

*/

--检索单行数据

--使用标量变量接收数据

DECLARE

v_ename emp.ename%TYPE;

v_sal emp.sal%TYPE;

BEGIN

select ename,sal into v_ename,v_sal

from emp where empno=&no;

dbms_output.pu

- 【Nginx四】Nginx作为HTTP负载均衡服务器

bit1129

nginx

Nginx的另一个常用的功能是作为负载均衡服务器。一个典型的web应用系统,通过负载均衡服务器,可以使得应用有多台后端服务器来响应客户端的请求。一个应用配置多台后端服务器,可以带来很多好处:

负载均衡的好处

增加可用资源

增加吞吐量

加快响应速度,降低延时

出错的重试验机制

Nginx主要支持三种均衡算法:

round-robin

l

- jquery-validation备忘

白糖_

jquerycssF#Firebug

留点学习jquery validation总结的代码:

function checkForm(){

validator = $("#commentForm").validate({// #formId为需要进行验证的表单ID

errorElement :"span",// 使用"div"标签标记错误, 默认:&

- solr限制admin界面访问(端口限制和http授权限制)

ronin47

限定Ip访问

solr的管理界面可以帮助我们做很多事情,但是把solr程序放到公网之后就要限制对admin的访问了。

可以通过tomcat的http基本授权来做限制,也可以通过iptables防火墙来限制。

我们先看如何通过tomcat配置http授权限制。

第一步: 在tomcat的conf/tomcat-users.xml文件中添加管理用户,比如:

<userusername="ad

- 多线程-用JAVA写一个多线程程序,写四个线程,其中二个对一个变量加1,另外二个对一个变量减1

bylijinnan

java多线程

public class IncDecThread {

private int j=10;

/*

* 题目:用JAVA写一个多线程程序,写四个线程,其中二个对一个变量加1,另外二个对一个变量减1

* 两个问题:

* 1、线程同步--synchronized

* 2、线程之间如何共享同一个j变量--内部类

*/

public static

- 买房历程

cfyme

2015-06-21: 万科未来城,看房子

2015-06-26: 办理贷款手续,贷款73万,贷款利率5.65=5.3675

2015-06-27: 房子首付,签完合同

2015-06-28,央行宣布降息 0.25,就2天的时间差啊,没赶上。

首付,老婆找他的小姐妹接了5万,另外几个朋友借了1-

- [军事与科技]制造大型太空战舰的前奏

comsci

制造

天气热了........空调和电扇要准备好..........

最近,世界形势日趋复杂化,战争的阴影开始覆盖全世界..........

所以,我们不得不关

- dateformat

dai_lm

DateFormat

"Symbol Meaning Presentation Ex."

"------ ------- ------------ ----"

"G era designator (Text) AD"

"y year

- Hadoop如何实现关联计算

datamachine

mapreducehadoop关联计算

选择Hadoop,低成本和高扩展性是主要原因,但但它的开发效率实在无法让人满意。

以关联计算为例。

假设:HDFS上有2个文件,分别是客户信息和订单信息,customerID是它们之间的关联字段。如何进行关联计算,以便将客户名称添加到订单列表中?

&nbs

- 用户模型中修改用户信息时,密码是如何处理的

dcj3sjt126com

yii

当我添加或修改用户记录的时候对于处理确认密码我遇到了一些麻烦,所有我想分享一下我是怎么处理的。

场景是使用的基本的那些(系统自带),你需要有一个数据表(user)并且表中有一个密码字段(password),它使用 sha1、md5或其他加密方式加密用户密码。

面是它的工作流程: 当创建用户的时候密码需要加密并且保存,但当修改用户记录时如果使用同样的场景我们最终就会把用户加密过的密码再次加密,这

- 中文 iOS/Mac 开发博客列表

dcj3sjt126com

Blog

本博客列表会不断更新维护,如果有推荐的博客,请到此处提交博客信息。

本博客列表涉及的文章内容支持 定制化Google搜索,特别感谢 JeOam 提供并帮助更新。

本博客列表也提供同步更新的OPML文件(下载OPML文件),可供导入到例如feedly等第三方定阅工具中,特别感谢 lcepy 提供自动转换脚本。这里有导入教程。

- js去除空格,去除左右两端的空格

蕃薯耀

去除左右两端的空格js去掉所有空格js去除空格

js去除空格,去除左右两端的空格

>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>&g

- SpringMVC4零配置--web.xml

hanqunfeng

springmvc4

servlet3.0+规范后,允许servlet,filter,listener不必声明在web.xml中,而是以硬编码的方式存在,实现容器的零配置。

ServletContainerInitializer:启动容器时负责加载相关配置

package javax.servlet;

import java.util.Set;

public interface ServletContainer

- 《开源框架那些事儿21》:巧借力与借巧力

j2eetop

框架UI

同样做前端UI,为什么有人花了一点力气,就可以做好?而有的人费尽全力,仍然错误百出?我们可以先看看几个故事。

故事1:巧借力,乌鸦也可以吃核桃

有一个盛产核桃的村子,每年秋末冬初,成群的乌鸦总会来到这里,到果园里捡拾那些被果农们遗落的核桃。

核桃仁虽然美味,但是外壳那么坚硬,乌鸦怎么才能吃到呢?原来乌鸦先把核桃叼起,然后飞到高高的树枝上,再将核桃摔下去,核桃落到坚硬的地面上,被撞破了,于是,

- JQuery EasyUI 验证扩展

可怜的猫

jqueryeasyui验证

最近项目中用到了前端框架-- EasyUI,在做校验的时候会涉及到很多需要自定义的内容,现把常用的验证方式总结出来,留待后用。

以下内容只需要在公用js中添加即可。

使用类似于如下:

<input class="easyui-textbox" name="mobile" id="mobile&

- 架构师之httpurlconnection----------读取和发送(流读取效率通用类)

nannan408

1.前言.

如题.

2.代码.

/*

* Copyright (c) 2015, S.F. Express Inc. All rights reserved.

*/

package com.test.test.test.send;

import java.io.IOException;

import java.io.InputStream

- Jquery性能优化

r361251

JavaScriptjquery

一、注意定义jQuery变量的时候添加var关键字

这个不仅仅是jQuery,所有javascript开发过程中,都需要注意,请一定不要定义成如下:

$loading = $('#loading'); //这个是全局定义,不知道哪里位置倒霉引用了相同的变量名,就会郁闷至死的

二、请使用一个var来定义变量

如果你使用多个变量的话,请如下方式定义:

. 代码如下:

var page

- 在eclipse项目中使用maven管理依赖

tjj006

eclipsemaven

概览:

如何导入maven项目至eclipse中

建立自有Maven Java类库服务器

建立符合maven代码库标准的自定义类库

Maven在管理Java类库方面有巨大的优势,像白衣所说就是非常“环保”。

我们平时用IDE开发都是把所需要的类库一股脑的全丢到项目目录下,然后全部添加到ide的构建路径中,如果用了SVN/CVS,这样会很容易就 把

- 中国天气网省市级联页面

x125858805

级联

1、页面及级联js

<%@ page language="java" import="java.util.*" pageEncoding="UTF-8"%>

<!DOCTYPE HTML PUBLIC "-//W3C//DTD HTML 4.01 Transitional//EN">

&l