Successfully compiling TensorRT-LLM

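Building TRT-LLM on my local workstation from the downloaded docker environment failed with errors that I could not resolve.

So I decided to rent resources on a public cloud and build there, setting up the TRT-LLM build dependencies by hand.

For the public cloud I chose AutoDL, because it is simple to use and cheap.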

1. Preparation

Start the downloaded docker image and check the versions of the main dependencies used to build TRT-LLM:

OS:Ubuntu 22.04

cuda:12.2

cudnn: 8.9.4

tensorrt:9.1.0

python:3.10.12
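
The versions listed above can be collected with a small script instead of by hand. This is only a sketch: `show_versions` is a hypothetical helper name, and it assumes cuDNN and TensorRT are registered with the dynamic linker (visible in `ldconfig -p`), which may not hold for every image.

```shell
#!/bin/sh
# Print the versions (or library paths) of the main TRT-LLM build dependencies.
# Every probe falls back to "not found", so the function also runs on a
# machine where a component is missing.
show_versions() {
    # OS release string from /etc/os-release
    if [ -r /etc/os-release ]; then
        echo "OS: $(. /etc/os-release && echo "$PRETTY_NAME")"
    else
        echo "OS: not found"
    fi

    # CUDA toolkit release as reported by nvcc
    if command -v nvcc >/dev/null 2>&1; then
        echo "cuda: $(nvcc --version | sed -n 's/.*release \([0-9.]*\),.*/\1/p')"
    else
        echo "cuda: not found"
    fi

    # cuDNN and TensorRT: first matching library known to the dynamic linker
    for lib in libcudnn.so libnvinfer.so; do
        path=""
        if command -v ldconfig >/dev/null 2>&1; then
            path=$(ldconfig -p 2>/dev/null |
                awk -v l="$lib" '!found && index($1, l) == 1 {print $NF; found = 1}')
        fi
        echo "$lib: ${path:-not found}"
    done

    # Python interpreter version
    if command -v python3 >/dev/null 2>&1; then
        echo "python: $(python3 --version 2>&1)"
    else
        echo "python: not found"
    fi
}

# usage: show_versions
```

Running it once inside the docker image and once on the cloud instance gives two listings that can be compared line by line.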

While preparing the environment, you can boot the instance in "no-GPU mode" (无卡模式开机) to save on GPU fees.

2. Environment setup

2.1 Installing CUDA

# download cudatoolkit

root@XXX:~/autodl-tmp/files# wget https://developer.download.nvidia.com/compute/cuda/12.2.1/local_installers/cuda_12.2.1_535.86.10_linux.run

--2023-11-09 09:23:51-- https://developer.download.nvidia.com/compute/cuda/12.2.1/local_installers/cuda_12.2.1_535.86.10_linux.run

Resolving developer.download.nvidia.com (developer.download.nvidia.com)... 152.199.39.144

Connecting to developer.download.nvidia.com (developer.download.nvidia.com)|152.199.39.144|:443... connected.

HTTP request sent, awaiting response... 301 Moved Permanently

Location: https://developer.download.nvidia.cn/compute/cuda/12.2.1/local_installers/cuda_12.2.1_535.86.10_linux.run [following]

--2023-11-09 09:23:51-- https://developer.download.nvidia.cn/compute/cuda/12.2.1/local_installers/cuda_12.2.1_535.86.10_linux.run

Resolving developer.download.nvidia.cn (developer.download.nvidia.cn)... 120.221.249.91, 36.153.62.132, 120.221.249.90

Connecting to developer.download.nvidia.cn (developer.download.nvidia.cn)|120.221.249.91|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 4332490379 (4.0G) [application/octet-stream]

Saving to: ‘cuda_12.2.1_535.86.10_linux.run’

cuda_12.2.1_535.86.10_linux.run 100%[============================================================================================================>] 4.03G 27.0MB/s in 2m 23s

2023-11-09 09:26:15 (28.9 MB/s) - ‘cuda_12.2.1_535.86.10_linux.run’ saved [4332490379/4332490379]

root@XXX:~/autodl-tmp/files# ll

total 4230948

drwxr-xr-x 2 root root 53 Nov 9 09:23 ./

drwxr-xr-x 4 root root 46 Nov 9 09:23 ../

-rw-r--r-- 1 root root 4332490379 Jul 27 13:30 cuda_12.2.1_535.86.10_linux.run
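
The listing shows the same byte count that wget reported as `Length`, which is a quick sign that the download is complete. A minimal sketch of that check as a function follows; `check_size` is a hypothetical helper, and a size match does not replace a real checksum if NVIDIA publishes one for the file.

```shell
#!/bin/sh
# check_size FILE EXPECTED_BYTES
# Succeeds only if FILE exists and is exactly EXPECTED_BYTES long.
check_size() {
    actual=$(wc -c < "$1" | tr -d ' ')
    if [ "$actual" = "$2" ]; then
        echo "OK: $1 is $actual bytes"
    else
        echo "MISMATCH: $1 is ${actual:-missing}, expected $2 bytes" >&2
        return 1
    fi
}

# usage:
# check_size cuda_12.2.1_535.86.10_linux.run 4332490379
```

If the check fails after an interrupted transfer, `wget -c` with the same URL resumes the partial download instead of starting over.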

# install

root@XXX:~/autodl-tmp/files# sh cuda_12.2.1_535.86.10_linux.run

┌──────────────────────────────────────────────────────────────────────────────┐

│ End User License Agreement │

│ -------------------------- │

│ │

│ NVIDIA Software License Agreement and CUDA Supplement to │

│ Software License Agreement. Last updated: October 8, 2021 │

│ │

│ The CUDA Toolkit End User License Agreement applies to the │

│ NVIDIA CUDA Toolkit, the NVIDIA CUDA Samples, the NVIDIA │

│ Display Driver, NVIDIA Nsight tools (Visual Studio Edition), │

│ and the associated documentation on CUDA APIs, programming │

│ model and development tools. If you do not agree with the │

│ terms and conditions of the license agreement, then do not │

│ download or use the software. │

│ │

│ Last updated: October 8, 2021. │

│ │

│ │

│ Preface │

│ ------- │

│ │

│──────────────────────────────────────────────────────────────────────────────│

│ Do you accept the above EULA? (accept/decline/quit): │

│ accept

┌──────────────────────────────────────────────────────────────────────────────┐

│ CUDA Installer │

│ - [ ] Driver │

│ [ ] 535.86.10 │

│ + [X] CUDA Toolkit 12.2 │

│ [ ] CUDA Demo Suite 12.2 │

│ [ ] CUDA Documentation 12.2 │

│ - [ ] Kernel Objects │

│ [ ] nvidia-fs │

│ Options │

│ Install │

│ │

│ │

│ │

│ │

│ │

│ │

│ │

│ │

│ │

│ │

│ │

│ │

│ Up/Down: Move | Left/Right: Expand | 'Enter': Select | 'A': Advanced options │

└──────────────────────────────────────────────────────────────────────────────┘

The driver is already version 535, so only the CUDA Toolkit is installed.

After making the selection, choose Install.

root@XXX:~/autodl-tmp/files# nvcc --version

nvcc: NVIDIA (R) Cuda compiler driver

Copyright (c) 2005-2023 NVIDIA Corporation

Built on Tue_Jul_11_02:20:44_PDT_2023

Cuda compilation tools, release 12.2, V12.2.128

Build cuda_12.2.r12.2/compiler.33053471_0
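
The interactive menu can be skipped when the same setup has to be repeated on a fresh instance. The sketch below assumes the runfile's `--silent` and `--toolkit` options, which install only the toolkit and leave the existing driver untouched; `install_cuda_toolkit` is a hypothetical wrapper name.

```shell
#!/bin/sh
# install_cuda_toolkit RUNFILE
# Non-interactive equivalent of selecting only "CUDA Toolkit" in the menu.
install_cuda_toolkit() {
    runfile="$1"
    if [ ! -f "$runfile" ]; then
        echo "runfile not found: $runfile" >&2
        return 1
    fi
    # --silent : no EULA prompt and no menu
    # --toolkit: install the toolkit only, not the driver
    sh "$runfile" --silent --toolkit
}

# usage:
# install_cuda_toolkit cuda_12.2.1_535.86.10_linux.run && nvcc --version
```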

2.2 Installing cuDNN

First check the cuDNN version that ships with the system:

root@XXX:~/autodl-tmp/files# ldconfig -v |grep cudnn

libcudnn_ops_train.so.8 -> libcudnn_ops_train.so.8.6.0

libcudnn_ops_infer.so.8 -> libcudnn_ops_infer.so.8.6.0

libcudnn_cnn_train.so.8 -> libcudnn_cnn_train.so.8.6.0

libcudnn_cnn_infer.so.8 -> libcudnn_cnn_infer.so.8.6.0

libcudnn_adv_train.so.8 -> libcudnn_adv_train.so.8.6.0

libcudnn_adv_infer.so.8 -> libcudnn_adv_infer.so.8.6.0

libcudnn.so.8 -> libcudnn.so.8.6.0

The installed version (8.6.0) does not match the required 8.9.4.
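
The same comparison can be scripted by pulling the version out of the `ldconfig` output. This is a sketch only: `cudnn_version` is a hypothetical helper, and it assumes the `libcudnn.so.N -> libcudnn.so.X.Y.Z` line format shown in the output above.

```shell
#!/bin/sh
# cudnn_version: read `ldconfig -v` style lines on stdin and print the
# full version of the main libcudnn library (e.g. 8.6.0).
cudnn_version() {
    sed -n 's/^[[:space:]]*libcudnn\.so\.[0-9][0-9]* -> libcudnn\.so\.\([0-9][0-9.]*\)$/\1/p' |
        head -n 1
}

# usage:
# required=8.9.4
# found=$(ldconfig -v 2>/dev/null | cudnn_version)
# [ "$found" = "$required" ] || echo "cuDNN is ${found:-missing}, need $required"
```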

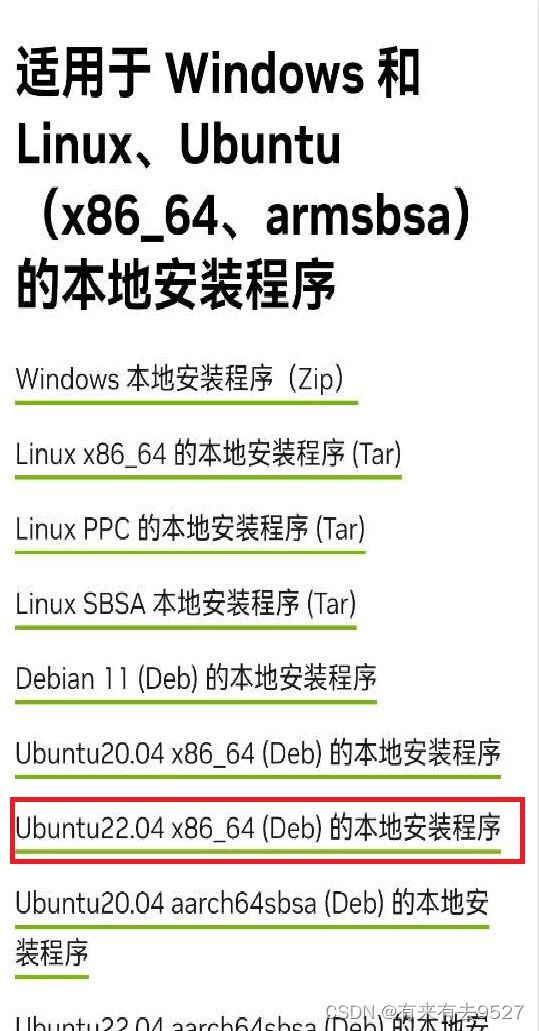

The system cuDNN was installed from a deb package, so download the deb package from NVIDIA and install it; this overwrites the old version directly.

2.3 Installing TensorRT

root@XXX:~/autodl-tmp/files# wget https://developer.nvidia.com/downloads/compute/machine-learning/tensorrt/secure/9.1.0/tars/tensorrt-9.1.0.4.linux.x86_64-gnu.cuda-12.2.tar.gz

root@XXX:~/autodl-tmp/files# tar -xvzf tensorrt-9.1.0.4.linux.x86_64-gnu.cuda-12.2.tar.gz -C /usr/local/

root@XXX:~/autodl-tmp/files# mv /usr/local/TensorRT-9.1.0.4/ /usr/local/tensorrt

2.4 Setting environment variables

root@XXX:~/autodl-tmp/files# echo 'export LD_LIBRARY_PATH=/usr/local/cuda/lib64/:${LD_LIBRARY_PATH}' >> ~/.bashrc

root@XXX:~/autodl-tmp/files# echo "/usr/local/tensorrt/lib" > /etc/ld.so.conf.d/tensorrt.conf

root@XXX:~/autodl-tmp/files# echo 'export LD_LIBRARY_PATH=${LD_LIBRARY_PATH}:/usr/local/tensorrt/lib' >> ~/.bashrc

root@XXX:~/autodl-tmp/files# source ~/.bashrc && ldconfig
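
Appending with `echo ... >> ~/.bashrc` adds another copy of the line every time the step is repeated. A minimal sketch of an idempotent variant follows; `append_once` is a hypothetical helper, and the single quotes in the usage line keep `${LD_LIBRARY_PATH}` unexpanded until `~/.bashrc` is actually sourced.

```shell
#!/bin/sh
# append_once FILE LINE
# Append LINE to FILE unless an identical line is already present.
append_once() {
    file="$1"
    line="$2"
    touch "$file"
    # -x: match the whole line, -F: treat the pattern as a fixed string
    if ! grep -qxF -- "$line" "$file"; then
        printf '%s\n' "$line" >> "$file"
    fi
}

# usage:
# append_once ~/.bashrc 'export LD_LIBRARY_PATH=/usr/local/cuda/lib64:/usr/local/tensorrt/lib:${LD_LIBRARY_PATH}'
```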

3. Compiling TRT-LLM

For how to enable AutoDL's academic resource acceleration, see:

https://www.autodl.com/docs/network_turbo/

3.1 Downloading the TRT-LLM source

root@xxx:~/autodl-tmp/files# apt update

root@xxx:~/autodl-tmp/files# apt install git-lfs

root@xxx:~/autodl-tmp/files# source /etc/network_turbo

设置成功 (setup succeeded)

root@xxx:~/autodl-tmp/files# git clone --recursive https://github.com/NVIDIA/TensorRT-LLM.git

# the download is very slow; alternatively, upload a zip archive that has already been downloaded

root@xxx:~/autodl-tmp/files# unzip TensorRT-LLM-main.zip
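
One caveat with the zip route: an archive downloaded from GitHub does not contain the contents of git submodules, which `git clone --recursive` would have fetched. The sketch below lists empty subdirectories so that missing submodules show up before the build does; `list_empty_dirs` is a hypothetical helper, and the `3rdparty` directory in the usage line is an assumption about where this repository keeps its submodules.

```shell
#!/bin/sh
# list_empty_dirs PARENT
# Print every direct subdirectory of PARENT that contains no entries.
list_empty_dirs() {
    for d in "$1"/*/; do
        [ -d "$d" ] || continue          # glob did not match anything
        if [ -z "$(ls -A "$d")" ]; then
            echo "${d%/}"                # strip the trailing slash
        fi
    done
}

# usage (directory name is an assumption):
# list_empty_dirs TensorRT-LLM-main/3rdparty
```

Any directory it prints would need its sources downloaded separately and unpacked in place.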

3.2 Installing Python dependencies

root@xxx:~/autodl-tmp/files# apt-get install -y --no-install-recommends gdb git-lfs python3-dev python-is-python3 libffi-dev

root@xxx:~/autodl-tmp/files# apt-get install -y --no-install-recommends openmpi-bin libopenmpi-dev

# create a new conda environment

root@xxx:~/autodl-tmp/files# conda create -n trt-llm python=3.10

root@xxx:~/autodl-tmp/files# source activate trt-llm

(trt-llm) root@xxx:~/autodl-tmp/workspace# cd TensorRT-LLM-main/docker/common/

(trt-llm) root@xxx:~/autodl-tmp/workspace/TensorRT-LLM-main/docker/common# sh install_polygraphy.sh

+ RELEASE_URL_PG=https://developer.nvidia.com/downloads/compute/machine-learning/tensorrt/secure/9.0.1/tars/polygraphy-0.48.1-py2.py3-none-any.whl

+ pip uninstall -y polygraphy

WARNING: Skipping polygraphy as it is not installed.

WARNING: Running pip as the 'root' user can result in broken permissions and conflicting behaviour with the system package manager. It is recommended to use a virtual environment instead: https://pip.pypa.io/warnings/venv

+ pip install https://developer.nvidia.com/downloads/compute/machine-learning/tensorrt/secure/9.0.1/tars/polygraphy-0.48.1-py2.py3-none-any.whl

Looking in indexes: http://mirrors.aliyun.com/pypi/simple

Collecting polygraphy==0.48.1

Downloading https://developer.nvidia.com/downloads/compute/machine-learning/tensorrt/secure/9.0.1/tars/polygraphy-0.48.1-py2.py3-none-any.whl (311 kB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 312.0/312.0 kB 6.2 MB/s eta 0:00:00

Installing collected packages: polygraphy

Successfully installed polygraphy-0.48.1

WARNING: Running pip as the 'root' user can result in broken permissions and conflicting behaviour with the system package manager. It is recommended to use a virtual environment instead: https://pip.pypa.io/warnings/venv

# installing mpi4py with pip fails with an error here, so install it through conda

conda install mpi4py

#install tensorrt-python

(trt-llm) root@xxx:# cd /usr/local/tensorrt/python

(trt-llm) root@xxx:# pip install tensorrt-9.1.0.post12.dev4-cp310-none-linux_x86_64.whl

(trt-llm) root@xxx:# pip install tensorrt_dispatch-9.1.0.post12.dev4-cp310-none-linux_x86_64.whl

(trt-llm) root@xxx:# pip install tensorrt_lean-9.1.0.post12.dev4-cp310-none-linux_x86_64.whl

3.3 Building TRT-LLM

Remember to switch the instance back to full-resource mode (with GPU) before building.

(trt-llm) root@xxx:~/autodl-tmp/workspace/TensorRT-LLM-main# python scripts/build_wheel.py --trt_root /usr/local/tensorrt

# the build is slow; long stretches with no console output are normal

3.4 Installing the whl file

pip install tensorrt_llm-0.5.0-py3-none-any.whl && rm tensorrt_llm-0.5.0-py3-none-any.whl

pip list | grep tensorrt-llm

python -c "import tensorrt_llm; print(tensorrt_llm._utils.trt_version())"
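
The `pip install` line above hard-codes the wheel file name, which changes with the TRT-LLM version. A small sketch that picks up whatever wheel the build produced; `newest_wheel` is a hypothetical helper, and the `build` directory in the usage line is an assumption about where `build_wheel.py` writes its output.

```shell
#!/bin/sh
# newest_wheel DIR
# Print the most recently modified tensorrt_llm wheel in DIR (empty if none).
newest_wheel() {
    ls -t "$1"/tensorrt_llm-*.whl 2>/dev/null | head -n 1
}

# usage (output directory is an assumption):
# whl=$(newest_wheel build)
# [ -n "$whl" ] && pip install "$whl"
```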

4. Verification

4.1 Running a test file

(trt-llm) root@xxx:~/autodl-tmp/files/TensorRT-LLM/tests# python test_kv_cache_manager.py

......

----------------------------------------------------------------------

Ran 6 tests in 0.388s

OK

4.2 Testing gpt2-medium

git clone https://huggingface.co/gpt2-medium

The model is saved in /root/autodl-tmp/models/gpt/

For the steps, follow the README in the /root/autodl-tmp/files/TensorRT-LLM/examples/gpt directory.

(trt-llm) root@xxx:~/autodl-tmp/files/TensorRT-LLM/examples/gpt# python3 hf_gpt_convert.py -i gpt2 -o ./gpt2-trt --tensor-parallelism 1 --storage-type float16

(trt-llm) root@xxx:~/autodl-tmp/files/TensorRT-LLM/examples/gpt# python3 build.py --model_dir=./gpt2-trt/1-gpu --use_gpt_attention_plugin --remove_input_padding

(trt-llm) root@xxx:~/autodl-tmp/files/TensorRT-LLM/examples/gpt# python3 run.py --max_output_len=16

Input: "Born in north-east France, Soyer trained as a"

Output: " chef and a cook at the local restaurant, La Boulangerie. He"