Getting Started with Reinforcement Learning (work in progress...)

Without further ado, this post records how I am learning the basics of reinforcement learning.

Environment 1: Taxi-v3

Environment description:

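The Taxi environment is a 5×5 grid with 4 possible destinations and 5 possible passenger locations (the 4 pick-up points plus "inside the taxi"). The total number of states is therefore (taxi positions) × (passenger locations) × (destinations) = 25 × 5 × 4 = 500.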

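You can also print the size of the state space directly, which confirms that it is 500:

```python
# env is the Taxi-v3 environment, e.g. env = gym.make("Taxi-v3")
print('Number of states: {}'.format(env.observation_space.n))
```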
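To see where the 500 comes from, here is a small sketch that packs a state tuple into a single index and unpacks it again. The names `encode`/`decode` and the exact ordering are my assumptions for illustration; gym's `TaxiEnv` has an `encode` method that, as far as I know, uses the same row, column, passenger, destination ordering, but check your gym version before relying on specific indices.

```python
# Sketch: pack a Taxi-v3 state (taxi_row, taxi_col, pass_loc, dest_idx) into
# one integer. The ordering is an assumption modelled on gym's TaxiEnv.encode.
N_ROWS, N_COLS = 5, 5
N_PASSENGER_LOCS = 5   # 4 pick-up points + "inside the taxi"
N_DESTINATIONS = 4


def encode(taxi_row: int, taxi_col: int, pass_loc: int, dest_idx: int) -> int:
    """Map a state tuple to an index in 0..499 (mixed-radix number)."""
    index = taxi_row
    index = index * N_COLS + taxi_col
    index = index * N_PASSENGER_LOCS + pass_loc
    index = index * N_DESTINATIONS + dest_idx
    return index


def decode(index: int) -> tuple:
    """Invert encode() by peeling off the digits from the right."""
    index, dest_idx = divmod(index, N_DESTINATIONS)
    index, pass_loc = divmod(index, N_PASSENGER_LOCS)
    taxi_row, taxi_col = divmod(index, N_COLS)
    return taxi_row, taxi_col, pass_loc, dest_idx


n_states = N_ROWS * N_COLS * N_PASSENGER_LOCS * N_DESTINATIONS
print(n_states)            # 500
print(encode(4, 4, 4, 3))  # 499, the largest index
print(decode(499))         # (4, 4, 4, 3)
```

Because every state maps to a distinct row index, the Q "table" can simply be a 500-row NumPy array indexed by the observation.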

The reward scheme of this environment is:

+20 for delivering the passenger to the correct destination, -1 for every step, and -10 for an illegal pick-up or drop-off action.
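As a quick arithmetic check of these rules (and of why the score later stays below 20), the helper below computes the return of an episode that ends with a correct drop-off. It is a hypothetical function, not part of gym, and it assumes every step earns exactly one of +20 (the final correct drop-off), -10 (an illegal pick-up/drop-off) or -1 (anything else).

```python
def taxi_episode_return(total_steps: int, illegal_actions: int = 0) -> int:
    """Hypothetical helper: total reward of a Taxi-v3 episode that ends with
    a correct drop-off, under the reward rules described above."""
    if total_steps < 1 or not 0 <= illegal_actions <= total_steps - 1:
        raise ValueError("need at least the final drop-off step")
    # Every step except the final drop-off and the illegal ones costs -1
    ordinary_steps = total_steps - 1 - illegal_actions
    return 20 - ordinary_steps - 10 * illegal_actions


print(taxi_episode_return(12))     # 9: 11 ordinary steps, then +20
print(taxi_episode_return(12, 1))  # 0: one illegal action costs 10 more
```

Since the taxi always needs some moves plus a pick-up before the drop-off, the ordinary-step penalty is never zero in practice.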

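In every state the taxi can choose from 6 actions: pick up, drop off, and a move in each of the 4 directions. We can therefore initialize the "table" as a 500×6 matrix and update it with Q-learning or Sarsa to find a solution.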
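Q-learning and Sarsa differ only in the target they bootstrap from: Q-learning uses the best action in the next state, Sarsa uses the action the policy actually picks there. Below is a minimal NumPy sketch of both rules on a 500×6 table, using the learning rate 0.1 and discount 0.9 that the solution code in this post uses. The function names and the toy transition are illustrative only; the action numbering in the comment follows the gym Taxi-v3 documentation.

```python
import numpy as np

ALPHA, GAMMA = 0.1, 0.9  # learning rate and discount, as in the solution code
# Taxi-v3 actions (gym docs): 0 south, 1 north, 2 east, 3 west, 4 pick-up, 5 drop-off


def q_learning_update(Q, s, a, r, s_next, done):
    """Off-policy: bootstrap from the best action in s_next."""
    target = r if done else r + GAMMA * np.max(Q[s_next])
    Q[s, a] += ALPHA * (target - Q[s, a])


def sarsa_update(Q, s, a, r, s_next, a_next, done):
    """On-policy: bootstrap from the action actually chosen in s_next."""
    target = r if done else r + GAMMA * Q[s_next, a_next]
    Q[s, a] += ALPHA * (target - Q[s, a])


# Toy transition: state 0, action 3 (west), reward -1, next state 1,
# where the epsilon-greedy policy happened to choose action 0 next.
Q1 = np.zeros((500, 6))
Q1[1] = [0, 2, 0, 0, 0, 0]
Q2 = Q1.copy()
q_learning_update(Q1, 0, 3, -1, 1, done=False)   # target = -1 + 0.9 * 2
sarsa_update(Q2, 0, 3, -1, 1, 0, done=False)     # target = -1 + 0.9 * 0
print(Q1[0, 3], Q2[0, 3])  # approximately 0.08 and -0.1
```

Note the `+=`: each update only moves the old estimate a fraction `ALPHA` of the way toward the target.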

The tabular implementations of Q_learning and Sarsa are covered in my first post, if you are interested:

Link:

Q_learning tabular method

Solution code:

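```python
# %%time  <- Jupyter cell magic that timed this cell; it must be the first
#            line of a notebook cell and is not valid in a plain .py script
import numpy as np
import gym

# Written for the classic gym API (gym < 0.26): env.reset() returns only the
# observation and env.step() returns (obs, reward, done, info).


def restore(npy_file='./q_table.npy'):
    """Load a previously saved Q table."""
    Q = np.load(npy_file)
    print(npy_file + ' loaded.')
    return Q


def save(Q):
    """Save the Q table to disk."""
    npy_file = './q_table.npy'
    np.save(npy_file, Q)
    print(npy_file + ' saved.')


class Q_table(object):
    def __init__(self):
        # Initialize the Q table: 500 states x 6 actions
        self.table = np.zeros((500, 6))

    def sample(self, obs):
        # Epsilon-greedy with epsilon = 0.1
        if np.random.uniform() < 0.1:
            return np.random.randint(6)
        else:
            # Choose the highest-valued action. Several actions may share the
            # maximum, so use numpy's where and choice to pick one at random.
            action_max = np.max(self.table[obs, :])
            choice_action = np.where(action_max == self.table[obs, :])[0]
            return np.random.choice(choice_action)

    def learn(self, obs, action, reward, n_obs, n_action, done):
        Q_ = self.table[obs][action]
        if done:
            target_Q = reward
        else:
            target_Q = reward + 0.9 * np.max(self.table[n_obs, :])  # Q_learning
            # target_Q = reward + 0.9 * self.table[n_obs][n_action]  # Sarsa
        # Move Q(s, a) toward the target with learning rate 0.1
        self.table[obs][action] += 0.1 * (target_Q - Q_)


def run(env, agent, times):
    statement = env.reset()
    action = agent.sample(statement)
    score = 0
    steps = 0
    while True:
        # Keep interacting with the environment until the episode ends
        next_statement, reward, done, _ = env.step(action)
        next_action = agent.sample(next_statement)
        agent.learn(statement, action, reward, next_statement, next_action, done)
        statement = next_statement
        action = next_action
        score += reward
        steps += 1
        if done:
            break
    if times % 50 == 0:
        print("The score is", score)


# .env unwraps the TimeLimit wrapper, so episodes are not cut off at 200 steps
env = gym.make("Taxi-v3").env
agent = Q_table()
for i in range(4000):
    run(env, agent, i)
save(agent.table)
```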
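The random tie-breaking in `sample` matters early in training, when a whole row of the table is still zero: `np.argmax` always returns the first maximal index (action 0), whereas choosing uniformly among the tied actions spreads the choices. Here is a standalone sketch of the same epsilon-greedy rule; `epsilon_greedy` is a hypothetical name, and it uses NumPy's `default_rng` Generator instead of the global `np.random` functions.

```python
import numpy as np


def epsilon_greedy(q_row, epsilon, rng):
    """Explore with probability epsilon; otherwise pick uniformly among the
    actions tied for the maximum value (same rule as Q_table.sample)."""
    if rng.uniform() < epsilon:
        return int(rng.integers(len(q_row)))
    best = np.flatnonzero(q_row == np.max(q_row))  # indices of all maxima
    return int(rng.choice(best))


rng = np.random.default_rng(0)
row = np.array([0.0, 3.0, 3.0, -1.0, 0.0, 0.0])  # actions 1 and 2 tie
picks = [epsilon_greedy(row, 0.0, rng) for _ in range(1000)]
print(sorted(set(picks)))  # both tied actions get chosen: [1, 2]
```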

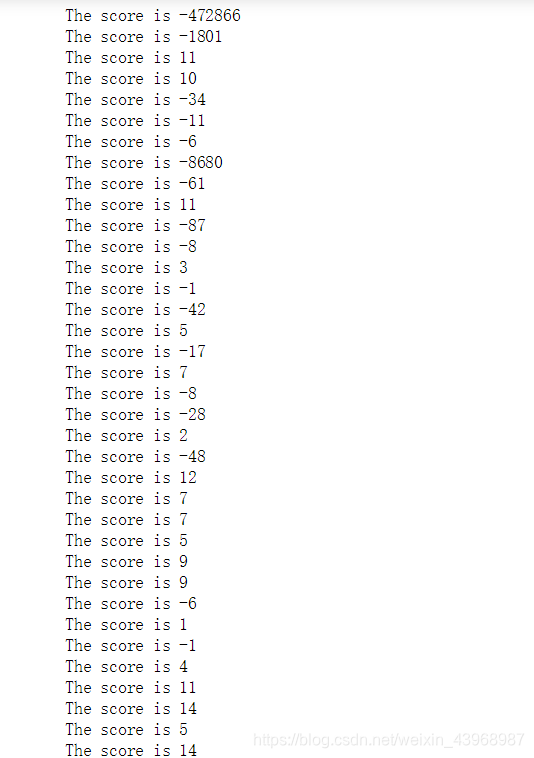

代码运行截图:

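The code trains for 4000 episodes; the screenshot shows the results at roughly episode 1750. All 4000 episodes take about 40 seconds. (The maximum score in the Taxi environment is below 20, and even scores like 19 or 18 are hard to reach.)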

Environment 2: MountainCar

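This is the classic mountain-car environment, so I won't go into it here. The environment details and one DQN implementation are covered in the post linked below; this post mainly presents a different implementation.

Link: MountainCar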

The implementation details of the algorithm are shown in the figure below:

The code is as follows:

```python
import random
from collections import deque

import gym
import numpy as np
import tensorflow as tf
from tensorflow.keras import layers, optimizers

# Written for the classic gym API (gym < 0.26): env.reset() returns only the
# observation and env.step() returns (obs, reward, done, info).


class DQN():
    def __init__(self, actor_lr):
        self.name = "DQN"
        self.framework = "TensorFlow"
        self.steps = 0
        self.var = 1e-1   # exploration rate epsilon, starts at 0.1
        self.e = 1e-5     # epsilon decrement per action taken
        self.actor_lr = actor_lr
        self.Actor = self.actor()    # online Q network
        self.Target = self.actor()   # target Q network
        self.optimizer_actor = optimizers.Adam(learning_rate=self.actor_lr)
        self.replay_memory = deque(maxlen=20000)

    def actor(self):
        # Maps a state (position, velocity) to one Q value per action (3 actions)
        model = tf.keras.Sequential([
            layers.Dense(128, activation='relu', input_shape=(2,)),
            layers.Dense(128, activation='relu'),
            layers.Dense(3, activation=None)
        ])
        return model

    def add(self, obs, action, reward, n_s, done):
        # Reward shaping: bonus proportional to the position once the car
        # is past position 0.2 on the right-hand slope
        if n_s[0] > 0.2:
            reward += n_s[0] * 5
        self.replay_memory.append((obs, action, reward, n_s, done))

    def sample(self, obs):
        # Epsilon-greedy with a linearly decaying epsilon, floored at 0
        self.var = max(self.var - self.e, 0.0)
        if np.random.uniform() <= self.var:
            return np.random.randint(3)
        return int(np.argmax(self.Actor(obs)))

    def data(self):
        # Draw a random mini-batch of 32 transitions from the replay memory
        batch = random.sample(self.replay_memory, 32)
        Obs, Action, Reward, N_s, Done = [], [], [], [], []
        for (obs, action, reward, n_s, done) in batch:
            Obs.append(obs)
            Action.append(action)
            Reward.append(reward)
            N_s.append(n_s)
            Done.append(done)
        return (np.array(Obs).astype("float32"), np.array(Action).astype("int64"),
                np.array(Reward).astype("float32"), np.array(N_s).astype("float32"),
                np.array(Done).astype("float32"))

    def learn(self):
        # Copy the online weights into the target network every 210 steps
        if self.steps % 210 == 0:
            self.Target.set_weights(self.Actor.get_weights())
        # Train every 5 steps once at least 2000 transitions are stored
        if self.steps % 5 == 0 and len(self.replay_memory) >= 2000:
            Obs, Action, Reward, N_s, Done = self.data()
            # TD target from the target network: r + (1 - done) * 0.99 * max Q'
            target_q = self.Target(N_s)
            Max = tf.reduce_max(target_q, axis=1)
            terminal = tf.cast(Done, dtype='float32')
            target_Q = Reward + (1.0 - terminal) * 0.99 * Max
            with tf.GradientTape() as tape:
                q = self.Actor(Obs)
                # Keep only the Q value of the action actually taken
                one_hot = tf.one_hot(Action, 3) * 1.0
                pred_Q = tf.reduce_sum(one_hot * q, axis=1)
                loss = tf.reduce_mean(tf.square(pred_Q - target_Q))
            grads = tape.gradient(loss, self.Actor.trainable_variables)
            self.optimizer_actor.apply_gradients(zip(grads, self.Actor.trainable_variables))


env = gym.make("MountainCar-v0")
agent = DQN(0.001)
Scores = []
for times in range(2000):
    s = env.reset()
    Score = 0
    while True:
        agent.steps += 1
        a = agent.sample(s.reshape(1, 2))   # batch of one state
        next_s, reward, done, _ = env.step(a)
        agent.add(s, a, reward, next_s, done)
        agent.learn()
        Score += reward
        s = next_s
        # env.render()
        if done:
            Scores.append(Score)
            print('episode:', times, 'score:', Score, 'max:', np.max(Scores))
            break
```
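Stripped of TensorFlow, the core of `learn()` is two small formulas: the target y = r + (1 - done) · γ · max_a' Q_target(s', a'), and the mean squared error on the Q value of the action actually taken. The NumPy sketch below reproduces both on a made-up batch so the shapes and arithmetic can be checked by hand. It is not a replacement for the TensorFlow version; the function names and numbers are illustrative, and γ = 0.99 matches the code above.

```python
import numpy as np

GAMMA = 0.99  # discount used in the DQN code above


def dqn_targets(rewards, next_q_target, dones, gamma=GAMMA):
    """y = r + (1 - done) * gamma * max_a' Q_target(s', a')."""
    return rewards + (1.0 - dones) * gamma * next_q_target.max(axis=1)


def dqn_loss(q_online, actions, targets):
    """Mean squared TD error on the taken actions, using a one-hot mask
    like tf.one_hot(Action, 3) * q in learn()."""
    one_hot = np.eye(q_online.shape[1])[actions]
    pred = (one_hot * q_online).sum(axis=1)
    return np.mean((pred - targets) ** 2)


# Toy batch of 2 transitions; MountainCar-v0 actions per the gym docs:
# 0 push left, 1 no push, 2 push right
rewards = np.array([-1.0, -1.0])
dones = np.array([0.0, 1.0])
next_q_target = np.array([[-5.0, -4.0, -6.0], [-3.0, -2.0, -1.0]])
q_online = np.array([[-4.0, -5.0, -4.5], [-1.0, -1.5, -2.0]])
actions = np.array([0, 2])

y = dqn_targets(rewards, next_q_target, dones)
print(y)                               # approximately [-4.96, -1.0]
print(dqn_loss(q_online, actions, y))  # approximately 0.9608
```

One caveat worth knowing: with the classic gym API, `done` is also True when MountainCar-v0 reaches its 200-step time limit, so the code above treats a timeout the same as reaching the goal when it zeroes out the bootstrap term.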