Deeplearning

Numpy

Deep Learning

Basic

- 神经网络:

-

监督学习:1个x对应1个y;

-

Sigmoid : 激活函数

s i g m o i d = 1 1 + e − x sigmoid=\frac{1}{1+e^{-x}} sigmoid=1+e−x1 -

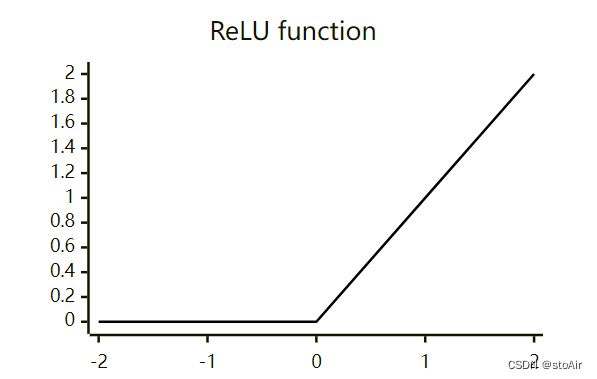

ReLU : 线性整流函数;
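As a quick illustration of the two activation functions above, here is a minimal NumPy sketch. The function names `sigmoid` and `relu` and the sample input are chosen for illustration only; ReLU is written in its usual form max(0, x).

```python
import numpy as np

def sigmoid(x):
    """Logistic sigmoid 1 / (1 + e^{-x}), applied elementwise."""
    return 1.0 / (1.0 + np.exp(-x))

def relu(x):
    """Rectified linear unit max(0, x), applied elementwise."""
    return np.maximum(0.0, x)

x = np.array([-2.0, 0.0, 2.0])   # hypothetical sample input
s = sigmoid(x)                   # every value lies strictly between 0 and 1
r = relu(x)                      # negative inputs are clipped to 0
```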

Logistic Regression

Used for binary classification: map an input x to a label y that is either 0 or 1.

Notation

$$
\begin{gathered}
(x,y),\quad x\in\mathbb{R}^{n_x},\quad y\in\{0,1\}\\
m=m_{train}\ \text{(training examples)},\quad m_{test}\ \text{(test examples)}\\
M:\{(x^{(1)},y^{(1)}),(x^{(2)},y^{(2)}),\dots,(x^{(m)},y^{(m)})\}\\
X=\left[\begin{matrix}x^{(1)} & x^{(2)} & \cdots & x^{(m)}\end{matrix}\right]\leftarrow n_x\times m\\
\hat{y}=P(y=1\mid x),\quad\hat{y}=\sigma(w^Tx+b),\qquad w\in\mathbb{R}^{n_x},\quad b\in\mathbb{R}\\
\sigma(z)=\frac{1}{1+e^{-z}}
\end{gathered}
$$
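To make the shapes in this notation concrete, the sketch below computes the prediction for every column of X at once. The sizes `n_x = 3` and `m = 4`, the random data, and the zero initial parameters are assumptions made for the example.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

n_x, m = 3, 4                        # hypothetical feature count and number of examples
rng = np.random.default_rng(0)
X = rng.standard_normal((n_x, m))    # each column is one example x^(i)
w = np.zeros((n_x, 1))               # weights as a column vector in R^{n_x}
b = 0.0                              # scalar bias

y_hat = sigmoid(np.dot(w.T, X) + b)  # shape (1, m): one probability per example
```

With all parameters at zero, every prediction is sigmoid(0), so the model starts out maximally uncertain.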

Loss function

Single example

The squared error is one candidate loss; the rest of these notes use the cross-entropy loss on the second line.

$$
\begin{gathered}
\text{Loss function: }\mathcal{L}(\hat{y},y)=\frac{1}{2}(\hat{y}-y)^2\\
\mathcal{L}(\hat{y},y)=-\left(y\log(\hat{y})+(1-y)\log(1-\hat{y})\right)\\
y=1:\ \mathcal{L}(\hat{y},y)=-\log\hat{y}\quad\log\hat{y}\leftarrow\text{larger}\quad\hat{y}\leftarrow\text{larger}\\
y=0:\ \mathcal{L}(\hat{y},y)=-\log(1-\hat{y})\quad\log(1-\hat{y})\leftarrow\text{larger}\quad\hat{y}\leftarrow\text{smaller}
\end{gathered}
$$
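The two cases y = 1 and y = 0 can be checked numerically. This is a small sketch; the helper names and the probabilities 0.9 and 0.1 are picked for illustration.

```python
import numpy as np

def cross_entropy(y_hat, y):
    """Cross-entropy loss for a single example."""
    return -(y * np.log(y_hat) + (1 - y) * np.log(1 - y_hat))

def squared_error(y_hat, y):
    """Squared-error loss for a single example, shown for comparison."""
    return 0.5 * (y_hat - y) ** 2

good = cross_entropy(0.9, 1)   # confident and correct: small loss
bad = cross_entropy(0.1, 1)    # confident and wrong: large loss
mirror = cross_entropy(0.1, 0) # y = 0 case: a small y_hat gives a small loss
```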

cost function

$$
\mathcal{J}(w,b)=\frac{1}{m}\sum_{i=1}^{m}\mathcal{L}(\hat{y}^{(i)},y^{(i)})
$$
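The cost is just the average of the per-example losses. A minimal sketch, assuming predictions `A` and labels `Y` are stored as (1, m) row vectors; the three sample values are made up.

```python
import numpy as np

def cost(A, Y):
    """Average cross-entropy over the m examples stored as columns."""
    m = Y.shape[1]
    losses = -(Y * np.log(A) + (1 - Y) * np.log(1 - A))
    return float(np.sum(losses) / m)

Y = np.array([[1.0, 0.0, 1.0]])   # hypothetical labels
A = np.array([[0.9, 0.2, 0.6]])   # hypothetical predictions
J = cost(A, Y)
```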

Gradient Descent

Find w, b that minimize J(w, b).

Repeat:

$$
\begin{gathered}
w:=w-\alpha\frac{\partial\mathcal{J}(w,b)}{\partial w}\quad(dw)\\
b:=b-\alpha\frac{\partial\mathcal{J}(w,b)}{\partial b}\quad(db)
\end{gathered}
$$
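The update rule is easiest to see on a one-dimensional toy problem. The sketch below is not logistic regression: it assumes a stand-in cost J(w) = (w - 3)^2 and a learning rate of 0.1, both chosen only to show the repeated update converging.

```python
def grad_J(w):
    """Derivative of the toy cost J(w) = (w - 3)^2."""
    return 2.0 * (w - 3.0)

alpha = 0.1   # learning rate (assumed value)
w = 0.0       # starting point
for _ in range(200):
    w = w - alpha * grad_J(w)   # the same update rule, applied to one parameter
```

Each step shrinks the distance to the minimizer w = 3 by a constant factor of 0.8, so w ends up very close to 3.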

Computation Graph

example:

$$
J=3(a+bc)
$$
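A forward and backward pass through this graph can be written out by hand. The intermediate names `u` and `v` and the input values a = 5, b = 3, c = 2 are assumptions for the example.

```python
# Forward pass: evaluate the graph left to right.
a, b, c = 5.0, 3.0, 2.0
u = b * c        # u = bc
v = a + u        # v = a + u
J = 3.0 * v      # J = 3v

# Backward pass: apply the chain rule right to left.
dJ_dv = 3.0
dJ_da = dJ_dv * 1.0   # dv/da = 1
dJ_du = dJ_dv * 1.0   # dv/du = 1
dJ_db = dJ_du * c     # du/db = c
dJ_dc = dJ_du * b     # du/dc = b
```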

Gradient descent on one example (computation graph):

recap:

$$
\begin{gathered}
z=w^Tx+b\\
\hat{y}=a=\sigma(z)=\frac{1}{1+e^{-z}}\\
\mathcal{L}(a,y)=-\left(y\log(a)+(1-y)\log(1-a)\right)
\end{gathered}
$$

The graph:

$$
\begin{gathered}
'da'=\frac{d\mathcal{L}(a,y)}{da}=-\frac{y}{a}+\frac{1-y}{1-a}\\
'dz'=\frac{d\mathcal{L}(a,y)}{dz}=\frac{d\mathcal{L}}{da}\cdot\frac{da}{dz}=a-y\\
'dw_1'=x_1\cdot dz\quad\dots\\
w_1:=w_1-\alpha\,dw_1\quad\dots
\end{gathered}
$$
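One way to gain confidence in the result dz = a - y is to compare the analytic gradient with a finite-difference estimate. This is a sketch with made-up values for `w`, `b`, `x`, and `y`; the helper `loss` is introduced only for the check.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def loss(w, b, x, y):
    """Cross-entropy loss of one example as a function of the parameters."""
    a = sigmoid(np.dot(w, x) + b)
    return -(y * np.log(a) + (1 - y) * np.log(1 - a))

w = np.array([0.5, -0.3])   # hypothetical parameters
b = 0.1
x = np.array([1.0, 2.0])    # hypothetical example
y = 1.0

a = sigmoid(np.dot(w, x) + b)
dz = a - y                  # analytic dL/dz
dw = x * dz                 # dw_j = x_j * dz
db = dz

# Central finite difference for dL/dw_1 as an independent check.
eps = 1e-6
e1 = np.array([eps, 0.0])
dw1_numeric = (loss(w + e1, b, x, y) - loss(w - e1, b, x, y)) / (2 * eps)
```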

Gradient descent on m examples (computation graph):

recap:

$$
\mathcal{J}(w,b)=\frac{1}{m}\sum_{i=1}^m\mathcal{L}(a^{(i)},y^{(i)})
$$

The graph (two iterations):

$$
\begin{gathered}
\frac{\partial}{\partial w_1}\mathcal{J}(w,b)=\frac{1}{m}\sum_{i=1}^m\frac{\partial}{\partial w_1}\mathcal{L}(a^{(i)},y^{(i)})\\
\mathcal{J}=0;\ dw_1=0;\ dw_2=0;\ db=0\\
\text{For}\quad i=1\quad\text{to}\quad m:\{\\
a^{(i)}=\sigma(w^Tx^{(i)}+b)\\
\mathcal{J}\mathrel{+}=-\left[y^{(i)}\log a^{(i)}+(1-y^{(i)})\log(1-a^{(i)})\right]\\
dz^{(i)}=a^{(i)}-y^{(i)}\\
dw_1\mathrel{+}=x_1^{(i)}dz^{(i)}\\
dw_2\mathrel{+}=x_2^{(i)}dz^{(i)}\\
db\mathrel{+}=dz^{(i)}\}\\
\mathcal{J}/=m;\ dw_1/=m;\ dw_2/=m;\ db/=m\\
dw_1=\frac{\partial\mathcal{J}}{\partial w_1}\\
w_1:=w_1-\alpha\,dw_1
\end{gathered}
$$
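The loop above translates almost line for line into Python. The sketch below keeps the explicit loops on purpose (over examples and over features) so it can be compared with a vectorized version; the function name `gradients_loop` and the tiny dataset are assumptions.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def gradients_loop(w, b, X, Y):
    """Cost and gradients with explicit loops; X is (n_x, m), Y has length m."""
    n_x, m = X.shape
    J, db = 0.0, 0.0
    dw = np.zeros(n_x)
    for i in range(m):                      # loop over examples
        x_i, y_i = X[:, i], Y[i]
        a_i = sigmoid(np.dot(w, x_i) + b)
        J += -(y_i * np.log(a_i) + (1 - y_i) * np.log(1 - a_i))
        dz_i = a_i - y_i
        for j in range(n_x):                # loop over features
            dw[j] += x_i[j] * dz_i
        db += dz_i
    return J / m, dw / m, db / m

X = np.array([[1.0, 2.0, -1.0],
              [0.5, -1.5, 2.0]])            # hypothetical data, m = 3
Y = np.array([1.0, 0.0, 1.0])
J, dw, db = gradients_loop(np.zeros(2), 0.0, X, Y)
```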

Vectorization

vectorized:

$$
z=\text{np.dot}(w,x)+b
$$

logistic regression derivatives:

change:

$$
\begin{gathered}
dw_1=0,\ dw_2=0\rightarrow dw=\text{np.zeros}((n_x,1))\\
\begin{cases}dw_1\mathrel{+}=x_1^{(i)}dz^{(i)}\\ dw_2\mathrel{+}=x_2^{(i)}dz^{(i)}\end{cases}\rightarrow dw\mathrel{+}=x^{(i)}dz^{(i)}\\
Z=\left(\;\begin{matrix}z^{(1)} & z^{(2)} & \dots & z^{(m)}\end{matrix}\;\right)=w^TX+b\\
A=\sigma(Z)\\
dz=A-Y=\left(\;\begin{matrix}a^{(1)}-y^{(1)} & a^{(2)}-y^{(2)} & \dots & a^{(m)}-y^{(m)}\end{matrix}\;\right)\\
db=\frac{1}{m}\sum_{i=1}^m dz^{(i)}=\frac{1}{m}\text{np.sum}(dz)\\
dw=\frac{1}{m}X\,dz^T=\frac{1}{m}\left(x^{(1)}dz^{(1)}+x^{(2)}dz^{(2)}+\dots+x^{(m)}dz^{(m)}\right)
\end{gathered}
$$

Implementing:

$$
\begin{gathered}
Z=w^TX+b=\text{np.dot}(w^T,X)+b\\
A=\sigma(Z)\\
dZ=A-Y\\
dw=\frac{1}{m}X\,dZ^T\\
db=\frac{1}{m}\text{np.sum}(dZ)\\
w:=w-\alpha\,dw\\
b:=b-\alpha\,db
\end{gathered}
$$
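Put together, the vectorized steps give a complete training loop with no loop over examples. This is a minimal sketch rather than a tuned implementation: the function name `train`, the learning rate, the iteration count, and the synthetic dataset (label 1 when x1 + x2 > 0) are all assumptions.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def train(X, Y, alpha=0.1, num_iters=1000):
    """Vectorized logistic regression; X is (n_x, m), Y is (1, m)."""
    n_x, m = X.shape
    w = np.zeros((n_x, 1))
    b = 0.0
    for _ in range(num_iters):          # only the iteration loop remains
        Z = np.dot(w.T, X) + b          # (1, m)
        A = sigmoid(Z)
        dZ = A - Y
        dw = np.dot(X, dZ.T) / m        # (n_x, 1)
        db = np.sum(dZ) / m
        w = w - alpha * dw
        b = b - alpha * db
    return w, b

# Synthetic, linearly separable data (assumed for the demo).
rng = np.random.default_rng(0)
X = rng.standard_normal((2, 200))
Y = (X[0:1] + X[1:2] > 0).astype(float)   # shape (1, 200)

w, b = train(X, Y)
predictions = sigmoid(np.dot(w.T, X) + b) > 0.5
accuracy = np.mean(predictions == Y)
```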

broadcasting in python:

$$
\text{np.dot}(w^T,X)+b
$$
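In this expression the product is a (1, m) row vector and b is a scalar, so NumPy broadcasts b across all m entries. The sketch below shows that case and one more, a (2, 1) column added to a (2, 3) matrix; all values are made up.

```python
import numpy as np

w = np.array([[1.0], [2.0]])            # (2, 1)
X = np.array([[1.0, 2.0, 3.0],
              [4.0, 5.0, 6.0]])         # (2, 3)
b = 0.5                                 # scalar

Z = np.dot(w.T, X) + b                  # (1, 3) + scalar: b is added to every entry

col = np.array([[10.0], [20.0]])        # (2, 1)
shifted = X + col                       # the column is repeated across the 3 columns of X
```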

A note on NumPy

$$
\begin{gathered}
\texttt{a = np.random.randn(5)}\quad\text{// wrong}\ \rightarrow\ \texttt{a = a.reshape(5, 1)}\\
\texttt{assert(a.shape == (5, 1))}\\
\texttt{a = np.random.randn(5, 1)}\ \rightarrow\ \text{column vector}
\end{gathered}
$$
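The reason to avoid the shape `(5,)` form is that it is neither a row nor a column vector, so transposing it does nothing. A short sketch of the difference (variable names are illustrative):

```python
import numpy as np

a = np.random.randn(5)            # shape (5,): a rank-1 array
same_shape = a.T.shape            # transpose has no effect: still (5,)
inner = np.dot(a, a.T)            # a single number, not a 5x5 matrix

a_col = a.reshape(5, 1)           # explicit column vector
assert a_col.shape == (5, 1)      # cheap check that documents the intended shape
outer = np.dot(a_col, a_col.T)    # (5, 1) times (1, 5) gives a (5, 5) matrix
```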
