This article analyzes the Sogou search log. The log can be downloaded from:

http://download.labs.sogou.com/dl/sogoulabdown/SogouQ/SogouQ2012.mini.tar.gz

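About the Sogou log:

Extract the archive and inspect the content and format of the Sogou log.

File format: access time\tencrypted user ID\tsearch keyword\trank of the URL in the search results\torder in which the user clicked the URL\tURL clicked by the user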
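The six-field layout above can be sketched as a small parser. This is only an illustration in plain Scala: the `LogRecord` type, its field names, the `parseLine` helper, and the sample line are all hypothetical and do not come from the Sogou data set.

```scala
// Hypothetical record type; field names are illustrative only.
case class LogRecord(
  time: String,
  userId: String,
  keyword: String,
  rank: Int,       // rank of the URL in the search results
  clickOrder: Int, // order in which the user clicked the URL
  url: String
)

// Returns None when a line does not have exactly six tab-separated fields
// or when the rank / click-order fields are not integers.
def parseLine(line: String): Option[LogRecord] =
  line.split("\t", -1) match {
    case Array(time, userId, keyword, rank, click, url) =>
      scala.util.Try(
        LogRecord(time, userId, keyword, rank.trim.toInt, click.trim.toInt, url)
      ).toOption
    case _ => None
  }

// Made-up sample line, for illustration only.
val sample = "20111230000005\tuser0001\tspark\t1\t1\thttp://example.com/"
val parsed = parseLine(sample)
```

Returning an `Option` keeps malformed lines from throwing, so they can be filtered out before any further processing.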

Upload the log to HDFS

hdfs dfs -put /home/hadoop/software/spark-1.2.0-bin-hadoop2.4/data/sogou/SogouQ.mini /user/hadoop/

Check that every log line is well formed

scala> val rdd = sc.textFile("SogouQ.mini");

scala> rdd.count

///Result

2000

scala> val validRDD = rdd.map(_.split("\t")).filter(_.length == 6)

scala> validRDD.count

///Result

2000

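As shown, the file has 2000 lines in total, and after splitting each line on tabs and keeping only the lines with exactly 6 fields, 2000 lines remain. Every log line is therefore correctly formatted.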
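One detail of this check is worth keeping in mind: `split("\t")` drops trailing empty fields, so a line whose last field is empty would have fewer than 6 fields and be filtered out. The sketch below illustrates this with a made-up line in plain Scala; passing a negative limit keeps the trailing empty field.

```scala
// Made-up line whose last field (the URL) is empty.
val line = "t\tu\tk\t1\t1\t"

// Default split: trailing empty strings are removed.
val defaultLength = line.split("\t").length

// Negative limit: trailing empty strings are kept.
val keepEmptyLength = line.split("\t", -1).length
```

For this sample, the default split yields 5 fields and the split with limit `-1` yields 6. In the log analyzed here both approaches give the same count, since all 2000 lines pass the check.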

Count the records whose search rank and click order are both 1

1. Compute the result

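scala> val result = validRDD.filter(_(3).toInt == 1).filter(_(4).toInt == 1)

scala> result.count

///Result

res9: Long = 794

///Note: the statement below, which filters rdd instead of validRDD, does not give the correct result; it returns 0. rdd holds unsplit lines (String), so _(3) is the fourth character of the line and toInt yields its character code, not the value of the fourth field.

scala> val result = rdd.filter(_(3).toInt == 1).filter(_(4).toInt == 1)

scala> result.count

res8: Long = 0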
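The difference between indexing a raw line and indexing its split fields can be reproduced without Spark. The sketch below uses a made-up log line (not taken from the data set) in plain Scala.

```scala
// Made-up log line, for illustration only.
val line = "20111230000005\tuser0001\tspark\t1\t1\thttp://example.com/"

// Indexing a String returns a Char; toInt on a Char gives its character code.
val fourthChar: Char = line(3)
val charCode: Int = fourthChar.toInt

// Indexing the split array returns the fourth field as a String;
// toInt on a String parses it as a number.
val fields: Array[String] = line.split("\t")
val rank: Int = fields(3).toInt
```

Here `charCode` is 49 (the code of the character `'1'`), while `rank` is 1. A digit character never has code 1, which is why the filter on the unsplit lines matches nothing.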

2. Inspect the RDD lineage

scala> result.toDebugString

res10: String =
(1) FilteredRDD[32] at filter at <console>:16 []
 |  FilteredRDD[31] at filter at <console>:16 []
 |  FilteredRDD[28] at filter at <console>:14 []
 |  MappedRDD[27] at map at <console>:14 []
 |  SogouQ.mini MappedRDD[26] at textFile at <console>:12 []
 |  SogouQ.mini HadoopRDD[25] at textFile at <console>:12 []

Sort user IDs by number of queries

1. Compute and save the result

scala> val result = validRDD.map(x => (x(1),1)).reduceByKey(_+_).map(x => (x._2,x._1)).sortByKey(false).map(x => (x._2,x._1))

scala> result.count

///Result

res11: Long = 1186

///Save to HDFS

scala> result.saveAsTextFile("SortedResultByUserID");

2. View the sorted result on HDFS

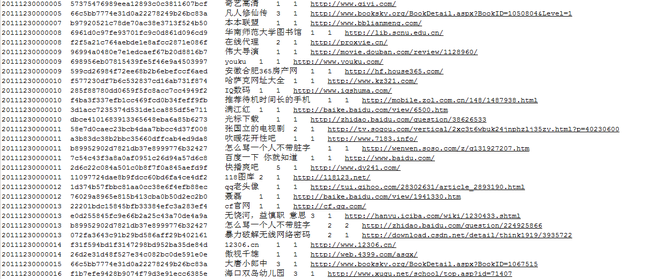

[hadoop@hadoop sogou]$ hdfs dfs -cat /user/hadoop/SortedResultByUserID/part-00000| head -20
(f6492a1da9875f20e01ff8b5804dcc35,14)
(d3034ac9911c30d7cf9312591ecf990e,11)
(e7579c6b6b9c0ea40ecfa0f425fc765a,11)
(ec0363079f36254b12a5e30bdc070125,10)
(5c853e91940c5eade7455e4a289722d6,10)
(2a36742c996300d664652d9092e8a554,9)
(439fa809ba818cee624cc8b6e883913a,9)
(828f91e6717213a65c97b694e6279201,9)
(45c304b5f2dd99182451a02685252312,8)
(5ea391fd07dbb616e9857a7d95f460e0,8)
(596444b8c02b7b30c11273d5bbb88741,8)
(a06830724b809c0db56263124b2bd142,8)
(41389fb54f9b3bec766c5006d7bce6a2,7)
(6056710d9eafa569ddc800fe24643051,7)
(8897bbb7bdff69e80f7fb2041d83b17d,7)
(bc8cc0577bb80fafd6fad1ed67d3698e,7)
(29ede0f2544d28b714810965400ab912,6)
(b6d439f7b71172e8416a28ea356c06ef,6)
(6da1dcbaeab299deffe5932d902e775d,6)
(091a8d6c26aa8e95185383f45624227c,6)

[hadoop@hadoop sogou]$ hdfs dfs -cat /user/hadoop/SortedResultByUserID/part-00000| tail -10
(9e76272ecf1e526834eb7c9609f03fc0,1)
(57375476989eea12893c0c3811607bcf,1)
(7de315ffe0730e2275ae6146854237cc,1)
(ede950da3337974f2b49bf0c97ca5664,1)
(3d29715c7ef674b1223b2814efb87d20,1)
(e0259578a1754f9f2221f4367bbc8c84,1)
(bb4023be70aef58d29aac9102284705f,1)
(1e0b9bab1eaf1255f11140c5f6fc816d,1)
(14a23e70a246a8ffabfd6f97711aedc2,1)
(c491b1f1d01ec698a69123d4fdff44cc,1)

The result is correct.
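The count-and-sort logic used in this section can be sketched on an ordinary Scala collection, which makes it easy to check by hand. The user IDs below are made up, and the code uses plain collections rather than the RDD API. On an RDD, `sortBy` with a descending sort on the count should also avoid the swap, sort, and swap-back steps, though that is worth verifying against the installed Spark version.

```scala
// Made-up user IDs, one entry per query.
val userIds = Seq("u1", "u2", "u1", "u3", "u1", "u2")

// Count queries per user (the role played by map + reduceByKey).
val counts: Map[String, Int] =
  userIds.groupBy(identity).map { case (id, hits) => (id, hits.size) }

// Sort by count in descending order; ties are broken by user ID
// so that the output is deterministic.
val sorted: Seq[(String, Int)] =
  counts.toSeq.sortBy { case (id, n) => (-n, id) }
```

For this input, `sorted` is `(u1,3), (u2,2), (u3,1)`, the same (user ID, count) shape as the HDFS output above.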

Reference: the "Spark实战高手之路" article series