-

generating user vectors

Input format

`userID: itemID1 itemID2 itemID3 ...`

Output format

A Vector built from all of the user's item IDs: each output record maps the user ID to that user's preference vector. All values in this vector are 0 or 1. For example, 98955 / [590:1.0, 22:1.0, 9059:1.0]

```java
package mia.recommender.ch06;

import java.io.IOException;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.mahout.math.VarLongWritable;

// Parses "userID: itemID1 itemID2 ..." lines and emits one (userID, itemID) pair per item.
public final class WikipediaToItemPrefsMapper
    extends Mapper<LongWritable, Text, VarLongWritable, VarLongWritable> {

  private static final Pattern NUMBERS = Pattern.compile("(\\d+)");

  @Override
  protected void map(LongWritable key, Text value, Context context)
      throws IOException, InterruptedException {
    String line = value.toString();
    Matcher m = NUMBERS.matcher(line);
    // The first number on the line is the user ID. Skip lines that contain none:
    // calling m.group() after a failed find() throws IllegalStateException.
    if (!m.find()) {
      return;
    }
    VarLongWritable userID = new VarLongWritable(Long.parseLong(m.group()));
    // Every remaining number is an item ID the user links to. The writable is
    // reused because context.write() serializes it immediately.
    VarLongWritable itemID = new VarLongWritable();
    while (m.find()) {
      itemID.set(Long.parseLong(m.group()));
      context.write(userID, itemID);
    }
  }
}
```

```java
package mia.recommender.ch06;

import java.io.IOException;

import org.apache.hadoop.mapreduce.Reducer;
import org.apache.mahout.math.RandomAccessSparseVector;
import org.apache.mahout.math.VarLongWritable;
import org.apache.mahout.math.Vector;
import org.apache.mahout.math.VectorWritable;

// Collects all item IDs for one user into a sparse 0/1 preference vector.
public class WikipediaToUserVectorReducer
    extends Reducer<VarLongWritable, VarLongWritable, VarLongWritable, VectorWritable> {

  @Override
  public void reduce(VarLongWritable userID, Iterable<VarLongWritable> itemPrefs,
      Context context) throws IOException, InterruptedException {
    // Cardinality Integer.MAX_VALUE so any int index fits; 100 is only an
    // initial capacity hint for the number of nonzero entries.
    Vector userVector = new RandomAccessSparseVector(Integer.MAX_VALUE, 100);
    for (VarLongWritable itemPref : itemPrefs) {
      // Vector indices are ints, so the long item ID is narrowed here; this
      // assumes every item ID fits in an int.
      userVector.set((int) itemPref.get(), 1.0);
    }
    context.write(userID, new VectorWritable(userVector));
  }
}
```
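To check the data flow of this first job without a cluster, the sketch below replays it in a single JVM. It is an illustration under assumptions, not Mahout code: `UserVectorSketch`, `mapAndGroup` and `reduce` are hypothetical names, a `TreeMap` stands in for Hadoop's shuffle, and a `Map<Integer, Double>` stands in for `RandomAccessSparseVector`.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical single-JVM replay of the "generating user vectors" job.
class UserVectorSketch {
  private static final Pattern NUMBERS = Pattern.compile("(\\d+)");

  // Map + shuffle: group item IDs under the user ID (first number on each line).
  // Like the mapper, a line with a user ID but no item IDs emits nothing.
  static Map<Long, List<Long>> mapAndGroup(List<String> lines) {
    Map<Long, List<Long>> grouped = new TreeMap<>();
    for (String line : lines) {
      Matcher m = NUMBERS.matcher(line);
      if (!m.find()) {
        continue; // no user ID on this line
      }
      long userID = Long.parseLong(m.group());
      while (m.find()) {
        grouped.computeIfAbsent(userID, k -> new ArrayList<>())
            .add(Long.parseLong(m.group()));
      }
    }
    return grouped;
  }

  // Reduce: one sparse vector (item index -> 1.0) per user.
  static Map<Long, Map<Integer, Double>> reduce(Map<Long, List<Long>> grouped) {
    Map<Long, Map<Integer, Double>> vectors = new TreeMap<>();
    for (Map.Entry<Long, List<Long>> e : grouped.entrySet()) {
      Map<Integer, Double> vector = new TreeMap<>();
      for (long itemID : e.getValue()) {
        vector.put((int) itemID, 1.0); // same long-to-int narrowing as the reducer
      }
      vectors.put(e.getKey(), vector);
    }
    return vectors;
  }

  public static void main(String[] args) {
    List<String> lines = List.of("98955: 590 22 9059", "98955: 22");
    // prints {98955={22=1.0, 590=1.0, 9059=1.0}}
    System.out.println(reduce(mapAndGroup(lines)));
  }
}
```

As in the reducer above, a repeated item ID for a user still yields a single 1.0 entry, so the values stay 0 or 1.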

-

calculating co-occurrence

Input format

user IDs mapped to Vectors of user preferences—the output of the above MapReduce. For example, 98955 / [590:1.0,22:1.0,9059:1.0]

Output format

Rows (or, equivalently, columns) of the co-occurrence matrix. For example, 590 / [22:3.0,95:1.0,...,9059:1.0,...]
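In matrix terms, writing $p_{u,i}$ (notation introduced here, not from the source) for the 0/1 entry of user $u$'s vector at item $i$, each co-occurrence entry counts the users who expressed a preference for both items. Because the mapper's nested loop also pairs every item with itself, the diagonal entry is the number of users who prefer that item:

```latex
C_{ij} = \sum_{u} p_{u,i}\, p_{u,j}, \qquad p_{u,i} \in \{0, 1\}, \qquad C_{ij} = C_{ji}, \qquad C_{ii} = \sum_{u} p_{u,i}
```

Read this way, 590 / [22:3.0, ...] says that three users have preferences for both item 590 and item 22.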

```java
package mia.recommender.ch06;

import java.io.IOException;
import java.util.Iterator;

import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.mahout.math.VarLongWritable;
import org.apache.mahout.math.Vector;
import org.apache.mahout.math.VectorWritable;

// For each user, emits every ordered pair (index1, index2) of items the user
// prefers, including each item paired with itself.
public class UserVectorToCooccurrenceMapper
    extends Mapper<VarLongWritable, VectorWritable, IntWritable, IntWritable> {

  @Override
  public void map(VarLongWritable userID, VectorWritable userVector, Context context)
      throws IOException, InterruptedException {
    // nonZeroes() is used in place of iterateNonZero() from older Mahout releases.
    Iterator<Vector.Element> it = userVector.get().nonZeroes().iterator();
    while (it.hasNext()) {
      int index1 = it.next().index();
      Iterator<Vector.Element> it2 = userVector.get().nonZeroes().iterator();
      while (it2.hasNext()) {
        int index2 = it2.next().index();
        context.write(new IntWritable(index1), new IntWritable(index2));
      }
    }
  }
}
```

```java
package mia.recommender.ch06;

import java.io.IOException;

import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.mapreduce.Reducer;
import org.apache.mahout.math.RandomAccessSparseVector;
import org.apache.mahout.math.Vector;
import org.apache.mahout.math.VectorWritable;

// Counts how often each itemIndex2 was paired with itemIndex1, producing one
// row (equivalently, one column) of the co-occurrence matrix.
public class UserVectorToCooccurrenceReducer
    extends Reducer<IntWritable, IntWritable, IntWritable, VectorWritable> {

  @Override
  public void reduce(IntWritable itemIndex1, Iterable<IntWritable> itemIndex2s,
      Context context) throws IOException, InterruptedException {
    Vector cooccurrenceRow = new RandomAccessSparseVector(Integer.MAX_VALUE, 100);
    for (IntWritable intWritable : itemIndex2s) {
      int itemIndex2 = intWritable.get();
      // Increment the count for this item pair.
      cooccurrenceRow.set(itemIndex2, cooccurrenceRow.get(itemIndex2) + 1.0);
    }
    context.write(itemIndex1, new VectorWritable(cooccurrenceRow));
  }
}
```
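A plain-Java sketch of the same counting, assuming each user vector can be represented by the set of item indices with nonzero values. `CooccurrenceSketch` and its example data are hypothetical; the nested loop mirrors `UserVectorToCooccurrenceMapper`, and `Map.merge` plays the role of the reducer's get-plus-one update.

```java
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeMap;

// Hypothetical single-JVM version of the co-occurrence job.
class CooccurrenceSketch {
  // Returns itemIndex1 -> (itemIndex2 -> number of users preferring both).
  static Map<Integer, Map<Integer, Double>> cooccurrence(List<Set<Integer>> userItems) {
    Map<Integer, Map<Integer, Double>> rows = new TreeMap<>();
    for (Set<Integer> items : userItems) {
      for (int index1 : items) {     // outer loop of the mapper
        for (int index2 : items) {   // inner loop, includes index1 itself
          rows.computeIfAbsent(index1, k -> new TreeMap<>())
              .merge(index2, 1.0, Double::sum); // the reducer's "+ 1.0"
        }
      }
    }
    return rows;
  }

  public static void main(String[] args) {
    List<Set<Integer>> users = List.of(
        Set.of(590, 22, 9059),
        Set.of(590, 22),
        Set.of(590, 22, 95));
    // prints {22=3.0, 95=1.0, 590=3.0, 9059=1.0}
    System.out.println(cooccurrence(users).get(590));
  }
}
```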

-

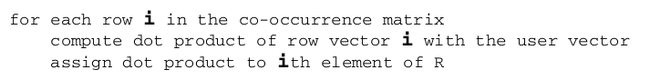

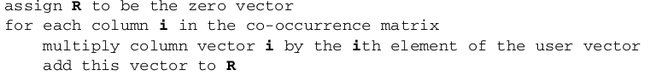

rethinking matrix multiplication

conventional matrix multiplication

The conventional algorithm necessarily touches the entire co-occurrence matrix, because it needs to perform a vector dot product with each row.

alternative matrix computation

The alternative computation builds R as a sum of the co-occurrence matrix's columns, with column i scaled by element i of the user vector. Wherever element i of the user vector is 0, that loop iteration can be skipped entirely, because the product is the zero vector and doesn't affect the result. So the loop need only execute for each nonzero element of the user vector. The number of columns loaded equals the number of preferences the user expresses, which is far smaller than the total number of columns when the user vector is sparse.
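The two loops can be compared side by side in a small sketch. It assumes a dense `double[][]` for the co-occurrence matrix purely for readability (the jobs above use sparse Mahout vectors), and `MatrixMultiplySketch` is a hypothetical name. Both methods return the same vector, but `byColumns` reads only the columns whose user-vector entry is nonzero.

```java
import java.util.Arrays;

// Two ways to compute the recommendation vector R = C * U for one user.
class MatrixMultiplySketch {
  // Conventional: R[j] is the dot product of row j of C with U,
  // so every row of C is read.
  static double[] byRows(double[][] c, double[] u) {
    double[] r = new double[c.length];
    for (int j = 0; j < c.length; j++) {
      for (int i = 0; i < u.length; i++) {
        r[j] += c[j][i] * u[i];
      }
    }
    return r;
  }

  // Alternative: R is the sum over i of U[i] times column i of C.
  // A column whose U[i] is zero would add the zero vector, so it is skipped.
  static double[] byColumns(double[][] c, double[] u) {
    double[] r = new double[c.length];
    for (int i = 0; i < u.length; i++) {
      if (u[i] == 0.0) {
        continue;
      }
      for (int j = 0; j < c.length; j++) {
        r[j] += u[i] * c[j][i];
      }
    }
    return r;
  }

  public static void main(String[] args) {
    double[][] c = {
        {2, 1, 0},
        {1, 2, 1},
        {0, 1, 1}};
    double[] u = {1, 0, 1}; // the user prefers items 0 and 2
    System.out.println(Arrays.toString(byRows(c, u)));    // [2.0, 2.0, 1.0]
    System.out.println(Arrays.toString(byColumns(c, u))); // [2.0, 2.0, 1.0], reading only columns 0 and 2
  }
}
```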

-

matrix multiplication by partial products

The columns of the co-occurrence matrix are available from an earlier step. Because the matrix is symmetric, its rows and columns are identical, so that output can be viewed conceptually as either rows or columns. The columns are keyed by item ID, and the algorithm must multiply each one by every nonzero preference value for that item, across all user vectors. That is, it must map item IDs to a user ID and preference value, and then collect them together in a reducer. Multiplying the co-occurrence column by each value produces a vector that forms part of the final recommender vector R for one user.

The difficult part is that two different kinds of data are joined in one computation: co-occurrence column vectors and user preference values. Hadoop does not support this directly, because all values arriving at a reducer must be of a single Writable type. A clever implementation gets around this by crafting a Writable that contains either one type of data or the other: a VectorOrPrefWritable.
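To make the trick concrete, here is a hypothetical, Hadoop-free sketch of such a union type. It is not Mahout's `VectorOrPrefWritable`: the class name, the `TreeMap`-based vector, and the byte layout are assumptions. It only follows the general `write(DataOutput)` / `readFields(DataInput)` shape of a Writable, writing a one-byte tag first so that the reading side knows which variant follows.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.DataInput;
import java.io.DataInputStream;
import java.io.DataOutput;
import java.io.DataOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical "vector or preference" holder with a made-up byte layout.
class VectorOrPrefSketch {
  private Map<Integer, Double> vector; // non-null only for the vector variant
  private long userID;                 // used only for the preference variant
  private float value;

  static VectorOrPrefSketch ofVector(Map<Integer, Double> vector) {
    VectorOrPrefSketch w = new VectorOrPrefSketch();
    w.vector = vector;
    return w;
  }

  static VectorOrPrefSketch ofPref(long userID, float value) {
    VectorOrPrefSketch w = new VectorOrPrefSketch();
    w.userID = userID;
    w.value = value;
    return w;
  }

  boolean isVector() { return vector != null; }
  Map<Integer, Double> getVector() { return vector; }
  long getUserID() { return userID; }
  float getValue() { return value; }

  void write(DataOutput out) throws IOException {
    out.writeBoolean(vector != null); // tag byte: which variant follows
    if (vector != null) {
      out.writeInt(vector.size());
      for (Map.Entry<Integer, Double> e : vector.entrySet()) {
        out.writeInt(e.getKey());
        out.writeDouble(e.getValue());
      }
    } else {
      out.writeLong(userID);
      out.writeFloat(value);
    }
  }

  void readFields(DataInput in) throws IOException {
    if (in.readBoolean()) {
      int size = in.readInt();
      vector = new TreeMap<>();
      for (int k = 0; k < size; k++) {
        vector.put(in.readInt(), in.readDouble());
      }
    } else {
      vector = null;
      userID = in.readLong();
      value = in.readFloat();
    }
  }

  // Serializes and deserializes, as happens between the map and reduce phases.
  static VectorOrPrefSketch roundTrip(VectorOrPrefSketch w) {
    try {
      ByteArrayOutputStream bytes = new ByteArrayOutputStream();
      w.write(new DataOutputStream(bytes));
      VectorOrPrefSketch copy = new VectorOrPrefSketch();
      copy.readFields(new DataInputStream(new ByteArrayInputStream(bytes.toByteArray())));
      return copy;
    } catch (IOException e) {
      throw new UncheckedIOException(e);
    }
  }

  public static void main(String[] args) {
    VectorOrPrefSketch pref = roundTrip(ofPref(98955L, 1.0f));
    // prints false 98955 1.0
    System.out.println(pref.isVector() + " " + pref.getUserID() + " " + pref.getValue());
    VectorOrPrefSketch column = roundTrip(ofVector(Map.of(22, 3.0, 95, 1.0)));
    // prints true {22=3.0, 95=1.0}
    System.out.println(column.isVector() + " " + column.getVector());
  }
}
```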

The mapper phase here will actually contain two mappers, each producing different types of reducer input:

- Input for mapper 1 is the co-occurrence matrix: item IDs as keys, mapped to columns as Vectors. For example, 590 / [22:3.0,95:1.0,...,9059:1.0,...]. The map function simply echoes its input, but with the Vector wrapped in a VectorOrPrefWritable.

- Input for mapper 2 is again the user vectors: user IDs as keys, mapped to preference Vectors. For example, 98955 / [590:1.0,22:1.0,9059:1.0]. For each nonzero value in the user vector, the map function outputs the item ID mapped to the user ID and preference value, wrapped in a VectorOrPrefWritable. For example, 590 / [98955:1.0].

- The framework collects together, by item ID, the co-occurrence column and all user ID–preference value pairs.

- The reducer collects this information into one output record and stores it.
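The following single-JVM sketch walks through these steps with plain maps. It is an illustration, not the Hadoop job: `CooccurrenceJoinSketch` and `ColumnAndPrefs` are hypothetical names, maps stand in for Mahout vectors and Writables, and a `TreeMap` keyed by item ID stands in for the framework's grouping.

```java
import java.util.Map;
import java.util.TreeMap;

// Hypothetical sketch of the join: both "mappers" emit values keyed by item ID,
// and each grouped entry holds one column plus all (userID, pref) pairs.
class CooccurrenceJoinSketch {
  // What the reducer collects for one item ID.
  static final class ColumnAndPrefs {
    Map<Integer, Double> column;                      // from mapper 1
    final Map<Long, Double> prefs = new TreeMap<>();  // from mapper 2: userID -> pref

    @Override
    public String toString() {
      return column + " / " + prefs;
    }
  }

  static Map<Integer, ColumnAndPrefs> join(
      Map<Integer, Map<Integer, Double>> columns,       // itemID -> co-occurrence column
      Map<Long, Map<Integer, Double>> userVectors) {    // userID -> (itemID -> pref)
    Map<Integer, ColumnAndPrefs> byItem = new TreeMap<>();
    // Mapper 1: echo each column under its item ID.
    for (Map.Entry<Integer, Map<Integer, Double>> e : columns.entrySet()) {
      byItem.computeIfAbsent(e.getKey(), k -> new ColumnAndPrefs()).column =
          new TreeMap<>(e.getValue());
    }
    // Mapper 2: for each nonzero preference, emit itemID -> (userID, pref).
    for (Map.Entry<Long, Map<Integer, Double>> user : userVectors.entrySet()) {
      for (Map.Entry<Integer, Double> pref : user.getValue().entrySet()) {
        if (pref.getValue() != 0.0) {
          byItem.computeIfAbsent(pref.getKey(), k -> new ColumnAndPrefs())
              .prefs.put(user.getKey(), pref.getValue());
        }
      }
    }
    return byItem;
  }

  public static void main(String[] args) {
    Map<Integer, Map<Integer, Double>> columns = Map.of(
        590, Map.of(22, 3.0, 590, 3.0),
        22, Map.of(22, 3.0, 590, 3.0));
    Map<Long, Map<Integer, Double>> users = Map.of(98955L, Map.of(590, 1.0, 22, 1.0));
    // prints {22=3.0, 590=3.0} / {98955=1.0}
    System.out.println(join(columns, users).get(590));
  }
}
```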

```java
package mia.recommender.ch06;

import java.io.IOException;

import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.mahout.cf.taste.hadoop.item.VectorOrPrefWritable;
import org.apache.mahout.math.VectorWritable;

// Mapper 1: passes each co-occurrence column through unchanged, keyed by item
// ID, but wraps the Vector in a VectorOrPrefWritable so it can share a reducer
// with the user preference values.
public class CooccurrenceColumnWrapperMapper
    extends Mapper<IntWritable, VectorWritable, IntWritable, VectorOrPrefWritable> {

  @Override
  public void map(IntWritable key, VectorWritable value, Context context)
      throws IOException, InterruptedException {
    context.write(key, new VectorOrPrefWritable(value.get()));
  }
}
```

```java
package mia.recommender.ch06;

import java.io.IOException;
import java.util.Iterator;

import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.mahout.cf.taste.hadoop.item.VectorOrPrefWritable;
import org.apache.mahout.math.VarLongWritable;
import org.apache.mahout.math.Vector;
import org.apache.mahout.math.VectorWritable;

// Mapper 2: splits each user vector into one record per nonzero preference,
// keyed by item index, with the user ID and preference value as the payload.
public class UserVectorSplitterMapper
    extends Mapper<VarLongWritable, VectorWritable, IntWritable, VectorOrPrefWritable> {

  @Override
  public void map(VarLongWritable key, VectorWritable value, Context context)
      throws IOException, InterruptedException {
    long userID = key.get();
    Vector userVector = value.get();
    // nonZeroes() is used in place of iterateNonZero() from older Mahout releases.
    Iterator<Vector.Element> it = userVector.nonZeroes().iterator();
    IntWritable itemIndexWritable = new IntWritable(); // reused across writes
    while (it.hasNext()) {
      Vector.Element e = it.next();
      int itemIndex = e.index();
      float preferenceValue = (float) e.get();
      itemIndexWritable.set(itemIndex);
      context.write(itemIndexWritable,
          new VectorOrPrefWritable(userID, preferenceValue));
    }
  }
}
```

Technically speaking, there is no real Reducer following these two Mappers; it's no longer possible to feed the output of two Mappers into a Reducer. Instead, they're run separately, and their output is passed through a no-op Reducer and saved in two locations. These two locations can then be used as input to another MapReduce, whose Mapper also does nothing and whose Reducer collects the co-occurrence column vector for an item, together with all users and preference values for that item, into a single entity called VectorAndPrefsWritable. ToVectorAndPrefReducer implements this; for brevity, this detail is omitted here.
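The payoff of the join, as described earlier in this section, is that each co-occurrence column can now be multiplied by each preference value, giving partial products that add up to one user's recommender vector R. A minimal sketch under assumptions: plain maps stand in for VectorAndPrefsWritable and Mahout vectors, the input is already grouped by item ID, and `PartialProductSketch` and `recommend` are hypothetical names, not the jobs that follow in Mahout.

```java
import java.util.Map;
import java.util.TreeMap;

// Hypothetical sketch: scale each column by each user's preference for that
// item (a partial product) and accumulate the partial products per user.
class PartialProductSketch {
  static Map<Long, Map<Integer, Double>> recommend(
      Map<Integer, Map<Integer, Double>> columns,     // itemID -> co-occurrence column
      Map<Integer, Map<Long, Double>> prefsByItem) {  // itemID -> (userID -> pref)
    Map<Long, Map<Integer, Double>> r = new TreeMap<>();
    for (Map.Entry<Integer, Map<Long, Double>> item : prefsByItem.entrySet()) {
      Map<Integer, Double> column = columns.get(item.getKey());
      if (column == null) {
        continue; // no co-occurrence column for this item
      }
      for (Map.Entry<Long, Double> pref : item.getValue().entrySet()) {
        Map<Integer, Double> userR = r.computeIfAbsent(pref.getKey(), k -> new TreeMap<>());
        // Partial product column * pref, summed into this user's R.
        for (Map.Entry<Integer, Double> cell : column.entrySet()) {
          userR.merge(cell.getKey(), cell.getValue() * pref.getValue(), Double::sum);
        }
      }
    }
    return r;
  }

  public static void main(String[] args) {
    Map<Integer, Map<Integer, Double>> columns = Map.of(
        590, Map.of(22, 3.0, 95, 1.0, 590, 3.0),
        22, Map.of(22, 4.0, 590, 3.0));
    Map<Integer, Map<Long, Double>> prefsByItem = Map.of(
        590, Map.of(98955L, 1.0),
        22, Map.of(98955L, 1.0));
    // prints {98955={22=7.0, 95=1.0, 590=6.0}}
    System.out.println(recommend(columns, prefsByItem));
  }
}
```

Here item 22 scores 3.0 from column 590 plus 4.0 from column 22; the example numbers are made up for illustration.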