Nginx HTTP Code Analysis Script

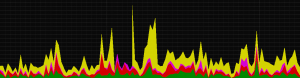

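When I was doing CDN operations, the nature of the business (multiple data centers, multiple ISPs, multiple regions) made it impractical to ship every log to one central place for QoS analysis. Instead, the Nginx log on each machine was rotated every 5 minutes, a Python program computed the distribution of HTTP status codes, and Zabbix monitored the Nginx QoS of each individual machine. Aggregating the lastvalue column in the Zabbix database then made it possible to monitor the traffic and QoS data of the whole CDN. With this setup, problems were generally detected with a delay of about 5 minutes (CDN QoS is not very time-sensitive). Combined with rsync + Hadoop + Hive for processing the Nginx logs, a more detailed analysis along various dimensions (offline data analysis) was also available. The scripts used for analyzing the Nginx logs follow.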
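As a rough illustration of the per-machine step, the sketch below counts status codes by class for a handful of log lines. It assumes the status code is the ninth whitespace-separated field (index 8), which is the position the analysis script in this post reads; the sample lines and the name `status_distribution` are invented for the example and are not part of the original scripts.

```python
from collections import Counter


def status_distribution(lines, status_index=8):
    """Count log lines per status class ('2xx', '4xx', ...)."""
    counts = Counter()
    for line in lines:
        fields = line.split()
        try:
            code = int(fields[status_index])
        except (IndexError, ValueError):
            # Truncated or malformed line: no usable status code.
            counts["invalid"] += 1
            continue
        counts["%dxx" % (code // 100)] += 1
    return counts


# Hypothetical log lines; only the position of the status code matters here.
sample = [
    '1.2.3.4 example.com - [01/Jan/2013:10:00:00 +0800] "GET /a.jpg HTTP/1.1" 200 1024',
    '1.2.3.5 example.com - [01/Jan/2013:10:00:01 +0800] "GET /b.jpg HTTP/1.1" 304 0',
    '1.2.3.6 example.com - [01/Jan/2013:10:00:02 +0800] "GET /c.jpg HTTP/1.1" 404 162',
    '1.2.3.7 example.com - [01/Jan/2013:10:00:03 +0800] "GET /d.jpg HTTP/1.1" 502 0',
    '1.2.3.8 example.com -',
]

distribution = status_distribution(sample)
for status_class in sorted(distribution):
    print(status_class, distribution[status_class])
```

Each per-class count could then be reported to Zabbix as its own item, which is the shape of data the aggregation query sums across hosts.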

First, the Zabbix aggregation script:

#!/usr/bin/python
# -*- coding: utf8 -*-
# Get webcdn total statistics from the Zabbix database.

import MySQLdb
import sys
import os

def get_total_value(sql):
    # Run the aggregate query and return its single value, or 0 if no row comes back.
    db = MySQLdb.connect(host='xxxx',user='xxxx',passwd='xxxx',db='xxxx')
    cursor = db.cursor()
    cursor.execute(sql)
    try:
        result = cursor.fetchone()[0]
    except (TypeError, IndexError):
        result = 0
    cursor.close()
    db.close()
    return result

if __name__ == '__main__':
    # The metric to aggregate is passed as the first command-line argument.
    if len(sys.argv) < 2:
        sys.exit(1)
    sql = ''
    if sys.argv[1] == "network_traffic":
        sql = "select round(sum(lastvalue)/(1024*1024),4) from hosts a, items b where key_ in ( 'net.if.out[eth1,bytes]','net.if.out[eth0,bytes]') and lower(host) like '%-cdn-cache%' and a.hostid = b.hostid"
    elif sys.argv[1] == "nginx_traffic":
        sql = "select sum(lastvalue) from hosts a, items b where key_ = 'log_webcdn_getvalue[traffic]' and lower(host) like '%cdn-cache%' and a.hostid = b.hostid"
    elif sys.argv[1] == "2xxand3xx":
        sql = "select sum(lastvalue) from hosts a, items b where key_ in ( 'log_webcdn_getvalue[200]','log_webcdn_getvalue[300]') and lower(host) like '%-cdn-cache%' and a.hostid = b.hostid"
    elif sys.argv[1] == "4xxand5xx":
        sql = "select sum(lastvalue) from hosts a, items b where key_ in ( 'log_webcdn_getvalue[four]','log_webcdn_getvalue[five]') and lower(host) like '%-cdn-cache%' and a.hostid = b.hostid"
    elif sys.argv[1] == "network_ss":
        sql = "select sum(lastvalue) from hosts a, items b where key_ = 'network_conn' and lower(host) like '%-cdn-cache%' and a.hostid = b.hostid"
    else:
        sys.exit(0)
    value = get_total_value(sql)
    print(value)
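To make the aggregation query concrete, here is a small self-contained sketch that runs the same kind of `sum(lastvalue)` join against an in-memory SQLite database rather than the real Zabbix MySQL database. The two tables are a heavily simplified stand-in for the Zabbix `hosts` and `items` tables, and the host names, values, and the helper `total_for_key` are all invented for the illustration.

```python
import sqlite3


def total_for_key(conn, key):
    """Sum lastvalue for one item key across all hosts whose name matches the CDN pattern."""
    sql = ("select sum(lastvalue) from hosts a, items b "
           "where key_ = ? and lower(host) like '%-cdn-cache%' "
           "and a.hostid = b.hostid")
    row = conn.execute(sql, (key,)).fetchone()
    # sum() over zero rows yields NULL, which arrives here as None.
    return row[0] or 0


conn = sqlite3.connect(":memory:")
conn.execute("create table hosts (hostid integer, host text)")
conn.execute("create table items (hostid integer, key_ text, lastvalue real)")
conn.executemany("insert into hosts values (?, ?)", [
    (1, "SH-CDN-Cache-01"),   # hypothetical cache nodes
    (2, "BJ-CDN-Cache-02"),
    (3, "db-master"),         # not a cache node, so it is left out of the sum
])
conn.executemany("insert into items values (?, ?, ?)", [
    (1, "network_conn", 120),
    (2, "network_conn", 80),
    (3, "network_conn", 999),
])

print(total_for_key(conn, "network_conn"))
```

Only the two hosts whose lowercased name contains `-cdn-cache` contribute to the total, which is how the script restricts the sum to the CDN cache nodes.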

Next, the analysis script that runs on each individual machine:

#!/usr/bin/python
#coding=utf-8

from __future__ import division
import subprocess, signal, string
import codecs
import re
import os
import time, datetime
import sys

def show_usage():
    print("""
python nginx_log_wedcdn.py result_key

result_key could be:
average_bodysize, response_time, sum_count, count_success, four, 403, 404, 499, five, 500, 502, 503, 200, 300, requests_second
response_time_source, percentage_time_1, percentage_time_3, all
""")

def runCmd(command, timeout = 10):
    # Run a shell command; kill it and return None if it runs longer than `timeout` seconds.
    start = datetime.datetime.now()
    process = subprocess.Popen(command, stdout=subprocess.PIPE, stderr=subprocess.PIPE, shell=True)
    while process.poll() is None:
        time.sleep(0.2)
        now = datetime.datetime.now()
        if (now - start).seconds > timeout:
            os.kill(process.pid, signal.SIGKILL)
            os.waitpid(-1, os.WNOHANG)
            return None
    return process.stdout.readlines()

def _log_stamp():
    # Take the time five minutes ago and round its minute down to a multiple of 5,
    # giving the name stem of the most recently rotated log (YYYY-mm-dd-HH-MM).
    t = datetime.datetime.now() + datetime.timedelta(minutes = -5)
    minute = t.minute // 5 * 5
    return t.strftime('%Y-%m-%d-%H') + "-%02d" % minute

def get_old_filename():
    # Rotated and already gzipped log.
    return "/log/nginx/old/" + _log_stamp() + ".log.gz"

def get_new_filename():
    # Rotated log in old/ that has not been compressed yet.
    return "/log/nginx/old/" + _log_stamp() + ".log"

def get_new2_filename():
    # Rotated log that is still in new/.
    return "/log/nginx/new/" + _log_stamp() + ".log"

def average_flow():
    # Byte counters: successful responses, all responses, and one per monitored domain.
    flow = 0
    flow1 = 0
    flow_ppsucai = 0
    flow_asimgs = 0
    flow_static9 = 0
    traffic = 0.0
    traffic1 = 0.0
    # Request counters, overall and per domain, split by download speed bucket.
    count = 0
    count_sucai = 0
    count_sucai_100 = 0
    count_sucai_30_100 = 0
    count_sucai_30 = 0
    count_asimgs = 0
    count_asimgs_100 = 0
    count_asimgs_30_100 = 0
    count_asimgs_30 = 0
    count_static9 = 0
    count_static9_100 = 0
    count_static9_30_100 = 0
    count_static9_30 = 0
    # Accumulated request times.
    sum_time = 0.0
    sum_ppsucai_time = 0.0
    sum_asimgs_time = 0.0
    sum_static9_time = 0.0
    sum_time_source = 0.0
    count_200 = 0
    count_300 = 0
    count_success = 0
    count_200_backup = 0
    count_not_200_backup = 0
    id_list_200 = [200,206]
    id_list_300 = [300,301,302,303,304,305,306,307]
    id_list_success = [200,206,300,301,302,303,304,305,306,307]
    data_byte = 0
    elapsed = 0.0
    response_time = 0.0
    response_time_source = 0.0
    requests_second = 0.0
    requests_second_sucai = 0.0
    requests_second_asimgs = 0.0
    # Client IPs (each recorded once) seen with slow responses or with a given error code.
    list_time_1 = []
    list_time_3 = []
    list_ip_403 = []
    list_ip_404 = []
    list_ip_415 = []
    list_ip_499 = []
    list_ip_500 = []
    list_ip_502 = []
    list_ip_503 = []
    server_list = ['"127.0.0.1:8080"','"127.0.0.1:8081"','"-"']
    # Defaults so that a malformed first line cannot leave these names undefined.
    domain_name = ""
    IP = ""
    backup_server = '"-"'

    # Locate the log of the previous 5-minute slot: the gzipped copy in old/ first,
    # then the uncompressed copy in old/, and finally the copy in new/.
    file_name = get_old_filename()
    if os.path.isfile(file_name):
        Writelog(file_name)
        i = os.popen("/bin/zcat %s" % file_name).readlines()
    else:
        file_name = get_new_filename()
        if os.path.isfile(file_name):
            Writelog(file_name)
            i = os.popen("/bin/cat %s" % file_name).readlines()
        else:
            file_name = get_new2_filename()
            if os.path.isfile(file_name):
                Writelog(file_name)
                i = os.popen("/bin/cat %s" % file_name).readlines()
            else:
                # No log to analyze: release the lock file and give up.
                os.popen("rm -f /tmp/exist.txt")
                sys.exit(1)

    for line in i:
        count += 1
        # Split once and pick the fields by position; keep the fallback values on bad lines.
        fields = line.split()
        try:
            domain_name = fields[1]
        except IndexError:
            pass
        try:
            web_code = int(fields[8])
        except (IndexError, ValueError):
            web_code = 888
        try:
            IP = str(fields[0])
        except IndexError:
            pass
        try:
            data_byte = int(fields[9])
        except (IndexError, ValueError):
            data_byte = 0.0001
        try:
            elapsed = float(fields[-1].strip('"'))
            # Avoid dividing by zero when computing download speeds.
            if elapsed == 0.000:
                elapsed = 0.0001
        except (IndexError, ValueError):
            elapsed = 0.0001
        try:
            time_source = float(fields[-4].strip('"'))
        except (IndexError, ValueError):
            time_source = 0.0
        try:
            backup_server = str(fields[-3])
        except IndexError:
            pass

        flow1 += data_byte
        if web_code in id_list_success:
            flow += data_byte
            sum_time_source += time_source
            # Requests for ppsucai.pptv.com are left out of the overall response time.
            if domain_name != "ppsucai.pptv.com":
                sum_time += elapsed
        if web_code in id_list_200:
            count_200 += 1
            if backup_server not in server_list:
                count_200_backup += 1
            elif web_code == 200 and data_byte == 0:
                # Nesting assumed here: as a top-level elif this branch could never run,
                # because 200 is already matched by id_list_200.
                # Log the timestamp (field 3) and field 10 of empty 200 responses.
                WriteURLInfo(line.split()[3].lstrip("["))
                WriteURLInfo("\t")
                WriteURLInfo(line.split()[10])
                WriteURLInfo("\n")
        elif web_code in id_list_300:
            count_300 += 1
        elif web_code == 403 and IP not in list_ip_403:
            list_ip_403.append(IP)
        elif web_code == 404 and IP not in list_ip_404:
            list_ip_404.append(IP)
        elif web_code == 415 and IP not in list_ip_415:
            list_ip_415.append(IP)
        elif web_code == 499 and IP not in list_ip_499:
            list_ip_499.append(IP)
        elif web_code == 500 and IP not in list_ip_500:
            list_ip_500.append(IP)
        elif web_code == 502 and IP not in list_ip_502:
            list_ip_502.append(IP)
        elif web_code == 503 and IP not in list_ip_503:
            list_ip_503.append(IP)
        if web_code not in id_list_200 and backup_server not in server_list:
            count_not_200_backup += 1
        # The two thresholds are checked independently, so a request slower than 3 s
        # is recorded in both lists (with elif the 3 s list was almost never filled).
        if elapsed > 1.0 and web_code in id_list_success and IP not in list_time_1:
            list_time_1.append(IP)
        if elapsed > 3.0 and web_code in id_list_success and IP not in list_time_3:
            list_time_3.append(IP)
        # Per-domain download speed in KB/s, bucketed into >= 100, 30-100 and < 30.
        if domain_name == "ppsucai.pptv.com" and web_code in id_list_success:
            download_speed_sucai = round(data_byte / elapsed / 1024, 2)
            flow_ppsucai += data_byte
            sum_ppsucai_time += elapsed
            count_sucai += 1
            if download_speed_sucai >= 100:
                count_sucai_100 += 1
            elif download_speed_sucai >= 30:
                count_sucai_30_100 += 1
            else:
                count_sucai_30 += 1
        elif domain_name == "asimgs.pplive.cn" and web_code in id_list_success:
            download_speed_asimgs = round(data_byte / elapsed / 1024, 2)
            flow_asimgs += data_byte
            sum_asimgs_time += elapsed
            count_asimgs += 1
            if download_speed_asimgs >= 100:
                count_asimgs_100 += 1
            elif download_speed_asimgs >= 30:
                count_asimgs_30_100 += 1
            else:
                count_asimgs_30 += 1
        elif domain_name == "static9.pplive.cn" and web_code in id_list_success:
            download_speed_static9 = round(data_byte / elapsed / 1024, 2)
            flow_static9 += data_byte
            sum_static9_time += elapsed
            count_static9 += 1
            if download_speed_static9 >= 100:
                count_static9_100 += 1
            elif download_speed_static9 >= 30:
                count_static9_30_100 += 1
            else:
                count_static9_30 += 1

    try:
        # Traffic in Mbit/s over the 300-second window, scaled by the script's fixed factor of 1.07.
        traffic = round((flow*1.07*8)/300/1024/1024, 2)
        count_success = count_200 + count_300
        response_time = round(sum_time/count_success, 2)
        response_time_source = round(sum_time_source/count_success, 2)
        requests_second = round(count_success/300, 2)
        # Replace zero denominators with a tiny value so the per-domain ratios stay defined.
        if sum_ppsucai_time == 0.0:
            sum_ppsucai_time = 0.0001
        if sum_asimgs_time == 0.0:
            sum_asimgs_time = 0.0001
        if sum_static9_time == 0.0:
            sum_static9_time = 0.0001
        traffic_ppsucai = round(flow_ppsucai/sum_ppsucai_time/1024, 2)
        traffic_asimgs = round(flow_asimgs/sum_asimgs_time/1024, 2)
        traffic_static9 = round(flow_static9/sum_static9_time/1024, 2)
        average_bodysize = round((flow/count_success)/1024, 2)
        percentage_time_1 = round(len(list_time_1)/count_success*100, 2)
        percentage_time_3 = round(len(list_time_3)/count_success*100, 2)
        if count_sucai == 0:
            count_sucai = 0.0001
        percentage_sucai_100 = round(count_sucai_100/count_sucai*100, 2)
        percentage_sucai_30_100 = round(count_sucai_30_100/count_sucai*100, 2)
        percentage_sucai_30 = round(count_sucai_30/count_sucai*100, 2)
        if count_asimgs == 0:
            count_asimgs = 0.0001
        percentage_asimgs_100 = round(count_asimgs_100/count_asimgs*100, 2)
        percentage_asimgs_30_100 = round(count_asimgs_30_100/count_asimgs*100, 2)
        percentage_asimgs_30 = round(count_asimgs_30/count_asimgs*100, 2)
        if count_static9 == 0:
            count_static9 = 0.0001
        percentage_static9_100 = round(count_static9_100/count_static9*100, 2)
        percentage_static9_30_100 = round(count_static9_30_100/count_static9*100, 2)
        percentage_static9_30 = round(count_static9_30/count_static9*100, 2)
        requests_second_sucai = round(count_sucai/300, 2)
        requests_second_asimgs = round(count_asimgs/300, 2)
        requests_second_static9 = round(count_static9/300, 2)
        percentage_200_backup = round(count_200_backup/count*100, 2)
        percentage_not_200_backup = round(count_not_200_backup/count*100, 2)
        # The order of this 40-element tuple must match the indexes used in result_dic().
        return (average_bodysize, response_time, count, count_success,
                len(list_ip_403), len(list_ip_404), len(list_ip_499),
                len(list_ip_500), len(list_ip_502), len(list_ip_503),
                count_200, count_300, requests_second, response_time_source,
                len(list_time_1), len(list_time_3), percentage_time_1, percentage_time_3,
                count_sucai, percentage_sucai_100, percentage_sucai_30_100, percentage_sucai_30, requests_second_sucai,
                count_asimgs, percentage_asimgs_100, percentage_asimgs_30_100, percentage_asimgs_30, requests_second_asimgs,
                traffic_ppsucai, traffic_asimgs, traffic, traffic_static9,
                count_static9, percentage_static9_100, percentage_static9_30_100, percentage_static9_30, requests_second_static9,
                percentage_200_backup, percentage_not_200_backup, len(list_ip_415))
    except ZeroDivisionError:
        # Empty log or no successful requests: report zero for every metric.
        return (0,) * 40

def log_files(pwd):
    # List the files in directory `pwd` whose names end with "log".
    log_file_list = []
    files = os.popen("ls %s" % pwd).readlines()
    for x in files:
        if x.strip().endswith("log"):
            log_file_list.append(x.strip())
    return log_file_list

def result_dic():
    # Map the positional results of average_flow() to the keys accepted on the command line.
    # The variable is named `values` so that it does not shadow the built-in `list`.
    values = average_flow()
    result = {}
    result['average_bodysize'] = values[0]
    result['response_time'] = values[1]
    result['sum_count'] = values[2]
    result['count_success'] = values[3]
    result['four'] = values[4] + values[5] + values[6] + values[39]
    result['403'] = values[4]
    result['404'] = values[5]
    result['499'] = values[6]
    result['415'] = values[39]
    result['five'] = values[7] + values[8] + values[9]
    result['500'] = values[7]
    result['502'] = values[8]
    result['503'] = values[9]
    result['200'] = values[10]
    result['300'] = values[11]
    result['requests_second'] = values[12]
    result['response_time_source'] = values[13]
    result['percentage_time_1'] = values[16]
    result['percentage_time_3'] = values[17]
    result['count_sucai'] = values[18]
    result['percentage_sucai_100'] = values[19]
    result['percentage_sucai_30_100'] = values[20]
    result['percentage_sucai_30'] = values[21]
    result['requests_second_sucai'] = values[22]
    result['count_asimgs'] = values[23]
    result['percentage_asimgs_100'] = values[24]
    result['percentage_asimgs_30_100'] = values[25]
    result['percentage_asimgs_30'] = values[26]
    result['requests_second_asimgs'] = values[27]
    result['traffic_ppsucai'] = values[28]
    result['traffic_asimgs'] = values[29]
    result['traffic'] = values[30]
    result['traffic_static9'] = values[31]
    result['count_static9'] = values[32]
    result['percentage_static9_100'] = values[33]
    result['percentage_static9_30_100'] = values[34]
    result['percentage_static9_30'] = values[35]
    result['requests_second_static9'] = values[36]
    result['percentage_200_backup'] = values[37]
    result['percentage_not_200_backup'] = values[38]
    result['all'] = values
    return result

def Writelog(msg):
    # Append a timestamped message to the QoS log ("a" replaces the non-standard "aw" mode).
    o = open("/log/nginx/qos_result_new"+".log","a")
    o.write(time.strftime("%Y-%m-%d %H:%M:%S", time.localtime()) + ":" + msg + "\n")
    o.close()

def WriteTmpInfo(msg):
    # Append one key=value line to the temporary result file.
    o = open("/tmp/webcdnqos_result"+".txt","a")
    o.write(msg+"\n")
    o.close()

def WriteURLInfo(msg):
    # Append raw text to today's URL log.
    today = datetime.date.today()
    o = open("/tmp/webcdnqos_url_%s" % today.strftime('%Y-%m-%d') + ".log","a")
    o.write(msg)
    o.close()

if __name__ == "__main__":
    if len(sys.argv) < 2:
        show_usage()
        os.popen("rm -f /tmp/exist.txt")
        sys.exit(1)
    else:
        # /tmp/exist.txt serves as a lock file so that only one instance runs at a time.
        if os.path.isfile("/tmp/exist.txt"):
            sys.exit(1)
        else:
            os.popen("echo 'hello' > /tmp/exist.txt")
            result_key = sys.argv[1]
            status = result_dic()
            # Truncate the temporary result file before writing the new values.
            os.popen(">/tmp/webcdnqos_result.txt")
            print(status[result_key])
            Writelog(str(status[result_key]))
            for i in status.keys():
                WriteTmpInfo(str(i)+"="+str(status[i]))
            os.popen("rm -f /tmp/exist.txt")
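The check-then-create handling of `/tmp/exist.txt` leaves a small window in which two instances can both pass the check, and the lock file stays behind if the script dies before removing it. One possible alternative, offered only as a sketch, creates the lock atomically with `os.open` and `O_EXCL` and removes it in a `finally` block. The `single_instance` helper and the temporary lock path are hypothetical names for this example, not part of the original script.

```python
import contextlib
import errno
import os
import tempfile


@contextlib.contextmanager
def single_instance(lock_path):
    """Yield True if this process created the lock file, False if it already existed."""
    try:
        # O_CREAT | O_EXCL fails with EEXIST when the file is already there,
        # so checking and creating happen in one atomic step.
        fd = os.open(lock_path, os.O_CREAT | os.O_EXCL | os.O_WRONLY)
    except OSError as e:
        if e.errno != errno.EEXIST:
            raise
        yield False
        return
    try:
        os.close(fd)
        yield True
    finally:
        # Runs even if the body raises, so the lock is not left behind.
        os.remove(lock_path)


# Demonstration in a throwaway directory instead of /tmp/exist.txt.
lock_path = os.path.join(tempfile.mkdtemp(), "exist.txt")
with single_instance(lock_path) as acquired:
    # A second attempt while the lock is held is refused.
    with single_instance(lock_path) as second:
        nested_result = second

print(acquired, nested_result, os.path.exists(lock_path))
```

A lock left by a process that was killed outright would still need manual cleanup; handling that case, for example by storing a PID in the file, is beyond this sketch.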