BP Neural Networks: Principles and a Hands-On C++ Implementation

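A while ago I built a small digit-recognition system based on the BP (backpropagation) neural network algorithm, with an MFC user interface. While implementing it I also looked at some existing source code. On the whole its portability was poor: most of it mixed the UI code with the core algorithm, which makes the code hard to read and, more importantly, makes the core algorithm impossible to reuse. Design patterns, oh, how important design patterns are! So I implemented the core of the BP neural network in standard C++, which guarantees portability; on top of that core, an interactive front end can be built quickly with different GUI libraries (MFC, Qt). Below I explain the principles of the BP algorithm (see 《数字图像处理与机器视觉》 (*Digital Image Processing and Machine Vision*), a book well suited to engineers) alongside the code, and finally give an example of building the interactive part on top of the core algorithm.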

Theoretical Foundations of Artificial Neural Networks

1. Perceptron

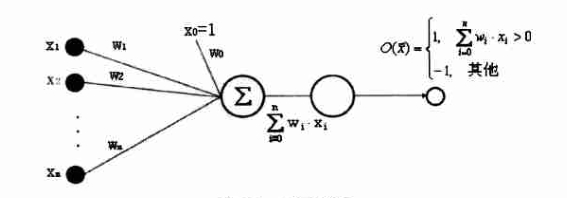

A perceptron is an artificial neuron with a simple two-valued output (1 or -1), as shown in the figure.
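A minimal sketch of the perceptron's output rule, assuming the usual formulation in which the threshold is folded into a bias weight w0. The function name `PerceptronOutput` is our own illustration, not code from the project.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Perceptron: o(x) = 1 if w0 + sum_i w_i * x_i > 0, otherwise -1.
// w0 is the threshold (bias) weight; w and x must have the same length.
int PerceptronOutput(const std::vector<double>& w, double w0, const std::vector<double>& x) {
    assert(w.size() == x.size());
    double net = w0;
    for (std::size_t i = 0; i < x.size(); ++i) {
        net += w[i] * x[i];  // weighted sum of the inputs
    }
    return net > 0 ? 1 : -1;  // hard threshold: only two possible outputs
}
```

For example, with w = {1, 1} and w0 = -1.5 the perceptron computes logical AND on 0/1 inputs: only (1, 1) yields 1.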

2. Linear Unit

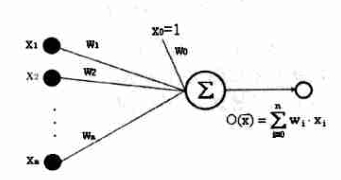

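Having only the two outputs 1 and -1 limits what a perceptron can process and classify. A simple generalization is the perceptron without a threshold, i.e. the linear unit, shown in the figure.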

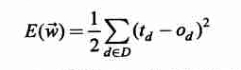
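In formula form, assuming the common convention that x_0 = 1 so that w_0 plays the role of the threshold, the linear unit simply drops the sign function that the perceptron applies to the same weighted sum:

$$
o(\vec{x}) = \vec{w}\cdot\vec{x} = \sum_{i=0}^{n} w_i x_i, \qquad \text{whereas the perceptron outputs } \operatorname{sgn}(\vec{w}\cdot\vec{x})
$$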

3. Error Criterion

We use a common error measure, the squared-error criterion, given below.

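Here D is the set of training examples, t_d is the target output for training example d, and o_d is the actual output of the unit for example d. For a linear unit, E is a quadratic and therefore convex function of w (anyone with a math background is happy to hear "convex"!), so it has a single global minimum; for the multilayer networks later on this no longer holds, but in both cases we can find the parameters w by gradient descent.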

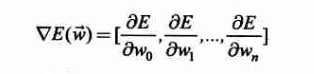

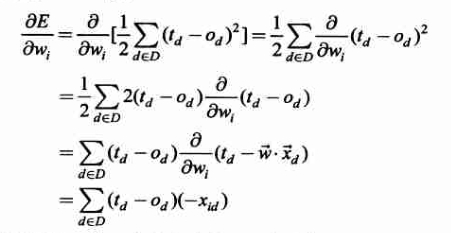
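Written out with the symbols just defined, the squared-error criterion takes the standard form below; the factor 1/2 is the usual convention and only serves to cancel the 2 produced by differentiation:

$$
E(\vec{w}) \equiv \frac{1}{2}\sum_{d\in D}\left(t_d - o_d\right)^2
$$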

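4. Deriving Gradient Descent

In calculus, the gradient is a vector pointing in the direction of the largest directional derivative, i.e. the direction in which the function increases fastest. Since we want to minimize E, we update the parameters w along the negative gradient so that E decreases as quickly as possible. The gradient descent algorithm proceeds roughly as follows:

1) Initialize the parameters w (randomly or by some other method).

2) Compute the gradient.

3) Update w along the negative gradient, scaled by a learning rate that sets the size of each step.

4) Repeat 2) and 3) until a stopping condition is met (a maximum number of iterations, or the decrease in E falling below a threshold).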

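The gradient is the vector of partial derivatives of E with respect to each weight w_i.

How do we compute it? It is a matter of differentiating a composite function with the chain rule: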

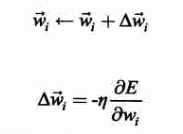
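For reference, this is the standard derivation for a linear unit with o_d = w·x_d and the squared error E, where x_id is the i-th input component of training example d. The last line is the resulting update rule:

$$
\nabla E(\vec{w}) \equiv \left[\frac{\partial E}{\partial w_0}, \frac{\partial E}{\partial w_1}, \ldots, \frac{\partial E}{\partial w_n}\right]
$$

$$
\frac{\partial E}{\partial w_i} = \frac{\partial}{\partial w_i}\,\frac{1}{2}\sum_{d\in D}(t_d - o_d)^2 = \sum_{d\in D}(t_d - o_d)\,\frac{\partial}{\partial w_i}\left(t_d - \vec{w}\cdot\vec{x}_d\right) = \sum_{d\in D}(t_d - o_d)(-x_{id})
$$

$$
\Delta w_i = -\eta\,\frac{\partial E}{\partial w_i} = \eta\sum_{d\in D}(t_d - o_d)\,x_{id}
$$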

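Here x_id is the input component x_i of the d-th training example. The update rule for w during training is therefore as follows (with a learning rate added, i.e. the step size of each descent):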

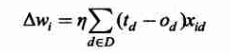

So the update increment for the parameter w_i is:

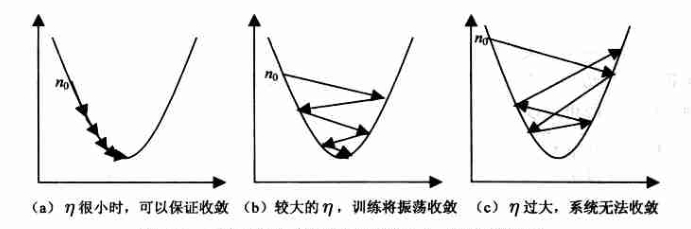
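The batch update rule above can be sketched in C++ as follows. This is a minimal illustration for a single linear unit, assuming each input vector carries a leading 1 as its bias component; `BatchGradientStep` and `SquaredError` are hypothetical names, not part of the project's code.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// One step of batch gradient descent for a linear unit o = w . x.
// X[d] is the d-th training input (X[d][0] == 1 is the bias input), t[d] its target.
// Implements delta_w_i = eta * sum_d (t_d - o_d) * x_id, then w_i += delta_w_i.
void BatchGradientStep(std::vector<double>& w, const std::vector<std::vector<double>>& X,
                       const std::vector<double>& t, double eta) {
    assert(X.size() == t.size());
    std::vector<double> delta(w.size(), 0.0);
    for (std::size_t d = 0; d < X.size(); ++d) {
        double o = 0.0;
        for (std::size_t i = 0; i < w.size(); ++i) o += w[i] * X[d][i];  // linear output
        for (std::size_t i = 0; i < w.size(); ++i) delta[i] += eta * (t[d] - o) * X[d][i];
    }
    for (std::size_t i = 0; i < w.size(); ++i) w[i] += delta[i];  // update only after the whole set
}

// Squared error E(w) = 1/2 * sum_d (t_d - o_d)^2.
double SquaredError(const std::vector<double>& w, const std::vector<std::vector<double>>& X,
                    const std::vector<double>& t) {
    double e = 0.0;
    for (std::size_t d = 0; d < X.size(); ++d) {
        double o = 0.0;
        for (std::size_t i = 0; i < w.size(); ++i) o += w[i] * X[d][i];
        e += 0.5 * (t[d] - o) * (t[d] - o);
    }
    return e;
}
```

Fitting the three points (0, 1), (1, 3), (2, 5), i.e. t = 1 + 2x, with eta = 0.05 and enough steps drives w towards {1, 2} and E towards 0.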

As for choosing the learning rate, a sufficiently small value generally guarantees convergence, as the figure illustrates.
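A toy one-dimensional illustration of why a small learning rate is the safe choice (our own example, not from the book): minimizing E(w) = w² by gradient descent multiplies w by (1 - 2η) at every step, so the iteration converges only for 0 < η < 1. `DescendQuadratic` is a hypothetical name.

```cpp
#include <cassert>

// Gradient descent on E(w) = w^2 (gradient 2w), starting from w = 1.
// Each step computes w <- w - eta * 2w = (1 - 2*eta) * w, so it converges
// only when |1 - 2*eta| < 1; larger steps overshoot the minimum and diverge.
double DescendQuadratic(double eta, int nSteps) {
    assert(nSteps >= 0);
    double w = 1.0;
    for (int n = 0; n < nSteps; ++n) {
        w -= eta * 2.0 * w;  // step against the gradient
    }
    return w;
}
```

With η = 0.1 each step shrinks w by a factor 0.8, while with η = 1.1 each step multiplies it by -1.2, so |w| grows without bound.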

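5. Incremental Gradient Descent

The gradient descent algorithm in 4 has drawbacks: convergence can sometimes be slow, and if the error surface has several local minima, the algorithm is not guaranteed to find the global minimum. Incremental gradient descent was proposed to alleviate these problems. It differs from the gradient descent in 4 as follows: in 4, each update of w is computed from the error over all examples in the training set, whereas incremental gradient descent computes the update of w from the error of a single training example at a time.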

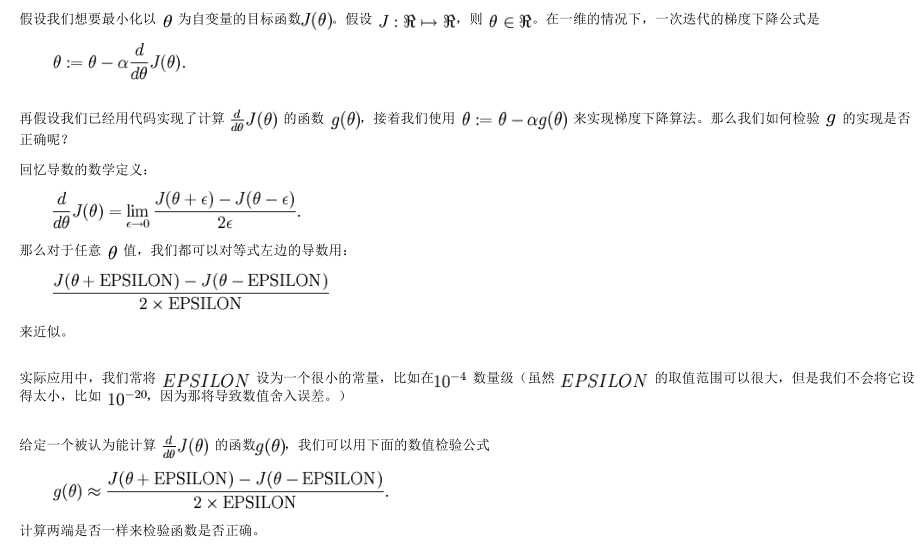
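For comparison with the batch version, here is a minimal sketch of one epoch of incremental gradient descent for the same linear unit (again assuming a leading bias input of 1; `IncrementalEpoch` is a hypothetical name). The only change is that w is updated immediately after each example instead of after the whole set.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// One epoch of incremental (stochastic) gradient descent for a linear unit o = w . x.
// w is updated right after each example d: w_i += eta * (t_d - o_d) * x_id.
void IncrementalEpoch(std::vector<double>& w, const std::vector<std::vector<double>>& X,
                      const std::vector<double>& t, double eta) {
    assert(X.size() == t.size());
    for (std::size_t d = 0; d < X.size(); ++d) {
        double o = 0.0;
        for (std::size_t i = 0; i < w.size(); ++i) o += w[i] * X[d][i];  // output with the current w
        for (std::size_t i = 0; i < w.size(); ++i) w[i] += eta * (t[d] - o) * X[d][i];
    }
}
```

This per-example update is also the scheme that `CNeuralNet::TrainingEpoch` below uses for the full network.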

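6. Gradient Checking

This is a very practical topic: how can you be sure your code is correct? It is easy to make mistakes when computing gradients. I made one myself; sigh, it took me two days of debugging to find it: a wrong array index. If only I had read Stanford's deep learning tutorial earlier! Only part of it is excerpted here as a screenshot; it is well worth reading carefully when you have time.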
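A minimal sketch of the central-difference gradient check that such tutorials describe: perturb each weight by ±eps, estimate the partial derivative numerically, and compare it with the analytic gradient. `GradientCheck` and its default eps are our own choices for illustration.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <functional>
#include <vector>

// Compares an analytic gradient with the central difference
// (E(w + eps*e_i) - E(w - eps*e_i)) / (2*eps) for every component i.
// Returns the largest absolute difference; a correct gradient gives a value close to 0.
double GradientCheck(const std::function<double(const std::vector<double>&)>& E,
                     const std::vector<double>& analyticGrad, std::vector<double> w,
                     double eps = 1e-4) {
    assert(analyticGrad.size() == w.size());
    double maxDiff = 0.0;
    for (std::size_t i = 0; i < w.size(); ++i) {
        const double saved = w[i];
        w[i] = saved + eps;
        const double ePlus = E(w);
        w[i] = saved - eps;
        const double eMinus = E(w);
        w[i] = saved;  // restore before checking the next component
        const double numeric = (ePlus - eMinus) / (2.0 * eps);
        maxDiff = std::max(maxDiff, std::fabs(numeric - analyticGrad[i]));
    }
    return maxDiff;
}
```

For a single example with x = (1, 2), t = 3 and w = (0.5, -0.25), the unit outputs o = 0, so the analytic gradient -(t - o)·x is (-3, -6); the check passes for that gradient and clearly fails if, say, the sign is wrong.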

Multilayer Neural Networks

With this foundation in place, we can now get practical and build a multilayer neural network.

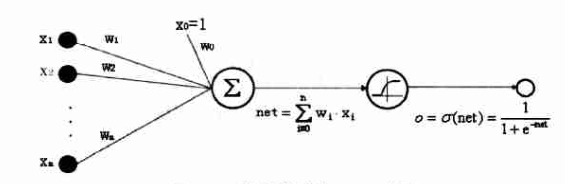

1. Sigmoid Neuron

A sigmoid neuron can be represented as shown in the figure:

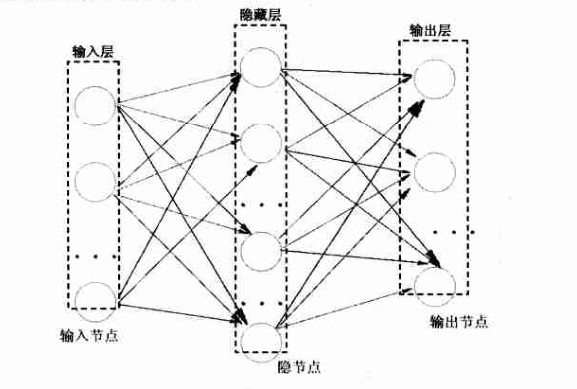
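The sigmoid and its derivative in C++, as a small sketch (free functions of our own; the class below defines its own `Sigmoid` member with a steepness parameter `response`, which equals 1 here). The derivative can be expressed through the output itself, σ'(y) = σ(y)(1 - σ(y)), which is why the training code only needs o·(1 - o).

```cpp
#include <cassert>
#include <cmath>

// Sigmoid activation: sigma(y) = 1 / (1 + e^(-y)).
double Sigmoid(double y) { return 1.0 / (1.0 + std::exp(-y)); }

// Derivative written in terms of the output: sigma'(y) = sigma(y) * (1 - sigma(y)).
double SigmoidDerivative(double y) {
    const double s = Sigmoid(y);
    return s * (1.0 - s);
}
```

At y = 0 the sigmoid is 0.5 and its slope is 0.25; elsewhere the identity can be confirmed against a central difference.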

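2. Neural Network Layers

A three-layer BP neural network can be represented as shown in the figure.

3. Standard C++ Definitions of the Neuron and the Network Layer

As the schematic of the three-layer BP network in 2 shows, the hidden layer and the output layer have the same structure. The neuron and the network layer are defined as follows: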

```cpp
////////////////////////////////////////////////////////
// Neuron.h
#ifndef __SNEURON_H__
#define __SNEURON_H__

#include <stddef.h>  // NULL

#define NEED_MOMENTUM   // momentum is enabled; comment out this line to train without it
#define MOMENTUM 0.6    // momentum coefficient, used only when NEED_MOMENTUM is defined

typedef double WEIGHT_TYPE;  // data type of the weights

struct SNeuron {  // a single neuron
    /****** Data ******/
    int m_nInput;                // number of inputs, including the extra bias input
    WEIGHT_TYPE* m_pWeights;     // one weight per input; the last one is the bias weight
#ifdef NEED_MOMENTUM
    WEIGHT_TYPE* m_pPrevUpdate;  // previous update of each weight, needed for momentum
#endif
    double m_dActivation;        // output value, after the sigmoid function
    double m_dError;             // error term of the neuron

    /****** Functions ******/
    SNeuron() {  // start empty so the destructor is safe even if Init() is never called
        m_nInput = 0;
        m_pWeights = NULL;
#ifdef NEED_MOMENTUM
        m_pPrevUpdate = NULL;
#endif
        m_dActivation = 0;
        m_dError = 0;
    }

    void Init(int nInput) {
        m_nInput = nInput + 1;                      // actual inputs plus 1 for the bias term
        m_pWeights = new WEIGHT_TYPE[m_nInput];     // allocate the weights array
#ifdef NEED_MOMENTUM
        m_pPrevUpdate = new WEIGHT_TYPE[m_nInput];  // allocate the previous-update array
#endif
        m_dActivation = 0;
        m_dError = 0;
    }

    ~SNeuron() {  // release memory
        delete[] m_pWeights;
#ifdef NEED_MOMENTUM
        delete[] m_pPrevUpdate;
#endif
    }
};  // SNeuron

struct SNeuronLayer {  // a layer of neurons
    /****** Data ******/
    int m_nNeuron;        // number of neurons in this layer
    SNeuron* m_pNeurons;  // neurons array

    /****** Functions ******/
    SNeuronLayer(int nNeuron, int nInputsPerNeuron) {
        m_nNeuron = nNeuron;
        m_pNeurons = new SNeuron[nNeuron];         // allocate nNeuron neurons
        for (int i = 0; i < nNeuron; i++) {
            m_pNeurons[i].Init(nInputsPerNeuron);  // each neuron gets nInputsPerNeuron + 1 weights
        }
    }

    ~SNeuronLayer() {
        delete[] m_pNeurons;  // release the neurons array
    }
};  // SNeuronLayer

#endif  // __SNEURON_H__
```

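The code defines NEED_MOMENTUM, which is meant to help with the local-minimum problem: with momentum, each weight update also depends on the previous weight update. Another way to deal with local minima is to initialize w with random numbers close to 0.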
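For reference, the common textbook form of the momentum rule is shown below, where n is the iteration count, η the learning rate, δ_j the error term of unit j, x_ji its i-th input (notation of the BP derivation that follows), and α the momentum coefficient (MOMENTUM = 0.6 in the code above). Δw_ji(n-1) denotes the entire previous update, including its own momentum part:

$$
\Delta w_{ji}(n) = \eta\,\delta_j\,x_{ji} + \alpha\,\Delta w_{ji}(n-1), \qquad 0 \le \alpha < 1
$$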

4. The Backpropagation (BP) Algorithm

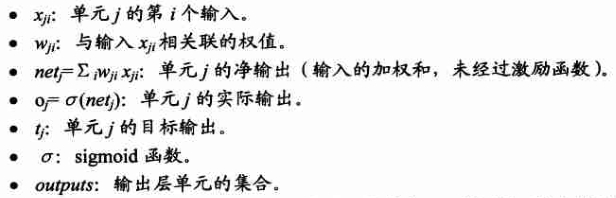

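We now have definitions for neurons and network layers, but before building a three-layer BP network we need to understand how the BP algorithm learns the network's parameters. It still uses gradient descent, but the output is now that of the whole network rather than of a single unit, so the error function E is redefined as follows: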

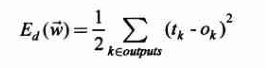

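where outputs is the set of output-layer units of the network, and t_kd and o_kd are the target and actual output values of the k-th output unit for the d-th training example.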

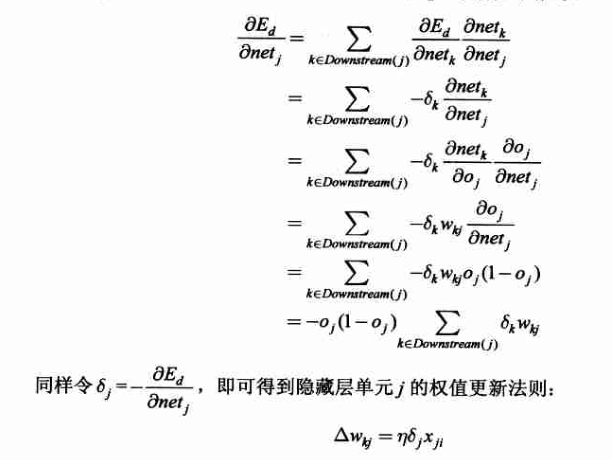
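With the symbols just defined, the redefined network error reads:

$$
E(\vec{w}) \equiv \frac{1}{2}\sum_{d\in D}\;\sum_{k\in outputs}\left(t_{kd} - o_{kd}\right)^2
$$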

1) Deriving the BP algorithm

First, we introduce the following notation:

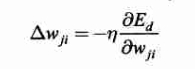

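In incremental gradient descent, for the d-th training example each input weight w_ji is updated against the gradient of E_d, the error on that single example.

E_d is obtained by summing the squared errors over all units of the output layer.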
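Written out in the standard notation (assumed here, since the symbol table above is only available as a figure: x_ji is the i-th input to unit j, w_ji the weight on it, t_k and o_k the target and actual outputs of output unit k), the update and the per-example error are:

$$
\Delta w_{ji} = -\eta\,\frac{\partial E_d}{\partial w_{ji}}, \qquad E_d(\vec{w}) \equiv \frac{1}{2}\sum_{k\in outputs}\left(t_k - o_k\right)^2
$$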

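A note here: all units of the output layer together represent the target value of one training example. Suppose there are 5 kinds of observations, i.e. 5 classes; then the class labels of the training data are encoded as 1,0,0,0,0; 0,1,0,0,0; 0,0,1,0,0; 0,0,0,1,0; 0,0,0,0,1. When training the network, the target outputs are represented in the same way. Of course, because a sigmoid neuron only approaches 1 asymptotically, the 1 in this encoding can also be replaced by 0.9 or a similar value.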
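A small helper that builds such a target vector, as a sketch. `MakeTarget` and its `hi`/`lo` parameters are our own; the 0.9 variant follows the text, while pairing it with 0 or 0.1 for the other units is a free choice. Vectors built this way are what would go into `SetOut` when training.

```cpp
#include <cassert>
#include <vector>

// Target vector for class `label` out of nClasses output units.
// hi = 1, lo = 0 gives the plain 1,0,0,... encoding; a softer hi such as 0.9
// avoids asking a sigmoid unit for a value it can only reach asymptotically.
std::vector<double> MakeTarget(int label, int nClasses, double hi = 1.0, double lo = 0.0) {
    assert(label >= 0 && label < nClasses);
    std::vector<double> target(nClasses, lo);
    target[label] = hi;
    return target;
}
```

For the 5-class example above, `MakeTarget(2, 5)` yields 0,0,1,0,0.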

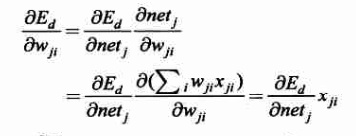

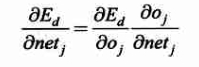

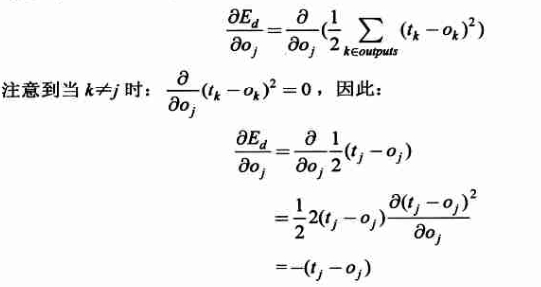

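Now we compute the gradient, layer by layer (first the output layer, then the hidden layer). The elements of the gradient vector are derived as follows:

1) When unit j is an output unit, applying the chain rule gives: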

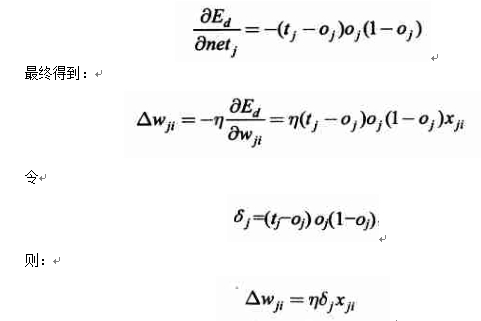
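Spelled out, assuming the standard notation net_j = Σ_i w_ji x_ji for the weighted input of unit j and o_j = σ(net_j), the output-unit case is below. The error term δ_j is exactly the `err` that `TrainingEpoch` computes for the output layer, (t - o)·o·(1 - o):

$$
\frac{\partial E_d}{\partial w_{ji}} = \frac{\partial E_d}{\partial net_j}\,x_{ji}, \qquad \frac{\partial E_d}{\partial net_j} = \frac{\partial E_d}{\partial o_j}\,\frac{\partial o_j}{\partial net_j} = -(t_j - o_j)\,o_j(1 - o_j)
$$

$$
\Delta w_{ji} = \eta\,\delta_j\,x_{ji}, \qquad \delta_j = (t_j - o_j)\,o_j(1 - o_j)
$$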

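2) When unit j is a hidden unit, it has no target value of its own; its error term is obtained by propagating the error terms of the output units it feeds back through the connecting weights.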
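With the same assumed notation, and Downstream(j) denoting the output units that receive the output of hidden unit j, the standard result is below; it matches the hidden-layer loop in `TrainingEpoch`, which sums δ_k·w_kj over the output units and multiplies by o_j(1 - o_j):

$$
\frac{\partial E_d}{\partial net_j} = \sum_{k\in Downstream(j)} \frac{\partial E_d}{\partial net_k}\,\frac{\partial net_k}{\partial o_j}\,\frac{\partial o_j}{\partial net_j} = \sum_{k\in Downstream(j)} -\delta_k\,w_{kj}\,o_j(1 - o_j)
$$

$$
\delta_j = o_j(1 - o_j)\sum_{k\in Downstream(j)} \delta_k\,w_{kj}, \qquad \Delta w_{ji} = \eta\,\delta_j\,x_{ji}
$$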

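5. Building a Three-Layer BP Network in Standard C++

The network provides two important interfaces. TrainingEpoch runs one training epoch and can be called by higher-level code to build a training function. CalculateOutput computes the network's output for a given input; it is called inside each training epoch and, more importantly, by higher-level code to build a recognition function.

Header file: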

```cpp
// NeuralNet.h: interface for the CNeuralNet class.
//
//////////////////////////////////////////////////////////////////////
#ifndef __NEURALNET_H__
#define __NEURALNET_H__

#include <vector>
#include <math.h>
#include <stdlib.h>  // rand, RAND_MAX
#include "Neuron.h"

using namespace std;

typedef vector<double> iovector;

#define BIAS 1  // constant input multiplied by the bias weight

/************* Random functions for initializing weights *************/
#define WEIGHT_FACTOR 0.1  // confines the initial weights to (-0.1, 0.1)

/* Return a random double in [0, 1) */
inline double RandFloat() { return rand() / (RAND_MAX + 1.0); }

/* Return a random double in (-WEIGHT_FACTOR, WEIGHT_FACTOR) */
inline double RandomClamped() { return WEIGHT_FACTOR * (RandFloat() - RandFloat()); }

class CNeuralNet {
private:
    /* Initial parameters; they do not change during training. */
    int m_nInput;          // number of inputs
    int m_nOutput;         // number of outputs
    int m_nNeuronsPerLyr;  // number of units in the hidden layer
    int m_nHiddenLayer;    // number of hidden layers, not counting the output layer

    /*** Dynamic parameters ***/
    double m_dErrorSum;          // sum of squared errors over one epoch
    SNeuronLayer* m_pHiddenLyr;  // hidden layer
    SNeuronLayer* m_pOutLyr;     // output layer

    /* Not copyable: the layers are owned through raw pointers. */
    CNeuralNet(const CNeuralNet&);
    CNeuralNet& operator=(const CNeuralNet&);

public:
    /* Constructor and destructor. */
    CNeuralNet(int nInput, int nOutput, int nNeuronsPerLyr, int nHiddenLayer);
    ~CNeuralNet();

    /* Compute the output of the network (feed-forward). */
    bool CalculateOutput(vector<double> input, vector<double>& output);

    /* Train one epoch (backward adjustment of the weights). */
    bool TrainingEpoch(vector<iovector>& SetIn, vector<iovector>& SetOut, double LearningRate);

    /* Get the error sum of the last epoch. */
    double GetErrorSum() { return m_dErrorSum; }
    SNeuronLayer* GetHiddenLyr() { return m_pHiddenLyr; }
    SNeuronLayer* GetOutLyr() { return m_pOutLyr; }

private:
    /* Build the network: allocate memory for each layer. */
    void CreateNetwork();

    /* Initialize the network's weights. */
    void InitializeNetwork();

    /* Sigmoid activation function. */
    double Sigmoid(double netinput) {
        double response = 1.0;  // controls the steepness of the sigmoid
        return 1 / (1 + exp(-netinput / response));
    }
};

#endif  // __NEURALNET_H__
```

Implementation file:

```cpp
// NeuralNet.cpp: implementation of the CNeuralNet class.
//
//////////////////////////////////////////////////////////////////////
#include "stdafx.h"  // MFC precompiled header; remove when building outside an MFC project
#include "NeuralNet.h"
#include <assert.h>

CNeuralNet::CNeuralNet(int nInput, int nOutput, int nNeuronsPerLyr, int nHiddenLayer) {
    assert(nInput > 0 && nOutput > 0 && nNeuronsPerLyr > 0 && nHiddenLayer > 0);
    m_nInput = nInput;
    m_nOutput = nOutput;
    m_nNeuronsPerLyr = nNeuronsPerLyr;
    m_nHiddenLayer = 1;  // only one hidden layer is supported for now
    m_pHiddenLyr = NULL;
    m_pOutLyr = NULL;
    CreateNetwork();      // allocate each layer
    InitializeNetwork();  // initialize the whole network
}

CNeuralNet::~CNeuralNet() {
    delete m_pHiddenLyr;  // deleting NULL is a no-op
    delete m_pOutLyr;
}

void CNeuralNet::CreateNetwork() {
    m_pHiddenLyr = new SNeuronLayer(m_nNeuronsPerLyr, m_nInput);
    m_pOutLyr = new SNeuronLayer(m_nOutput, m_nNeuronsPerLyr);
}

void CNeuralNet::InitializeNetwork() {
    int i, j;
    /* Seeding with the current time gives a different random sequence on every run
       (requires <time.h>). */
    //srand((unsigned)time(NULL));

    /* initialize the hidden layer's weights */
    for (i = 0; i < m_pHiddenLyr->m_nNeuron; i++) {
        for (j = 0; j < m_pHiddenLyr->m_pNeurons[i].m_nInput; j++) {
            m_pHiddenLyr->m_pNeurons[i].m_pWeights[j] = RandomClamped();
#ifdef NEED_MOMENTUM
            m_pHiddenLyr->m_pNeurons[i].m_pPrevUpdate[j] = 0;  // no previous update before the first epoch
#endif
        }
    }
    /* initialize the output layer's weights */
    for (i = 0; i < m_pOutLyr->m_nNeuron; i++) {
        for (j = 0; j < m_pOutLyr->m_pNeurons[i].m_nInput; j++) {
            m_pOutLyr->m_pNeurons[i].m_pWeights[j] = RandomClamped();
#ifdef NEED_MOMENTUM
            m_pOutLyr->m_pNeurons[i].m_pPrevUpdate[j] = 0;
#endif
        }
    }
    m_dErrorSum = 9999.0;  // start with a large training error; it decreases during training
}

bool CNeuralNet::CalculateOutput(vector<double> input, vector<double>& output) {
    if ((int)input.size() != m_nInput) {  // feature dimension must equal the network's input count
        return false;
    }
    int i, j;
    double dInputSum;  // weighted sum of the inputs
    output.clear();    // outputs are appended below, so start from an empty vector

    /* compute the hidden layer's output */
    for (i = 0; i < m_pHiddenLyr->m_nNeuron; i++) {
        SNeuron& neuron = m_pHiddenLyr->m_pNeurons[i];
        dInputSum = 0;
        for (j = 0; j < neuron.m_nInput - 1; j++) {
            dInputSum += neuron.m_pWeights[j] * input[j];
        }
        dInputSum += neuron.m_pWeights[j] * BIAS;   // plus the bias term (j == m_nInput - 1 here)
        neuron.m_dActivation = Sigmoid(dInputSum);  // sigmoid output
    }

    /* compute the output layer's output */
    for (i = 0; i < m_pOutLyr->m_nNeuron; i++) {
        SNeuron& neuron = m_pOutLyr->m_pNeurons[i];
        dInputSum = 0;
        for (j = 0; j < neuron.m_nInput - 1; j++) {
            dInputSum += neuron.m_pWeights[j] * m_pHiddenLyr->m_pNeurons[j].m_dActivation;
        }
        dInputSum += neuron.m_pWeights[j] * BIAS;   // plus the bias term
        neuron.m_dActivation = Sigmoid(dInputSum);
        output.push_back(neuron.m_dActivation);     // save it to the output vector
    }
    return true;
}

/* Apply one update to weight k of a neuron. dStep is the gradient step LearningRate * delta * x.
   With momentum the applied update is dUpdate(n) = dStep + MOMENTUM * dUpdate(n-1),
   and the full dUpdate(n) is remembered for the next step. */
static void UpdateWeight(SNeuron& neuron, int k, double dStep) {
#ifdef NEED_MOMENTUM
    double dUpdate = dStep + MOMENTUM * neuron.m_pPrevUpdate[k];
    neuron.m_pWeights[k] += dUpdate;
    neuron.m_pPrevUpdate[k] = dUpdate;
#else
    neuron.m_pWeights[k] += dStep;
#endif
}

bool CNeuralNet::TrainingEpoch(vector<iovector>& SetIn, vector<iovector>& SetOut, double LearningRate) {
    if (SetIn.size() != SetOut.size()) {
        return false;  // every input vector needs a target vector
    }
    int j, k;
    double err;  // error term (delta)

    /* incremental gradient descent: the weights are updated after each training example */
    m_dErrorSum = 0;  // sum of squared errors over this epoch
    for (size_t i = 0; i < SetIn.size(); i++) {
        iovector vecOutputs;
        /* forward-propagate the inputs through the network */
        if ((int)SetOut[i].size() != m_nOutput || !CalculateOutput(SetIn[i], vecOutputs)) {
            return false;
        }

        /* 1) output layer error terms: delta_k = (t_k - o_k) * o_k * (1 - o_k) */
        for (j = 0; j < m_pOutLyr->m_nNeuron; j++) {
            double diff = SetOut[i][j] - vecOutputs[j];
            m_pOutLyr->m_pNeurons[j].m_dError = diff * vecOutputs[j] * (1 - vecOutputs[j]);
            m_dErrorSum += diff * diff;
        }

        /* 2) hidden layer error terms: delta_h = o_h * (1 - o_h) * sum_k(delta_k * w_kh).
              They must use the output weights BEFORE those weights are updated in step 3. */
        for (j = 0; j < m_pHiddenLyr->m_nNeuron; j++) {
            err = 0;
            for (k = 0; k < m_pOutLyr->m_nNeuron; k++) {
                err += m_pOutLyr->m_pNeurons[k].m_dError * m_pOutLyr->m_pNeurons[k].m_pWeights[j];
            }
            double o = m_pHiddenLyr->m_pNeurons[j].m_dActivation;
            m_pHiddenLyr->m_pNeurons[j].m_dError = err * o * (1 - o);
        }

        /* 3) update the output layer's weights: input k is hidden unit k's output,
              the last weight is the bias weight (input BIAS) */
        for (j = 0; j < m_pOutLyr->m_nNeuron; j++) {
            SNeuron& neuron = m_pOutLyr->m_pNeurons[j];
            for (k = 0; k < neuron.m_nInput; k++) {
                double x = (k < neuron.m_nInput - 1) ? m_pHiddenLyr->m_pNeurons[k].m_dActivation : BIAS;
                UpdateWeight(neuron, k, LearningRate * neuron.m_dError * x);
            }
        }

        /* 4) update the hidden layer's weights: input k is the k-th network input */
        for (j = 0; j < m_pHiddenLyr->m_nNeuron; j++) {
            SNeuron& neuron = m_pHiddenLyr->m_pNeurons[j];
            for (k = 0; k < neuron.m_nInput; k++) {
                double x = (k < neuron.m_nInput - 1) ? SetIn[i][k] : BIAS;
                UpdateWeight(neuron, k, LearningRate * neuron.m_dError * x);
            }
        }
    }  // for one epoch
    return true;
}
```

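6. Building an MVC Framework on the BP Core

At this point the core algorithm is complete. By applying the Strategy design pattern twice more, we can easily build an MVC framework (see also: http://remyspot.blog.51cto.com/8218746/1574484). Below, the Strategy pattern is applied to build a Controller on top of the CNeuralNet class; the Controller is where we may start depending on a specific GUI library. This Controller cannot be used as-is. What you should do is study its code, in particular the implementations of these interfaces:

```cpp
bool Train(vector<iovector>& SetIn, vector<iovector>& SetOut);
bool SaveTrainResultToFile(const char* lpszFileName, bool bCreate);
bool LoadTrainResultFromFile(const char* lpszFileName, DWORD dwStartPos);
int Recognize(CString strPathName, CRect rt, double& dConfidence);
```

then build your own Controller on the core BP algorithm above, and implement your own interactive features on top of that Controller.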

Note that in the Train interface, SetIn holds the feature vectors of the training data and SetOut holds their class encodings.
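The Controller below still calls MFC directly inside Train (AfxMessageBox for errors, a PeekMessage loop to keep the UI responsive). One way to push those calls behind a Strategy, so the same training loop runs under MFC, Qt or no GUI at all, is sketched here. This is our own illustration, not the author's Controller: `IUiStrategy`, `HeadlessUi` and `TrainWithStrategy` are hypothetical names, and `runEpoch` stands in for `CNeuralNet::TrainingEpoch` plus the mean-error computation.

```cpp
#include <cassert>
#include <functional>
#include <string>

// Strategy interface: everything GUI-specific that the training loop needs.
class IUiStrategy {
public:
    virtual ~IUiStrategy() {}
    virtual void ShowMessage(const std::string& msg) = 0;
    // Process pending UI events and report whether the user asked to stop.
    virtual bool StopRequested() = 0;
};

// A headless strategy (batch runs, unit tests): records messages, never stops early.
class HeadlessUi : public IUiStrategy {
public:
    std::string lastMessage;
    void ShowMessage(const std::string& msg) override { lastMessage = msg; }
    bool StopRequested() override { return false; }
};

// GUI-independent training loop. runEpoch trains one epoch, writes its mean error,
// and returns false on failure. Returns the number of epochs run, or -1 on error.
int TrainWithStrategy(IUiStrategy& ui, const std::function<bool(double&)>& runEpoch,
                      int nMaxEpoch, double dMinError) {
    for (int epoch = 1; epoch <= nMaxEpoch; ++epoch) {
        double err = 0.0;
        if (!runEpoch(err)) {
            ui.ShowMessage("Error occurred at training epoch " + std::to_string(epoch));
            return -1;
        }
        if (err < dMinError || ui.StopRequested()) return epoch;
    }
    return nMaxEpoch;
}
```

An MFC strategy would implement ShowMessage with AfxMessageBox and StopRequested with the WaitForIdle loop plus the m_bStop flag; a Qt strategy could use QMessageBox and QCoreApplication::processEvents.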

Header file:

```cpp
#ifndef __OPERATEONNEURALNET_H__
#define __OPERATEONNEURALNET_H__

#include "NeuralNet.h"

#define NEURALNET_VERSION 1  // file-format version; stored in a DWORD, so keep it an integer
#define RESAMPLE_LEN 4       // side length of the pixel block averaged into one feature

class COperateOnNeuralNet {
private:
    /* network */
    CNeuralNet* m_oNetWork;

    /* network parameters */
    int m_nInput;
    int m_nOutput;
    int m_nNeuronsPerLyr;
    int m_nHiddenLayer;

    /* training configuration */
    int m_nMaxEpoch;     // maximum number of training epochs
    double m_dMinError;  // error threshold
    double m_dLearningRate;

    /* dynamic (current) parameters */
    int m_nEpochs;
    double m_dErr;              // mean error of one epoch: m_dErrorSum / (num-of-samples * num-of-outputs)
    bool m_bStop;               // lets the user stop the training
    vector<double> m_vecError;  // training error of each epoch, used to draw the error curve

public:
    COperateOnNeuralNet();
    ~COperateOnNeuralNet();

    void SetNetWorkParameter(int nInput, int nOutput, int nNeuronsPerLyr, int nHiddenLayer);
    bool CreatNetWork();
    void SetTrainConfiguration(int nMaxEpoch, double dMinError, double dLearningRate);
    void SetStopFlag(bool bStop) { m_bStop = bStop; }
    double GetError() { return m_dErr; }
    int GetEpoch() { return m_nEpochs; }
    int GetNumNeuronsPerLyr() { return m_nNeuronsPerLyr; }

    bool Train(vector<iovector>& SetIn, vector<iovector>& SetOut);
    bool SaveTrainResultToFile(const char* lpszFileName, bool bCreate);
    bool LoadTrainResultFromFile(const char* lpszFileName, DWORD dwStartPos);
    int Recognize(CString strPathName, CRect rt, double& dConfidence);
};

/*
 * File header, written when saving and checked when reading a training result.
 */
struct NEURALNET_HEADER {
    DWORD dwVersion;  // version information

    /* initial parameters */
    int m_nInput;          // number of inputs
    int m_nOutput;         // number of outputs
    int m_nNeuronsPerLyr;  // number of units in the hidden layer
    int m_nHiddenLayer;    // number of hidden layers, not counting the output layer

    /* training configuration */
    int m_nMaxEpoch;     // maximum number of training epochs
    double m_dMinError;  // error threshold
    double m_dLearningRate;

    /* dynamic (current) parameters */
    int m_nEpochs;
    double m_dErr;  // mean error of one epoch: m_dErrorSum / (num-of-samples * num-of-outputs)
};

#endif  // __OPERATEONNEURALNET_H__
```

Implementation file:

```cpp
// OperateOnNeuralNet.cpp: implementation of the COperateOnNeuralNet class.
//
//////////////////////////////////////////////////////////////////////
#include "stdafx.h"
#include "MyDigitRec.h"
#include "OperateOnNeuralNet.h"
#include "Img.h"
#include <assert.h>

/*
 * Dispatch pending window messages so the UI stays responsive while waiting.
 */
void WaitForIdle() {
    MSG msg;
    while (::PeekMessage(&msg, NULL, 0, 0, PM_REMOVE)) {
        ::TranslateMessage(&msg);
        ::DispatchMessage(&msg);
    }
}

COperateOnNeuralNet::COperateOnNeuralNet() {
    m_nInput = 0;
    m_nOutput = 0;
    m_nNeuronsPerLyr = 0;
    m_nHiddenLayer = 0;
    m_nMaxEpoch = 0;
    m_dMinError = 0;
    m_dLearningRate = 0;
    m_oNetWork = NULL;
    m_nEpochs = 0;
    m_dErr = 0;
    m_bStop = false;
}

COperateOnNeuralNet::~COperateOnNeuralNet() {
    delete m_oNetWork;  // deleting NULL is a no-op
}

void COperateOnNeuralNet::SetNetWorkParameter(int nInput, int nOutput, int nNeuronsPerLyr, int nHiddenLayer) {
    assert(nInput > 0 && nOutput > 0 && nNeuronsPerLyr > 0 && nHiddenLayer > 0);
    m_nInput = nInput;
    m_nOutput = nOutput;
    m_nNeuronsPerLyr = nNeuronsPerLyr;
    m_nHiddenLayer = nHiddenLayer;
}

bool COperateOnNeuralNet::CreatNetWork() {
    assert(m_nInput > 0 && m_nOutput > 0 && m_nNeuronsPerLyr > 0 && m_nHiddenLayer > 0);
    delete m_oNetWork;  // release a previously created network instead of leaking it
    m_oNetWork = new CNeuralNet(m_nInput, m_nOutput, m_nNeuronsPerLyr, m_nHiddenLayer);
    m_nEpochs = 0;      // a new network starts untrained
    m_vecError.clear();
    return m_oNetWork != NULL;
}

void COperateOnNeuralNet::SetTrainConfiguration(int nMaxEpoch, double dMinError, double dLearningRate) {
    assert(nMaxEpoch > 0 && !(dMinError < 0) && dLearningRate != 0);
    m_nMaxEpoch = nMaxEpoch;
    m_dMinError = dMinError;
    m_dLearningRate = dLearningRate;
}

bool COperateOnNeuralNet::Train(vector<iovector>& SetIn, vector<iovector>& SetOut) {
    if (m_oNetWork == NULL || SetIn.empty()) {
        return false;
    }
    m_bStop = false;  // no stop requested yet
    CString strOutMsg;
    /* Count down with a local copy: decrementing m_nMaxEpoch itself would corrupt the
       configuration that SaveTrainResultToFile writes and LoadTrainResultFromFile checks. */
    int nRemaining = m_nMaxEpoch;
    do {
        /* train one epoch */
        if (!m_oNetWork->TrainingEpoch(SetIn, SetOut, m_dLearningRate)) {
            strOutMsg.Format(_T("Error occurred at training epoch %d!"), m_nEpochs + 1);
            AfxMessageBox(strOutMsg);
            return false;
        }
        m_nEpochs++;

        /* mean error of one epoch: m_dErrorSum / (num-of-samples * num-of-outputs) */
        m_dErr = m_oNetWork->GetErrorSum() / (m_nOutput * (double)SetIn.size());
        m_vecError.push_back(m_dErr);
        if (m_dErr < m_dMinError) {
            break;
        }
        /* process pending messages, mainly so that a user action can set m_bStop */
        WaitForIdle();
        if (m_bStop) {
            break;
        }
    } while (--nRemaining > 0);
    return true;
}

bool COperateOnNeuralNet::SaveTrainResultToFile(const char* lpszFileName, bool bCreate) {
    if (m_oNetWork == NULL) {
        return false;  // nothing to save
    }
    CFile file;
    if (bCreate) {
        if (!file.Open(lpszFileName, CFile::modeWrite | CFile::modeCreate))
            return false;
    } else {
        if (!file.Open(lpszFileName, CFile::modeWrite))
            return false;
        file.SeekToEnd();  // append to the end of the file
    }

    /* network header: initial parameters, training configuration, dynamic parameters */
    NEURALNET_HEADER header = {0};
    header.dwVersion = NEURALNET_VERSION;
    header.m_nInput = m_nInput;
    header.m_nOutput = m_nOutput;
    header.m_nNeuronsPerLyr = m_nNeuronsPerLyr;
    header.m_nHiddenLayer = m_nHiddenLayer;
    header.m_nMaxEpoch = m_nMaxEpoch;
    header.m_dMinError = m_dMinError;
    header.m_dLearningRate = m_dLearningRate;
    header.m_nEpochs = m_nEpochs;
    header.m_dErr = m_dErr;
    file.Write(&header, sizeof(header));

    /* for each neuron of the hidden layer, then of the output layer:
       activation, error, then all weights (bias weight last) */
    SNeuronLayer* layers[2] = { m_oNetWork->GetHiddenLyr(), m_oNetWork->GetOutLyr() };
    for (int l = 0; l < 2; l++) {
        for (int i = 0; i < layers[l]->m_nNeuron; i++) {
            SNeuron& neuron = layers[l]->m_pNeurons[i];
            file.Write(&neuron.m_dActivation, sizeof(neuron.m_dActivation));
            file.Write(&neuron.m_dError, sizeof(neuron.m_dError));
            file.Write(neuron.m_pWeights, (UINT)(neuron.m_nInput * sizeof(WEIGHT_TYPE)));
        }
    }
    file.Close();
    return true;
}

bool COperateOnNeuralNet::LoadTrainResultFromFile(const char* lpszFileName, DWORD dwStartPos) {
    if (m_oNetWork == NULL) {
        return false;  // call SetNetWorkParameter() and CreatNetWork() first
    }
    CFile file;
    if (!file.Open(lpszFileName, CFile::modeRead)) {
        return false;
    }
    file.Seek(dwStartPos, CFile::begin);  // move to dwStartPos

    /* read in the header */
    NEURALNET_HEADER header = {0};
    if (file.Read(&header, sizeof(header)) != sizeof(header)) {
        return false;
    }
    /* check the version */
    if (header.dwVersion != NEURALNET_VERSION) {
        return false;
    }
    /* check the network structure and training configuration */
    if (header.m_nInput != m_nInput
        || header.m_nOutput != m_nOutput
        || header.m_nNeuronsPerLyr != m_nNeuronsPerLyr
        || header.m_nHiddenLayer != m_nHiddenLayer
        || header.m_nMaxEpoch != m_nMaxEpoch
        || header.m_dMinError != m_dMinError
        || header.m_dLearningRate != m_dLearningRate) {
        return false;
    }
    /* dynamic parameters */
    m_nEpochs = header.m_nEpochs;  // update the number of training epochs
    m_dErr = header.m_dErr;        // update the training error

    /* read in the weights, in the same order as SaveTrainResultToFile writes them */
    SNeuronLayer* layers[2] = { m_oNetWork->GetHiddenLyr(), m_oNetWork->GetOutLyr() };
    for (int l = 0; l < 2; l++) {
        for (int i = 0; i < layers[l]->m_nNeuron; i++) {
            SNeuron& neuron = layers[l]->m_pNeurons[i];
            UINT nWeightBytes = (UINT)(neuron.m_nInput * sizeof(WEIGHT_TYPE));
            if (file.Read(&neuron.m_dActivation, sizeof(neuron.m_dActivation)) != sizeof(neuron.m_dActivation)
                || file.Read(&neuron.m_dError, sizeof(neuron.m_dError)) != sizeof(neuron.m_dError)
                || file.Read(neuron.m_pWeights, nWeightBytes) != nWeightBytes) {
                return false;  // the file is truncated
            }
        }
    }
    return true;
}

int COperateOnNeuralNet::Recognize(CString strPathName, CRect rt, double& dConfidence) {
    int nBestMatch;       // category number
    double dMaxOut1 = 0;  // largest output
    double dMaxOut2 = 0;  // second-largest output
    if (m_oNetWork == NULL) {
        return -1;
    }
    CImg gray;
    if (!gray.AttachFromFile(strPathName)) {
        return -1;
    }

    /* convert the picture to be recognized into a feature vector: the average gray level
       of each RESAMPLE_LEN x RESAMPLE_LEN block, scaled to [0, 1] */
    vector<double> vecToRec;
    for (int j = rt.top; j < rt.bottom; j += RESAMPLE_LEN) {
        for (int i = rt.left; i < rt.right; i += RESAMPLE_LEN) {
            int nGray = 0;
            for (int mm = j; mm < j + RESAMPLE_LEN; mm++) {
                for (int nn = i; nn < i + RESAMPLE_LEN; nn++)
                    nGray += gray.GetGray(nn, mm);
            }
            nGray /= RESAMPLE_LEN * RESAMPLE_LEN;
            vecToRec.push_back(nGray / 255.0);
        }
    }

    /* compute the network output */
    vector<double> outputs;
    if (!m_oNetWork->CalculateOutput(vecToRec, outputs)) {
        AfxMessageBox(_T("Recognition failed!"));
        return -1;
    }

    /* the output unit with the largest value gives the category number */
    nBestMatch = 0;
    for (int k = 0; k < (int)outputs.size(); k++) {
        if (outputs[k] > dMaxOut1) {
            dMaxOut2 = dMaxOut1;
            dMaxOut1 = outputs[k];
            nBestMatch = k;
        } else if (outputs[k] > dMaxOut2) {
            dMaxOut2 = outputs[k];  // track the runner-up even when it comes after the maximum
        }
    }
    dConfidence = dMaxOut1 - dMaxOut2;  // confidence: margin between the best and second-best outputs
    return nBestMatch;
}
```
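In the same spirit of keeping the core portable, the feature extraction inside Recognize() can be written without CImg or CRect. A sketch, assuming the image is available as a plain row-major array of gray values from 0 to 255 whose width and height are multiples of the block size; `BlockAverageFeatures` is a hypothetical name. A w × h region produces (w / block) · (h / block) features, which must equal the network's input count.

```cpp
#include <cassert>
#include <vector>

// Average the gray levels of each block x block tile of a width x height image
// (row-major, values 0..255) and scale the result to [0, 1], tile by tile, row by row.
std::vector<double> BlockAverageFeatures(const std::vector<int>& gray, int width, int height, int block) {
    assert(block > 0 && width % block == 0 && height % block == 0);
    assert((int)gray.size() == width * height);
    std::vector<double> features;
    for (int y = 0; y < height; y += block) {
        for (int x = 0; x < width; x += block) {
            int sum = 0;
            for (int yy = y; yy < y + block; ++yy)
                for (int xx = x; xx < x + block; ++xx)
                    sum += gray[yy * width + xx];
            features.push_back((sum / (block * block)) / 255.0);  // integer average, as in Recognize()
        }
    }
    return features;
}
```

For an 8 × 8 image whose left half is white (255) and right half black (0), a block size of 4 gives the four features 1, 0, 1, 0.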

This article is from the "Remys" blog. Reproduction is not permitted.