Caffe Code Walkthrough (5): Testing on a Dataset

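The previous post covered how to prepare a dataset. With the data in place, we first look at how to test a model that has already been trained.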

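We start with the handwritten digit recognition example. The dataset is MNIST, which includes both training and test data. The deep learning model is LeNet, proposed by Professor Yann LeCun (see http://yann.lecun.com/exdb/lenet/ for details).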

If you have already built Caffe, run the following command from CAFFE_ROOT:

```shell
$ ./build/tools/caffe.bin test -model=examples/mnist/lenet_train_test.prototxt -weights=examples/mnist/lenet_iter_10000.caffemodel -gpu=0
```

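This runs the test. The parameters are as follows:

test: tests a trained model rather than training one. The other available actions are train, time, and device_query.

-model=XXX: specifies the model prototxt file, a text file that describes the network structure and the dataset in detail. The prototxt I used is as follows: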

```protobuf
name: "LeNet"
layers {
  name: "mnist"
  type: DATA
  top: "data"
  top: "label"
  data_param {
    source: "examples/mnist/mnist_train_lmdb"
    backend: LMDB
    batch_size: 64
  }
  transform_param {
    scale: 0.00390625
  }
  include: { phase: TRAIN }
}
layers {
  name: "mnist"
  type: DATA
  top: "data"
  top: "label"
  data_param {
    source: "examples/mnist/mnist_test_lmdb"
    backend: LMDB
    batch_size: 100
  }
  transform_param {
    scale: 0.00390625
  }
  include: { phase: TEST }
}
layers {
  name: "conv1"
  type: CONVOLUTION
  bottom: "data"
  top: "conv1"
  blobs_lr: 1
  blobs_lr: 2
  convolution_param {
    num_output: 20
    kernel_size: 5
    stride: 1
    weight_filler {
      type: "xavier"
    }
    bias_filler {
      type: "constant"
    }
  }
}
layers {
  name: "pool1"
  type: POOLING
  bottom: "conv1"
  top: "pool1"
  pooling_param {
    pool: MAX
    kernel_size: 2
    stride: 2
  }
}
layers {
  name: "conv2"
  type: CONVOLUTION
  bottom: "pool1"
  top: "conv2"
  blobs_lr: 1
  blobs_lr: 2
  convolution_param {
    num_output: 50
    kernel_size: 5
    stride: 1
    weight_filler {
      type: "xavier"
    }
    bias_filler {
      type: "constant"
    }
  }
}
layers {
  name: "pool2"
  type: POOLING
  bottom: "conv2"
  top: "pool2"
  pooling_param {
    pool: MAX
    kernel_size: 2
    stride: 2
  }
}
layers {
  name: "ip1"
  type: INNER_PRODUCT
  bottom: "pool2"
  top: "ip1"
  blobs_lr: 1
  blobs_lr: 2
  inner_product_param {
    num_output: 500
    weight_filler {
      type: "xavier"
    }
    bias_filler {
      type: "constant"
    }
  }
}
layers {
  name: "relu1"
  type: RELU
  bottom: "ip1"
  top: "ip1"
}
layers {
  name: "ip2"
  type: INNER_PRODUCT
  bottom: "ip1"
  top: "ip2"
  blobs_lr: 1
  blobs_lr: 2
  inner_product_param {
    num_output: 10
    weight_filler {
      type: "xavier"
    }
    bias_filler {
      type: "constant"
    }
  }
}
layers {
  name: "accuracy"
  type: ACCURACY
  bottom: "ip2"
  bottom: "label"
  top: "accuracy"
  include: { phase: TEST }
}
layers {
  name: "loss"
  type: SOFTMAX_LOSS
  bottom: "ip2"
  bottom: "label"
  top: "loss"
}
```

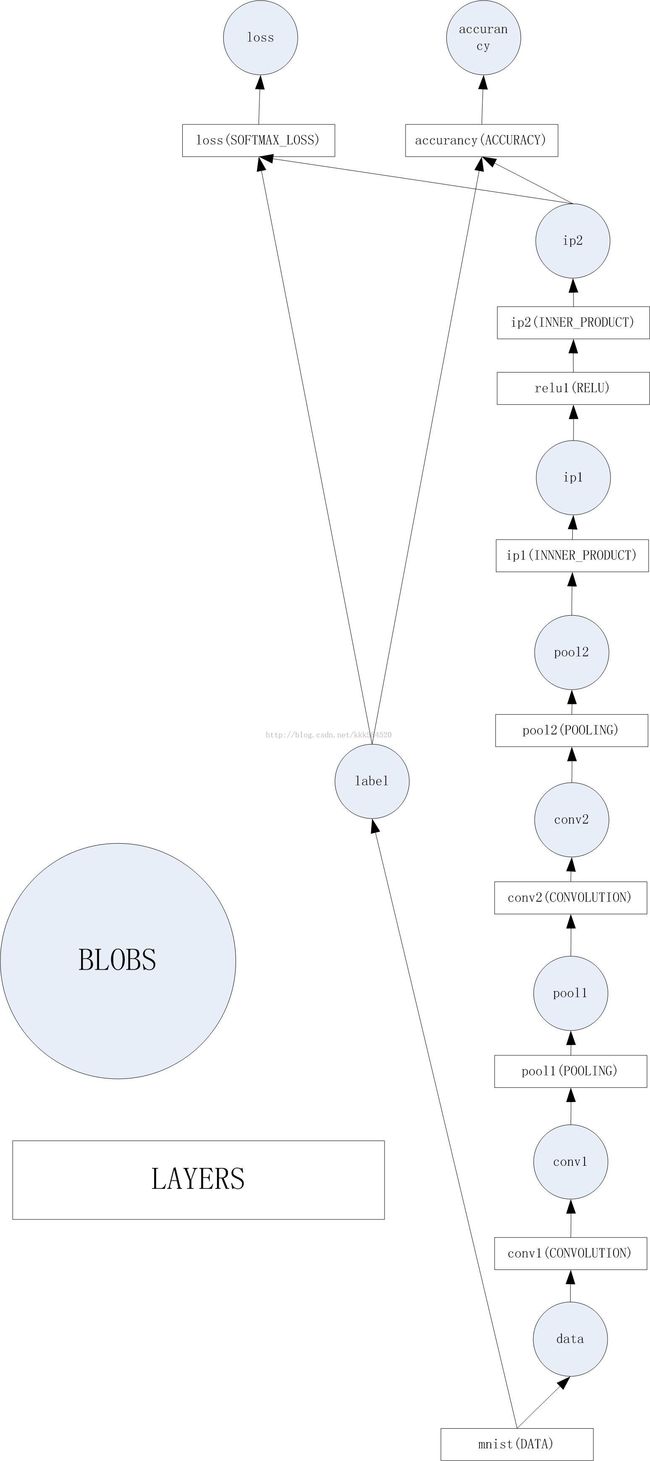

里面定义的网络结构如下图所示:

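-weights=XXX: specifies the trained caffemodel binary file. If you don't have a trained model at hand, you can download this one (http://download.csdn.net/detail/kkk584520/8219443).

-gpu=0: runs on the GPU with device ID 0. If you don't have a GPU, omit this parameter and Caffe runs on the CPU by default.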
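If you test many snapshots, it can help to assemble this command in a script. The Python sketch below is hypothetical: the function names and the default caffe_bin path are my own choices. It uses only the flags discussed above plus -iterations, a caffe.bin flag that sets how many test batches are run (50 by default).

```python
import shlex


def build_test_command(model, weights, gpu=None, iterations=None,
                       caffe_bin="./build/tools/caffe.bin"):
    """Assemble a 'caffe.bin test' invocation as an argument list."""
    cmd = [caffe_bin, "test", f"-model={model}", f"-weights={weights}"]
    if gpu is not None:
        # Leaving -gpu out makes Caffe run on the CPU.
        cmd.append(f"-gpu={gpu}")
    if iterations is not None:
        # Number of test batches; caffe.bin uses 50 when the flag is absent.
        cmd.append(f"-iterations={iterations}")
    return cmd


def as_shell(cmd):
    """Render the argument list as a single shell-quoted command line."""
    return shlex.join(cmd)
```

Passing the resulting list to subprocess.run from CAFFE_ROOT would launch the same test as the command at the top of this post, while as_shell shows the equivalent command line for logging.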

The output is as follows:

```text
I1203 18:47:00.073052 4610 caffe.cpp:134] Use GPU with device ID 0
I1203 18:47:00.367065 4610 net.cpp:275] The NetState phase (1) differed from the phase (0) specified by a rule in layer mnist
I1203 18:47:00.367269 4610 net.cpp:39] Initializing net from parameters:
name: "LeNet"
layers {
  top: "data"
  top: "label"
  name: "mnist"
  type: DATA
  data_param {
    source: "examples/mnist/mnist_test_lmdb"
    batch_size: 100
    backend: LMDB
  }
  include {
    phase: TEST
  }
  transform_param {
    scale: 0.00390625
  }
}
layers {
  bottom: "data"
  top: "conv1"
  name: "conv1"
  type: CONVOLUTION
  blobs_lr: 1
  blobs_lr: 2
  convolution_param {
    num_output: 20
    kernel_size: 5
    stride: 1
    weight_filler {
      type: "xavier"
    }
    bias_filler {
      type: "constant"
    }
  }
}
layers {
  bottom: "conv1"
  top: "pool1"
  name: "pool1"
  type: POOLING
  pooling_param {
    pool: MAX
    kernel_size: 2
    stride: 2
  }
}
layers {
  bottom: "pool1"
  top: "conv2"
  name: "conv2"
  type: CONVOLUTION
  blobs_lr: 1
  blobs_lr: 2
  convolution_param {
    num_output: 50
    kernel_size: 5
    stride: 1
    weight_filler {
      type: "xavier"
    }
    bias_filler {
      type: "constant"
    }
  }
}
layers {
  bottom: "conv2"
  top: "pool2"
  name: "pool2"
  type: POOLING
  pooling_param {
    pool: MAX
    kernel_size: 2
    stride: 2
  }
}
layers {
  bottom: "pool2"
  top: "ip1"
  name: "ip1"
  type: INNER_PRODUCT
  blobs_lr: 1
  blobs_lr: 2
  inner_product_param {
    num_output: 500
    weight_filler {
      type: "xavier"
    }
    bias_filler {
      type: "constant"
    }
  }
}
layers {
  bottom: "ip1"
  top: "ip1"
  name: "relu1"
  type: RELU
}
layers {
  bottom: "ip1"
  top: "ip2"
  name: "ip2"
  type: INNER_PRODUCT
  blobs_lr: 1
  blobs_lr: 2
  inner_product_param {
    num_output: 10
    weight_filler {
      type: "xavier"
    }
    bias_filler {
      type: "constant"
    }
  }
}
layers {
  bottom: "ip2"
  bottom: "label"
  top: "accuracy"
  name: "accuracy"
  type: ACCURACY
  include {
    phase: TEST
  }
}
layers {
  bottom: "ip2"
  bottom: "label"
  top: "loss"
  name: "loss"
  type: SOFTMAX_LOSS
}
I1203 18:47:00.367391 4610 net.cpp:67] Creating Layer mnist
I1203 18:47:00.367409 4610 net.cpp:356] mnist -> data
I1203 18:47:00.367435 4610 net.cpp:356] mnist -> label
I1203 18:47:00.367451 4610 net.cpp:96] Setting up mnist
I1203 18:47:00.367571 4610 data_layer.cpp:68] Opening lmdb examples/mnist/mnist_test_lmdb
I1203 18:47:00.367609 4610 data_layer.cpp:128] output data size: 100,1,28,28
I1203 18:47:00.367832 4610 net.cpp:103] Top shape: 100 1 28 28 (78400)
I1203 18:47:00.367849 4610 net.cpp:103] Top shape: 100 1 1 1 (100)
I1203 18:47:00.367863 4610 net.cpp:67] Creating Layer label_mnist_1_split
I1203 18:47:00.367873 4610 net.cpp:394] label_mnist_1_split <- label
I1203 18:47:00.367892 4610 net.cpp:356] label_mnist_1_split -> label_mnist_1_split_0
I1203 18:47:00.367908 4610 net.cpp:356] label_mnist_1_split -> label_mnist_1_split_1
I1203 18:47:00.367919 4610 net.cpp:96] Setting up label_mnist_1_split
I1203 18:47:00.367929 4610 net.cpp:103] Top shape: 100 1 1 1 (100)
I1203 18:47:00.367938 4610 net.cpp:103] Top shape: 100 1 1 1 (100)
I1203 18:47:00.367950 4610 net.cpp:67] Creating Layer conv1
I1203 18:47:00.367959 4610 net.cpp:394] conv1 <- data
I1203 18:47:00.367969 4610 net.cpp:356] conv1 -> conv1
I1203 18:47:00.367982 4610 net.cpp:96] Setting up conv1
I1203 18:47:00.392133 4610 net.cpp:103] Top shape: 100 20 24 24 (1152000)
I1203 18:47:00.392204 4610 net.cpp:67] Creating Layer pool1
I1203 18:47:00.392217 4610 net.cpp:394] pool1 <- conv1
I1203 18:47:00.392231 4610 net.cpp:356] pool1 -> pool1
I1203 18:47:00.392247 4610 net.cpp:96] Setting up pool1
I1203 18:47:00.392273 4610 net.cpp:103] Top shape: 100 20 12 12 (288000)
I1203 18:47:00.392297 4610 net.cpp:67] Creating Layer conv2
I1203 18:47:00.392307 4610 net.cpp:394] conv2 <- pool1
I1203 18:47:00.392318 4610 net.cpp:356] conv2 -> conv2
I1203 18:47:00.392330 4610 net.cpp:96] Setting up conv2
I1203 18:47:00.392669 4610 net.cpp:103] Top shape: 100 50 8 8 (320000)
I1203 18:47:00.392729 4610 net.cpp:67] Creating Layer pool2
I1203 18:47:00.392756 4610 net.cpp:394] pool2 <- conv2
I1203 18:47:00.392768 4610 net.cpp:356] pool2 -> pool2
I1203 18:47:00.392781 4610 net.cpp:96] Setting up pool2
I1203 18:47:00.392793 4610 net.cpp:103] Top shape: 100 50 4 4 (80000)
I1203 18:47:00.392810 4610 net.cpp:67] Creating Layer ip1
I1203 18:47:00.392819 4610 net.cpp:394] ip1 <- pool2
I1203 18:47:00.392832 4610 net.cpp:356] ip1 -> ip1
I1203 18:47:00.392844 4610 net.cpp:96] Setting up ip1
I1203 18:47:00.397348 4610 net.cpp:103] Top shape: 100 500 1 1 (50000)
I1203 18:47:00.397372 4610 net.cpp:67] Creating Layer relu1
I1203 18:47:00.397382 4610 net.cpp:394] relu1 <- ip1
I1203 18:47:00.397394 4610 net.cpp:345] relu1 -> ip1 (in-place)
I1203 18:47:00.397407 4610 net.cpp:96] Setting up relu1
I1203 18:47:00.397420 4610 net.cpp:103] Top shape: 100 500 1 1 (50000)
I1203 18:47:00.397434 4610 net.cpp:67] Creating Layer ip2
I1203 18:47:00.397442 4610 net.cpp:394] ip2 <- ip1
I1203 18:47:00.397456 4610 net.cpp:356] ip2 -> ip2
I1203 18:47:00.397469 4610 net.cpp:96] Setting up ip2
I1203 18:47:00.397532 4610 net.cpp:103] Top shape: 100 10 1 1 (1000)
I1203 18:47:00.397547 4610 net.cpp:67] Creating Layer ip2_ip2_0_split
I1203 18:47:00.397557 4610 net.cpp:394] ip2_ip2_0_split <- ip2
I1203 18:47:00.397565 4610 net.cpp:356] ip2_ip2_0_split -> ip2_ip2_0_split_0
I1203 18:47:00.397583 4610 net.cpp:356] ip2_ip2_0_split -> ip2_ip2_0_split_1
I1203 18:47:00.397593 4610 net.cpp:96] Setting up ip2_ip2_0_split
I1203 18:47:00.397603 4610 net.cpp:103] Top shape: 100 10 1 1 (1000)
I1203 18:47:00.397611 4610 net.cpp:103] Top shape: 100 10 1 1 (1000)
I1203 18:47:00.397622 4610 net.cpp:67] Creating Layer accuracy
I1203 18:47:00.397631 4610 net.cpp:394] accuracy <- ip2_ip2_0_split_0
I1203 18:47:00.397640 4610 net.cpp:394] accuracy <- label_mnist_1_split_0
I1203 18:47:00.397650 4610 net.cpp:356] accuracy -> accuracy
I1203 18:47:00.397661 4610 net.cpp:96] Setting up accuracy
I1203 18:47:00.397673 4610 net.cpp:103] Top shape: 1 1 1 1 (1)
I1203 18:47:00.397687 4610 net.cpp:67] Creating Layer loss
I1203 18:47:00.397696 4610 net.cpp:394] loss <- ip2_ip2_0_split_1
I1203 18:47:00.397706 4610 net.cpp:394] loss <- label_mnist_1_split_1
I1203 18:47:00.397714 4610 net.cpp:356] loss -> loss
I1203 18:47:00.397725 4610 net.cpp:96] Setting up loss
I1203 18:47:00.397737 4610 net.cpp:103] Top shape: 1 1 1 1 (1)
I1203 18:47:00.397745 4610 net.cpp:109] with loss weight 1
I1203 18:47:00.397776 4610 net.cpp:170] loss needs backward computation.
I1203 18:47:00.397785 4610 net.cpp:172] accuracy does not need backward computation.
I1203 18:47:00.397794 4610 net.cpp:170] ip2_ip2_0_split needs backward computation.
I1203 18:47:00.397801 4610 net.cpp:170] ip2 needs backward computation.
I1203 18:47:00.397809 4610 net.cpp:170] relu1 needs backward computation.
I1203 18:47:00.397816 4610 net.cpp:170] ip1 needs backward computation.
I1203 18:47:00.397825 4610 net.cpp:170] pool2 needs backward computation.
I1203 18:47:00.397832 4610 net.cpp:170] conv2 needs backward computation.
I1203 18:47:00.397843 4610 net.cpp:170] pool1 needs backward computation.
I1203 18:47:00.397851 4610 net.cpp:170] conv1 needs backward computation.
I1203 18:47:00.397860 4610 net.cpp:172] label_mnist_1_split does not need backward computation.
I1203 18:47:00.397867 4610 net.cpp:172] mnist does not need backward computation.
I1203 18:47:00.397874 4610 net.cpp:208] This network produces output accuracy
I1203 18:47:00.397884 4610 net.cpp:208] This network produces output loss
I1203 18:47:00.397905 4610 net.cpp:467] Collecting Learning Rate and Weight Decay.
I1203 18:47:00.397915 4610 net.cpp:219] Network initialization done.
I1203 18:47:00.397923 4610 net.cpp:220] Memory required for data: 8086808
```
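The Top shape lines and the "Memory required for data" figure above can be reproduced by hand from the prototxt. The Python sketch below is my own check, not Caffe code, and the helper names are hypothetical. It assumes no padding (the prototxt sets none), floor rounding for convolution and ceil rounding for pooling, and it treats the memory figure as the element count of every top blob listed in the log (including the automatically inserted split layers and the in-place relu1 top) times 4 bytes per float. Under those assumptions it arrives at the same 8086808 bytes.

```python
import math


def conv_out(size, kernel, stride=1, pad=0):
    """Convolution output size: floor((size + 2*pad - kernel) / stride) + 1."""
    return (size + 2 * pad - kernel) // stride + 1


def pool_out(size, kernel, stride, pad=0):
    """Pooling output size with ceil rounding: ceil((size + 2*pad - kernel) / stride) + 1."""
    return math.ceil((size + 2 * pad - kernel) / stride) + 1


# (name, kind, num_output, kernel_size, stride) transcribed from the prototxt.
LENET = [
    ("conv1", "conv", 20, 5, 1),
    ("pool1", "pool", None, 2, 2),
    ("conv2", "conv", 50, 5, 1),
    ("pool2", "pool", None, 2, 2),
    ("ip1", "ip", 500, None, None),
    ("ip2", "ip", 10, None, None),
]


def walk(batch=100, channels=1, height=28, width=28):
    """Yield (name, top_shape, learnable_param_count) for each layer in order."""
    c, h, w = channels, height, width
    for name, kind, num_out, k, s in LENET:
        if kind == "conv":
            params = num_out * c * k * k + num_out  # weights + one bias per filter
            c, h, w = num_out, conv_out(h, k, s), conv_out(w, k, s)
        elif kind == "pool":
            params = 0  # pooling has no learnable weights
            h, w = pool_out(h, k, s), pool_out(w, k, s)
        else:  # inner product sees all c*h*w inputs and outputs num_out values
            params = num_out * c * h * w + num_out
            c, h, w = num_out, 1, 1
        yield name, (batch, c, h, w), params


def data_memory_bytes(batch=100, bytes_per_value=4):
    """Sum the element counts of every top blob in the test net, times 4 bytes."""
    counts = [batch * 1 * 28 * 28, batch]  # mnist: data and label
    counts += [batch, batch]               # label_mnist_1_split: two copies of label
    for name, shape, _ in walk(batch):
        counts.append(math.prod(shape))
        if name == "ip1":
            counts.append(math.prod(shape))  # relu1 is in-place, but its top is listed too
    counts += [batch * 10, batch * 10]     # ip2_ip2_0_split: two copies of ip2
    counts += [1, 1]                       # accuracy and loss are scalars
    return sum(counts) * bytes_per_value
```

For conv1 it yields (100, 20, 24, 24) and for pool2 (100, 50, 4, 4), matching the log. The learnable parameters total 431,080, of which 400,500 sit in ip1. As a side note, the scale: 0.00390625 in transform_param is exactly 1/256, which maps 8-bit pixel values (0 to 255) into [0, 1).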

```text
I1203 18:47:00.432165 4610 caffe.cpp:145] Running for 50 iterations.
I1203 18:47:00.435849 4610 caffe.cpp:169] Batch 0, accuracy = 0.99
I1203 18:47:00.435879 4610 caffe.cpp:169] Batch 0, loss = 0.018971
I1203 18:47:00.437434 4610 caffe.cpp:169] Batch 1, accuracy = 0.99
I1203 18:47:00.437471 4610 caffe.cpp:169] Batch 1, loss = 0.0117609
I1203 18:47:00.439000 4610 caffe.cpp:169] Batch 2, accuracy = 1
I1203 18:47:00.439020 4610 caffe.cpp:169] Batch 2, loss = 0.00555977
I1203 18:47:00.440551 4610 caffe.cpp:169] Batch 3, accuracy = 0.99
I1203 18:47:00.440575 4610 caffe.cpp:169] Batch 3, loss = 0.0412139
I1203 18:47:00.442105 4610 caffe.cpp:169] Batch 4, accuracy = 0.99
I1203 18:47:00.442126 4610 caffe.cpp:169] Batch 4, loss = 0.0579313
I1203 18:47:00.443619 4610 caffe.cpp:169] Batch 5, accuracy = 0.99
I1203 18:47:00.443639 4610 caffe.cpp:169] Batch 5, loss = 0.0479742
I1203 18:47:00.445159 4610 caffe.cpp:169] Batch 6, accuracy = 0.98
I1203 18:47:00.445179 4610 caffe.cpp:169] Batch 6, loss = 0.0570176
I1203 18:47:00.446712 4610 caffe.cpp:169] Batch 7, accuracy = 0.99
I1203 18:47:00.446732 4610 caffe.cpp:169] Batch 7, loss = 0.0272363
I1203 18:47:00.448249 4610 caffe.cpp:169] Batch 8, accuracy = 1
I1203 18:47:00.448269 4610 caffe.cpp:169] Batch 8, loss = 0.00680142
I1203 18:47:00.449801 4610 caffe.cpp:169] Batch 9, accuracy = 0.98
I1203 18:47:00.449821 4610 caffe.cpp:169] Batch 9, loss = 0.0288398
I1203 18:47:00.451352 4610 caffe.cpp:169] Batch 10, accuracy = 0.98
I1203 18:47:00.451372 4610 caffe.cpp:169] Batch 10, loss = 0.0603264
I1203 18:47:00.452883 4610 caffe.cpp:169] Batch 11, accuracy = 0.98
I1203 18:47:00.452903 4610 caffe.cpp:169] Batch 11, loss = 0.0524943
I1203 18:47:00.454407 4610 caffe.cpp:169] Batch 12, accuracy = 0.95
I1203 18:47:00.454427 4610 caffe.cpp:169] Batch 12, loss = 0.106648
I1203 18:47:00.455955 4610 caffe.cpp:169] Batch 13, accuracy = 0.98
I1203 18:47:00.455976 4610 caffe.cpp:169] Batch 13, loss = 0.0450225
I1203 18:47:00.457484 4610 caffe.cpp:169] Batch 14, accuracy = 1
I1203 18:47:00.457504 4610 caffe.cpp:169] Batch 14, loss = 0.00531614
I1203 18:47:00.459038 4610 caffe.cpp:169] Batch 15, accuracy = 0.98
I1203 18:47:00.459056 4610 caffe.cpp:169] Batch 15, loss = 0.065209
I1203 18:47:00.460577 4610 caffe.cpp:169] Batch 16, accuracy = 0.98
I1203 18:47:00.460597 4610 caffe.cpp:169] Batch 16, loss = 0.0520317
I1203 18:47:00.462123 4610 caffe.cpp:169] Batch 17, accuracy = 0.99
I1203 18:47:00.462143 4610 caffe.cpp:169] Batch 17, loss = 0.0328681
I1203 18:47:00.463656 4610 caffe.cpp:169] Batch 18, accuracy = 0.99
I1203 18:47:00.463676 4610 caffe.cpp:169] Batch 18, loss = 0.0175973
I1203 18:47:00.465188 4610 caffe.cpp:169] Batch 19, accuracy = 0.97
I1203 18:47:00.465208 4610 caffe.cpp:169] Batch 19, loss = 0.0576884
I1203 18:47:00.466749 4610 caffe.cpp:169] Batch 20, accuracy = 0.97
I1203 18:47:00.466769 4610 caffe.cpp:169] Batch 20, loss = 0.0850501
I1203 18:47:00.468278 4610 caffe.cpp:169] Batch 21, accuracy = 0.98
I1203 18:47:00.468298 4610 caffe.cpp:169] Batch 21, loss = 0.0676049
I1203 18:47:00.469805 4610 caffe.cpp:169] Batch 22, accuracy = 0.99
I1203 18:47:00.469825 4610 caffe.cpp:169] Batch 22, loss = 0.0448538
I1203 18:47:00.471328 4610 caffe.cpp:169] Batch 23, accuracy = 0.97
I1203 18:47:00.471349 4610 caffe.cpp:169] Batch 23, loss = 0.0333992
I1203 18:47:00.487124 4610 caffe.cpp:169] Batch 24, accuracy = 1
I1203 18:47:00.487180 4610 caffe.cpp:169] Batch 24, loss = 0.0281527
I1203 18:47:00.489002 4610 caffe.cpp:169] Batch 25, accuracy = 0.99
I1203 18:47:00.489048 4610 caffe.cpp:169] Batch 25, loss = 0.0545881
I1203 18:47:00.490890 4610 caffe.cpp:169] Batch 26, accuracy = 0.98
I1203 18:47:00.490932 4610 caffe.cpp:169] Batch 26, loss = 0.115576
I1203 18:47:00.492620 4610 caffe.cpp:169] Batch 27, accuracy = 1
I1203 18:47:00.492640 4610 caffe.cpp:169] Batch 27, loss = 0.0149555
I1203 18:47:00.494161 4610 caffe.cpp:169] Batch 28, accuracy = 0.98
I1203 18:47:00.494181 4610 caffe.cpp:169] Batch 28, loss = 0.0398991
I1203 18:47:00.495693 4610 caffe.cpp:169] Batch 29, accuracy = 0.96
I1203 18:47:00.495713 4610 caffe.cpp:169] Batch 29, loss = 0.115862
I1203 18:47:00.497226 4610 caffe.cpp:169] Batch 30, accuracy = 1
I1203 18:47:00.497246 4610 caffe.cpp:169] Batch 30, loss = 0.0116793
I1203 18:47:00.498785 4610 caffe.cpp:169] Batch 31, accuracy = 1
I1203 18:47:00.498817 4610 caffe.cpp:169] Batch 31, loss = 0.00451814
I1203 18:47:00.500329 4610 caffe.cpp:169] Batch 32, accuracy = 0.98
I1203 18:47:00.500349 4610 caffe.cpp:169] Batch 32, loss = 0.0244668
I1203 18:47:00.501878 4610 caffe.cpp:169] Batch 33, accuracy = 1
I1203 18:47:00.501899 4610 caffe.cpp:169] Batch 33, loss = 0.00285445
I1203 18:47:00.503411 4610 caffe.cpp:169] Batch 34, accuracy = 0.98
I1203 18:47:00.503429 4610 caffe.cpp:169] Batch 34, loss = 0.0566256
I1203 18:47:00.504940 4610 caffe.cpp:169] Batch 35, accuracy = 0.95
I1203 18:47:00.504961 4610 caffe.cpp:169] Batch 35, loss = 0.154924
I1203 18:47:00.506500 4610 caffe.cpp:169] Batch 36, accuracy = 1
I1203 18:47:00.506520 4610 caffe.cpp:169] Batch 36, loss = 0.00451233
I1203 18:47:00.508111 4610 caffe.cpp:169] Batch 37, accuracy = 0.97
I1203 18:47:00.508131 4610 caffe.cpp:169] Batch 37, loss = 0.0572309
I1203 18:47:00.509635 4610 caffe.cpp:169] Batch 38, accuracy = 0.99
I1203 18:47:00.509655 4610 caffe.cpp:169] Batch 38, loss = 0.0192229
I1203 18:47:00.511181 4610 caffe.cpp:169] Batch 39, accuracy = 0.99
I1203 18:47:00.511200 4610 caffe.cpp:169] Batch 39, loss = 0.029272
I1203 18:47:00.512725 4610 caffe.cpp:169] Batch 40, accuracy = 0.99
I1203 18:47:00.512745 4610 caffe.cpp:169] Batch 40, loss = 0.0258552
I1203 18:47:00.514317 4610 caffe.cpp:169] Batch 41, accuracy = 0.99
I1203 18:47:00.514338 4610 caffe.cpp:169] Batch 41, loss = 0.0752082
I1203 18:47:00.515854 4610 caffe.cpp:169] Batch 42, accuracy = 1
I1203 18:47:00.515873 4610 caffe.cpp:169] Batch 42, loss = 0.0283319
I1203 18:47:00.517379 4610 caffe.cpp:169] Batch 43, accuracy = 0.99
I1203 18:47:00.517398 4610 caffe.cpp:169] Batch 43, loss = 0.0112394
I1203 18:47:00.518925 4610 caffe.cpp:169] Batch 44, accuracy = 0.98
I1203 18:47:00.518946 4610 caffe.cpp:169] Batch 44, loss = 0.0413653
I1203 18:47:00.520457 4610 caffe.cpp:169] Batch 45, accuracy = 0.98
I1203 18:47:00.520478 4610 caffe.cpp:169] Batch 45, loss = 0.0501227
I1203 18:47:00.521989 4610 caffe.cpp:169] Batch 46, accuracy = 1
I1203 18:47:00.522009 4610 caffe.cpp:169] Batch 46, loss = 0.0114459
I1203 18:47:00.523540 4610 caffe.cpp:169] Batch 47, accuracy = 1
I1203 18:47:00.523561 4610 caffe.cpp:169] Batch 47, loss = 0.0163504
I1203 18:47:00.525075 4610 caffe.cpp:169] Batch 48, accuracy = 0.97
I1203 18:47:00.525095 4610 caffe.cpp:169] Batch 48, loss = 0.0450363
I1203 18:47:00.526633 4610 caffe.cpp:169] Batch 49, accuracy = 1
I1203 18:47:00.526651 4610 caffe.cpp:169] Batch 49, loss = 0.0046898
I1203 18:47:00.526662 4610 caffe.cpp:174] Loss: 0.041468
I1203 18:47:00.526674 4610 caffe.cpp:186] accuracy = 0.9856
I1203 18:47:00.526687 4610 caffe.cpp:186] loss = 0.041468 (* 1 = 0.041468 loss)
```
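The last three lines are averages of the per-batch lines. The tool ran 50 iterations (50 is the default of caffe.bin's -iterations flag), each with batch_size 100, so this run evaluated 5,000 of the 10,000 MNIST test images; passing -iterations=100 should cover the whole test set. The Python sketch below is a hypothetical log parser, not part of Caffe. It recomputes the summary by averaging each "Batch N, name = value" output over the number of batches, and applied to the 50 accuracy values above it gives the same 0.9856.

```python
import re
from collections import defaultdict

# Matches per-batch lines such as "... caffe.cpp:169] Batch 3, accuracy = 0.99".
BATCH_LINE = re.compile(r"Batch (\d+), (\w+) = ([-+0-9.eE]+)")


def summarize(log_text):
    """Average every per-batch output over the number of distinct batches seen."""
    totals = defaultdict(float)
    batches = set()
    for match in BATCH_LINE.finditer(log_text):
        batches.add(int(match.group(1)))
        totals[match.group(2)] += float(match.group(3))
    if not batches:
        return {}
    return {name: total / len(batches) for name, total in totals.items()}
```

Because every batch holds the same number of images (100), the mean of the per-batch accuracies equals the overall accuracy on the 5,000 images tested.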