From Sparse Representation to Low-Rank Representation (Part 1)

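Since settling on a research direction I have been cramming theory. I recently read a number of papers and had some ideas, so I also took the chance to summarize the "representation" line of work. I am new to this area, so there may be gaps; corrections are welcome.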

The "From Sparse Representation to Low-Rank Representation" series covers the following topics:

I. Sparse representation

II. NCSR (Nonlocally Centralized Sparse Representation)

III. GHP (Gradient Histogram Preservation)

IV. Group sparsity

V. Rank decomposition

I. Sparse representation

1 sparsity

The problem a linear representation solves is shown in the figure below:

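However, once the amount of data grows, solving for the basis of this linear representation becomes very complicated, and many of the basis vectors are redundant. Sparse representation addresses this: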

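Intuitively, sparsity means keeping the reconstruction error small while using as few nonzero coefficients as possible. Counting the nonzero coefficients is the l0-norm problem, but this constraint is hard to work with: minimizing the l0-norm is non-convex and combinatorial (NP-hard in general). Relaxing it to the l1-norm gives a convex optimization problem, and more generally this leads to the family of lp-norm formulations.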
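The figure with the formulas is not available here, so the following is a standard way to write these problems. It assumes a signal $y\in\mathbb{R}^n$, a dictionary $D\in\mathbb{R}^{n\times K}$ whose columns are the atoms, a coefficient vector $\alpha$, an error tolerance $\epsilon$, and a trade-off weight $\lambda$. These symbol names are my own choice and are not taken from the original figures.

```latex
\begin{aligned}
&\text{linear representation:} && y = D\alpha \\
&\ell_0 \text{ (sparsest solution):} && \min_{\alpha}\ \|\alpha\|_0 \quad \text{s.t.} \quad \|y - D\alpha\|_2 \le \epsilon \\
&\ell_1 \text{ (convex relaxation):} && \min_{\alpha}\ \tfrac{1}{2}\|y - D\alpha\|_2^2 + \lambda\|\alpha\|_1 \\
&\ell_p,\ 0<p<1 \text{ (non-convex):} && \min_{\alpha}\ \tfrac{1}{2}\|y - D\alpha\|_2^2 + \lambda\|\alpha\|_p^p,
\qquad \|\alpha\|_p^p = \textstyle\sum_i |\alpha_i|^p
\end{aligned}
```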

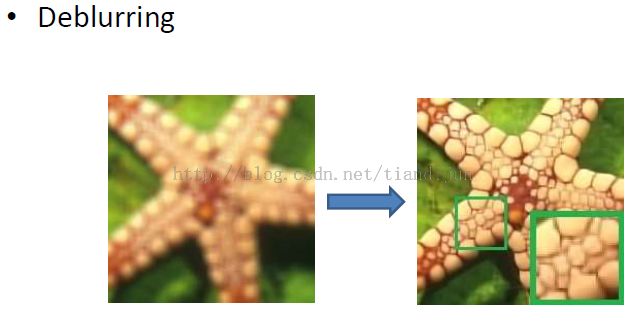

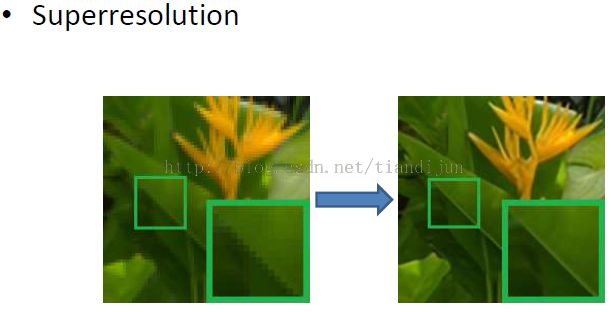

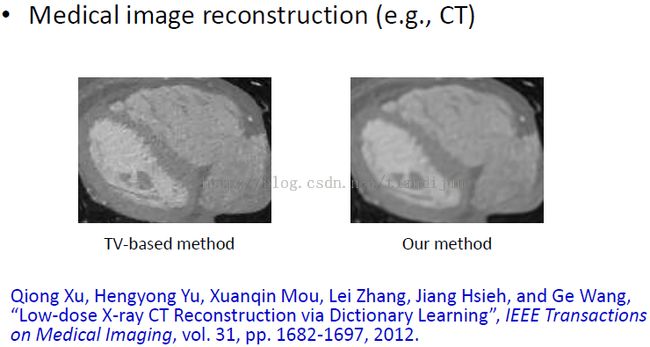

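The solution methods for the lp-norm problems are summarized in the figures above, and the methods used in the papers covered in this series all fall into these categories. Sparse representation has a very wide range of applications, including denoising, dehazing, segmentation, classification, and face recognition.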
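One concrete solver for the convex l1 problem is ISTA (iterative shrinkage-thresholding). Each iteration takes a gradient step on the quadratic term and then applies soft thresholding. The sketch below is a minimal pure-Python version. I am not claiming it is one of the specific methods in the figures above. The function names, and the convention of storing matrices as lists of rows, are my own hypothetical choices.

```python
def soft_threshold(x, t):
    """Proximal operator of t*|x|: shrink x toward zero by t."""
    if x > t:
        return x - t
    if x < -t:
        return x + t
    return 0.0


def ista(D, y, lam, step, n_iter=500):
    """Minimize 0.5*||y - D a||_2^2 + lam*||a||_1 with ISTA.

    D is a list of rows (n x K) and y is a list of length n.
    For convergence, step should satisfy step <= 1/L, where L is the
    largest eigenvalue of D^T D.
    """
    n, K = len(D), len(D[0])
    a = [0.0] * K
    for _ in range(n_iter):
        # residual r = D a - y
        r = [sum(D[i][j] * a[j] for j in range(K)) - y[i] for i in range(n)]
        # gradient of the quadratic term: g = D^T r
        g = [sum(D[i][j] * r[i] for i in range(n)) for j in range(K)]
        # gradient step followed by soft thresholding (the l1 proximal step)
        a = [soft_threshold(a[j] - step * g[j], step * lam) for j in range(K)]
    return a


# Over-complete toy dictionary: the third atom (1, 1) equals the sum of the first two.
D = [[1.0, 0.0, 1.0],
     [0.0, 1.0, 1.0]]
print(ista(D, [1.0, 1.0], lam=0.1, step=1.0 / 3.0))  # largest eigenvalue of D^T D is 3
```

In the usage example the target (1, 1) can be built from the first two atoms together or from the third atom alone. The l1 penalty drives the first two coefficients to exactly zero and keeps a single nonzero coefficient (about 0.95) on the third atom. This is the "sparse solution from a redundant dictionary" behavior described in the text.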

2 why sparse?

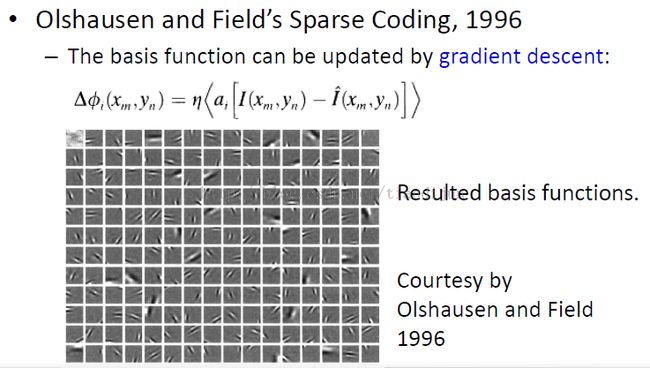

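The rationale for sparsity goes back to a breakthrough in neuroscience: "Emergence of simple-cell receptive field properties by learning a sparse code for natural images", published in Nature in 1996 by Bruno Olshausen (with David Field) of the Department of Psychology at Cornell University.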

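Conclusion: the receptive fields of simple cells in the mammalian primary visual cortex are spatially localized, oriented, and bandpass (selective to structures at different scales). In this respect they resemble the basis functions of the wavelet transform.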

Later, the probabilistic Bayesian perspective arrived at a similar objective function:
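The original formula is missing. The derivation below shows how the Bayesian (MAP) view leads to the l1 objective. It assumes Gaussian noise with variance $\sigma^2$ and an i.i.d. Laplacian prior on the coefficients with scale $b$. These modeling choices are mine and were not recovered from the source.

```latex
\begin{aligned}
y &= D\alpha + v,\qquad v \sim \mathcal{N}(0, \sigma^2 I),\qquad
p(\alpha) \propto \prod_i \exp\!\left(-\frac{|\alpha_i|}{b}\right) \\
\hat{\alpha}_{\mathrm{MAP}} &= \arg\max_{\alpha}\ \log p(y \mid \alpha) + \log p(\alpha) \\
&= \arg\min_{\alpha}\ \frac{1}{2\sigma^2}\|y - D\alpha\|_2^2 + \frac{1}{b}\|\alpha\|_1 \\
&= \arg\min_{\alpha}\ \frac{1}{2}\|y - D\alpha\|_2^2 + \lambda\|\alpha\|_1,\qquad \lambda = \frac{\sigma^2}{b}
\end{aligned}
```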

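Then, in the mid-2000s, compressive sensing addressed the recovery of sparse signals. Sparsity is now used extensively in computer vision for image understanding and classification.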

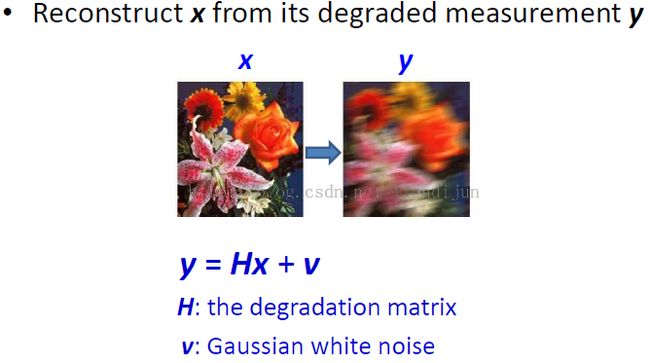

Both denoising and image restoration come down to solving the following problem:

Image reconstruction: the problem:
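The formula that belongs here is missing. What follows is my reconstruction, on the assumption that it is the standard patch-based formulation, so it may not match the original slide exactly. $H$ is the degradation operator, $R_i$ extracts the $i$-th patch, each patch is coded as $D\alpha_i$ over a shared dictionary $D$, and $\alpha$ stacks all the $\alpha_i$.

```latex
\begin{aligned}
y &= Hx + v \qquad (H = I \text{ for denoising; a blur or downsampling operator for restoration}) \\
\hat{\alpha} &= \arg\min_{\alpha}\ \|y - H\, D\circ\alpha\|_2^2 + \lambda\|\alpha\|_1,
\qquad \hat{x} = D\circ\hat{\alpha} \\
D\circ\alpha &= \Big(\sum_i R_i^{\top} R_i\Big)^{-1} \sum_i R_i^{\top} D\,\alpha_i
\end{aligned}
```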

The detailed optimization procedure is as follows:

Image reconstruction by sparse coding---the basic procedures
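The basic procedure is:

1. Split the signal into overlapping patches.
2. Sparse-code each patch over a dictionary.
3. Reconstruct each patch from its sparse code.
4. Average the overlapping reconstructions.

The minimal sketch below illustrates these steps under simplifying assumptions of my own:

- It works on a 1-D signal instead of a 2-D image.
- It uses a fixed orthonormal DCT dictionary instead of a learned one.
- Sparse coding is hard thresholding of the DCT coefficients. For an orthonormal dictionary, hard thresholding is the exact solution of the l0-penalized coding problem.

The names `dct_basis`, `denoise_1d`, `patch_size`, and `threshold` are hypothetical.

```python
import math


def dct_basis(n):
    """Orthonormal DCT-II basis: rows are atoms, so coefficients c = B x and x = B^T c."""
    basis = []
    for k in range(n):
        scale = math.sqrt(1.0 / n) if k == 0 else math.sqrt(2.0 / n)
        basis.append([scale * math.cos(math.pi * (2 * i + 1) * k / (2 * n)) for i in range(n)])
    return basis


def denoise_1d(y, patch_size=4, threshold=0.3):
    """Patch-based sparse-coding reconstruction of a 1-D signal (requires len(y) >= patch_size)."""
    B = dct_basis(patch_size)
    acc = [0.0] * len(y)  # sum of reconstructed patch values at each position
    cnt = [0] * len(y)    # number of patches covering each position
    for start in range(len(y) - patch_size + 1):
        # 1) extract an overlapping patch
        patch = y[start:start + patch_size]
        # 2) code it over the dictionary
        coef = [sum(b[i] * patch[i] for i in range(patch_size)) for b in B]
        # 3) keep only the large coefficients (hard thresholding = sparse code)
        coef = [c if abs(c) > threshold else 0.0 for c in coef]
        # 4) reconstruct the patch as B^T c and accumulate it
        for i in range(patch_size):
            acc[start + i] += sum(coef[k] * B[k][i] for k in range(patch_size))
            cnt[start + i] += 1
    # 5) average the overlapping estimates
    return [a / c for a, c in zip(acc, cnt)]


# A constant signal corrupted by alternating +/-0.1 "noise".
noisy = [1.0 + (0.1 if i % 2 == 0 else -0.1) for i in range(12)]
print(denoise_1d(noisy))
```

In this toy input every patch is the constant 1 plus a zero-mean alternating pattern. The constant part lives entirely in the DC atom. The noise coefficients all have magnitude at most 0.2, which is below the threshold, so the reconstruction recovers the constant signal.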

3 How does sparsity help?

An interesting example:

Suppose you are looking for a girlfriend/boyfriend.

– i.e., you are “reconstructing” the desired signal.

• Your objective is that she/he is “fair-rich-beautiful” (白-富-美) / “tall-rich-handsome” (高-富-帅).

– i.e., you want a “clean” and “perfect” reconstruction.

• However, the candidates are limited.

– i.e., the dictionary is small.

• Can you find your ideal girlfriend/boyfriend?

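Suppose you set a few individual criteria, such as handsome, rich, and tall; these play the role of the basis. For each concrete candidate, you map their characteristics onto these basis elements, weigh them, and choose the best candidate.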

• Candidate A is tall; however, he is not handsome.

• Candidate B is rich; however, he is too fat.

• Candidate C is handsome; however, he is poor.

• If you sparsely select one of them, none is ideal for you

– i.e., a sparse representation vector such as [0, 1, 0].

• How about a dense solution: (A+B+C)/3?

– i.e., a dense representation vector [1, 1, 1]/3

– The “reconstructed” boyfriend is a compromise of “tall-rich-handsome”, and he is also fat (i.e., has some noise).

So what’s wrong?

– This is because the dictionary is too small!

• If you can select your boyfriend/girlfriend from boys/girls all over the world (i.e., a large enough dictionary), there is a very high probability (nearly 1) that you will find him/her!

– i.e., a very sparse solution such as [0, …, 1, …, 0]

• In summary, a sparse solution with an over-complete dictionary often works!

• Sparsity and redundancy are the two sides of the same coin.
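The analogy above can be made concrete with a toy computation. The encoding is entirely my own assumption. Each person is a binary vector over the hypothetical attributes (tall, rich, handsome, fat). The ideal partner is (1, 1, 1, 0), and "fat" plays the role of noise. The script compares three options:

- a 1-sparse pick from the small dictionary {A, B, C};
- the dense average (A + B + C)/3;
- a 1-sparse pick from a "whole world" dictionary that contains every attribute combination.

```python
def sq_dist(u, v):
    """Squared Euclidean reconstruction error."""
    return sum((a - b) ** 2 for a, b in zip(u, v))


# Hypothetical attributes: (tall, rich, handsome, fat); "fat" acts as noise.
IDEAL = (1, 1, 1, 0)

# The small dictionary from the story.
SMALL = {
    "A": (1, 0, 0, 0),  # tall, but not handsome
    "B": (0, 1, 0, 1),  # rich, but fat
    "C": (0, 0, 1, 0),  # handsome, but poor
}

# An over-complete "whole world" dictionary: every combination of the 4 attributes.
WORLD = {f"P{i}": tuple((i >> b) & 1 for b in range(4)) for i in range(16)}


def best_single(dictionary, target):
    """1-sparse choice: the single atom closest to the target."""
    name = min(dictionary, key=lambda k: sq_dist(dictionary[k], target))
    return name, sq_dist(dictionary[name], target)


def dense_average(dictionary, target):
    """Dense choice: the equal-weight average of every atom, e.g. (A + B + C) / 3."""
    atoms = list(dictionary.values())
    avg = [sum(col) / len(atoms) for col in zip(*atoms)]
    return sq_dist(avg, target)


print(best_single(SMALL, IDEAL))    # best single pick from {A, B, C}: error 2
print(dense_average(SMALL, IDEAL))  # dense compromise: error 13/9, including 1/3 "fat"
print(best_single(WORLD, IDEAL))    # large dictionary: exact match, error 0
```

With only three candidates, no single pick reaches zero error. The [0, 1, 0] in the text is one such pick. The dense average lowers the error but carries a share of the "fat" noise. Once the dictionary is large enough to contain the ideal atom, a 1-sparse code reconstructs the target exactly.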

4 what is the dictionary?

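Across all of these reconstruction problems, dictionary learning is involved to a greater or lesser degree. For a summary of specific methods and their applications, see the article 图像分类的字典学习方法概述 (An Overview of Dictionary Learning Methods for Image Classification). It gives a comprehensive introduction to the various dictionary learning methods, which look different on the surface but are alike in essence.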
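To illustrate the alternating structure shared by most dictionary learning methods (sparse coding, then a dictionary update), here is a compact sketch of K-SVD restricted to sparsity level 1, i.e., each signal uses exactly one atom. In that special case the K-SVD atom update reduces to taking the leading singular vector of the signals assigned to the atom, computed here by power iteration. This is a simplification of my own. Full K-SVD allows several atoms per signal and typically uses OMP for the coding stage. Initializing the atoms from the first training signals is just one simple choice, and all function names are hypothetical.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def normalize(v):
    n = math.sqrt(dot(v, v))
    return [x / n for x in v]


def code_one_atom(D, y):
    """Sparse coding with sparsity 1: index of the unit-norm atom with the largest |<d, y>|."""
    return max(range(len(D)), key=lambda k: abs(dot(D[k], y)))


def leading_direction(signals, iters=100):
    """Top left singular vector of [s_1 ... s_m], via power iteration on sum_i s_i s_i^T."""
    v = normalize(signals[0])
    for _ in range(iters):
        w = [0.0] * len(v)
        for s in signals:
            c = dot(s, v)
            for t in range(len(w)):
                w[t] += c * s[t]
        v = normalize(w)
    return v


def ksvd_sparsity1(Y, n_atoms, n_iter=10):
    """Alternate sparse coding and atom updates (K-SVD with one atom per signal)."""
    D = [normalize(y) for y in Y[:n_atoms]]  # initialize atoms from training signals
    for _ in range(n_iter):
        groups = [[] for _ in range(n_atoms)]
        for y in Y:                          # sparse coding stage
            groups[code_one_atom(D, y)].append(y)
        for k, g in enumerate(groups):       # dictionary update stage
            if g:
                D[k] = leading_direction(g)
    return D


# Noisy toy signals drawn along two directions: (1, 0, 0) and (0, 1, 1)/sqrt(2).
Y = [[3.0, 0.1, 0.0], [0.0, 2.0, 2.2], [-2.0, 0.0, 0.1],
     [0.0, -1.0, -0.9], [1.5, -0.1, 0.0], [0.0, 1.1, 1.0]]
print(ksvd_sparsity1(Y, n_atoms=2))
```

The learned atoms line up (up to sign) with the two generating directions. The signals themselves never lie exactly on those directions.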

5 Is sparsity enough?

Sparsity only makes the representation sparse and reduces redundancy. What about the similarity and structure among the atoms? And when the data are corrupted, e.g., by lighting changes or other noise, a plain sparse representation is not robust to these various kinds of noise.

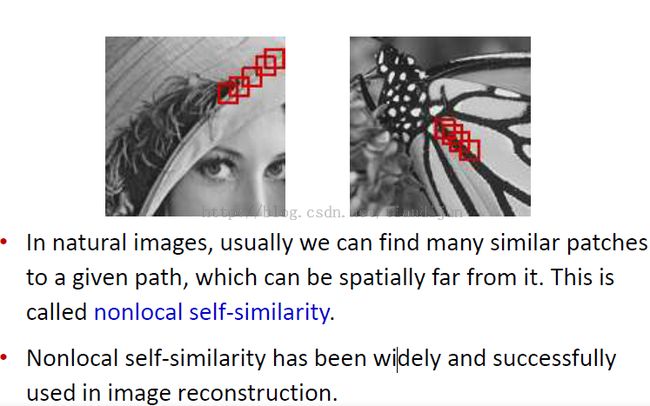

For example, nonlocal self-similarity.

6 Beyond sparse

Limitations of sparse representation

1) Sparse representation usually learns a dictionary on the basis of a well-constructed, compact dictionary; once the input data change, extra time is needed to construct a new dictionary;

2) If the training data are contaminated (e.g., occlusion, disguise, lighting variations, pixel corruption), sparse representation is not robust and its performance deteriorates;

3) When the data to be analyzed come from the same class and share common (correlated) features (e.g., texture), sparse coding is still performed for each input signal independently, so it ignores the structure and correlations within and between classes.

So how can we find an efficient representation?

1) Structure:

Enough data: relations within a class, regularized nearest space

Little data: representations across classes, collaborative representation

2) Robust: low-rank decomposition & sparse noise
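For reference, here are common textbook forms of two of the ideas named above. The notation is my own:

- $X = [X_1, \dots, X_C]$ is the training dictionary stacked by class.
- $\hat{\rho}_c$ is the part of the coefficient vector belonging to class $c$.
- $M$ is an observed data matrix.

These are the usual formulations from the literature. They are not necessarily the exact models used in the later parts of this series.

```latex
\begin{aligned}
&\text{Collaborative representation (CRC):} \\
&\quad \hat{\rho} = \arg\min_{\rho}\ \|y - X\rho\|_2^2 + \lambda\|\rho\|_2^2 = (X^{\top}X + \lambda I)^{-1}X^{\top}y, \\
&\quad \operatorname{identity}(y) = \arg\min_{c}\ \frac{\|y - X_c\hat{\rho}_c\|_2}{\|\hat{\rho}_c\|_2} \\[4pt]
&\text{Low-rank plus sparse decomposition (robust PCA):} \\
&\quad \min_{L,\,S}\ \|L\|_* + \lambda\|S\|_1 \quad \text{s.t.} \quad M = L + S
\end{aligned}
```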

Finally, I recommend a survey on the principles and applications of low-rank methods: http://media.au.tsinghua.edu.cn/2013_ATCA_Review.pdf