Analysis of Looper, Handler, HandlerThread, Message, and MessageQueue

First, as before, we start from SystemServer.

(frameworks/base/services/java/com/android/server/SystemServer.java)

public void run() {

....

Looper.prepareMainLooper();

...

HandlerThread uiHandlerThread = new HandlerThread("UI");

uiHandlerThread.start();

Handler uiHandler = new Handler(uiHandlerThread.getLooper());

uiHandler.post(new Runnable() {

@Override

public void run() {

//Looper.myLooper().setMessageLogging(new LogPrinter(

// Log.VERBOSE, "WindowManagerPolicy", Log.LOG_ID_SYSTEM));

android.os.Process.setThreadPriority(

android.os.Process.THREAD_PRIORITY_FOREGROUND);

android.os.Process.setCanSelfBackground(false);

// For debug builds, log event loop stalls to dropbox for analysis.

if (StrictMode.conditionallyEnableDebugLogging()) {

Slog.i(TAG, "Enabled StrictMode logging for UI Looper");

}

}

});

// Create a handler thread just for the window manager to enjoy.

HandlerThread wmHandlerThread = new HandlerThread("WindowManager");

wmHandlerThread.start();

Handler wmHandler = new Handler(wmHandlerThread.getLooper());

wmHandler.post(new Runnable() {

@Override

public void run() {

//Looper.myLooper().setMessageLogging(new LogPrinter(

// android.util.Log.DEBUG, TAG, android.util.Log.LOG_ID_SYSTEM));

android.os.Process.setThreadPriority(

android.os.Process.THREAD_PRIORITY_DISPLAY);

android.os.Process.setCanSelfBackground(false);

// For debug builds, log event loop stalls to dropbox for analysis.

if (StrictMode.conditionallyEnableDebugLogging()) {

Slog.i(TAG, "Enabled StrictMode logging for WM Looper");

}

}

});

...

}

Let's start with Looper.prepareMainLooper():

public static void prepareMainLooper() {

prepare(false);

synchronized (Looper.class) {

if (sMainLooper != null) {

throw new IllegalStateException("The main Looper has already been prepared.");

}

sMainLooper = myLooper();

}

}

private static void prepare(boolean quitAllowed) {

if (sThreadLocal.get() != null) {

throw new RuntimeException("Only one Looper may be created per thread");

}

sThreadLocal.set(new Looper(quitAllowed));

}

private Looper(boolean quitAllowed) {

mQueue = new MessageQueue(quitAllowed);

mRun = true;

mThread = Thread.currentThread();

}

MessageQueue(boolean quitAllowed) {

mQuitAllowed = quitAllowed;

mPtr = nativeInit();

}
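The one-Looper-per-thread rule enforced by prepare() above can be tried out in plain Java. This is only a minimal sketch: MiniLooper is a hypothetical stand-in for android.os.Looper, and it models nothing but the ThreadLocal bookkeeping (no MessageQueue, no native code).

```java
// Simplified model of the per-thread bookkeeping in prepare()/myLooper().
// MiniLooper is a hypothetical class, not the real android.os.Looper.
final class MiniLooper {
    private static final ThreadLocal<MiniLooper> sThreadLocal = new ThreadLocal<>();

    final Thread thread = Thread.currentThread();

    private MiniLooper() {}

    // Mirrors prepare(): at most one instance per calling thread.
    static void prepare() {
        if (sThreadLocal.get() != null) {
            throw new RuntimeException("Only one Looper may be created per thread");
        }
        sThreadLocal.set(new MiniLooper());
    }

    // Mirrors myLooper(): the calling thread's instance, or null if none.
    static MiniLooper myLooper() {
        return sThreadLocal.get();
    }

    public static void main(String[] args) throws InterruptedException {
        prepare();
        MiniLooper mainLooper = myLooper();

        final MiniLooper[] other = new MiniLooper[1];
        Thread t = new Thread(() -> {
            prepare();              // allowed: this thread has no looper yet
            other[0] = myLooper();
        });
        t.start();
        t.join();

        System.out.println("distinct per thread: " + (mainLooper != other[0]));
        try {
            prepare();              // second call on the same thread is rejected
        } catch (RuntimeException e) {
            System.out.println("rejected: " + e.getMessage());
        }
    }
}
```

Because the instance lives in a ThreadLocal, myLooper() needs no locking: each thread only ever sees its own slot.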

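prepareMainLooper mainly calls prepare, which creates a Looper whose MessageQueue has quitAllowed set to false (in other words, every Looper owns one MessageQueue). The MessageQueue constructor in turn calls the JNI function nativeInit(), so let's step into it and see what it does.

(frameworks/base/core/jni/android_os_MessageQueue.cpp)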

static jint android_os_MessageQueue_nativeInit(JNIEnv* env, jclass clazz) {

NativeMessageQueue* nativeMessageQueue = new NativeMessageQueue();

if (!nativeMessageQueue) {

jniThrowRuntimeException(env, "Unable to allocate native queue");

return 0;

}

nativeMessageQueue->incStrong(env);

return reinterpret_cast<jint>(nativeMessageQueue);

}

Here a NativeMessageQueue is created, and its pointer is cast to an int and returned to the Java layer.

NativeMessageQueue::NativeMessageQueue() : mInCallback(false), mExceptionObj(NULL) {

mLooper = Looper::getForThread();

if (mLooper == NULL) {

mLooper = new Looper(false);

Looper::setForThread(mLooper);

}

}

As we can see, in the native layer the message queue creates its own Looper and, through pthread thread-specific data, binds that Looper to the current thread.

Looper::Looper(bool allowNonCallbacks) :

mAllowNonCallbacks(allowNonCallbacks), mSendingMessage(false),

mResponseIndex(0), mNextMessageUptime(LLONG_MAX) {

int wakeFds[2];

int result = pipe(wakeFds); // create the pipe (read end and write end)

LOG_ALWAYS_FATAL_IF(result != 0, "Could not create wake pipe. errno=%d", errno);

mWakeReadPipeFd = wakeFds[0];

mWakeWritePipeFd = wakeFds[1];

// set both file descriptors to non-blocking mode

result = fcntl(mWakeReadPipeFd, F_SETFL, O_NONBLOCK);

LOG_ALWAYS_FATAL_IF(result != 0, "Could not make wake read pipe non-blocking. errno=%d",

errno);

result = fcntl(mWakeWritePipeFd, F_SETFL, O_NONBLOCK);

LOG_ALWAYS_FATAL_IF(result != 0, "Could not make wake write pipe non-blocking. errno=%d",

errno);

// Allocate the epoll instance and register the wake pipe.

// Create an epoll handle; the size argument tells the kernel roughly how many fds will be monitored

mEpollFd = epoll_create(EPOLL_SIZE_HINT);

LOG_ALWAYS_FATAL_IF(mEpollFd < 0, "Could not create epoll instance. errno=%d", errno);

struct epoll_event eventItem;

memset(& eventItem, 0, sizeof(epoll_event)); // zero out unused members of data field union

eventItem.events = EPOLLIN;

eventItem.data.fd = mWakeReadPipeFd;

// register the event with epoll

result = epoll_ctl(mEpollFd, EPOLL_CTL_ADD, mWakeReadPipeFd, & eventItem);

LOG_ALWAYS_FATAL_IF(result != 0, "Could not add wake read pipe to epoll instance. errno=%d",

errno);

}

The native Looper mainly creates a pipe and registers an event on it with epoll.

prepareMainLooper is simple. Next, let's look at HandlerThread:

HandlerThread uiHandlerThread = new HandlerThread("UI");

uiHandlerThread.start();

HandlerThread extends Thread and mainly overrides the run function:

@Override

public void run() {

mTid = Process.myTid();

Looper.prepare();

synchronized (this) {

mLooper = Looper.myLooper();

notifyAll();

}

Process.setThreadPriority(mPriority);

onLooperPrepared();

Looper.loop();

mTid = -1;

}
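The synchronized block with notifyAll() in run() above is what lets another thread call getLooper() right after start() and block until the Looper exists. The hand-off can be modeled in plain Java. This is a sketch under stated assumptions: MiniHandlerThread is a hypothetical class, a plain Object stands in for the Looper, and a CountDownLatch stands in for the blocking Looper.loop() call.

```java
import java.util.concurrent.CountDownLatch;

// Hypothetical plain-Java model of the run()/getLooper() hand-off.
class MiniHandlerThread extends Thread {
    private Object mLooper;                                     // guarded by this
    private final CountDownLatch mQuit = new CountDownLatch(1); // stands in for loop()

    @Override
    public void run() {
        Object looper = new Object();   // stands in for Looper.prepare()
        synchronized (this) {
            mLooper = looper;
            notifyAll();                // wake any thread blocked in getLooper()
        }
        try {
            mQuit.await();              // the real thread would sit in Looper.loop() here
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    // Blocks until run() has published the looper; returns null if the thread is not alive.
    public Object getLooper() {
        if (!isAlive()) {
            return null;
        }
        synchronized (this) {
            while (isAlive() && mLooper == null) {
                try {
                    wait();
                } catch (InterruptedException e) {
                    // Simplification: keep waiting.
                }
            }
            return mLooper;
        }
    }

    public void quit() {
        mQuit.countDown();
    }

    public static void main(String[] args) throws InterruptedException {
        MiniHandlerThread t = new MiniHandlerThread();
        t.start();
        Object looper = t.getLooper();  // safe even if run() has not executed yet
        System.out.println("got looper: " + (looper != null));
        t.quit();
        t.join();
    }
}
```

This is why SystemServer can write new Handler(uiHandlerThread.getLooper()) immediately after uiHandlerThread.start() without racing against the new thread.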

As we can see, it calls Looper's prepare, myLooper, and loop. We saw prepare earlier: it creates the Looper. myLooper returns the current thread's Looper. As for loop:

public static void loop() {

final Looper me = myLooper(); // get the looper

if (me == null) {

throw new RuntimeException("No Looper; Looper.prepare() wasn't called on this thread.");

}

final MessageQueue queue = me.mQueue; // take the MessageQueue out of the looper

// Make sure the identity of this thread is that of the local process,

// and keep track of what that identity token actually is.

Binder.clearCallingIdentity();

final long ident = Binder.clearCallingIdentity();

for (;;) { // loop forever

// take the next message from the queue

Message msg = queue.next(); // might block

if (msg == null) {

// No message indicates that the message queue is quitting.

return;

}

// This must be in a local variable, in case a UI event sets the logger

Printer logging = me.mLogging;

if (logging != null) {

logging.println(">>>>> Dispatching to " + msg.target + " " +

msg.callback + ": " + msg.what);

}

// dispatch the message to the target stored in the Message

msg.target.dispatchMessage(msg);

if (logging != null) {

logging.println("<<<<< Finished to " + msg.target + " " + msg.callback);

}

// Make sure that during the course of dispatching the

// identity of the thread wasn't corrupted.

final long newIdent = Binder.clearCallingIdentity();

if (ident != newIdent) {

Log.wtf(TAG, "Thread identity changed from 0x"

+ Long.toHexString(ident) + " to 0x"

+ Long.toHexString(newIdent) + " while dispatching to "

+ msg.target.getClass().getName() + " "

+ msg.callback + " what=" + msg.what);

}

// recycle the msg's resources

msg.recycle();

}

}

As we can see, loop runs a loop, and each iteration first fetches a msg from the MessageQueue.

Let's look at next first:

Message next() {

int pendingIdleHandlerCount = -1; // -1 only during first iteration

int nextPollTimeoutMillis = 0;

for (;;) { // loop

if (nextPollTimeoutMillis != 0) {

Binder.flushPendingCommands();

}

nativePollOnce(mPtr, nextPollTimeoutMillis); // calls the native Looper's pollOnce, which waits in epoll_wait

synchronized (this) {

// Try to retrieve the next message. Return if found.

final long now = SystemClock.uptimeMillis();

Message prevMsg = null;

Message msg = mMessages;

if (msg != null && msg.target == null) {

// Stalled by a barrier. Find the next asynchronous message in the queue.

do {

prevMsg = msg;

msg = msg.next;

} while (msg != null && !msg.isAsynchronous());

}

if (msg != null) {

if (now < msg.when) {

// Next message is not ready. Set a timeout to wake up when it is ready.

nextPollTimeoutMillis = (int) Math.min(msg.when - now, Integer.MAX_VALUE);

} else {

// Got a message.

mBlocked = false;

if (prevMsg != null) {

prevMsg.next = msg.next;

} else {

mMessages = msg.next;

}

msg.next = null;

if (false) Log.v("MessageQueue", "Returning message: " + msg);

msg.markInUse();

return msg;

}

} else {

// No more messages.

nextPollTimeoutMillis = -1;

}

// Process the quit message now that all pending messages have been handled.

if (mQuiting) {

dispose();

return null;

}

// If first time idle, then get the number of idlers to run.

// Idle handles only run if the queue is empty or if the first message

// in the queue (possibly a barrier) is due to be handled in the future.

if (pendingIdleHandlerCount < 0

&& (mMessages == null || now < mMessages.when)) {

pendingIdleHandlerCount = mIdleHandlers.size();

}

if (pendingIdleHandlerCount <= 0) {

// No idle handlers to run. Loop and wait some more.

mBlocked = true;

continue;

}

if (mPendingIdleHandlers == null) {

mPendingIdleHandlers = new IdleHandler[Math.max(pendingIdleHandlerCount, 4)];

}

mPendingIdleHandlers = mIdleHandlers.toArray(mPendingIdleHandlers);

}

// Run the idle handlers.

// We only ever reach this code block during the first iteration.

for (int i = 0; i < pendingIdleHandlerCount; i++) {

final IdleHandler idler = mPendingIdleHandlers[i];

mPendingIdleHandlers[i] = null; // release the reference to the handler

boolean keep = false;

try {

keep = idler.queueIdle();

} catch (Throwable t) {

Log.wtf("MessageQueue", "IdleHandler threw exception", t);

}

if (!keep) {

synchronized (this) {

mIdleHandlers.remove(idler);

}

}

}

// Reset the idle handler count to 0 so we do not run them again.

pendingIdleHandlerCount = 0;

// While calling an idle handler, a new message could have been delivered

// so go back and look again for a pending message without waiting.

nextPollTimeoutMillis = 0;

}

}
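The value that next() above hands to nativePollOnce takes three forms, and it may help to see them side by side. The following is a small sketch, not code from the framework: PollTimeoutSketch and its method are hypothetical names, and a null headWhen is my way of modeling an empty queue. The 0 case models the state in which next() would return the message instead of sleeping.

```java
// Hypothetical helper that mirrors how next() chooses nextPollTimeoutMillis.
final class PollTimeoutSketch {
    // now: current uptime in ms; headWhen: due time of the head message, or null if the queue is empty.
    static int nextPollTimeoutMillis(long now, Long headWhen) {
        if (headWhen == null) {
            return -1;  // no messages: block until another thread wakes the queue
        }
        if (now < headWhen) {
            // Head message is not due yet: sleep at most until it is.
            return (int) Math.min(headWhen - now, Integer.MAX_VALUE);
        }
        return 0;       // head message is due: do not block
    }

    public static void main(String[] args) {
        System.out.println(nextPollTimeoutMillis(100L, null));
        System.out.println(nextPollTimeoutMillis(100L, 250L));
        System.out.println(nextPollTimeoutMillis(100L, 100L));
    }
}
```

The same three conventions (negative, positive, zero) are what epoll_wait expects for its timeout argument, which is why the value can be passed down to the native Looper unchanged.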

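Once next() returns a msg, loop() dispatches it through the Message's target, which is a Handler. If the Message carries a Runnable (msg.callback), that Runnable is run; if there is no Runnable, the Handler's Callback object is called: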

public interface Callback {

public boolean handleMessage(Message msg);

}

The message is either dispatched this way, or handed directly to the Handler's own handleMessage(Message msg).
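The dispatch precedence can be sketched in plain Java as follows. Msg, MiniHandler, and DispatchSketch are hypothetical stand-ins for Message, Handler, and a test harness; only the order of the three checks is meant to reflect Handler.dispatchMessage, and the log list exists purely so the outcome can be observed.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical model of the order in which a Handler tries its three dispatch paths.
final class DispatchSketch {
    static final class Msg {
        int what;
        Runnable callback;      // set when the message was created by post(Runnable)
    }

    interface Callback {
        boolean handleMessage(Msg msg);   // return true to stop further handling
    }

    static class MiniHandler {
        private final Callback mCallback;
        final List<String> log = new ArrayList<>();

        MiniHandler(Callback callback) {
            mCallback = callback;
        }

        // Subclasses would override this, as with Handler.handleMessage.
        void handleMessage(Msg msg) {
            log.add("handleMessage:" + msg.what);
        }

        void dispatchMessage(Msg msg) {
            if (msg.callback != null) {           // 1. Runnable attached to the message
                msg.callback.run();
                return;
            }
            if (mCallback != null && mCallback.handleMessage(msg)) {
                return;                           // 2. Handler-level Callback consumed it
            }
            handleMessage(msg);                   // 3. fall back to handleMessage
        }
    }

    public static void main(String[] args) {
        MiniHandler h = new MiniHandler(null);

        Msg posted = new Msg();
        posted.callback = () -> h.log.add("runnable");
        h.dispatchMessage(posted);

        Msg plain = new Msg();
        plain.what = 7;
        h.dispatchMessage(plain);

        System.out.println(h.log);
    }
}
```

For the uiHandler.post(...) call in SystemServer, only the first branch matters: the posted Runnable rides inside the Message and is run on the HandlerThread.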

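This is what HandlerThread provides: it creates a MessageQueue and then runs the main loop, taking each Message out of the MessageQueue and calling back, through the Message's target (a Handler), into either the Message's Runnable or the Handler's handleMessage.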

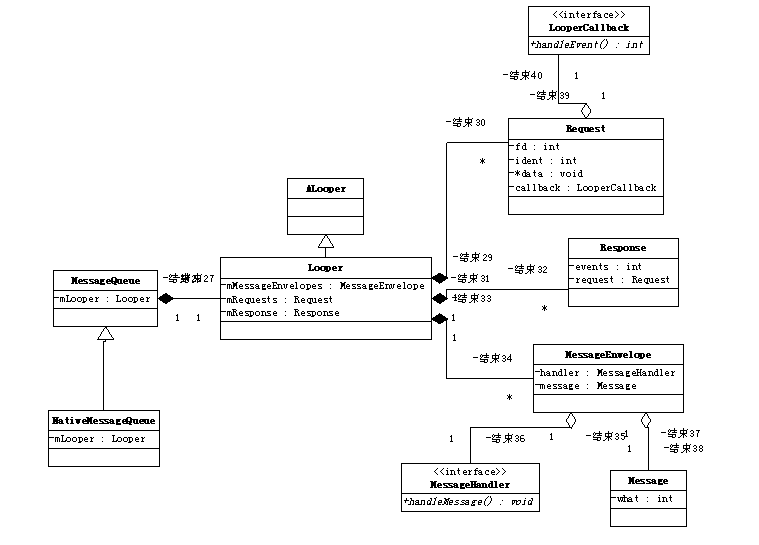

HandlerThread is a thread; the rough relationships are shown in the figure above.

Let's continue with the code that follows:

Handler uiHandler = new Handler(uiHandlerThread.getLooper());

uiHandler.post(new Runnable() {

@Override

public void run() {

//Looper.myLooper().setMessageLogging(new LogPrinter(

// Log.VERBOSE, "WindowManagerPolicy", Log.LOG_ID_SYSTEM));

android.os.Process.setThreadPriority(

android.os.Process.THREAD_PRIORITY_FOREGROUND);

android.os.Process.setCanSelfBackground(false);

// For debug builds, log event loop stalls to dropbox for analysis.

if (StrictMode.conditionallyEnableDebugLogging()) {

Slog.i(TAG, "Enabled StrictMode logging for UI Looper");

}

}

});

public Handler(Looper looper) {

this(looper, null, false);

}

public Handler(Looper looper, Callback callback, boolean async) {

mLooper = looper;

mQueue = looper.mQueue;

mCallback = callback;

mAsynchronous = async;

}

As we can see, the Handler stores the Looper and its MessageQueue, with callback set to null. The Runnable is then handed to uiHandler through post:

public final boolean post(Runnable r)

{

return sendMessageDelayed(getPostMessage(r), 0);

}

private static Message getPostMessage(Runnable r) {

Message m = Message.obtain();

m.callback = r;

return m;

}

The Runnable is assigned to the Message's callback field.

public final boolean sendMessageDelayed(Message msg, long delayMillis)

{

if (delayMillis < 0) {

delayMillis = 0;

}

return sendMessageAtTime(msg, SystemClock.uptimeMillis() + delayMillis);

}

public boolean sendMessageAtTime(Message msg, long uptimeMillis) {

MessageQueue queue = mQueue;

if (queue == null) {

RuntimeException e = new RuntimeException(

this + " sendMessageAtTime() called with no mQueue");

Log.w("Looper", e.getMessage(), e);

return false;

}

return enqueueMessage(queue, msg, uptimeMillis);

}

private boolean enqueueMessage(MessageQueue queue, Message msg, long uptimeMillis) {

msg.target = this;

if (mAsynchronous) {

msg.setAsynchronous(true);

}

return queue.enqueueMessage(msg, uptimeMillis);

}

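This points msg.target at the Handler itself and then calls the MessageQueue's enqueueMessage, which inserts msg into the mMessages list. If the thread that owns the queue needs to be woken, it calls nativeWake on mPtr (that thread's NativeMessageQueue object), which writes "W" to the pipe.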
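MessageQueue.enqueueMessage itself is not quoted in this post, so the following is only a simplified model of the idea: keep a singly linked list ordered by due time and report whether the consumer needs a wake-up. MiniQueue, Msg, and order() are hypothetical names, and barriers, asynchronous messages, and quitting are deliberately left out.

```java
// Hypothetical, simplified model of a time-ordered message list with a wake decision.
final class MiniQueue {
    static final class Msg {
        final String name;
        long when;
        Msg next;

        Msg(String name) {
            this.name = name;
        }
    }

    private Msg mMessages;      // head of the list, sorted by ascending 'when'
    private boolean mBlocked;   // true while the consumer thread is waiting in poll

    synchronized void setBlocked(boolean blocked) {
        mBlocked = blocked;
    }

    // Inserts msg by due time. Returns true if the caller should wake the consumer.
    synchronized boolean enqueueMessage(Msg msg, long when) {
        msg.when = when;
        Msg p = mMessages;
        if (p == null || when < p.when) {
            // New head: the consumer's current sleep timeout may now be too long.
            msg.next = p;
            mMessages = msg;
            return mBlocked;
        }
        // Walk to the first entry that is due later; equal times keep FIFO order.
        Msg prev;
        for (;;) {
            prev = p;
            p = p.next;
            if (p == null || when < p.when) {
                break;
            }
        }
        msg.next = p;
        prev.next = msg;
        return false;           // head unchanged, so no wake is needed in this model
    }

    // Names of queued messages in delivery order, for inspection.
    synchronized String order() {
        StringBuilder sb = new StringBuilder();
        for (Msg m = mMessages; m != null; m = m.next) {
            if (sb.length() > 0) {
                sb.append(',');
            }
            sb.append(m.name);
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        MiniQueue q = new MiniQueue();
        q.setBlocked(true);
        System.out.println(q.enqueueMessage(new Msg("b"), 20));
        System.out.println(q.enqueueMessage(new Msg("c"), 30));
        System.out.println(q.order());
    }
}
```

In this model a wake is only requested when the new message becomes the head while the consumer is blocked, since that is the only case in which the consumer's current timeout could be wrong.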

Note that uiHandler is used by WindowManagerPolicy, KeyguardViewManager, and DisplayManagerService,

while wmHandler is used by the window manager.

That covers the Java side; next we dig deeper into the native side.

From the above, we know that the Java layer mainly calls into android_os_MessageQueue_nativeInit, android_os_MessageQueue_nativePollOnce, and android_os_MessageQueue_nativeWake.

(frameworks/base/core/jni/android_os_MessageQueue.cpp)

The MessageQueue created in the Looper calls nativeInit:

MessageQueue(boolean quitAllowed) {

mQuitAllowed = quitAllowed;

mPtr = nativeInit();

}

static jint android_os_MessageQueue_nativeInit(JNIEnv* env, jclass clazz) {

// create the NativeMessageQueue

NativeMessageQueue* nativeMessageQueue = new NativeMessageQueue();

if (!nativeMessageQueue) {

jniThrowRuntimeException(env, "Unable to allocate native queue");

return 0;

}

nativeMessageQueue->incStrong(env);

// cast the pointer to an integer

return reinterpret_cast<jint>(nativeMessageQueue);

}

NativeMessageQueue::NativeMessageQueue() : mInCallback(false), mExceptionObj(NULL) {

mLooper = Looper::getForThread(); // get the looper via TLS, similar to Java's ThreadLocal

if (mLooper == NULL) {

mLooper = new Looper(false); // create a new looper

Looper::setForThread(mLooper); // store the looper in the thread

}

}

As we can see, the native MessageQueue creates a Looper. This differs from the Java layer, where the Looper creates the MessageQueue.

Looper::Looper(bool allowNonCallbacks) :

mAllowNonCallbacks(allowNonCallbacks), mSendingMessage(false),

mResponseIndex(0), mNextMessageUptime(LLONG_MAX) {

int wakeFds[2];

int result = pipe(wakeFds); // create the pipe

LOG_ALWAYS_FATAL_IF(result != 0, "Could not create wake pipe. errno=%d", errno);

mWakeReadPipeFd = wakeFds[0];

mWakeWritePipeFd = wakeFds[1];

// make the read end of the pipe non-blocking

result = fcntl(mWakeReadPipeFd, F_SETFL, O_NONBLOCK);

LOG_ALWAYS_FATAL_IF(result != 0, "Could not make wake read pipe non-blocking. errno=%d",

errno);

// make the write end of the pipe non-blocking

result = fcntl(mWakeWritePipeFd, F_SETFL, O_NONBLOCK);

LOG_ALWAYS_FATAL_IF(result != 0, "Could not make wake write pipe non-blocking. errno=%d",

errno);

// Allocate the epoll instance and register the wake pipe.

// Create an epoll handle; the size argument tells the kernel roughly how many fds will be monitored

mEpollFd = epoll_create(EPOLL_SIZE_HINT);

LOG_ALWAYS_FATAL_IF(mEpollFd < 0, "Could not create epoll instance. errno=%d", errno);

struct epoll_event eventItem;

memset(& eventItem, 0, sizeof(epoll_event)); // zero out unused members of data field union

eventItem.events = EPOLLIN;

eventItem.data.fd = mWakeReadPipeFd;

// register the event with epoll

result = epoll_ctl(mEpollFd, EPOLL_CTL_ADD, mWakeReadPipeFd, & eventItem);

LOG_ALWAYS_FATAL_IF(result != 0, "Could not add wake read pipe to epoll instance. errno=%d",

errno);

}

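As the code shows, it mainly creates a pipe and registers an EPOLLIN event with epoll on the pipe's read end.

Next comes android_os_MessageQueue_nativePollOnce. On the Java side, the loop in Looper ends up calling this function: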

static void android_os_MessageQueue_nativePollOnce(JNIEnv* env, jclass clazz,

jint ptr, jint timeoutMillis) {

NativeMessageQueue* nativeMessageQueue = reinterpret_cast<NativeMessageQueue*>(ptr);

nativeMessageQueue->pollOnce(env, timeoutMillis);

}

Using the mPtr passed down from the Java MessageQueue object (the NativeMessageQueue created in nativeInit), it calls that object's pollOnce function:

void NativeMessageQueue::pollOnce(JNIEnv* env, int timeoutMillis) {

mInCallback = true;

mLooper->pollOnce(timeoutMillis);

mInCallback = false;

if (mExceptionObj) {

env->Throw(mExceptionObj);

env->DeleteLocalRef(mExceptionObj);

mExceptionObj = NULL;

}

}

That pollOnce in turn calls pollOnce on the Looper object that the NativeMessageQueue created:

int Looper::pollOnce(int timeoutMillis, int* outFd, int* outEvents, void** outData) {

int result = 0;

for (;;) {

while (mResponseIndex < mResponses.size()) {

const Response& response = mResponses.itemAt(mResponseIndex++);

int ident = response.request.ident;

if (ident >= 0) {

int fd = response.request.fd;

int events = response.events;

void* data = response.request.data;

#if DEBUG_POLL_AND_WAKE

ALOGD("%p ~ pollOnce - returning signalled identifier %d: "

"fd=%d, events=0x%x, data=%p",

this, ident, fd, events, data);

#endif

if (outFd != NULL) *outFd = fd;

if (outEvents != NULL) *outEvents = events;

if (outData != NULL) *outData = data;

return ident;

}

}

if (result != 0) {

#if DEBUG_POLL_AND_WAKE

ALOGD("%p ~ pollOnce - returning result %d", this, result);

#endif

if (outFd != NULL) *outFd = 0;

if (outEvents != NULL) *outEvents = 0;

if (outData != NULL) *outData = NULL;

return result;

}

result = pollInner(timeoutMillis);

}

}

Because mResponseIndex and mResponses.size() are both 0 at first, execution goes to pollInner:

int Looper::pollInner(int timeoutMillis) {

#if DEBUG_POLL_AND_WAKE

ALOGD("%p ~ pollOnce - waiting: timeoutMillis=%d", this, timeoutMillis);

#endif

// Adjust the timeout based on when the next message is due.

if (timeoutMillis != 0 && mNextMessageUptime != LLONG_MAX) {

nsecs_t now = systemTime(SYSTEM_TIME_MONOTONIC);

int messageTimeoutMillis = toMillisecondTimeoutDelay(now, mNextMessageUptime);

if (messageTimeoutMillis >= 0

&& (timeoutMillis < 0 || messageTimeoutMillis < timeoutMillis)) {

timeoutMillis = messageTimeoutMillis;

}

#if DEBUG_POLL_AND_WAKE

ALOGD("%p ~ pollOnce - next message in %lldns, adjusted timeout: timeoutMillis=%d",

this, mNextMessageUptime - now, timeoutMillis);

#endif

}

// Poll.

int result = ALOOPER_POLL_WAKE;

mResponses.clear();

mResponseIndex = 0;

struct epoll_event eventItems[EPOLL_MAX_EVENTS];

// call epoll_wait to wait for a read event on the pipe

int eventCount = epoll_wait(mEpollFd, eventItems, EPOLL_MAX_EVENTS, timeoutMillis);

// Acquire lock.

mLock.lock();

// Check for poll error.

if (eventCount < 0) {

if (errno == EINTR) {

goto Done;

}

ALOGW("Poll failed with an unexpected error, errno=%d", errno);

result = ALOOPER_POLL_ERROR;

goto Done;

}

// Check for poll timeout.

if (eventCount == 0) {

#if DEBUG_POLL_AND_WAKE

ALOGD("%p ~ pollOnce - timeout", this);

#endif

result = ALOOPER_POLL_TIMEOUT;

goto Done;

}

// Handle all events.

#if DEBUG_POLL_AND_WAKE

ALOGD("%p ~ pollOnce - handling events from %d fds", this, eventCount);

#endif

for (int i = 0; i < eventCount; i++) {

int fd = eventItems[i].data.fd;

uint32_t epollEvents = eventItems[i].events;

if (fd == mWakeReadPipeFd) { // if it is the read end of the wake pipe, call awoken()

if (epollEvents & EPOLLIN) {

awoken();

} else {

ALOGW("Ignoring unexpected epoll events 0x%x on wake read pipe.", epollEvents);

}

} else { // otherwise it is some other registered fd: call pushResponse to add the request and events to the response list

ssize_t requestIndex = mRequests.indexOfKey(fd);

if (requestIndex >= 0) {

int events = 0;

if (epollEvents & EPOLLIN) events |= ALOOPER_EVENT_INPUT;

if (epollEvents & EPOLLOUT) events |= ALOOPER_EVENT_OUTPUT;

if (epollEvents & EPOLLERR) events |= ALOOPER_EVENT_ERROR;

if (epollEvents & EPOLLHUP) events |= ALOOPER_EVENT_HANGUP;

pushResponse(events, mRequests.valueAt(requestIndex));

} else {

ALOGW("Ignoring unexpected epoll events 0x%x on fd %d that is "

"no longer registered.", epollEvents, fd);

}

}

}

Done: ;

// Invoke pending message callbacks.

mNextMessageUptime = LLONG_MAX;

while (mMessageEnvelopes.size() != 0) { // empty at first, so this path is not taken initially

nsecs_t now = systemTime(SYSTEM_TIME_MONOTONIC);

const MessageEnvelope& messageEnvelope = mMessageEnvelopes.itemAt(0);

if (messageEnvelope.uptime <= now) {

// Remove the envelope from the list.

// We keep a strong reference to the handler until the call to handleMessage

// finishes. Then we drop it so that the handler can be deleted *before*

// we reacquire our lock.

{ // obtain handler

sp<MessageHandler> handler = messageEnvelope.handler;

Message message = messageEnvelope.message;

mMessageEnvelopes.removeAt(0);

mSendingMessage = true;

mLock.unlock();

#if DEBUG_POLL_AND_WAKE || DEBUG_CALLBACKS

ALOGD("%p ~ pollOnce - sending message: handler=%p, what=%d",

this, handler.get(), message.what);

#endif

handler->handleMessage(message);

} // release handler

mLock.lock();

mSendingMessage = false;

result = ALOOPER_POLL_CALLBACK;

} else {

// The last message left at the head of the queue determines the next wakeup time.

mNextMessageUptime = messageEnvelope.uptime;

break;

}

}

// Release lock.

mLock.unlock();

// Invoke all response callbacks.

for (size_t i = 0; i < mResponses.size(); i++) {

Response& response = mResponses.editItemAt(i);

if (response.request.ident == ALOOPER_POLL_CALLBACK) {

int fd = response.request.fd;

int events = response.events;

void* data = response.request.data;

#if DEBUG_POLL_AND_WAKE || DEBUG_CALLBACKS

ALOGD("%p ~ pollOnce - invoking fd event callback %p: fd=%d, events=0x%x, data=%p",

this, response.request.callback.get(), fd, events, data);

#endif

int callbackResult = response.request.callback->handleEvent(fd, events, data);

if (callbackResult == 0) {

removeFd(fd);

}

// Clear the callback reference in the response structure promptly because we

// will not clear the response vector itself until the next poll.

response.request.callback.clear();

result = ALOOPER_POLL_CALLBACK;

}

}

return result;

}

For this step, look only at epoll_wait. It waits for EPOLLIN, and the EPOLLIN wake-up arrives when sending a message in the Java layer calls down to android_os_MessageQueue_nativeWake in the native layer:

static void android_os_MessageQueue_nativeWake(JNIEnv* env, jclass clazz, jint ptr) {

NativeMessageQueue* nativeMessageQueue = reinterpret_cast<NativeMessageQueue*>(ptr);

return nativeMessageQueue->wake();

}

void NativeMessageQueue::wake() {

mLooper->wake();

}

void Looper::wake() {

#if DEBUG_POLL_AND_WAKE

ALOGD("%p ~ wake", this);

#endif

ssize_t nWrite;

do {

nWrite = write(mWakeWritePipeFd, "W", 1);

} while (nWrite == -1 && errno == EINTR);

if (nWrite != 1) {

if (errno != EAGAIN) {

ALOGW("Could not write wake signal, errno=%d", errno);

}

}

}

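The thread is thus woken, so next() can continue; once next() returns, msg.target.dispatchMessage(msg); dispatches the message.

As for the native Looper mechanism itself, we will trace through that code later.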
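The pipe-plus-epoll wake-up can be imitated in plain Java with java.nio, which may make the mechanism easier to experiment with. This is an analogy under stated assumptions, not how Android implements it: WakePipeSketch is a hypothetical class, Pipe plays the role of the wake pipe, and Selector plays the role of the epoll instance.

```java
import java.io.IOException;
import java.nio.ByteBuffer;
import java.nio.channels.Pipe;
import java.nio.channels.SelectionKey;
import java.nio.channels.Selector;

// Hypothetical Java analogue of the native Looper's wake pipe.
final class WakePipeSketch implements AutoCloseable {
    private final Pipe pipe;
    private final Selector selector;

    WakePipeSketch() throws IOException {
        pipe = Pipe.open();
        pipe.source().configureBlocking(false);   // like fcntl(..., O_NONBLOCK) on the read end
        pipe.sink().configureBlocking(false);     // and on the write end
        selector = Selector.open();               // like epoll_create
        pipe.source().register(selector, SelectionKey.OP_READ); // like EPOLL_CTL_ADD with EPOLLIN
    }

    // Like Looper::wake(): write a single byte to the pipe.
    void wake() throws IOException {
        pipe.sink().write(ByteBuffer.wrap(new byte[] {(byte) 'W'}));
    }

    // Like pollInner + awoken(): timeout < 0 blocks, 0 returns at once, > 0 waits that long.
    // Returns true if the wait ended because of wake(), false on timeout.
    boolean pollOnce(long timeoutMillis) throws IOException {
        int ready;
        if (timeoutMillis == 0) {
            ready = selector.selectNow();
        } else if (timeoutMillis < 0) {
            ready = selector.select();
        } else {
            ready = selector.select(timeoutMillis);
        }
        selector.selectedKeys().clear();
        if (ready == 0) {
            return false;
        }
        // Drain everything so several wake() calls collapse into one wake-up.
        ByteBuffer buf = ByteBuffer.allocate(16);
        while (pipe.source().read(buf) > 0) {
            buf.clear();
        }
        return true;
    }

    @Override
    public void close() throws IOException {
        selector.close();
        pipe.source().close();
        pipe.sink().close();
    }

    public static void main(String[] args) throws Exception {
        try (WakePipeSketch looper = new WakePipeSketch()) {
            Thread sender = new Thread(() -> {
                try {
                    Thread.sleep(100);
                    looper.wake();                // plays the role of nativeWake
                } catch (IOException | InterruptedException e) {
                    throw new RuntimeException(e);
                }
            });
            sender.start();
            boolean woken = looper.pollOnce(-1);  // blocks like epoll_wait with timeout -1
            System.out.println("woken=" + woken);
            sender.join();
        }
    }
}
```

The byte written carries no information; only the readability of the pipe matters, which is why the reader simply drains it.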