Moses运行过程记录---Moses语言模型和翻译模型构建(三)

This time I want to translate English to Chinese, so I choose Chinese as a language model.Go to the directory:/home/tianliang/mosesdecoder/srilm/bin/i686-gcc4, we will use the “ngram-count” to build a 5-gram model. The process is like below:

tianliang@ubuntu:~/mosesdecoder/srilm/bin/i686-gcc4/test$ mkdir test

tianliang@ubuntu:~/mosesdecoder/srilm/bin/i686-gcc4/test$ cd test

tianliang@ubuntu:~/mosesdecoder/srilm/bin/i686-gcc4/test$ ./ngram-count -text clean.chn -lm chinese.gz -order 5 -unk -wbdiscount -interpolate

Here it means: we will build Chinese file “clean.chn” into a 5-gram language model chinese.gz using the smoothing methods called Witten-Bell discounting and interpolated estimates. The chinese.gz looks like:

It shows the number of the n-gram models. For example, there are 594 3-gram models in our corpus.

Moses' toolkit does a great job of wrapping up calls to mkcls and GIZA++ inside a training script, and outputting the phrase and reordering tables needed for decoding. The script that does this is called train-factored-phrase-model.perl. In my running, the train-factored-phrase-model.perl is located at

/home/tianliang/mosesdecoder/moses/scripts/target/scripts-20100126-0922/training. The training process takes place in nine steps, all of them executed by the scripts. The nine steps are:

Ø Prepare data

Ø Run GIZA++

Ø Align words

Ø Get lexical translation table

Ø Extract phrase

Ø Score phrase

Ø Build lexicalized reordering model

Ø Build generation models

Ø Create configure file

If you want to check the details of the nine steps, you can refer to the User Manual on Page 66-78. Now we will build the translation model using the following command:

$train-factored-phrase-model.perl --scripts-root-dir --corpus corpus /directory --f target language --e source language

There should be two files in the corpus directory and the files should be sentence-aligned halves of the parallel corpus. In my corpus, the English corpus called clean.eng and the Chinese corpus called clean.chn. “clean.chn” should contain only Chinese and the other should contain English only. The Command I use is like below:

First we should export the path to the scripts like this:

tianliang@ubuntu:~/mosesdecoder/moses/scripts/target/scripts-20100126-0922/training$ export

SCRIPTS_ROOTDIR=/home/tianliang/mosesdecoder/moses/scripts/target/scripts-20100126-0922

Then I will use the “SCRIPTS_ROOTDIR” in our next command for short without inputting full path.

tianliang@ubuntu:~/mosesdecoder/moses/scripts/target/scripts-20100126-0922/training$ ./train-factored-phrase-model.perl --scripts-root-dir $SCRIPTS_ROOTDIR --corpus /home/tianliang/mosesdecoder/corpus/clean --f eng

--e chn --alignment grow-diag-final-and-reordering msd-bidirectional-fe --lm

0:5:/home/tianliang/mosesdecoder/corpus/chinese.gz:0

Here I just use some parameters to train the model. “--alignment grow-diag-final-and-reordering

msd-bidirectional-fe” means that I want to build alignment table and reordering table. Actually speaking, this command equals: “--alignment grow-diag-final --reordering msd-bidirectional-fe”. The other parameters you can add if you need. The last step will get something like:

![]()

For I didn't use the --generation parameter, there is warning telling you that you didn't request the generation model like below:

Now would be a good time to look at what we've done. Enter the training folder; you can see that four new folders have been made.

![]()

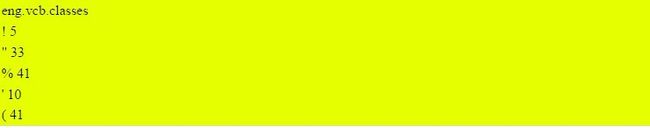

In folder “corpus”: Two vocabulary files are generated and the parallel corpus is converted into a numberized format. The vocabulary files contain words, integer word identifiers and word count information:

The sentence-aligned corpus now looks like this:

We can see that the alignment between Chinese and English from these two files. A sentence pair now consists of three lines: First the frequency of this sentence. In our training process this is always 1. This number can be used for weighting different parts of the training corpus differently. The two lines below contain word ids of Chinese and the English sentence.

GIZA++ also requires words to be placed into word classes. This is done automatically by calling the mkcls program. Word classes are only used for the IBM reordering model in GIZA++. A peek into the English word class file:

In folder giza.chn-eng :

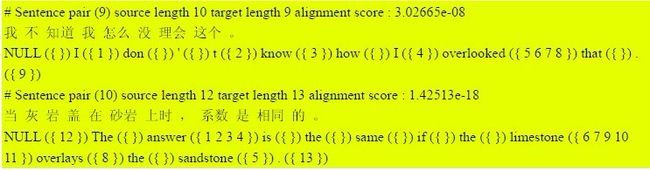

This is generated by GIZA++, what we get now is the alignment of the Chinese and English. The most important file we get is called:chn-eng.A3.final .It looks like:

In this file, after some statistical information and the Chinese sentence, the English sentence is listed word by word, with references to aligned Chinese words: The first word I ({ 1 }) is aligned to the first Chinese word 我. The second word don ({ }) ' ({ }) t ({ 2 }) is aligned to Chinese word 不 And so on.

In folder giza.eng-chn:

It's the inverse of the last one ,Here I won't give the content of the folder. I am sure you have guessed it.

Note:

What I want to mention is that Moses word alignments are taken from the intersection of bidirectional runs of GIZA++ plus some additional alignment points from the union of the two runs. So we get the two folders in two inverse pairs like shown above.

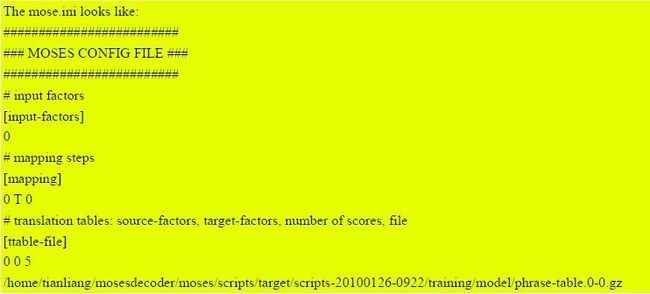

In folder model:

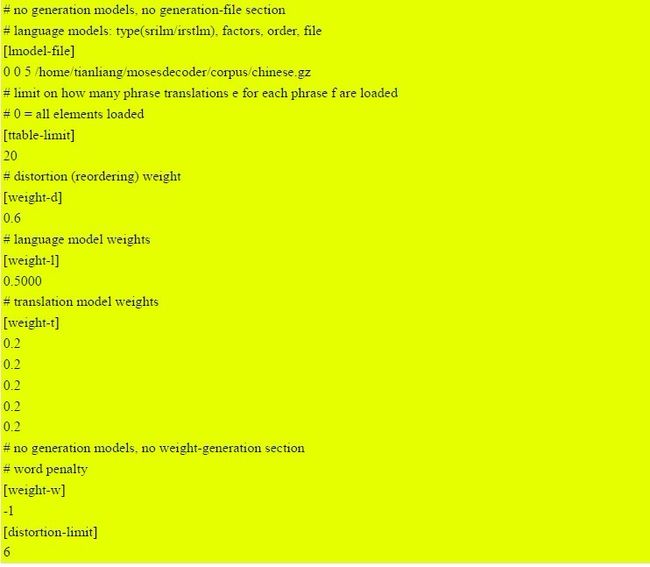

This is the actual decoder we will use. In this directory, we get the phrase table and mose.ini. We can copy the executable program moses (in ~/moses/moses-cmd/src) to this folder. With these tools we can get the final result!

最后一部分将给出moses的运行结果和评测过程,请看第四部分“Moses运行过程记录---结果和评测(四)”。