Hive Study Notes 6: Hive Log Analysis (User Profiling)

Tags (space-separated): Hive

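- Hive Study Notes 6: Hive Log Analysis (User Profiling)

- Approach to the case study

- Part 1: Create the temporary intermediate tables

- Part 2: Store the intermediate results in the temporary table

- Part 3: Create the result table and store the final result set

- Summary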

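Approach to the case study

Extracting user-profile information directly from the raw data table is hard to implement and, because of the data volume, performs poorly. For these two reasons, the approach here is to first extract each user's session information from the raw table into temporary intermediate tables, and then obtain the required user information by joining those intermediate tables. This can considerably improve query performance and makes the extraction easier.

Structure of the raw table: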

drop table if exists db_track.track_log ;

create table db_track.track_log(

id string,

url string,

referer string,

keyword string,

type string,

guid string,

pageId string,

moduleId string,

linkId string,

attachedInfo string,

sessionId string,

trackerU string,

trackerType string,

ip string,

trackerSrc string,

cookie string,

orderCode string,

trackTime string,

endUserId string,

firstLink string,

sessionViewNo string,

productId string,

curMerchantId string,

provinceId string,

cityId string,

fee string,

edmActivity string,

edmEmail string,

edmJobId string,

ieVersion string,

platform string,

internalKeyword string,

resultSum string,

currentPage string,

linkPosition string,

buttonPosition string

)

partitioned by(date string, hour string)

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t' ;

Load the data:

load data local inpath '/opt/datas/2015082818' overwrite into table db_track.track_log partition(date='20150828',hour='18');

load data local inpath '/opt/datas/2015082819' overwrite into table db_track.track_log partition(date='20150828',hour='19');

Query the test data:

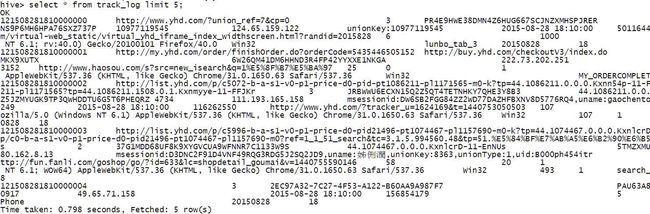

select * from track_log limit 5;

Part 1: Create the temporary intermediate tables
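Before building the intermediate tables, it can be worth confirming that both hourly partitions loaded above are actually visible to Hive. A minimal check, assuming the two load statements succeeded and that db_track is the working database (the unqualified query above suggests it is); the row_cnt alias is only illustrative:

```sql
use db_track;

-- should list one entry per loaded partition,
-- e.g. date=20150828/hour=18 and date=20150828/hour=19
show partitions track_log;

-- row count per hour; on Hive versions where date is a reserved keyword,
-- the partition column may need to be written in backticks
select hour, count(*) as row_cnt
from track_log
where date='20150828'
group by hour;
```

If one of the hours is missing from either result, the corresponding load statement is the first thing to re-check.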

1. Create the session_info table

This table stores the complete session information for each user.

drop table if exists db_track.session_info;

create table db_track.session_info(

session_id string,

guid string,

trackerU string,

langding_url string,

landing_url_ref string,

user_id string,

pv string,

stay_time string,

min_trackTime string,

ip string,

provinceId string

)

partitioned by(date string)

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t';

2. Create tmp_session_info

This table stores the fields session_id, guid, user_id, pv, stay_time, min_trackTime, ip and provinceId.
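The stay_time field is derived from the difference between the last and the first trackTime of a session. A small sketch of that arithmetic, assuming trackTime is a string in the default yyyy-MM-dd HH:mm:ss layout (the sample values are made up, since the raw data is not shown here):

```sql
-- unix_timestamp(string) parses 'yyyy-MM-dd HH:mm:ss' and returns seconds since the epoch,
-- so the difference between two timestamps is a duration in seconds
select unix_timestamp('2015-08-28 18:10:05')
     - unix_timestamp('2015-08-28 18:00:00') as stay_time;
-- 605
```

If the values use a different layout, the two-argument form unix_timestamp(string, pattern) can be used instead.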

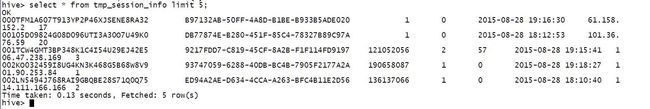

drop table if exists tmp_session_info;

create table tmp_session_info as
select
  a.sessionid session_id,
  max(a.guid) guid,
  -- trackerU,
  -- langding_url,
  -- landing_url_ref,
  max(a.enduserid) user_id,
  count(a.url) pv,
  -- unix_timestamp() returns seconds, so stay_time is in seconds
  (unix_timestamp(max(a.trackTime))-unix_timestamp(min(a.trackTime))) stay_time,
  min(a.trackTime) min_trackTime,
  max(a.ip) ip,
  max(a.provinceid) provinceId
from db_track.track_log a
where date='20150828'
group by a.sessionid;

select * from tmp_session_info limit 5;

3. Create tmp_track_url

This table stores the sessionid, tracktime, trackeru, url and referer fields.

drop table if exists tmp_track_url;

create table tmp_track_url as
select sessionid, tracktime, trackeru, url, referer
from db_track.track_log
where date='20150828';

select * from tmp_track_url limit 5;

Part 2: Store the intermediate results in the temporary table

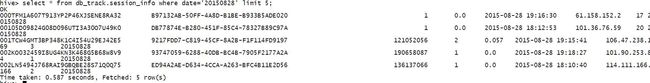

insert overwrite table db_track.session_info partition(date='20150828')
select
  a.session_id session_id,
  max(a.guid) guid,
  max(b.trackeru) trackerU,
  max(b.url) langding_url,
  max(b.referer) landing_url_ref,
  max(a.user_id) user_id,
  max(a.pv) pv,
  -- stay_time is already in seconds (unix_timestamp() returns seconds), so it is not divided by 1000
  max(a.stay_time) stay_time,
  max(a.min_tracktime) min_trackTime,
  max(a.ip) ip,
  max(a.provinceid) provinceId
from tmp_session_info a
join tmp_track_url b
  on a.session_id=b.sessionid and a.min_tracktime=b.tracktime
group by a.session_id;

select * from db_track.session_info where date='20150828' limit 5;

Part 3: Create the result table and store the final result set
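Before aggregating, it may be worth checking that the join in the previous step did not drop any sessions. It is an inner join, so a session whose earliest tracktime finds no matching row in tmp_track_url (for example because trackTime is NULL) would be missing from session_info. A simple sketch of that check, using only the tables created so far:

```sql
-- number of sessions before the join (one row per session)
select count(*) from tmp_session_info;

-- number of sessions that made it into the partition
select count(*) from db_track.session_info where date='20150828';
```

The two counts should match; if they do not, the missing sessions are the ones to inspect before trusting the daily figures.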

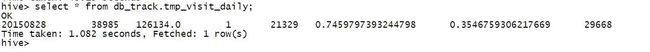

drop table if exists db_track.tmp_visit_daily;

create table db_track.tmp_visit_daily as
select
  date,
  count(distinct guid) uv,
  sum(pv) pv,
  -- logged-in users: distinct non-empty user_id values
  count(distinct case when user_id<>'' then user_id else null end) login_users,
  -- anonymous visitors: distinct guid values of sessions with an empty user_id
  count(distinct case when user_id='' then guid else null end) visit_users,
  avg(stay_time) avg_stay_time,
  count(case when pv>2 then session_id else null end)/count(session_id) second_rate,
  count(distinct ip) ip_number
from db_track.session_info
where date='20150828'
group by date;

select * from db_track.tmp_visit_daily;

Summary

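The main takeaways from this case study are:

- Building the final data set from intermediate result sets can considerably improve query performance and makes the data easier to extract.

- Reviewed how to create partitioned tables.

- Reviewed the use of UDFs.

- Reviewed the use of case when in HiveQL.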
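The case when pattern from the last point can be tried on a few inline rows. This is only a sketch with made-up data (the guid and user_id values are hypothetical), assuming that an empty user_id marks a visitor who is not logged in:

```sql
select
  -- distinct non-empty user ids: logged-in users
  count(distinct case when user_id <> '' then user_id else null end) as login_users,
  -- distinct guids with an empty user id: anonymous visitors
  count(distinct case when user_id = '' then guid else null end) as visit_users
from (
  select 'g1' as guid, 'u1' as user_id
  union all
  select 'g2' as guid, ''   as user_id
  union all
  select 'g3' as guid, 'u1' as user_id
  union all
  select 'g4' as guid, ''   as user_id
) t;
-- login_users = 1, visit_users = 2
```

Because count ignores NULL, the else null branch is what keeps non-matching rows out of each counter.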