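IMF Spark Source Code Distribution Customization Class, Preparatory Course: Debugging the Spark Framework Source Code (2): Debugging from the Master and Worker main Entry Points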

1. Add the following setting to conf/spark-env.sh on the node where the Master runs:

export SPARK_MASTER_OPTS="$SPARK_MASTER_OPTS -Xdebug -server -Xrunjdwp:transport=dt_socket,address=5005,server=y,suspend=y"
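The option string in step 1 packs several JDWP settings into one line. As a minimal sketch (the helper name `jdwp_opts` and its parameters are illustrative and not part of Spark), the shell function below assembles the same string from its parts. This makes it easier to change the port, or to switch `suspend` to `n` when the JVM should not block while waiting for a debugger:

```shell
#!/bin/sh
# Hypothetical helper (not part of Spark): builds the JDWP options used in step 1.
#   $1 = port the JVM listens on for the debugger (default 5005)
#   $2 = y to block JVM startup until a debugger attaches, n to start immediately (default y)
jdwp_opts() {
  port="${1:-5005}"
  suspend="${2:-y}"
  printf '%s' "-Xdebug -server -Xrunjdwp:transport=dt_socket,address=${port},server=y,suspend=${suspend}"
}

# Example: print the line that would go into conf/spark-env.sh.
# Single quotes keep $SPARK_MASTER_OPTS literal, so it is expanded later by spark-env.sh itself.
printf 'export SPARK_MASTER_OPTS="$SPARK_MASTER_OPTS %s"\n' "$(jdwp_opts 5005 y)"
```

With `suspend=y` the Master does not run its `main` method until a debugger attaches, which is what allows breakpoints at the entry point to be hit. On current JDKs the same agent is usually written as `-agentlib:jdwp=transport=dt_socket,server=y,suspend=y,address=5005`; the `-Xdebug -Xrunjdwp` form used in this walkthrough is the older spelling.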

2. Stop the Master

root@master:/usr/local/spark-1.6.1-bin-hadoop2.6/sbin# stop-master.sh

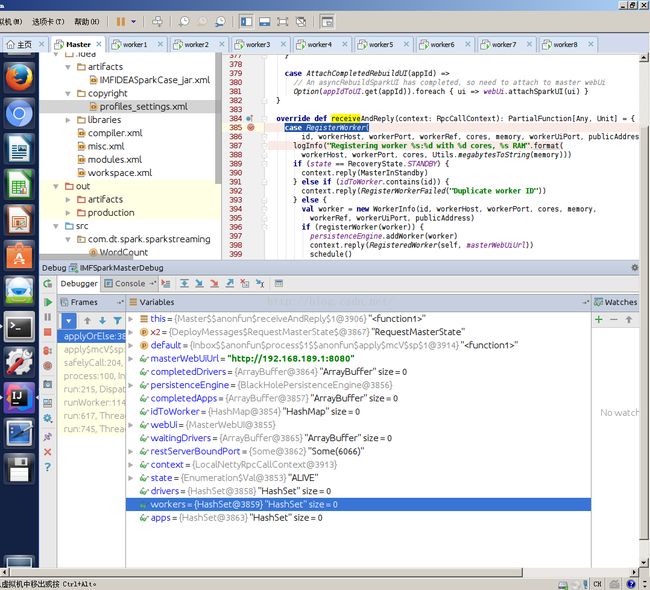

3. Start the Master

root@master:/usr/local/spark-1.6.1-bin-hadoop2.6/sbin# start-master.sh

starting org.apache.spark.deploy.master.Master, logging to /usr/local/spark-1.6.1-bin-hadoop2.6/logs/spark-root-org.apache.spark.deploy.master.Master-1-master.out

4. Check the log

root@master:/usr/local/spark-1.6.1-bin-hadoop2.6/sbin# cat /usr/local/spark-1.6.1-bin-hadoop2.6/logs/spark-root-org.apache.spark.deploy.master.Master-1-master.out

Spark Command: /usr/local/jdk1.8.0_60/bin/java -cp /usr/local/spark-1.6.1-bin-hadoop2.6/conf/:/usr/local/spark-1.6.1-bin-hadoop2.6/lib/spark-assembly-1.6.1-hadoop2.6.0.jar:/usr/local/spark-1.6.1-bin-hadoop2.6/lib/datanucleus-core-3.2.10.jar:/usr/local/spark-1.6.1-bin-hadoop2.6/lib/datanucleus-api-jdo-3.2.6.jar:/usr/local/spark-1.6.1-bin-hadoop2.6/lib/datanucleus-rdbms-3.2.9.jar:/usr/local/hadoop-2.6.0/etc/hadoop/ -Xdebug -server -Xrunjdwp:transport=dt_socket,address=5005,server=y,suspend=y -Xms1g -Xmx1g org.apache.spark.deploy.master.Master --ip 192.168.189.1 --port 7077 --webui-port 8080

========================================

Listening for transport dt_socket at address: 5005
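The last log line above is the signal that the JVM is waiting for a debugger. A small sketch of how that check could be scripted instead of reading the log by eye (the function name `jdwp_port_from_log` is an assumption for illustration, not a Spark tool):

```shell
#!/bin/sh
# Hypothetical helper: prints the JDWP port found in a Spark daemon log, and returns
# non-zero if no "Listening for transport dt_socket" line is present.
#   $1 = path to the daemon's .out log file
jdwp_port_from_log() {
  # sed -n ... p prints only lines where the substitution succeeded;
  # the capture group keeps just the port digits.
  port=$(sed -n 's/^Listening for transport dt_socket at address: \([0-9][0-9]*\).*$/\1/p' "$1" | head -n 1)
  [ -n "$port" ] && printf '%s\n' "$port"
}

# Example, using the log path printed by start-master.sh in step 3:
# jdwp_port_from_log /usr/local/spark-1.6.1-bin-hadoop2.6/logs/spark-root-org.apache.spark.deploy.master.Master-1-master.out
```

If the function prints nothing and returns a non-zero status, the debug options were probably not picked up, and conf/spark-env.sh is the first place to look.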

5. Debug: set a breakpoint in the Master, at the RegisterWorker case of receiveAndReply

    override def receiveAndReply(context: RpcCallContext): PartialFunction[Any, Unit] = {
      case RegisterWorker(
          id, workerHost, workerPort, workerRef, cores, memory, workerUiPort, publicAddress) => {

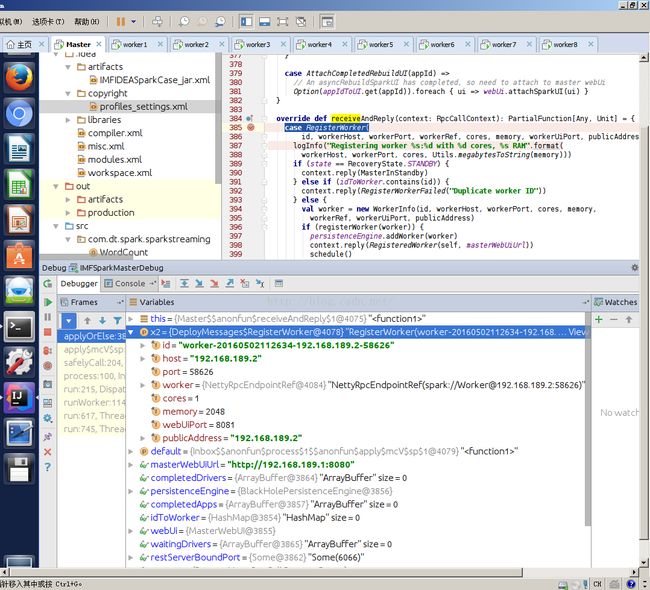

6. Restart worker1 and watch it register with the Master

root@worker1:/usr/local/spark-1.6.1-bin-hadoop2.6/sbin# stop-slave.sh

no org.apache.spark.deploy.worker.Worker to stop

root@worker1:/usr/local/spark-1.6.1-bin-hadoop2.6/sbin# start-slave.sh spark://192.168.189.1:7077

starting org.apache.spark.deploy.worker.Worker, logging to /usr/local/spark-1.6.1-bin-hadoop2.6/logs/spark-root-org.apache.spark.deploy.worker.Worker-1-worker1.out

7. Remotely debug worker1 from the master node

Add the following setting to conf/spark-env.sh on the worker1 node:

export SPARK_WORKER_OPTS="$SPARK_WORKER_OPTS -Xdebug -server -Xrunjdwp:transport=dt_socket,address=5005,server=y,suspend=y"

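8. In the IDE on the master node, create a Remote debug configuration; set the hostname to worker1 and the port to the address configured above, 5005.

9. Set a breakpoint in the main method of object Worker, then start the Remote debug configuration in the IDE.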
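The walkthrough uses an IDE for the remote session. For a quick connectivity check without the IDE, the JDK's command-line debugger `jdb` can attach to the same socket. The sketch below only prints the command; the helper name `jdb_attach_cmd` is an assumption for illustration, and the defaults mirror the hostname and port from step 8:

```shell
#!/bin/sh
# Hypothetical helper: prints a jdb command line that attaches to a JVM started with
# server=y on the given host and port (the same values entered in the IDE's Remote configuration).
#   $1 = hostname (default worker1), $2 = port (default 5005)
jdb_attach_cmd() {
  host="${1:-worker1}"
  port="${2:-5005}"
  printf 'jdb -connect com.sun.jdi.SocketAttach:hostname=%s,port=%s\n' "$host" "$port"
}

# Print the command for worker1; run the printed line on the master node to attach.
jdb_attach_cmd worker1 5005
```

Once attached, jdb accepts breakpoints in the form `stop in <class>.<method>`. The IDE remains the more convenient tool for stepping through Spark's Scala sources.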

10. Restart worker1 and observe

root@worker1:/usr/local/spark-1.6.1-bin-hadoop2.6/sbin# stop-slave.sh

no org.apache.spark.deploy.worker.Worker to stop

root@worker1:/usr/local/spark-1.6.1-bin-hadoop2.6/sbin# start-slave.sh spark://192.168.189.1:7077

starting org.apache.spark.deploy.worker.Worker, logging to /usr/local/spark-1.6.1-bin-hadoop2.6/logs/spark-root-org.apache.spark.deploy.worker.Worker-1-worker1.out

root@worker1:/usr/local/spark-1.6.1-bin-hadoop2.6/sbin# cat /usr/local/spark-1.6.1-bin-hadoop2.6/logs/spark-root-org.apache.spark.deploy.worker.Worker-1-worker1.out

Spark Command: /usr/local/jdk1.8.0_60/bin/java -cp /usr/local/spark-1.6.1-bin-hadoop2.6/conf/:/usr/local/spark-1.6.1-bin-hadoop2.6/lib/spark-assembly-1.6.1-hadoop2.6.0.jar:/usr/local/spark-1.6.1-bin-hadoop2.6/lib/datanucleus-core-3.2.10.jar:/usr/local/spark-1.6.1-bin-hadoop2.6/lib/datanucleus-api-jdo-3.2.6.jar:/usr/local/spark-1.6.1-bin-hadoop2.6/lib/datanucleus-rdbms-3.2.9.jar:/usr/local/hadoop-2.6.0/etc/hadoop/ -Xdebug -server -Xrunjdwp:transport=dt_socket,address=5005,server=y,suspend=y -Xms1g -Xmx1g org.apache.spark.deploy.worker.Worker --webui-port 8081 spark://192.168.189.1:7077

========================================

Listening for transport dt_socket at address: 5005

root@worker1:/usr/local/spark-1.6.1-bin-hadoop2.6/sbin#

11. Comment out the debug setting in the master's conf

#export SPARK_MASTER_OPTS="$SPARK_MASTER_OPTS -Xdebug -server -Xrunjdwp:transport=dt_socket,address=5005,server=y,suspend=y"
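Step 11 is a manual edit. If the debug line is toggled often, it could be scripted along these lines; this is a sketch under the assumption that the setting sits on a single `export` line, and `comment_out_export` is a made-up helper name:

```shell
#!/bin/sh
# Hypothetical helper: comments out every uncommented "export <VAR>=" line in a file.
#   $1 = path to spark-env.sh
#   $2 = variable name, e.g. SPARK_MASTER_OPTS
comment_out_export() {
  file="$1"
  var="$2"
  tmp="${file}.tmp.$$"
  # Prefix matching lines with '#'. Lines that already start with '#' do not match
  # the pattern and are left unchanged, so running this twice is harmless.
  # Writing back with cat (rather than mv) keeps the original file's permissions.
  sed "s/^\([[:space:]]*\)export ${var}=/\1#export ${var}=/" "$file" > "$tmp" \
    && cat "$tmp" > "$file" \
    && rm -f "$tmp"
}

# Example:
# comment_out_export /usr/local/spark-1.6.1-bin-hadoop2.6/conf/spark-env.sh SPARK_MASTER_OPTS
```

Because spark-env.sh is read when a daemon starts, the change only affects the Master after it is restarted with stop-master.sh and start-master.sh.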

12. Debug worker1 remotely from the master node again, with hostname worker1 and port 5005 (the address configured above). This time it works.

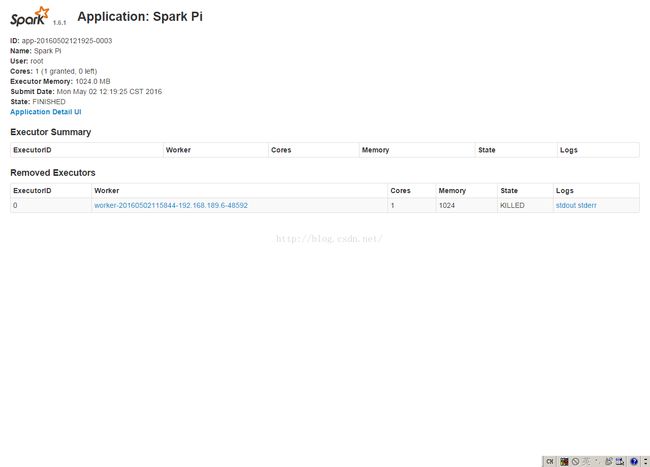

13. Check the web UI while debugging worker1

This completes the debugging of the Spark framework's Master and Worker.

The long march through the Spark source code has finally taken its first step!